Real-time insights extracted from video streams can drastically improve the efficiency of how industries operate. One high-impact application of this is inventory management. Whether you're a factory manager looking to improve inventory management or a store owner striving to prevent stockouts, real-time data can be a game-changer.

This tutorial will walk you through how to use computer vision to analyze video streams, extract insights from video frames at defined intervals, and present these as actionable visualizations and CSV outputs.


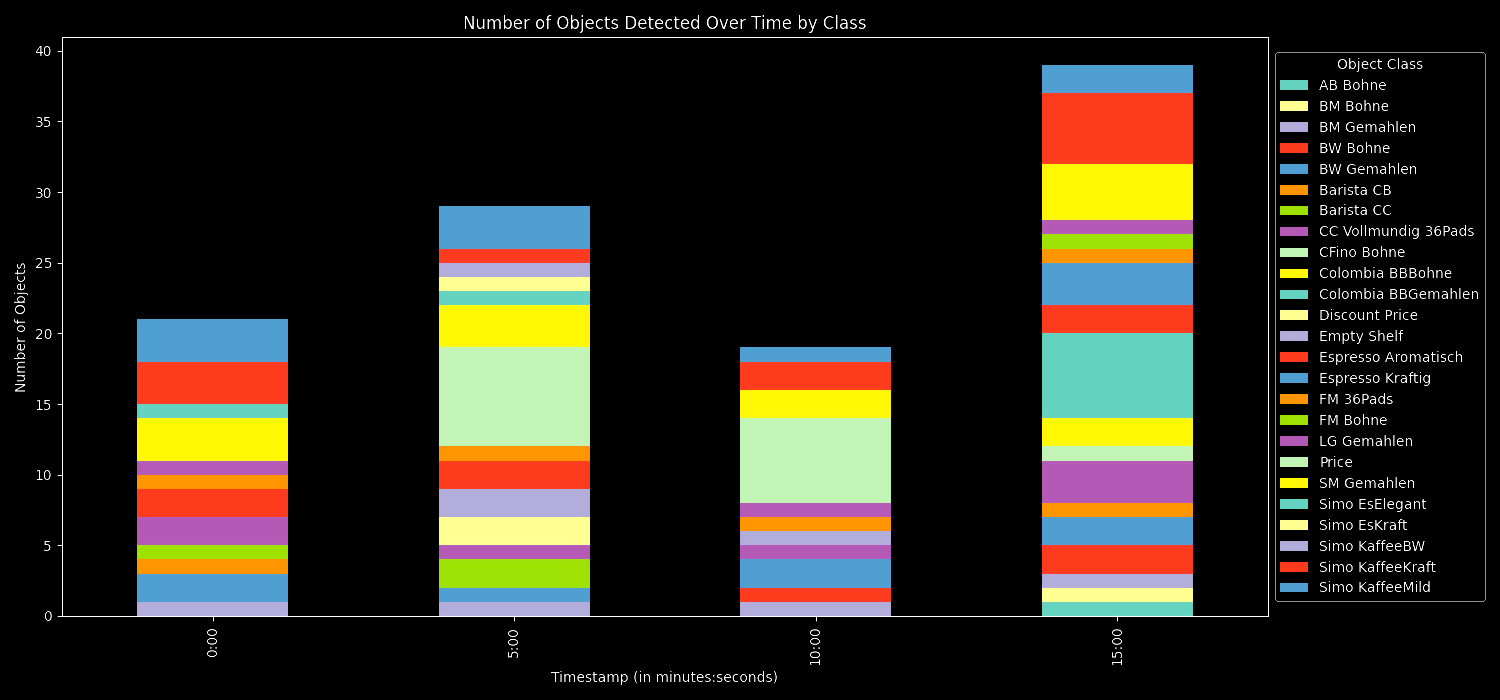

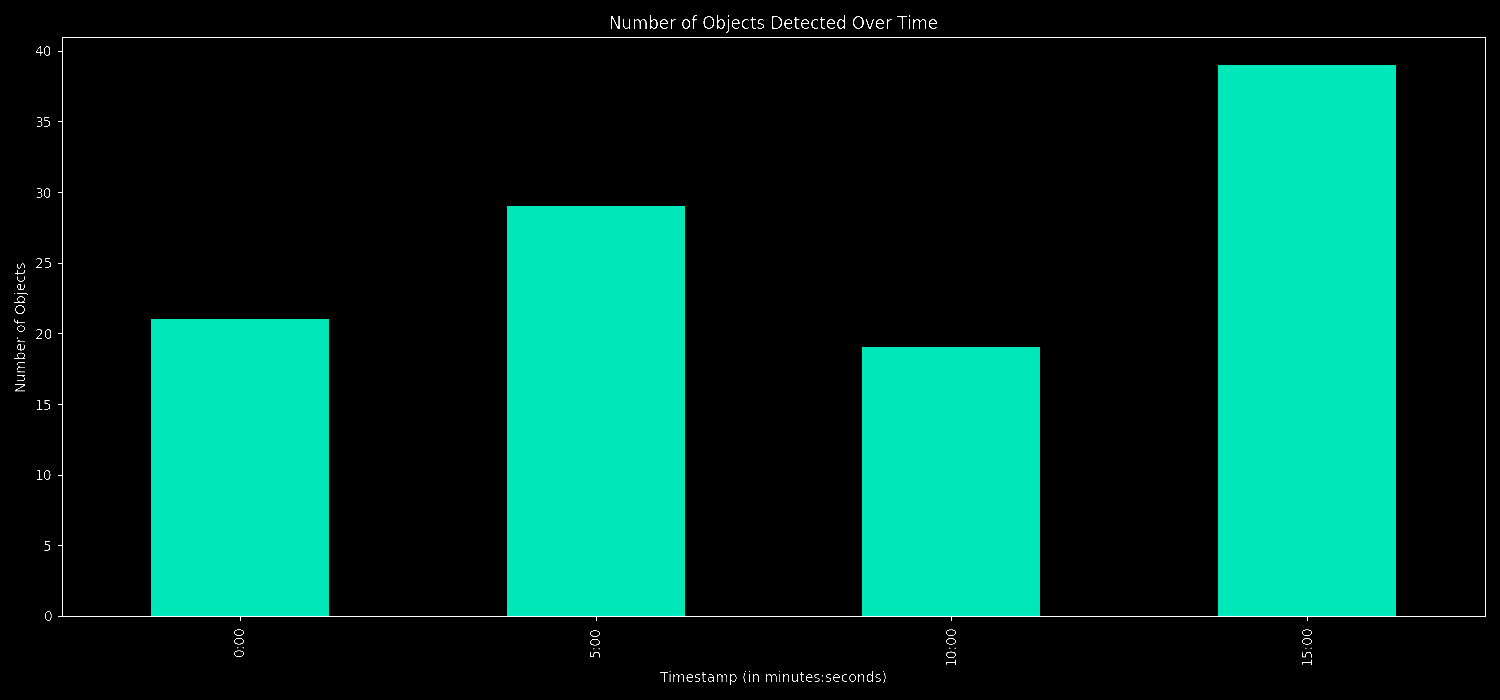

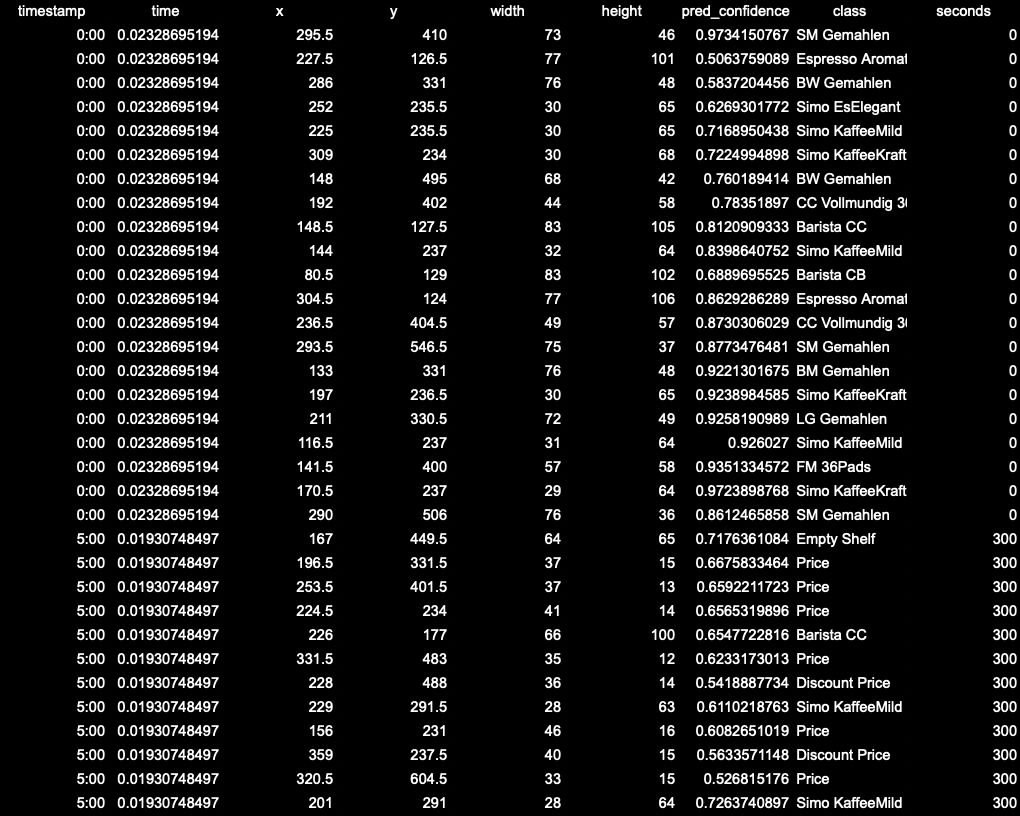

The application we will build detects objects in videos and summarizes the detections over time.

By the end, you'll have detailed visualizations and CSV outputs of the detected objects. What each visual represents is explained at the end of this post.

The CSV outputs are:

- object_counts_by_class_per_interval.csv

- object_counts_per_interval.csv

- predictions.csv

Two columns in predictions.csv are worth explaining:

- timestamp: The point in the video at which a frame was extracted.

- time: The time the API took to process the frame and return predictions. This is usually expressed in milliseconds and helps in understanding the performance of the API.

To build a dashboard with the inference server, we will:

- Install the dependencies

- Extract frames at intervals

- Get predictions using the inference server

- Visualize the result

We'll be working with an image dataset of packaged coffee items on shelves and using a model trained on these inventory items.

We will deploy the model using the Roboflow Inference Server. An inference server is used to deploy, manage, and serve machine learning models for inference in a production environment. Once a machine learning model has been trained on a dataset, it's not enough to just have the model; you need a way to use it, especially in real-time applications. This is where the inference server comes in.

It's also important to realize that these predictions are essentially samples of data. You need the right setup to capture data at consistent intervals, so that each sample can be timestamped and used for analysis, alerts, or as an input to other applications.

Step #1 Install the dependencies

Before we can start building our dashboard, make sure your environment is set up. Depending on whether you will be using a CPU or a GPU, we run a different Docker image. For better performance, it's a good idea to use a GPU for inference. For more information, you can check out our documentation.

1. Run the inference server

- For systems with an x86 CPU:

docker run --net=host roboflow/roboflow-inference-server-cpu:latest

- For systems with an NVIDIA GPU:

docker run --network=host --gpus=all roboflow/roboflow-inference-server-gpu:latest

With the required dependencies installed, we are now ready to start building our dashboard. Start by importing the required dependencies for the project:

    import os
    import pickle

    import cv2
    import matplotlib.pyplot as plt
    import pandas as pd
    import requests

Now, let's start working on the main code for our dashboard.

Step #2 Extract frames at intervals

We're going to grab frames from a given video at regular intervals and save them in a frames array. Let's define a function that extracts frames at specific time intervals:

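    def extract_frames(video_path, interval_minutes):
        # Note: main() passes the interval already multiplied by 60, so
        # despite its name this argument is treated as seconds here.
        cap = cv2.VideoCapture(video_path)
        frames = []
        timestamps = []
        fps = int(cap.get(cv2.CAP_PROP_FPS))
        frame_count = 0
        while cap.isOpened():
            ret, frame = cap.read()
            if not ret:
                # End of the video (or a read error): stop reading.
                break
            # Keep one frame every (fps * interval) frames.
            if frame_count % (fps * interval_minutes) == 0:
                frames.append(frame)
                # Position of the frame in the video, in seconds.
                timestamps.append(frame_count / fps)
            frame_count += 1
        cap.release()
        return frames, timestamps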
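To see which frames the sampling condition above selects, here is a minimal sketch of the same arithmetic without OpenCV. The function name `sampled_frame_indices` and the example values (a 35-second clip at 30 fps, sampled every 10 seconds) are assumptions made for illustration; they are not part of the original script.

```python
def sampled_frame_indices(total_frames, fps, interval_seconds):
    """Return (frame_index, timestamp_seconds) pairs.

    Uses the same modulo test as extract_frames: a frame is kept whenever
    its index is a multiple of fps * interval_seconds.
    """
    step = fps * interval_seconds
    if step <= 0:
        raise ValueError("fps and interval_seconds must be positive")
    return [(i, i / fps) for i in range(total_frames) if i % step == 0]


# Hypothetical clip: 35 seconds at 30 fps, sampled every 10 seconds.
samples = sampled_frame_indices(total_frames=35 * 30, fps=30, interval_seconds=10)
print(samples)  # [(0, 0.0), (300, 10.0), (600, 20.0), (900, 30.0)]
```

Because the first frame always satisfies the test, a sample is taken at time zero, and any remainder shorter than one interval at the end of the clip is not sampled again.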

Step #3 Get Predictions using Inference Server

With the frames extracted, we use the fetch_predictions function to interact with our inference server. For each frame, the function sends the image to the API, which returns predictions about the objects in that frame. The predictions, along with their timestamps, are organized into a table sorted chronologically so you can quickly review the results.

    def fetch_predictions(base_url, frames, timestamps, dataset_id, version_id, api_key, confidence=0.5):
        headers = {"Content-Type": "application/x-www-form-urlencoded"}
        df_rows = []
        for idx, frame in enumerate(frames):
            # Serialize the frame (a NumPy array) so it can be sent in the request body.
            numpy_data = pickle.dumps(frame)
            res = requests.post(
                f"{base_url}/{dataset_id}/{version_id}",
                data=numpy_data,
                headers=headers,
                params={"api_key": api_key, "confidence": confidence, "image_type": "numpy"}
            )
            predictions = res.json()
            # Format the frame's position in the video as minutes:seconds.
            time_interval = f"{int(timestamps[idx] // 60)}:{int(timestamps[idx] % 60):02}"
            # One row per detected object.
            for pred in predictions['predictions']:
                row = {
                    "timestamp": time_interval,
                    "time": predictions['time'],
                    "x": pred["x"],
                    "y": pred["y"],
                    "width": pred["width"],
                    "height": pred["height"],
                    "pred_confidence": pred["confidence"],
                    "class": pred["class"]
                }
                df_rows.append(row)
        df = pd.DataFrame(df_rows)
        # Convert "m:ss" back to seconds so rows can be sorted chronologically.
        df['seconds'] = df['timestamp'].str.split(':').apply(lambda x: int(x[0])*60 + int(x[1]))
        df = df.sort_values(by="seconds")
        return df
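The row-building step can be tried without a running server. The sketch below applies the same flattening and timestamp formatting to a made-up response; the helper names `format_timestamp` and `flatten_response`, the class names, and all numeric values are assumptions for illustration. The response shape only mirrors the fields that fetch_predictions reads.

```python
def format_timestamp(seconds):
    """Format a position in seconds as minutes:seconds, zero-padding the seconds."""
    return f"{int(seconds // 60)}:{int(seconds % 60):02}"


def flatten_response(response, timestamp_seconds):
    """Turn one response dict into one row per detected object."""
    rows = []
    for pred in response["predictions"]:
        rows.append({
            "timestamp": format_timestamp(timestamp_seconds),
            "time": response["time"],
            "x": pred["x"],
            "y": pred["y"],
            "width": pred["width"],
            "height": pred["height"],
            "pred_confidence": pred["confidence"],
            "class": pred["class"],
        })
    return rows


# Made-up response with two detections (hypothetical class names and values).
mock_response = {
    "time": 0.05,
    "predictions": [
        {"x": 120.0, "y": 80.0, "width": 40.0, "height": 60.0,
         "confidence": 0.91, "class": "coffee-a"},
        {"x": 300.0, "y": 85.0, "width": 42.0, "height": 58.0,
         "confidence": 0.77, "class": "coffee-b"},
    ],
}

rows = flatten_response(mock_response, timestamp_seconds=75.0)
print(rows[0]["timestamp"])  # 1:15
```

Each detection becomes its own row, so a frame with no detections contributes no rows at all.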

Step #4 Visualize the Result

This function turns your data into bar charts and saves them as image files.

    def plot_and_save(data, title, filename, ylabel, stacked=False, legend_title=None, legend_loc=None, legend_bbox=None):
        plt.style.use('dark_background')
        # Draw a bar chart (stacked when plotting counts per class).
        data.plot(kind='bar', stacked=stacked, figsize=(15, 7))
        plt.title(title)
        plt.ylabel(ylabel)
        plt.xlabel('Timestamp (in minutes:seconds)')
        # Only add a legend when a legend title is given.
        if legend_title:
            plt.legend(title=legend_title, loc=legend_loc, bbox_to_anchor=legend_bbox)
        plt.tight_layout()
        plt.savefig(filename)

Putting It All Together

The main function shows the entire pipeline:

- First, we initialize: set up constants such as the base URL, video path, dataset ID, and API key.

- Then, we extract frames: extract frames from the video at specified intervals using extract_frames.

- We fetch predictions: use the fetch_predictions function to obtain object predictions for each frame from the inference server, and save the predictions as a CSV file inside a results directory.

- Finally, we visualize: plot the overall count of detected objects over time using plot_and_save, then group and plot object detections by class over time.

Execute the script, and the results are automatically processed, saved, and visualized.

    def main():
        # Placeholder settings: replace these with your own values.
        base_url = "http://localhost:9001"
        video_path = "video_path"
        dataset_id = "dataset_id"
        version_id = "version_id"
        api_key = "roboflow_api_key"
        interval_minutes = 1  # placeholder sampling interval, in minutes

        # extract_frames works in seconds, so convert the interval first.
        frames, timestamps = extract_frames(video_path, interval_minutes * 60)
        df = fetch_predictions(base_url, frames, timestamps, dataset_id, version_id, api_key)

        os.makedirs("results", exist_ok=True)

        # Save the raw predictions to CSV.
        df.to_csv("results/predictions.csv", index=False)

        # Convert "m:ss" timestamps to total seconds and count objects per interval.
        # (The column keeps the name 'minutes' even though it holds seconds.)
        df['minutes'] = df['timestamp'].str.split(':').apply(lambda x: int(x[0]) * 60 + int(x[1]))
        object_counts_per_interval = df.groupby('minutes').size().sort_index()
        object_counts_per_interval.index = object_counts_per_interval.index.map(lambda x: f"{x // 60}:{x % 60:02}")
        object_counts_per_interval.to_csv("results/object_counts_per_interval.csv")

        # Quick insights
        print(f"Total unique objects detected: {df['class'].nunique()}")
        print(f"Most frequently detected object: {df['class'].value_counts().idxmax()}")
        print(f"Time interval with the most objects detected: {object_counts_per_interval.idxmax()}")
        print(f"Time interval with the least objects detected: {object_counts_per_interval.idxmin()}")

        plot_and_save(object_counts_per_interval, 'Number of Objects Detected Over Time', "results/objects_over_time.png", 'Number of Objects')

        # Group by interval and class, then sort chronologically.
        objects_by_class_per_interval = df.groupby(['minutes', 'class']).size().unstack(fill_value=0).sort_index()
        objects_by_class_per_interval.index = objects_by_class_per_interval.index.map(lambda x: f"{x // 60}:{x % 60:02}")
        objects_by_class_per_interval.to_csv("results/object_counts_by_class_per_interval.csv")

        plot_and_save(objects_by_class_per_interval, 'Number of Objects Detected Over Time by Class', "results/objects_by_class_over_time.png", 'Number of Objects', True, "Object Class", "center left", (1, 0.5))


    if __name__ == "__main__":
        main()

Running the Code Directly

Here's a quick rundown on how to get it up and running:

1. Begin by cloning the inference dashboard example from GitHub:

git clone https://github.com/roboflow/inference-dashboard-example.git

cd inference-dashboard-example

pip install -r requirements.txt

2. Now, execute the main script with the appropriate parameters:

python main.py --dataset_id [YOUR_DATASET_ID] --api_key [YOUR_API_KEY] --video_path [PATH_TO_VIDEO] --interval_minutes [INTERVAL_IN_MINUTES]
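For readers wondering how flags like these could be read inside a script, here is a minimal sketch using Python's standard argparse module. It is an assumption about how such a command line might be parsed, not the repository's actual main.py; the `build_parser` name, the choice of required flags, the default interval, and the example values are all hypothetical.

```python
import argparse


def build_parser():
    """Build a parser for the four flags used in the command above."""
    parser = argparse.ArgumentParser(description="Inference dashboard example (sketch)")
    parser.add_argument("--dataset_id", required=True, help="Roboflow dataset ID")
    parser.add_argument("--api_key", required=True, help="Roboflow API key")
    parser.add_argument("--video_path", required=True, help="Path to the input video")
    # Assumed default of one minute between sampled frames.
    parser.add_argument("--interval_minutes", type=float, default=1.0,
                        help="Minutes between sampled frames")
    return parser


# Parse an explicit argument list so the sketch runs without a real command line.
args = build_parser().parse_args([
    "--dataset_id", "my-dataset",
    "--api_key", "MY_API_KEY",
    "--video_path", "shelf.mp4",
    "--interval_minutes", "2",
])

# The frame-extraction step works in seconds, so convert once here.
interval_seconds = args.interval_minutes * 60
print(args.video_path, interval_seconds)  # shelf.mp4 120.0
```

In a real script you would call `parse_args()` with no arguments so that the values come from the command line.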

After running the script, head to the results folder to see the processed video data. Inside, you'll find:

- predictions.csv: Holds the specifics of every detected object, such as its location, size, and type.

- object_counts_per_interval.csv: Gives the total number of objects detected in each time interval.

- object_counts_by_class_per_interval.csv: Lists how often each kind of object appears within those intervals.

- Bar charts: These graphs illustrate object detections over the video's duration, both as a total count and by individual object type.
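To make the relationship between these files concrete, the sketch below derives the two kinds of counts from a handful of prediction rows using only the standard library. The rows and class names are made up for illustration, and the script itself uses pandas rather than `Counter`; this is only a sketch of the idea.

```python
from collections import Counter, defaultdict

# Made-up prediction rows: one row per detected object (hypothetical classes).
rows = [
    {"timestamp": "0:00", "class": "coffee-a"},
    {"timestamp": "0:00", "class": "coffee-b"},
    {"timestamp": "0:00", "class": "coffee-a"},
    {"timestamp": "1:00", "class": "coffee-a"},
]

# Total objects per interval (the idea behind object_counts_per_interval.csv).
counts_per_interval = Counter(row["timestamp"] for row in rows)

# Objects per interval and class (the idea behind
# object_counts_by_class_per_interval.csv).
counts_by_class = defaultdict(Counter)
for row in rows:
    counts_by_class[row["timestamp"]][row["class"]] += 1

# Interval with the fewest detections, a possible low-stock signal.
emptiest = min(counts_per_interval, key=counts_per_interval.get)

print(dict(counts_per_interval))        # {'0:00': 3, '1:00': 1}
print(dict(counts_by_class["0:00"]))    # {'coffee-a': 2, 'coffee-b': 1}
print(emptiest)                         # 1:00
```

An interval in which nothing was detected produces no rows, so it does not appear in either count.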

Conclusion

By extracting frames and analyzing them, this dashboard provides a summarized view of activity over time. The ability to categorize detections means you can discern patterns; for example, certain objects may appear more often during specific periods. The insights can be applied across various fields, from detecting anomalies in security footage to understanding wildlife movements in recorded research data.