Contrastive Language-Image Pre-training (CLIP) is a multimodal learning architecture developed by OpenAI. It learns visual concepts from natural language supervision. It bridges the gap between text and visual data by jointly training a model on a large-scale dataset containing images and their corresponding textual descriptions. This is similar to the zero-shot capabilities of GPT-2 and GPT-3.

This article will provide insights into how CLIP bridges the gap between natural language and image processing. In particular, you’ll learn:

- How does CLIP work?

- Architecture and training process

- How CLIP resolves key challenges in computer vision

- Practical applications

- Challenges and limitations while implementing CLIP

- Future advancements

How Does CLIP Work?

CLIP (Contrastive Language-Image Pre-training) is a model developed by OpenAI that learns visual concepts from natural language descriptions. Its effectiveness comes from a large-scale, diverse dataset of images and texts.

What is contrastive learning?

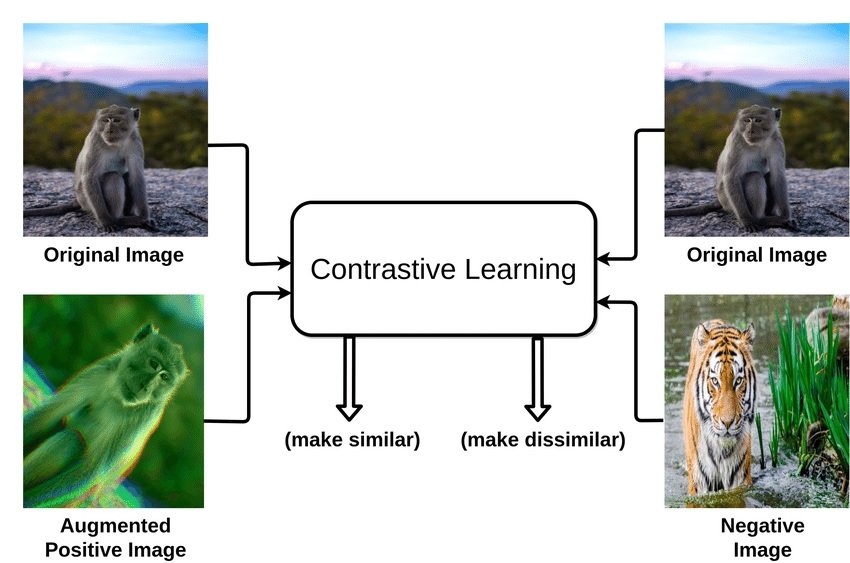

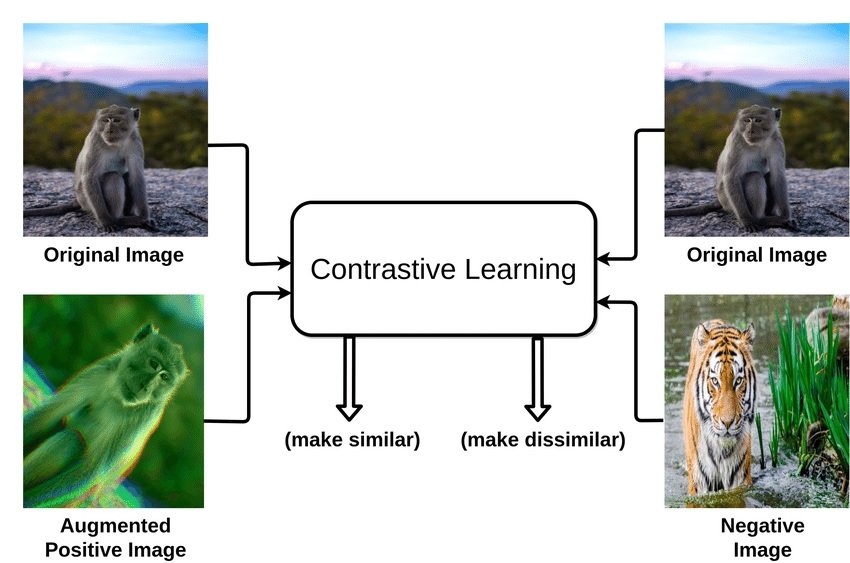

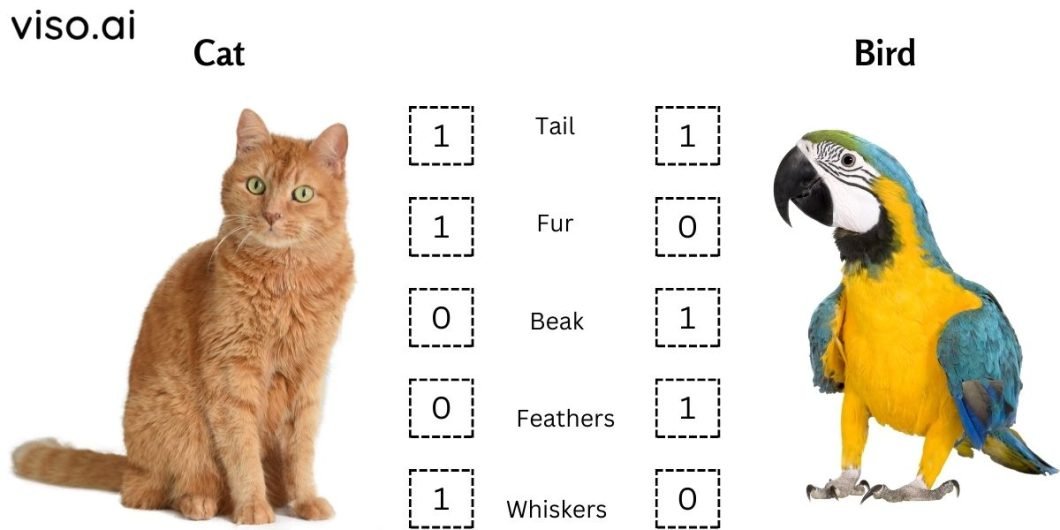

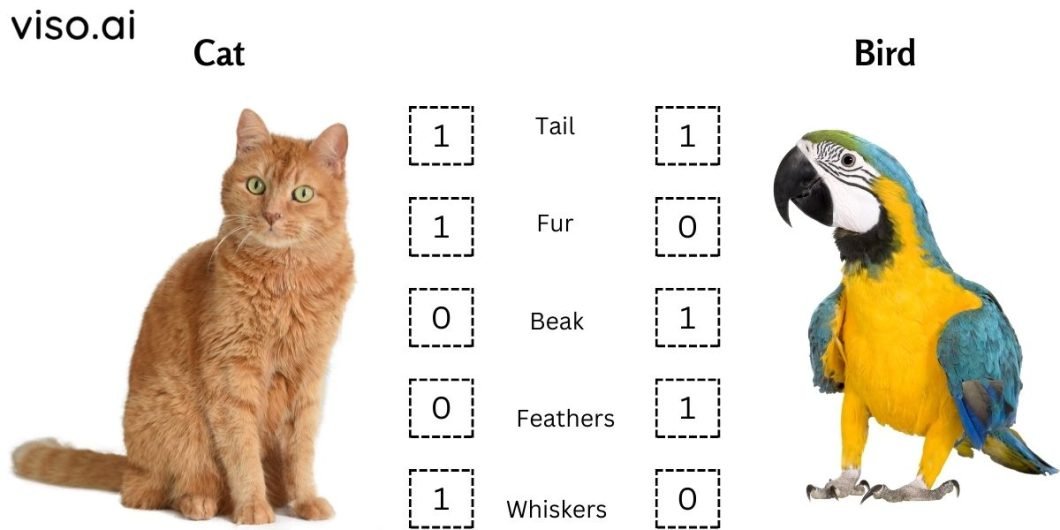

Contrastive learning is a technique used in machine learning, particularly in the field of unsupervised learning. It teaches an AI model to recognize the similarities and differences among many data points.

Imagine you have a main item (the “anchor sample”), a similar item (the “positive sample”), and a different item (the “negative sample”). The goal is to make the model understand that the anchor and the positive item are alike, so it brings them closer together in its representation space, while recognizing that the negative item is different and pushing it away.
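The anchor, positive, and negative idea can be sketched with a simple margin-based (triplet-style) loss. This is a generic illustration of contrastive learning rather than CLIP's own objective; the vectors, the `margin` value, and the function names are assumptions made for the example.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Zero once the positive is at least `margin` more similar than the negative."""
    sim_pos = cosine_similarity(anchor, positive)
    sim_neg = cosine_similarity(anchor, negative)
    return max(0.0, margin - (sim_pos - sim_neg))

# Toy 2-D embeddings (assumed values, not real model outputs).
anchor = [1.0, 0.0]
positive = [0.9, 0.1]   # points in nearly the same direction as the anchor
negative = [0.0, 1.0]   # orthogonal to the anchor

good_loss = triplet_loss(anchor, positive, negative)  # already well separated
bad_loss = triplet_loss(anchor, negative, positive)   # roles swapped on purpose
print(good_loss, bad_loss)
```

A loss of zero means the embeddings already satisfy the "pull together, push apart" goal; a positive loss gives the optimizer a signal to move them.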

What is an example of contrastive learning?

In a computer vision example of contrastive learning, we aim to train a model such as a convolutional neural network to bring similar image representations closer together and push dissimilar ones apart.

A similar or “positive” image might be from the same class (e.g., dogs) as the main image or a modified version of it, whereas a “negative” image would be entirely different, typically from another class (e.g., cats).

CLIP Architecture Explained

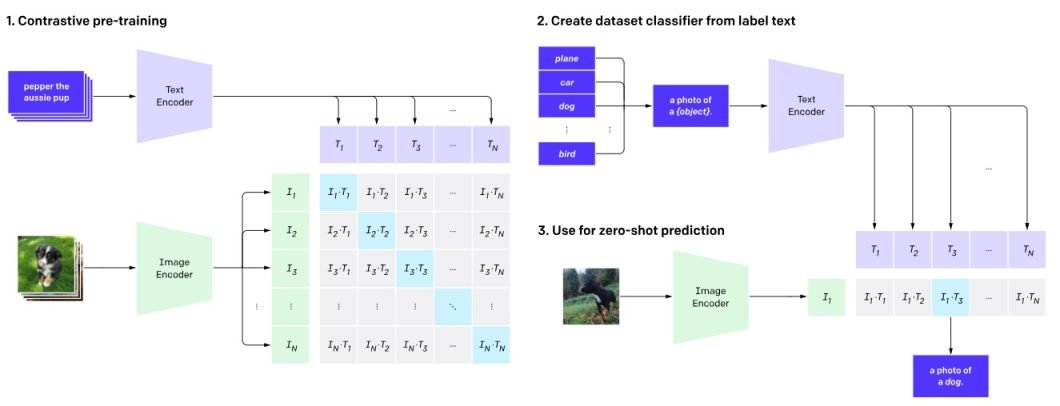

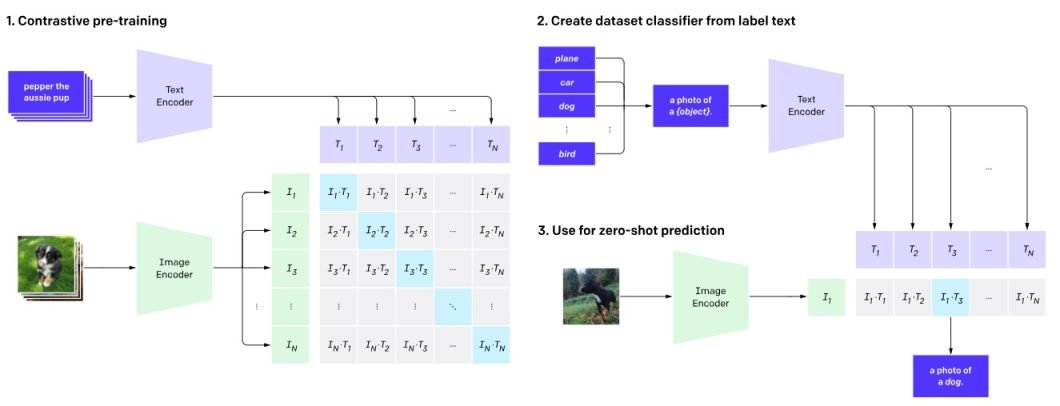

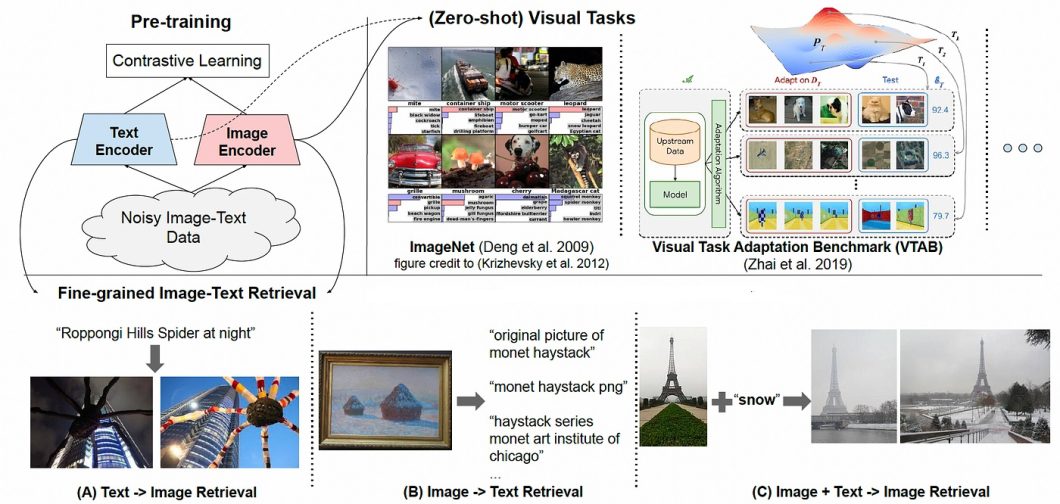

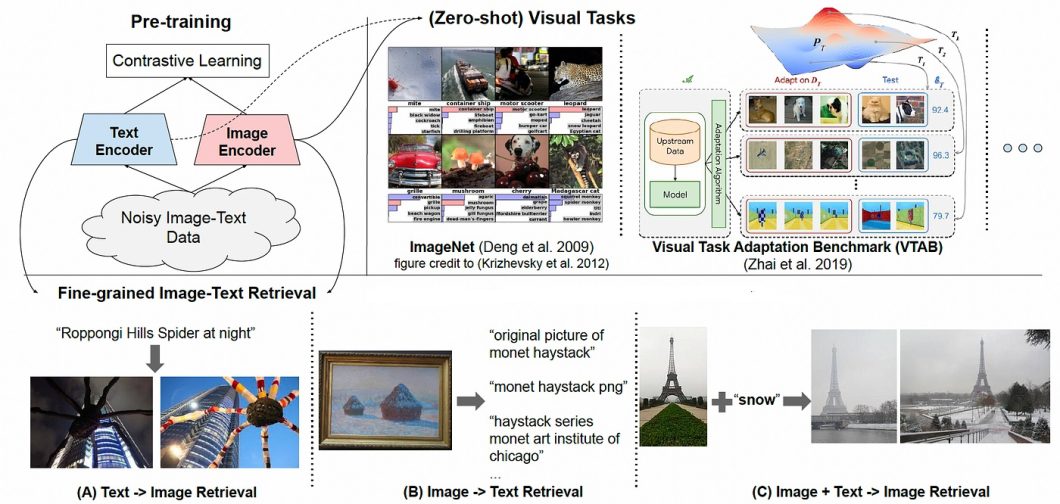

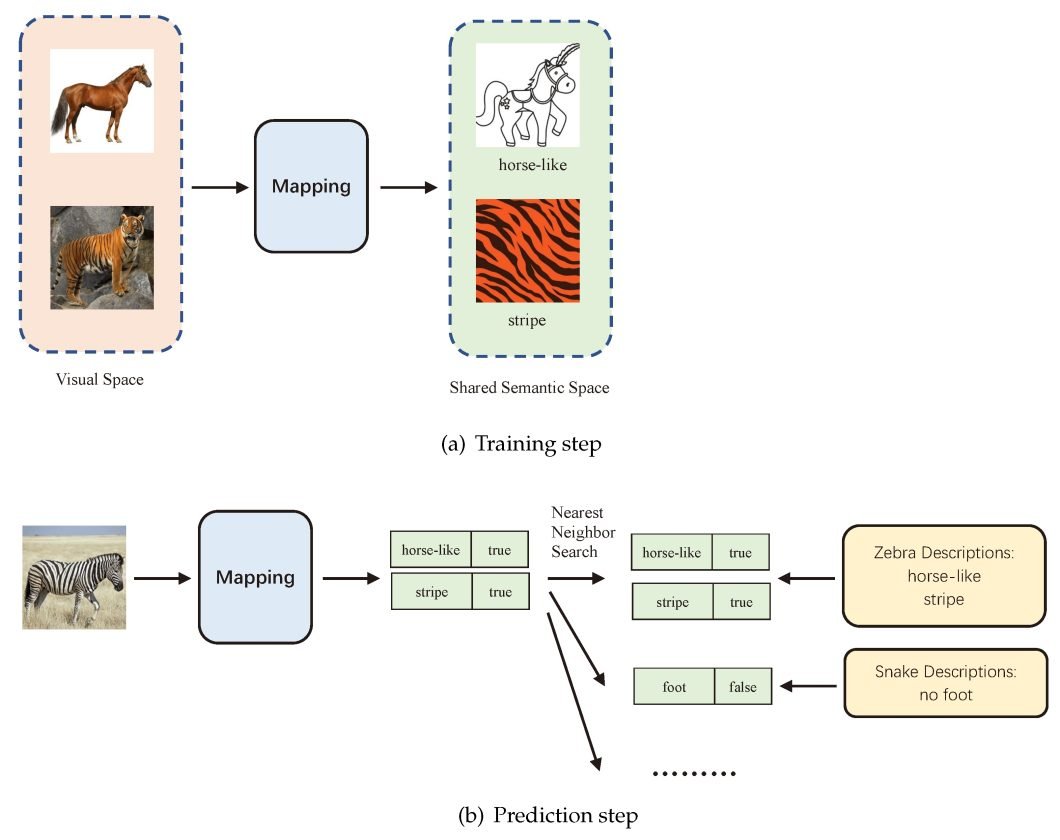

Contrastive Language-Image Pre-training (CLIP) uses a dual-encoder architecture to map images and text into a shared latent space. It works by jointly training two encoders: one for images (a ResNet or a Vision Transformer) and one for text (a Transformer-based language model).

- Image Encoder: The image encoder extracts salient features from the visual input. It takes an image as input and produces a high-dimensional vector representation. It uses either a convolutional neural network (CNN) architecture, like ResNet, or a Vision Transformer (ViT) to extract image features.

- Text Encoder: The text encoder encodes the semantic meaning of the corresponding textual description. It takes a text caption or label as input and produces another high-dimensional vector representation. It uses a Transformer-based architecture (a GPT-2-style Transformer in the original CLIP models) to process text sequences.

- Shared Embedding Space: The two encoders produce embeddings in a shared vector space. This shared embedding space allows CLIP to compare text and image representations and learn their underlying relationships.
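To make the shared embedding space concrete, here is a minimal sketch in plain Python. It assumes each encoder has already produced a feature vector (of a different size per modality) and applies a linear projection into a common space before normalizing. The feature values and the matrices `W_image` and `W_text` are hypothetical stand-ins for learned parameters.

```python
import math

def project(features, weights):
    """Linear projection: one output value per row of `weights`."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]

def l2_normalize(vec):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]

# Hypothetical encoder outputs; the two modalities need not share a size.
image_features = [0.5, 1.0, -0.5]   # stand-in for the image encoder output
text_features = [1.0, 0.5]          # stand-in for the text encoder output

# Hypothetical projection matrices into a shared 2-D space.
W_image = [[1.0, 0.0, 0.0],
           [0.0, 1.0, 0.0]]
W_text = [[0.0, 1.0],
          [1.0, 0.0]]

image_embedding = l2_normalize(project(image_features, W_image))
text_embedding = l2_normalize(project(text_features, W_text))

# Both embeddings now live in the same space and can be compared directly.
similarity = sum(i * t for i, t in zip(image_embedding, text_embedding))
print(similarity)
```

Because both embeddings are unit length, their dot product is the cosine similarity that the rest of the pipeline relies on.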

CLIP Training Process

Step 1: Contrastive Pre-training

CLIP is pre-trained on a large-scale dataset of 400 million (image, text) pairs collected from the internet. During pre-training, the model is presented with batches of images and text captions. Within a batch, each image and its own caption form a genuine match (the caption accurately describes the image), while every other image-caption combination is treated as a mismatch. Training on these pairs produces embeddings in a shared latent space.

Step 2: Create Dataset Classifiers from Label Text

For each image, multiple text descriptions are created, including the correct one and several incorrect ones. In practice, these descriptions are usually built from the dataset’s class labels, for example “a photo of a dog.” This creates a mix of positive (matching) and negative (mismatched) pairs. These descriptions are fed into the text encoder, producing class-specific embeddings.

One important function comes into play during training: the contrastive loss function. This function penalizes the model for incorrectly matching (image, text) pairs and rewards it for correctly matching them in the latent space. It encourages the model to learn representations that accurately capture the similarities between visual and textual information.
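A contrastive loss of this kind can be sketched as a symmetric cross-entropy over the batch similarity matrix. The sketch below is a simplified, standard-library illustration under two assumptions: the embeddings are already L2-normalized, and the temperature is a fixed value of 0.07 (in a real model it would typically be learned). The function names are hypothetical.

```python
import math

def log_softmax(row):
    """Numerically stable log-softmax of a list of scores."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(v - m) for v in row))
    return [v - log_sum for v in row]

def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric cross-entropy where image i is assumed to match text i."""
    n = len(image_embs)
    # Similarity of every image with every text, scaled by the temperature.
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in text_embs]
        for img in image_embs
    ]
    # Image-to-text direction: each row should peak on the diagonal.
    loss_i2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-image direction: each column should peak on the diagonal.
    columns = [list(col) for col in zip(*logits)]
    loss_t2i = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2

# Toy unit-length embeddings (assumed values).
images = [[1.0, 0.0], [0.0, 1.0]]
matching_texts = [[1.0, 0.0], [0.0, 1.0]]   # caption i describes image i
shuffled_texts = [[0.0, 1.0], [1.0, 0.0]]   # captions swapped

low_loss = clip_style_loss(images, matching_texts)
high_loss = clip_style_loss(images, shuffled_texts)
print(low_loss, high_loss)
```

Correctly aligned pairs yield a loss near zero, while swapped captions yield a large loss, which is the penalty-and-reward behavior described above.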

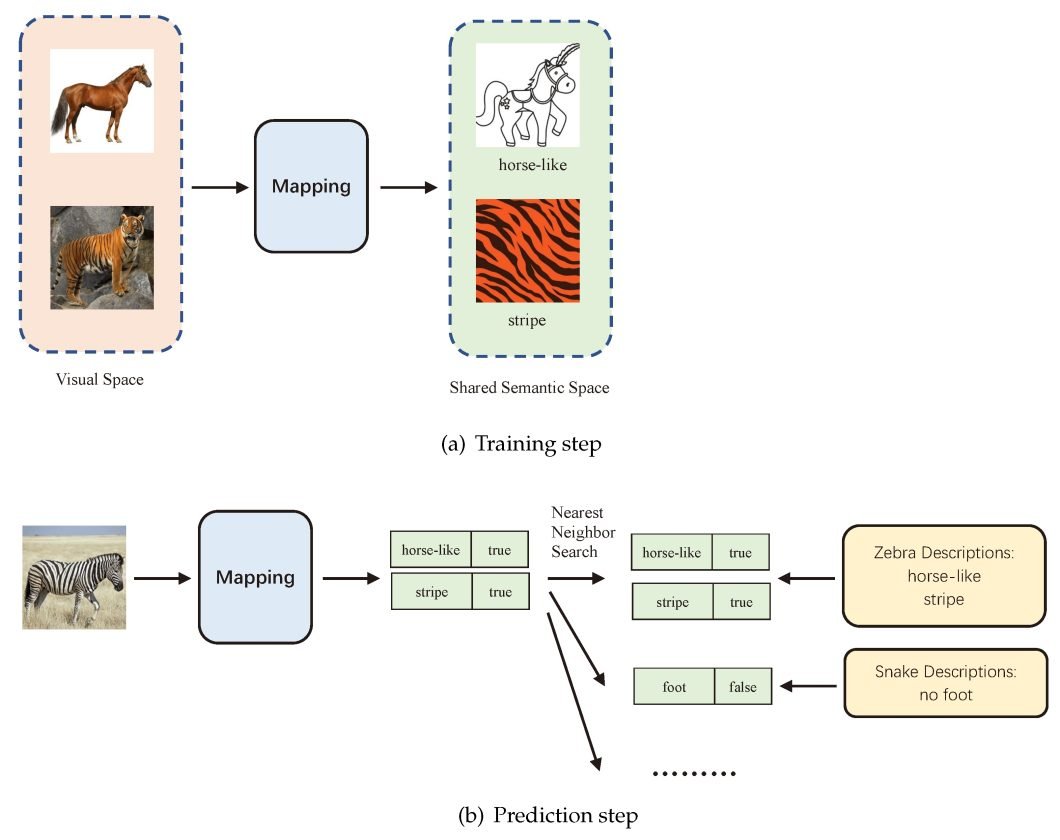

Step 3: Zero-shot Prediction

Now, the trained text encoder is used as a zero-shot classifier. Given a new image, CLIP can make zero-shot predictions. This is done by passing the image through the image encoder and comparing the result against the dataset classifier, without any fine-tuning.

CLIP computes the cosine similarity between the embeddings of all image and text description pairs. During training, it optimizes the parameters of the encoders to increase the similarity of the correct pairs while decreasing the similarity of the incorrect pairs.

This way, CLIP learns a multimodal embedding space where semantically related images and texts are mapped close to each other. The predicted class is the one with the highest logit value.
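The prediction step can be sketched as follows, assuming the image and the class descriptions have already been embedded and L2-normalized. The embeddings, the labels, and the `scale` factor applied to the similarities are illustrative assumptions, not values from a trained model.

```python
import math

def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def zero_shot_classify(image_emb, class_text_embs, labels, scale=100.0):
    """Pick the label whose text embedding is most similar to the image."""
    logits = [
        scale * sum(a * b for a, b in zip(image_emb, text_emb))
        for text_emb in class_text_embs
    ]
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: logits[i])
    return labels[best], probs

# Hypothetical unit-length embeddings for three class descriptions.
labels = ["dog", "cat", "car"]
class_text_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
image_emb = [0.8, 0.6, 0.0]   # closest to the "dog" description

predicted, probs = zero_shot_classify(image_emb, class_text_embs, labels)
print(predicted)
```

No classifier weights are trained here: changing the set of labels only requires embedding new text descriptions.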

Integration Between Natural Language and Image Processing

CLIP’s ability to map images and text into a shared space allows for the integration of NLP and image processing tasks. This allows CLIP to:

- Generate text descriptions for images. It can retrieve relevant text descriptions from the training data by querying the latent space with an image representation, effectively performing image captioning.

- Classify images based on textual descriptions. It can directly compare textual descriptions with the representations of unseen images in the latent space. As a result, zero-shot image classification is performed without requiring labeled training data for specific classes.

- Edit images based on textual prompts. Textual instructions can be used to modify existing images. Users can manipulate the textual input and feed it back into CLIP. This guides the model to generate or modify images following the specified textual prompts. This capability lays a foundation for innovative text-to-image generation and editing tools.

Major Problems in Computer Vision and How CLIP Helps

Semantic Gaps

One of the biggest hurdles in computer vision is the “semantic gap.” The semantic gap is the disconnect between the low-level visual features that computers extract from images and the high-level semantic concepts that humans readily understand.

Traditional vision models excel at tasks like object detection and image classification. However, they often struggle to grasp the deeper meaning and context within an image. This makes it difficult for them to reason about relationships between objects, interpret actions, or infer intentions.

By contrast, CLIP can capture relationships between objects, actions, and emotions depicted in images. Given an image of a child playing in a park, CLIP can identify the presence of the child and the park. Further, it can also infer that the child is having fun.

Data Efficiency

Another significant challenge is the sheer volume of data required to train computer vision models effectively. Deep learning algorithms demand vast labeled image datasets to learn complex relationships between visual features and semantic concepts. Acquiring and annotating such large datasets is expensive and time-consuming, limiting the usability and scalability of vision models.

Meanwhile, CLIP learns from image-text pairs that already exist on the web rather than from manually annotated labels, and it can adapt to new tasks with few or no task-specific examples. This makes it more resource-efficient and adaptable to specialized domains with limited labeled data.

Lack of Explainability and Generalizability

Traditional computer vision models often struggle to explain the reasoning behind their predictions. This “black box” nature hinders trust and limits their application in many scenarios.

However, CLIP, trained on massive numbers of image-text pairs, learns to associate visual features with textual descriptions. This allows for producing captions that explain the model’s reasoning, improving interpretability and boosting trust. Additionally, CLIP’s ability to adapt to various text prompts enhances its generalizability to unseen situations.

Practical Applications of CLIP

Contrastive Language-Image Pre-training is useful for various practical applications, such as:

Zero-Shot Image Classification

One of the most impressive features of CLIP is its ability to perform zero-shot image classification. This means that CLIP can classify images it has never seen before, using only natural language descriptions.

For traditional image classification tasks, AI models are trained on specifically labeled datasets, limiting their ability to recognize objects or scenes outside their training scope. With CLIP, you can provide natural language descriptions to the model. In turn, this allows it to generalize and classify images based on textual input without specific training on those categories.

Multimodal Learning

Another application of CLIP is its use as a component of multimodal learning systems. These can combine different types of data, such as text and images.

For instance, it can be paired with a generative model such as DALL-E. Here, the combined system creates images from text inputs to produce realistic and diverse results. Conversely, it can edit existing images based on text commands, such as changing an object’s color, shape, or style. This enables users to create and manipulate images creatively without requiring artistic skills or tools.

Image Captioning

CLIP’s ability to understand the relationship between images and text makes it suitable for computer vision tasks like image captioning. Given an image, it can retrieve, or help a generative model produce, captions that describe the content and context.

This functionality can be useful in applications where a human-like understanding of images is needed. This may include assistive technologies for the visually impaired or enhancing content for search engines. For example, it could provide detailed descriptions for visually impaired users or contribute to more precise search results.

Semantic Image Search and Retrieval

CLIP can be employed for semantic image search and retrieval beyond simple keyword-based searches. Users can enter natural language queries, and the CLIP model will retrieve the images that best match the textual descriptions.

This approach improves the precision and relevance of search results, making it a valuable tool in content management systems, digital asset management, and any use case requiring efficient and accurate image retrieval.
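Retrieval of this kind reduces to ranking stored image embeddings by their similarity to the query embedding. The sketch below assumes the embeddings are precomputed and unit length; the file names, the vectors, and the `search` helper are hypothetical.

```python
def search(query_emb, image_index, top_k=2):
    """Return the names of the `top_k` images most similar to the query."""
    scored = [
        (sum(q * v for q, v in zip(query_emb, emb)), name)
        for name, emb in image_index.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]

# Hypothetical precomputed, unit-length image embeddings.
image_index = {
    "beach.jpg": [1.0, 0.0],
    "forest.jpg": [0.0, 1.0],
    "coast.jpg": [0.8, 0.6],
}

# Stand-in for the embedding of a text query such as "a sandy shoreline".
query_emb = [1.0, 0.0]

results = search(query_emb, image_index)
print(results)  # ['beach.jpg', 'coast.jpg']
```

For large collections, the linear scan here would normally be replaced by an approximate nearest-neighbor index, but the ranking principle is the same.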

Data Content Moderation

Content moderation filters inappropriate or harmful content from online platforms, such as images containing violence, nudity, or hate speech. CLIP can assist in the content moderation process by detecting and flagging such content based on natural language criteria.

For example, it can identify images that violate a platform’s terms of service or community guidelines, or that are offensive or sensitive to certain groups or individuals. Additionally, it can justify decisions by highlighting the relevant parts of the image or text that triggered the moderation.
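One simple way to sketch flagging by natural language criteria is to compare an image embedding against the embeddings of policy descriptions and flag anything above a similarity threshold. The policy names, the vectors, and the `threshold` of 0.3 are all assumptions for illustration; a real system would tune the threshold on labeled examples and keep a human in the loop.

```python
def flag_image(image_emb, policy_embs, threshold=0.3):
    """Return (policy, score) pairs whose similarity meets the threshold."""
    flagged = []
    for name, emb in policy_embs.items():
        score = sum(a * b for a, b in zip(image_emb, emb))
        if score >= threshold:
            flagged.append((name, score))
    # Strongest match first, so reviewers see the main reason at the top.
    return sorted(flagged, key=lambda item: item[1], reverse=True)

# Hypothetical unit-length embeddings of policy descriptions.
policy_embs = {
    "violence": [1.0, 0.0, 0.0],
    "nudity": [0.0, 1.0, 0.0],
}

risky_image = [0.6, 0.0, 0.8]   # partly aligned with the "violence" text
benign_image = [0.0, 0.0, 1.0]  # unrelated to either policy

risky_flags = flag_image(risky_image, policy_embs)
benign_flags = flag_image(benign_image, policy_embs)
print(risky_flags, benign_flags)
```

Returning the matched policy alongside its score gives a basic, text-based justification for each flag.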

Deciphering Blurred Images

In scenarios with compromised image quality, such as surveillance footage or medical imaging, CLIP can provide valuable insights by interpreting the available visual information in conjunction with relevant textual descriptions. It can provide hints or clues about what the original image might look like based on its semantic content and context. Additionally, when paired with a generative model, it can help reconstruct partial or full images from blurred inputs, or it can retrieve similar images from a large database.

CLIP Limitations and Challenges

Despite its impressive performance and potential applications, CLIP also has some limitations, such as:

Lack of Interpretability

One drawback is the lack of interpretability in CLIP’s decision-making process. Understanding why the model classifies a specific image in a certain way can be challenging. This can hinder its application in sensitive areas where interpretability is crucial, such as healthcare diagnostics or legal contexts.

Lack of Fine-Grained Understanding

CLIP’s understanding is also limited when it comes to fine-grained details. While it excels at high-level tasks, it may struggle with intricate nuances and subtle distinctions within images or texts, limiting its effectiveness in applications requiring granular analysis.

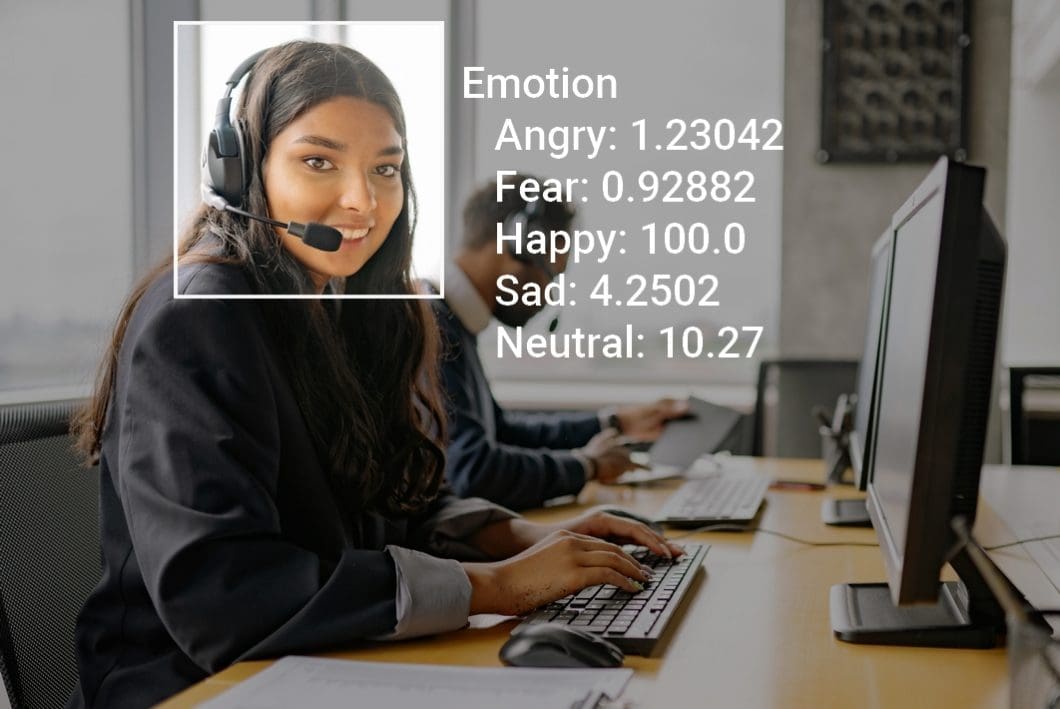

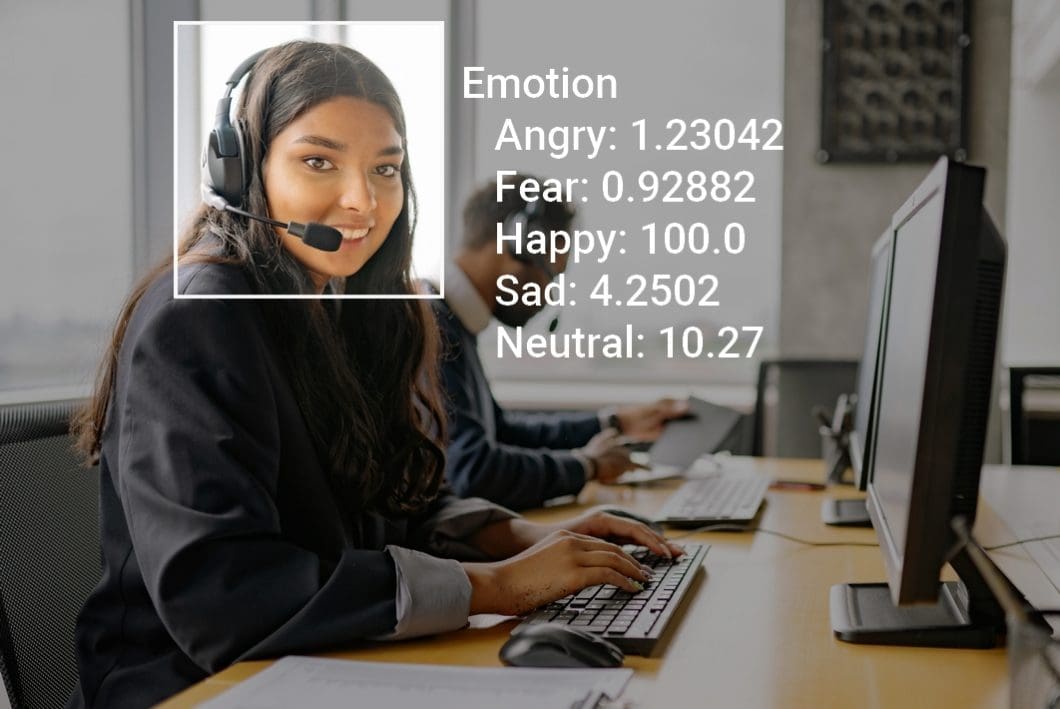

Limited Understanding of Relationships (Emotions, Abstract Concepts, etc.)

CLIP’s comprehension of relationships, especially emotions and abstract concepts, remains constrained. It might misinterpret complex or nuanced visual cues, which in turn hurts its performance in tasks requiring a deeper understanding of human experiences.

Biases in Pretraining Data

Biases present in the pretraining data can transfer to CLIP, potentially perpetuating and amplifying societal biases. This raises ethical concerns, particularly in AI applications like content moderation or decision-making systems. In these use cases, biased outcomes can lead to real-world consequences.

CLIP Advancements and Future Directions

As CLIP continues to reshape the landscape of multimodal learning, its integration into real-world applications is promising. Data scientists are exploring ways to overcome its limitations, with an eye on developing even more advanced and interpretable models.

CLIP promises breakthroughs in areas like image recognition, NLP, medical diagnostics, assistive technologies, advanced robotics, and more. It paves the way for more intuitive human-AI interactions as machines grasp contextual understanding across different modalities.

The versatility of CLIP is shaping a future where AI comprehends the world as humans do. Future research will shape AI capabilities, unlock novel applications, drive innovation, and expand the horizons of possibilities in machine learning and deep learning systems.

What’s Next for Contrastive Language-Image Pre-Training?

As CLIP continues to evolve, it holds immense potential to change the way we interact with information across modalities. By bridging language and vision, CLIP promotes a future where machines can truly “see” and “understand” the world.
