Introduction

Over 150 million tons of plastic lie in the planet's oceans, and research has found that only 20% of plastic waste at sea is linked to maritime activities. The remaining 80% reaches the ocean through rivers.

Blue Eco Line works with local government organizations to monitor and reduce pollution in rivers using computer vision. Blue Eco Line uses existing infrastructure (bridges) to place cameras before and after a city, so it can measure the impact of its solution. This helps governments understand how their local area contributes to river pollution. For the first time, they can assess their efforts and adjust investments or programs to positively impact the water system.
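Suppose both the upstream and the downstream camera count waste items over the same period. The difference between the two counts then approximates what the city stretch adds to the river. The sketch below assumes per-day counts keyed by a date string. The function name `net_city_contribution` and the example numbers are hypothetical, and this simple subtraction illustrates the idea; it is not Blue Eco Line's actual methodology.

```python
from typing import Dict


def net_city_contribution(upstream: Dict[str, int], downstream: Dict[str, int]) -> Dict[str, int]:
    """Hypothetical helper: per-day downstream count minus upstream count.

    A positive value suggests more waste leaves the city stretch than enters it.
    Days that are missing from either camera are skipped.
    """
    shared_days = sorted(set(upstream) & set(downstream))
    return {day: downstream[day] - upstream[day] for day in shared_days}


# Illustrative, made-up counts
upstream_counts = {"2024-05-01": 40, "2024-05-02": 35}
downstream_counts = {"2024-05-01": 62, "2024-05-02": 30, "2024-05-03": 12}
print(net_city_contribution(upstream_counts, downstream_counts))
# → {'2024-05-01': 22, '2024-05-02': -5}
```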

With a vision system in place that can identify and monitor pollution, Blue Eco Line can then implement an autonomous river cleaning system. This system removes plastic from the river using a vision-powered conveyor belt.

The autonomous system is able to identify, collect, and remove pollution from the river. Vision guides an autonomous conveyor belt that retrieves pollutants and brings them to the riverbank for processing.

This autonomous system replaces solutions that rely on human intervention, which often leads to inadequate maintenance and, consequently, lower levels of waste capture. Those systems capture pollution but do not remove the waste. As a result, the capture devices reach capacity and new waste is no longer collected.

In this technical guide, we will walk through the process of building an autonomous river monitoring and cleaning system. We'll use Roboflow to collect, label, and process visual data, then use Intel C3 Sapphire Rapids for model training and model deployment.

We'll focus on the implementation used by Blue Eco Line to show how you can create a vision system for remote environments. The same system can be used for infrastructure monitoring, environmental understanding, transportation analytics, and more.

The full system is open source and available in the project's GitHub repository. Let's get started!

System Overview and Architecture

The Blue Eco Line system is currently installed to help clean three distinct rivers: the Canal Bianco in Adria, the Piave in Eraclea, and the Po in Ficarolo (the largest river in Italy).

This system is architected to handle the difficult and diverse constraints of deploying computer vision systems in remote, dynamic environments without Wi-Fi. Blue Eco Line uses a proprietary machine learning model to identify organic and inorganic material.

The system operates at the edge, using a Raspberry Pi and two cameras in remote areas that do not have Wi-Fi. The cameras run from 30 minutes before sunrise until 30 minutes after sunset, capturing 1 image every 5 seconds. When there is not enough daylight to visually monitor the waterway, the system sends the data to a server for processing and analysis.
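The daily capture schedule comes down to simple arithmetic. This sketch assumes sunrise and sunset times are supplied from elsewhere, for example an almanac library or a lookup table (not shown). It checks whether a timestamp falls inside the capture window and estimates how many images one camera produces per day at one frame every 5 seconds. The function names and the example times are hypothetical.

```python
from datetime import datetime, timedelta

MARGIN = timedelta(minutes=30)  # start 30 min before sunrise, stop 30 min after sunset
INTERVAL_SECONDS = 5            # one image every 5 seconds


def in_capture_window(now: datetime, sunrise: datetime, sunset: datetime) -> bool:
    """True if `now` lies between (sunrise - 30 min) and (sunset + 30 min), inclusive."""
    return sunrise - MARGIN <= now <= sunset + MARGIN


def images_per_day(sunrise: datetime, sunset: datetime) -> int:
    """Number of images a single camera captures during one day's window."""
    window = (sunset + MARGIN) - (sunrise - MARGIN)
    return int(window.total_seconds()) // INTERVAL_SECONDS


# Example with hypothetical sunrise/sunset times
sunrise = datetime(2024, 6, 1, 6, 0)
sunset = datetime(2024, 6, 1, 18, 0)
print(in_capture_window(datetime(2024, 6, 1, 5, 45), sunrise, sunset))  # True
print(images_per_day(sunrise, sunset))  # 13 h window / 5 s = 9360
```

With two cameras per site, the daily image count doubles. This is one reason the data is processed on a server rather than labeled by hand.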

Now that you understand how the system works in practice, let's begin building it.

Collect, Upload, and Label Plastic Waste Data Using Roboflow

To build a proprietary computer vision model, you'll want to capture data that resembles the environment you want to analyze and understand. You have three starting points:
- Deploy an empty-state model to capture data using active learning.
- Start with an open source pre-trained model from Roboflow Universe.
- Use your existing images and videos.

Whether you're starting with no data, some data, or a model, capturing data from your real-world environment will improve the accuracy of your model and tailor it to your performance needs.
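Active learning means prioritizing the images the model is least sure about for labeling. The sketch below illustrates one generic strategy, uncertainty sampling; it is not Roboflow's active learning API. It assumes you already have the confidence score of each predicted box for every image. The thresholds `low=0.3` and `high=0.6` are arbitrary placeholders that you would tune.

```python
from typing import List, Sequence


def select_for_labeling(
    confidences: Sequence[Sequence[float]], low: float = 0.3, high: float = 0.6
) -> List[int]:
    """Return indices of images that contain at least one uncertain prediction.

    confidences[i] holds the confidence score of every box predicted on image i.
    A box counts as uncertain when low <= score < high. Images with only
    confident boxes, only very low scores, or no boxes at all are skipped.
    """
    selected = []
    for i, scores in enumerate(confidences):
        if any(low <= s < high for s in scores):
            selected.append(i)
    return selected


# Example: only image 1 has a box in the uncertain band
print(select_for_labeling([[0.92], [0.45, 0.95], [], [0.10]]))  # [1]
```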

Collect Images at the Edge

After Blue Eco Line configures the Reolink camera in a remote environment near the river, the next step is to collect images from the RTSP stream using gather.py. These images are used to train the model. If you already have data, you can skip this section.

```python
import os

import cv2


class RTSPImageCapture:
    def __init__(self, rtsp_url, output_dir):
        self.rtsp_url = rtsp_url
        self.output_dir = output_dir
        self.cap = None
        self.image_count = 0

    def open_stream(self):
        # Create a VideoCapture object to connect to the RTSP stream
        self.cap = cv2.VideoCapture(self.rtsp_url)
        # Check if the VideoCapture object was successfully opened
        if not self.cap.isOpened():
            raise RuntimeError("Could not open RTSP stream.")
        # Create the output directory if it doesn't exist
        os.makedirs(self.output_dir, exist_ok=True)

    def capture_images(self):
        while True:
            # Grab a frame from the RTSP stream
            ret, frame = self.cap.read()
            # Check if the frame was captured successfully
            if not ret:
                print("Error: Could not read frame from RTSP stream.")
                break
            # Save the frame as a JPG image
            image_filename = os.path.join(self.output_dir, f'image_{self.image_count:04d}.jpg')
            cv2.imwrite(image_filename, frame)
            # Increment the image count
            self.image_count += 1
            # Display the captured frame (optional; requires a display)
            cv2.imshow('Captured Frame', frame)
            # Exit the loop when 'q' is pressed
            if cv2.waitKey(1) & 0xFF == ord('q'):
                break

    def close_stream(self):
        # Release the VideoCapture object and close the OpenCV window
        if self.cap is not None:
            self.cap.release()
        cv2.destroyAllWindows()

    def main(self):
        try:
            self.open_stream()
            self.capture_images()
        finally:
            self.close_stream()


if __name__ == "__main__":
    # Define the RTSP stream URL and output directory
    rtsp_url = 'rtsp://your_rtsp_stream_url'
    output_dir = 'rivereye005_cam1_data'
    # Create an instance of the RTSPImageCapture class
    image_capture = RTSPImageCapture(rtsp_url, output_dir)
    # Run the main method of the class
    image_capture.main()
```
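As written, `capture_images` saves every frame the stream delivers, whereas the deployed cameras keep one image every 5 seconds. One way to bridge that gap is a small time-based sampler. The idea is to keep calling `read()` on every frame, so OpenCV's buffer does not fall behind the live stream, but only write some frames to disk. `IntervalSampler` is a hypothetical helper and is not part of the original script.

```python
import time
from typing import Optional


class IntervalSampler:
    """Allow at most one save every `interval_s` seconds (e.g. 5 s)."""

    def __init__(self, interval_s: float = 5.0):
        self.interval_s = interval_s
        self._last_saved: Optional[float] = None

    def should_save(self, now: Optional[float] = None) -> bool:
        # Use a monotonic clock so system clock changes don't break the interval
        now = time.monotonic() if now is None else now
        if self._last_saved is None or now - self._last_saved >= self.interval_s:
            self._last_saved = now
            return True
        return False


# Usage inside capture_images (sketch):
#     sampler = IntervalSampler(5.0)
#     ...
#     ret, frame = self.cap.read()
#     if ret and sampler.should_save():
#         cv2.imwrite(image_filename, frame)
```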

Upload Images to Roboflow

Next, use load.py to upload the images to Roboflow and assign labeling jobs via the API. Instructions for finding your API key are in the Roboflow documentation.

```python
import os

import requests
from roboflow import Roboflow


class RoboflowUploader:
    def __init__(self, workspace, project, api_key, labeler_email, reviewer_email):
        self.WORKSPACE = workspace
        self.PROJECT = project
        self.ROBOFLOW_API_KEY = api_key
        self.LABELER_EMAIL = labeler_email
        self.REVIEWER_EMAIL = reviewer_email
        self.job_info = {}  # Batch ID -> image count, filled by extract_batches
        self.rf = Roboflow(api_key=self.ROBOFLOW_API_KEY)
        self.upload_project = self.rf.workspace(self.WORKSPACE).project(self.PROJECT)

    def upload_images(self, folder_path):
        if not os.path.exists(folder_path):
            print(f"Folder '{folder_path}' does not exist.")
            return
        for image_name in os.listdir(folder_path):
            image_path = os.path.join(folder_path, image_name)
            if os.path.isfile(image_path):
                print('Image path:', image_path)
                # Upload into a named batch and tag the image with the camera ID
                response = self.upload_project.upload(image_path, batch_name='intelbatchtest', tag_names=['RE005'])
                print(image_path, response)

    def extract_batches(self):
        url = f"https://api.roboflow.com/{self.WORKSPACE}/{self.PROJECT}/batches"
        headers = {"Authorization": f"Bearer {self.ROBOFLOW_API_KEY}"}
        response = requests.get(url, headers=headers)
        if response.status_code == 200:
            data = response.json()
            print(data)
            for batch in data['batches']:
                self.job_info[batch['id']] = batch['images']
        else:
            print(f"Failed to retrieve data. Status code: {response.status_code}")

    def assign_jobs(self):
        url = f"https://api.roboflow.com/{self.WORKSPACE}/{self.PROJECT}/jobs"
        headers = {"Content-Type": "application/json"}
        for job_id in self.job_info.keys():
            data = {
                "name": "Job created by API",
                "batch": job_id,
                "num_images": self.job_info[job_id],
                "labelerEmail": self.LABELER_EMAIL,
                "reviewerEmail": self.REVIEWER_EMAIL,
            }
            params = {"api_key": self.ROBOFLOW_API_KEY}
            response = requests.post(url, headers=headers, json=data, params=params)
            if response.status_code == 200:
                print("Job created successfully!")
            else:
                print(f"Error occurred: {response.status_code} - {response.text}")


if __name__ == "__main__":
    uploader = RoboflowUploader(
        workspace="workspace_name",
        project="project_name",
        api_key="api_key",
        labeler_email="labeler_email",
        reviewer_email="reviewer_email",
    )
    uploader.upload_images("rivereye005_cam1_data")
    uploader.extract_batches()
    uploader.assign_jobs()
```

Make sure to fill in the workspace name, project name, API key, labeler_email, and reviewer_email.
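`upload_images` sends every regular file in the folder to `upload`, including stray files such as `.DS_Store` or logs. You could iterate over the hypothetical `list_image_files` helper below instead of `os.listdir` to keep non-images out of the batch. It assumes your captures are saved with common image extensions.

```python
import os
from typing import List

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def list_image_files(folder_path: str) -> List[str]:
    """Return sorted paths of regular files in folder_path with an image extension.

    Subdirectories and non-image files are skipped; the extension check is
    case-insensitive, so 'IMG.PNG' is kept.
    """
    paths = []
    for name in sorted(os.listdir(folder_path)):
        path = os.path.join(folder_path, name)
        if os.path.isfile(path) and os.path.splitext(name)[1].lower() in IMAGE_EXTENSIONS:
            paths.append(path)
    return paths


# Usage sketch inside upload_images:
#     for image_path in list_image_files(folder_path):
#         self.upload_project.upload(image_path, batch_name='intelbatchtest', tag_names=['RE005'])
```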

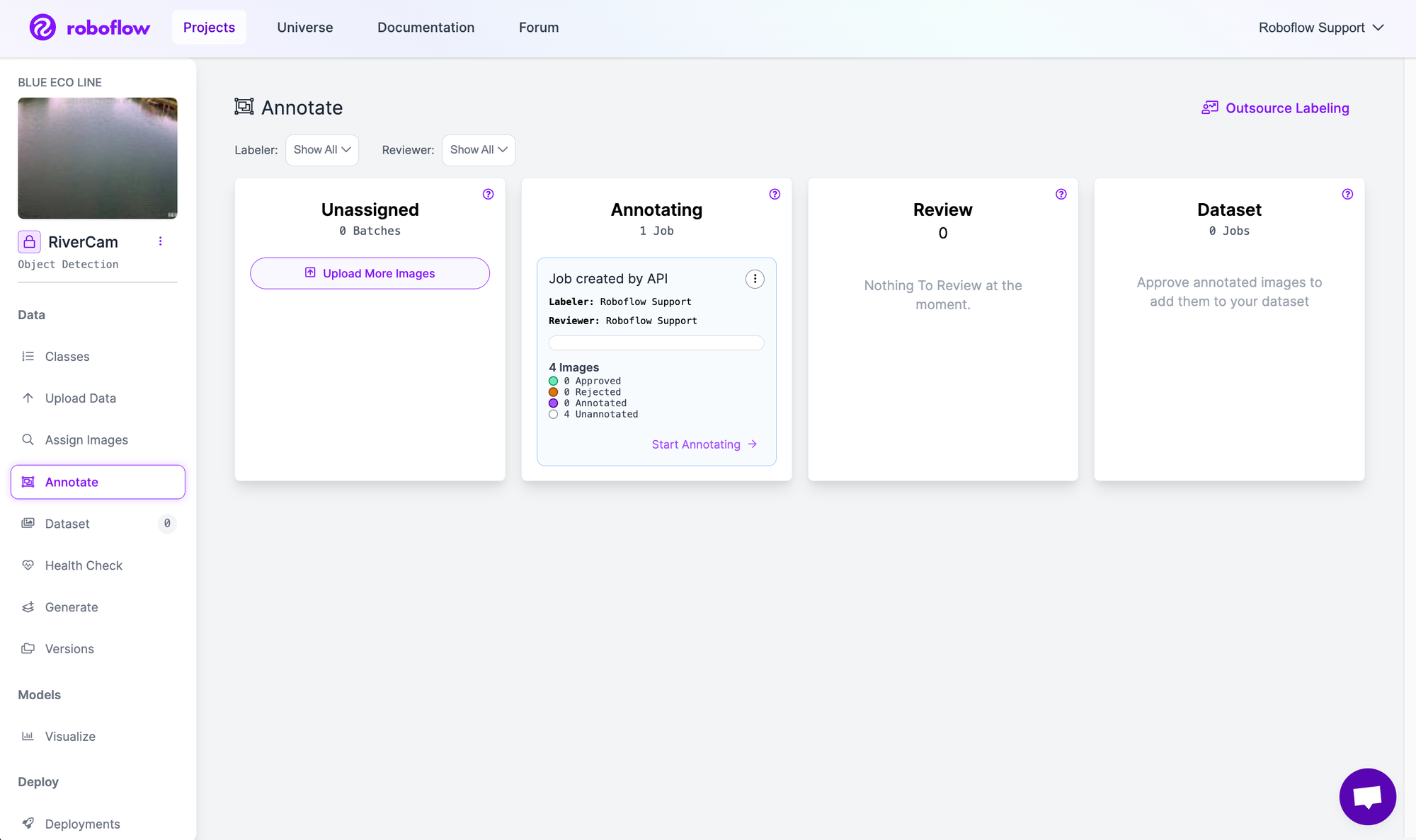

Once the images are uploaded to Roboflow for labeling, they will show up as a Job in the Annotate dashboard. Multiple people can label the images, and the labels move through an approval workflow to ensure that only high-quality data is added to the Dataset.

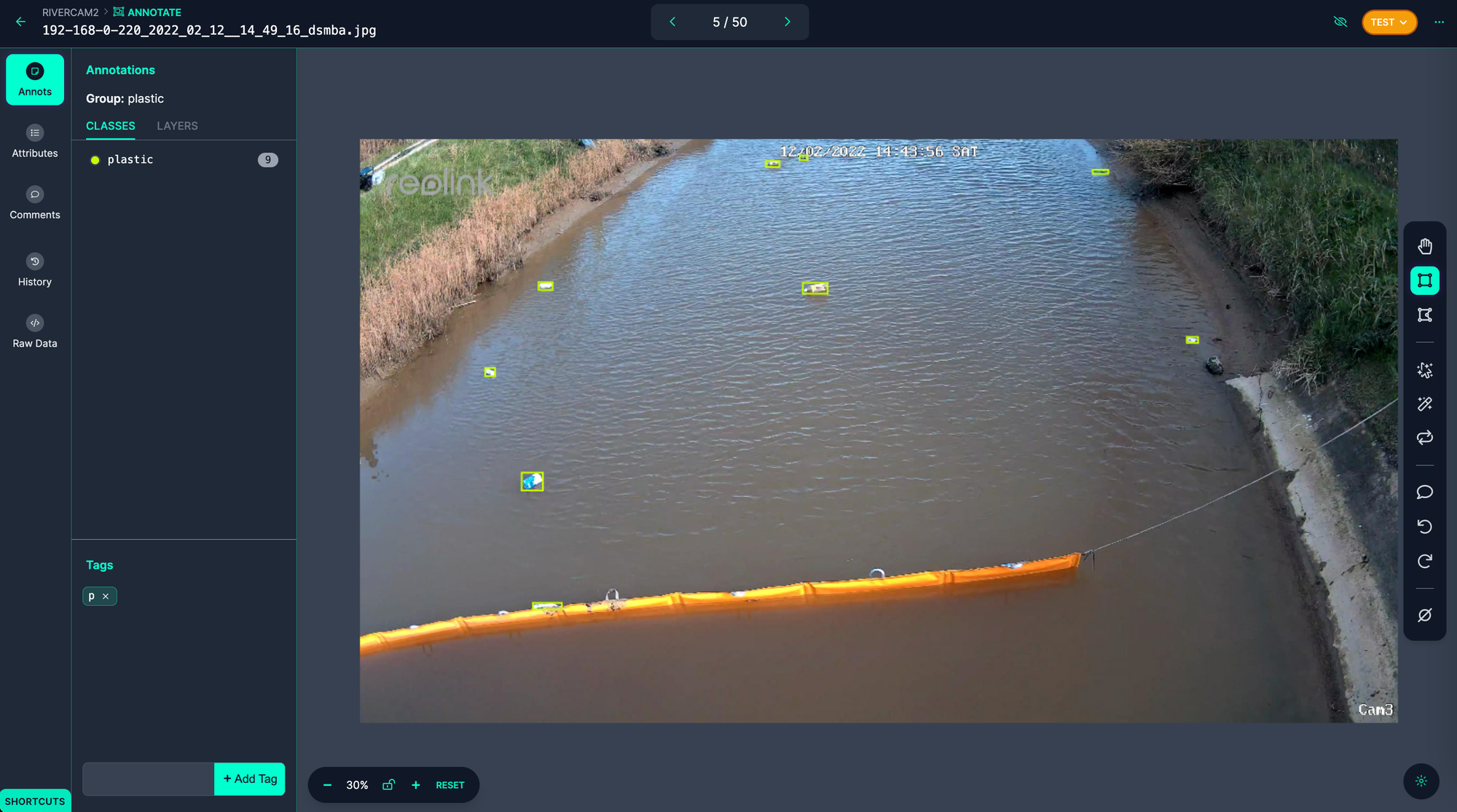

Label Images with Roboflow for Model Training

Within Roboflow, images can be labeled for object detection, instance segmentation, keypoint detection, and classification. Roboflow supports several labeling methods:
- manual click-and-drag annotation
- single-click object annotation
- per-image automatic labeling
- full-dataset automated labeling

Which method fits best depends on the type of data you're using and the scale of labeling.
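Whichever labeling method you use, each box is ultimately stored as coordinates. This guide later exports the dataset in YOLOv8 format. In that format, each box is written as a class index followed by its center x, center y, width, and height, all normalized by the image dimensions. The helpers below are an illustrative sketch of that conversion from pixel corners; Roboflow performs it for you on export.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]


def to_yolo(box: Box, img_w: int, img_h: int) -> Box:
    """Convert a pixel box (x_min, y_min, x_max, y_max) into YOLO's
    normalized (x_center, y_center, width, height), each in [0, 1]."""
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return x_c, y_c, w, h


def yolo_label_line(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Format one line of a YOLO .txt label file."""
    x_c, y_c, w, h = to_yolo(box, img_w, img_h)
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


# A 100x50 px box with its top-left corner at (200, 100) in an 800x400 image
print(yolo_label_line(0, (200, 100, 300, 150), 800, 400))
# → 0 0.312500 0.312500 0.125000 0.125000
```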

Generate a Dataset Version for Model Training

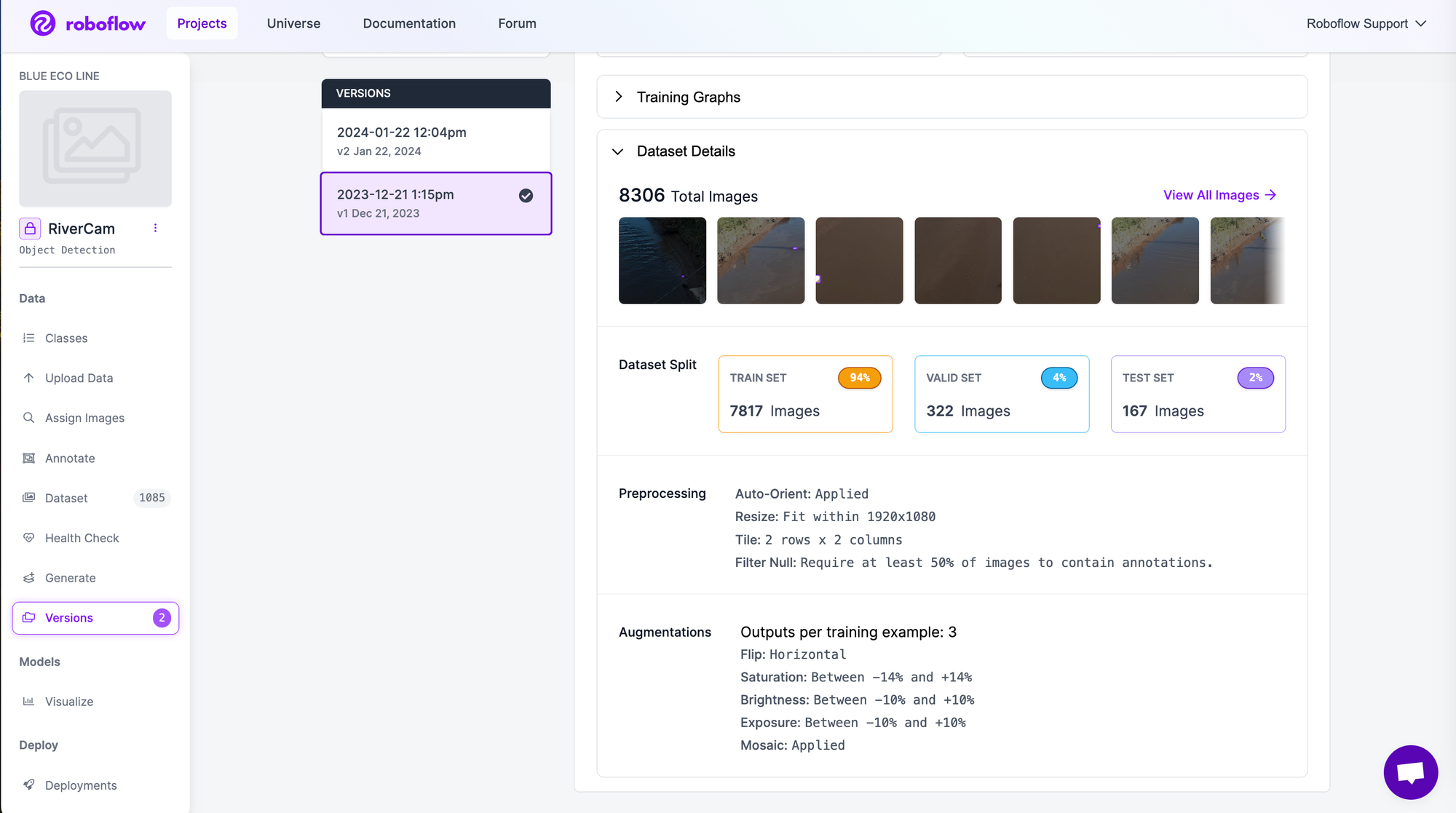

Now that you have annotated images, the next step is to generate a dataset Version. In the hosted Roboflow application (shown in the screenshot), you can select preprocessing and augmentations before creating a Version. Each unique Version is saved, so you can keep track of it as you iterate on experiments to improve model quality.

To automate this step as part of a deployment pipeline, you can generate a dataset version with the Roboflow Python package using generate.py.

This includes adding preprocessing and augmentations such as tiling, resizing, and brightness adjustments to improve the robustness of the model. For example, tiling can improve the accuracy of detecting small objects such as plastic. It does this by zooming your detector in on small objects while still allowing fast inference.
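To illustrate what tiling does, the sketch below computes the crop windows for splitting a large frame into square tiles with optional overlap. Each tile is a smaller crop, so small floating items occupy more of it. This is a generic sliding-window illustration, not Roboflow's own tiling implementation, and it assumes square tiles no larger than the image. At inference time, boxes predicted on a tile would need to be shifted by that tile's `(x0, y0)` offset to map back to the full frame.

```python
from typing import List, Tuple


def tile_coords(img_w: int, img_h: int, tile: int, overlap: int = 0) -> List[Tuple[int, int, int, int]]:
    """Split an image into square tiles of side `tile`, with `overlap` pixels
    shared between neighbours. Returns (x0, y0, x1, y1) boxes. Edge tiles are
    shifted inward so every tile stays full size and inside the image."""
    if tile > img_w or tile > img_h:
        raise ValueError("tile must not exceed the image size")
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than tile")

    def starts(length: int) -> List[int]:
        s = list(range(0, length - tile + 1, stride))
        if s[-1] + tile < length:  # cover the leftover strip at the far edge
            s.append(length - tile)
        return s

    return [(x, y, x + tile, y + tile) for y in starts(img_h) for x in starts(img_w)]


# A 1000x600 frame split into 400 px tiles gives a 3 x 2 grid
coords = tile_coords(1000, 600, 400)
print(len(coords), coords[0], coords[-1])  # 6 (0, 0, 400, 400) (600, 200, 1000, 600)
```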

```python
from roboflow import Roboflow

API_KEY = "api_key"
WORKSPACE = "workspace_name"
PROJECT = "project_name"

rf = Roboflow(api_key=API_KEY)
project = rf.workspace(WORKSPACE).project(PROJECT)

settings = {
    "augmentation": {
        # "bb*" keys are bounding-box-level augmentations
        "bbblur": {"pixels": 1.5},
        "bbbrightness": {"brighten": True, "darken": False, "percent": 91},
    },
    "preprocessing": {
        "contrast": {"type": "Contrast Stretching"},
        "filter-null": {"percent": 50},
        "grayscale": True,
    },
}

# Generate a new dataset version with these settings
version = project.generate_version(settings=settings)
```

Now that the dataset version is generated, export the dataset to train a model on Google Cloud Platform (GCP) using Intel C3 Sapphire Rapids.

```python
from roboflow import Roboflow

rf = Roboflow(api_key='YOUR_API_KEY')
project = rf.workspace('WORKSPACE').project('PROJECT')
# Download version 1 of the dataset in YOLOv8 format
dataset = project.version(1).download('yolov8')
```

Train an Ultralytics YOLOv8 Model with Intel C3 Sapphire Rapids on GCP

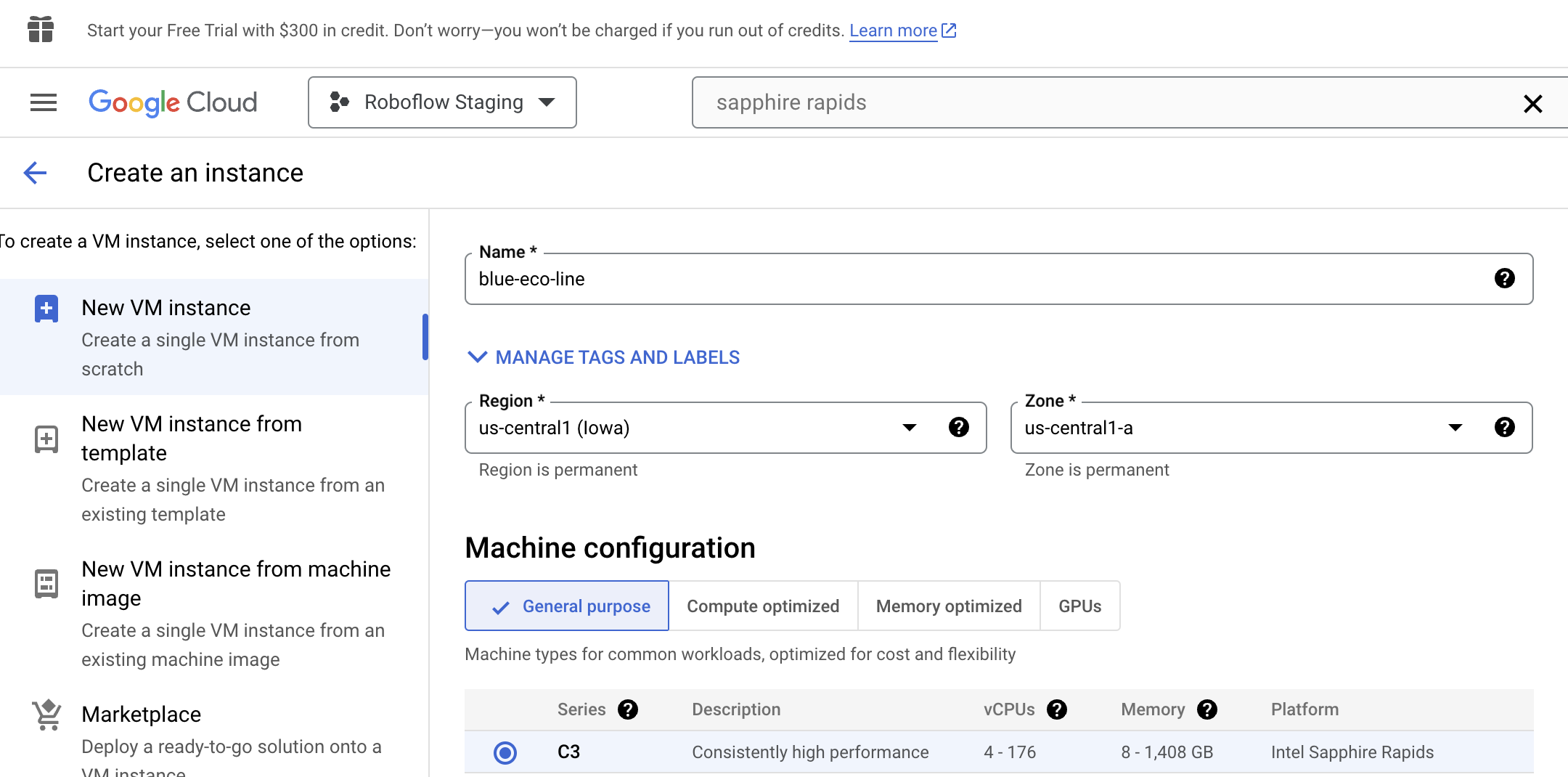

Here are the steps for using a C3 Sapphire Rapids Virtual Machine on GCP to train a YOLOv8 model.

Create a C3 VM instance on GCP to get access to the machine for model training.

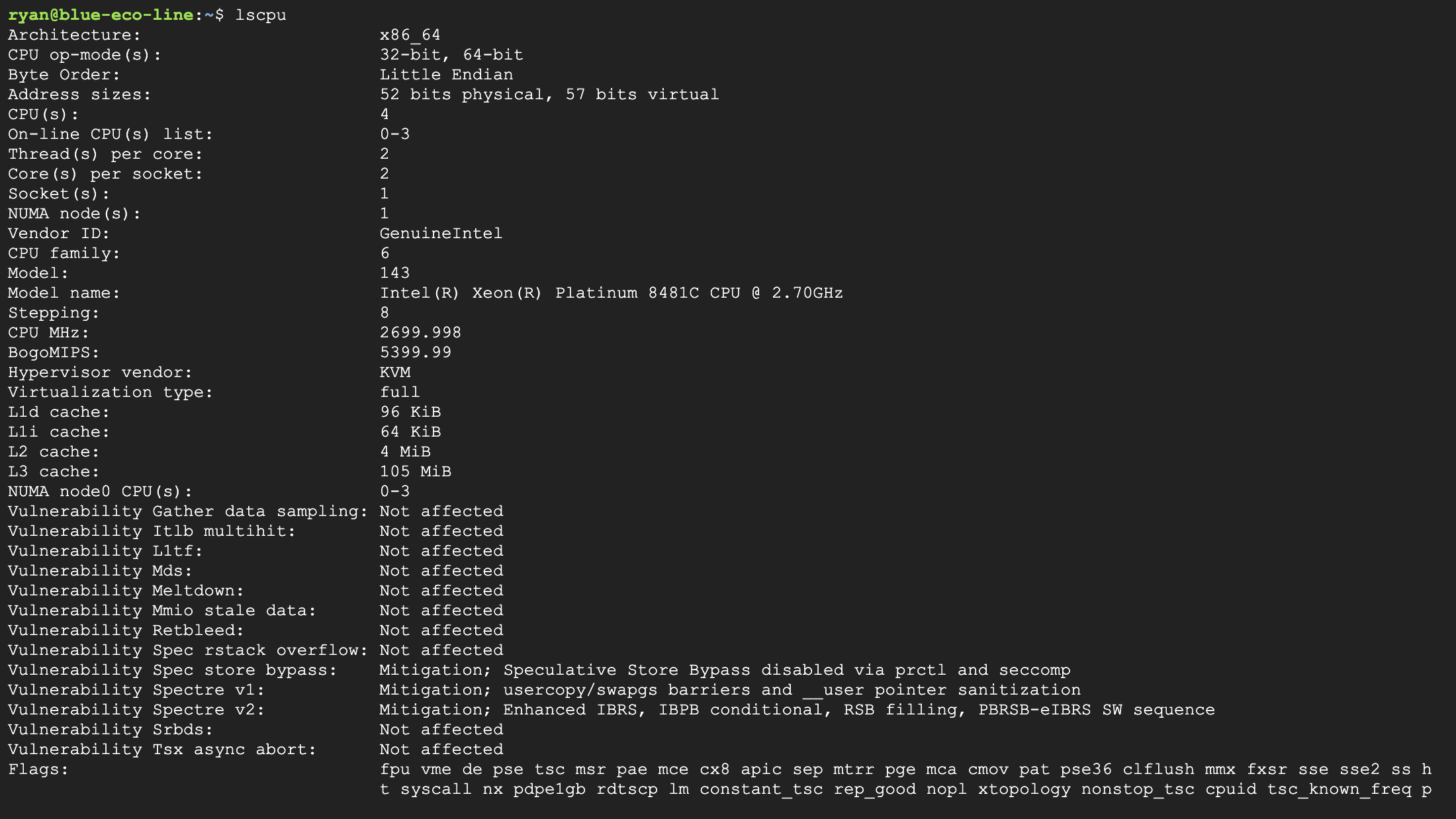

Once you SSH into your C3 Virtual Machine, you can view the Intel hardware specs.

The notebook train_c3_sapphire_rapids.ipynb lets you train a custom YOLOv8 model on your dataset using the C3 VM. Start by installing YOLOv8 and checking the C3 Sapphire Rapids hardware specs.
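Sapphire Rapids (4th Gen Intel Xeon Scalable) is the first Xeon generation with Intel Advanced Matrix Extensions (AMX). Linux reports AMX as CPU flags such as `amx_tile`, `amx_bf16`, and `amx_int8`. As a hedged sketch of the hardware check on a Linux VM, you can parse `/proc/cpuinfo` for those flags; `lscpu` shows the same information interactively. The helper names are hypothetical.

```python
from typing import Dict, Set

# Flags that Linux reports for AMX and AVX-512 support
FEATURES = ("amx_tile", "amx_bf16", "amx_int8", "avx512f", "avx512_bf16")


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Parse the first 'flags' line of /proc/cpuinfo content into a set."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()


def feature_report(cpuinfo_text: str) -> Dict[str, bool]:
    """Map each feature of interest to whether the CPU advertises it."""
    flags = cpu_flags(cpuinfo_text)
    return {name: name in flags for name in FEATURES}


# On the VM:
#     with open("/proc/cpuinfo") as f:
#         print(feature_report(f.read()))
```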

Use the following command to train the custom model on the exported dataset.

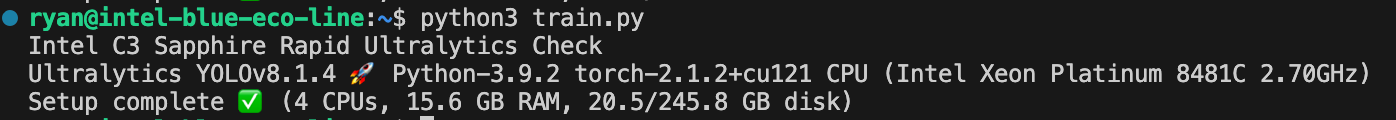

```python
%cd {HOME}
!yolo task=detect mode=train model=yolov8s.pt data={dataset.location}/data.yaml epochs=25 imgsz=800 plots=True
```

Once training is complete, you can view and evaluate the training graphs for the model. These are helpful for comparing models trained with different datasets, preprocessing, and augmentation techniques.

```python
%cd {HOME}
from IPython.display import Image

# Show the training curves saved by Ultralytics
Image(filename=f'{HOME}/runs/detect/train/results.png', width=600)
```
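Beyond the plots, Ultralytics also writes per-epoch metrics to `results.csv` in the same run folder, which lets you compare runs numerically. The sketch below assumes the YOLOv8 column names `epoch` and `metrics/mAP50-95(B)`. Some versions pad column names with spaces, so the keys are stripped, but check the header of your own file before relying on it. `best_epoch` is a hypothetical helper, used as `best_epoch(f'{HOME}/runs/detect/train/results.csv')`.

```python
import csv
from typing import Tuple


def best_epoch(results_csv: str, metric: str = "metrics/mAP50-95(B)") -> Tuple[int, float]:
    """Return (epoch, value) for the row with the highest `metric`.

    Column names and values are stripped because some Ultralytics versions
    pad them with spaces.
    """
    best = None
    with open(results_csv, newline="") as f:
        for row in csv.DictReader(f):
            row = {k.strip(): v.strip() for k, v in row.items()}
            value = float(row[metric])
            if best is None or value > best[1]:
                best = (int(float(row["epoch"])), value)
    if best is None:
        raise ValueError("results.csv has no data rows")
    return best
```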

After the model is trained, you can visualize predictions to see how it performs on specific images.

```python
import glob
from IPython.display import Image, display

!yolo task=detect mode=predict model={HOME}/runs/detect/train/weights/best.pt conf=0.25 source={dataset.location}/test/images save=True

# Display the first three annotated predictions
for image_path in glob.glob(f'{HOME}/runs/detect/predict/*.jpg')[:3]:
    display(Image(filename=image_path, width=600))
    print("\n")
```

Here are the prediction results. Success!

Deploy YOLOv8 with OpenVINO Format and Intel C3 Sapphire Rapids

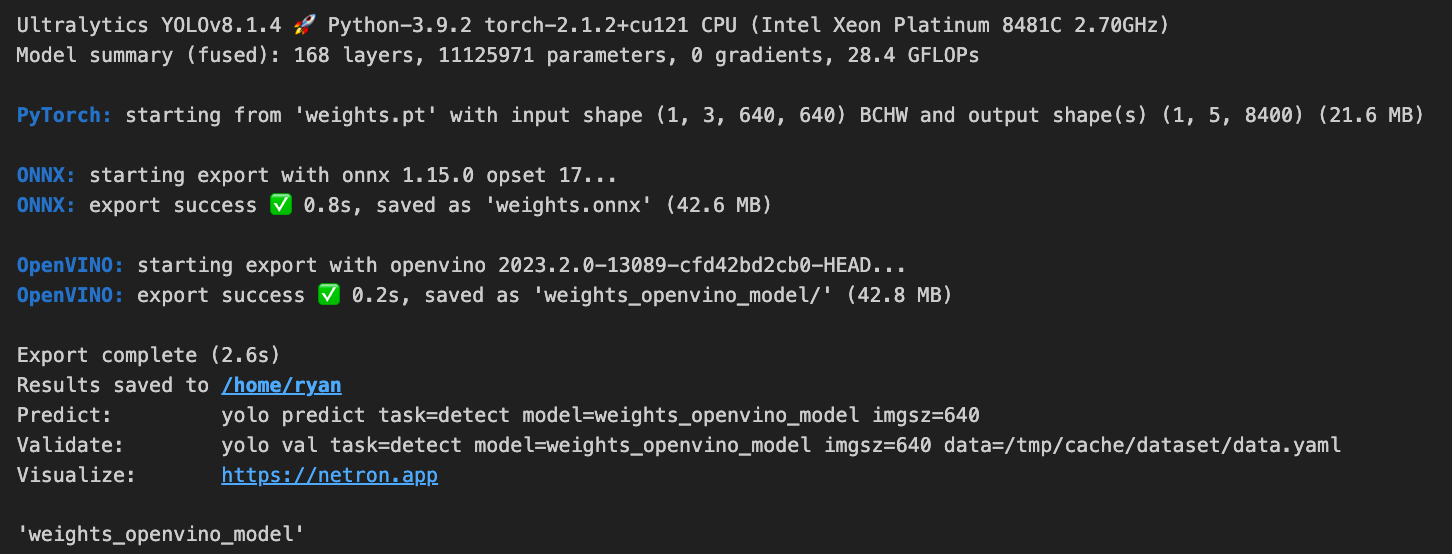

See our performance benchmarking of Sapphire Rapids to understand the benefits of using it instead of a service like AWS Lambda. We observed that the average response time per request was about 20% faster on the C3 Sapphire Rapids instance than on AWS Lambda. Next, export the YOLOv8 model to OpenVINO format for up to a 3x CPU speedup (deploy.ipynb):

```python
from ultralytics import YOLO

# Load the custom-trained YOLOv8 PyTorch model
model = YOLO('best.pt')

# Export the model to OpenVINO format
model.export(format='openvino', imgsz=[640, 640])

# Load the exported OpenVINO model (Ultralytics names the output folder
# after the weights file, so best.pt becomes best_openvino_model/)
ov_model = YOLO('best_openvino_model/')
```

Once this succeeds, you will see logs confirming the conversion and export of the model on Sapphire Rapids.
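To run a response-time comparison like the one mentioned above on your own hardware, you can time repeated calls and compare the averages. This is a minimal sketch with hypothetical helpers `measure_latencies`, `mean_latency`, and `percent_faster`. Here, "X% faster" is interpreted as the relative reduction in mean response time. The commented usage assumes the `model` and `ov_model` objects plus a local test image named `sample.jpg`.

```python
import statistics
import time
from typing import Callable, List


def measure_latencies(fn: Callable[[], object], runs: int = 20, warmup: int = 3) -> List[float]:
    """Call `fn` repeatedly and return per-call wall-clock latencies in seconds.
    Warm-up calls are excluded so one-off setup cost doesn't skew the results."""
    for _ in range(warmup):
        fn()
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        latencies.append(time.perf_counter() - start)
    return latencies


def mean_latency(fn: Callable[[], object], runs: int = 20, warmup: int = 3) -> float:
    return statistics.mean(measure_latencies(fn, runs, warmup))


def percent_faster(baseline_s: float, candidate_s: float) -> float:
    """Relative reduction in mean response time of candidate vs. baseline, in percent."""
    return (baseline_s - candidate_s) / baseline_s * 100


# Usage sketch:
#     pt = mean_latency(lambda: model('sample.jpg', verbose=False))
#     ov = mean_latency(lambda: ov_model('sample.jpg', verbose=False))
#     print(f"OpenVINO is {percent_faster(pt, ov):.1f}% faster")
```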

Next, deploy the model on Sapphire Rapids, using Supervision annotations to visualize predictions:

```python
import glob

import cv2
import supervision as sv

bounding_box_annotator = sv.BoundingBoxAnnotator()
label_annotator = sv.LabelAnnotator()

# Assumed input: images to run inference on, e.g. the test split downloaded earlier
image_files = glob.glob(f'{dataset.location}/test/images/*.jpg')

for image_path in image_files:
    print(image_path)
    results = ov_model(image_path)
    detections = sv.Detections.from_ultralytics(results[0])
    print(f'supervision detections: {detections}')
    image = cv2.imread(image_path)
    # Map class IDs to class names stored with the results
    labels = [results[0].names[class_id] for class_id in detections.class_id]
    annotated_image = bounding_box_annotator.annotate(scene=image, detections=detections)
    annotated_image = label_annotator.annotate(scene=annotated_image, detections=detections, labels=labels)
    sv.plot_image(annotated_image)
```

You can now pass images to the deployed model and receive predictions!
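For monitoring, per-frame detections are usually tallied by class, for example how many items of each type a camera saw during a day. Here is a minimal sketch that assumes the `detections` and `results` objects from the loop above. The class names in the standalone example are placeholders, not the actual Blue Eco Line labels.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping


def count_by_class(class_ids: Iterable[int], names: Mapping[int, str]) -> Dict[str, int]:
    """Tally detections per class name; int() also accepts NumPy integer IDs."""
    return dict(Counter(names[int(c)] for c in class_ids))


# Inside the loop above you could call:
#     counts = count_by_class(detections.class_id, results[0].names)
# Standalone example with placeholder class names:
print(count_by_class([0, 1, 0, 0], {0: "plastic", 1: "organic"}))  # {'plastic': 3, 'organic': 1}
```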

Conclusion

This completes the full implementation of an autonomous river monitoring and cleaning system. With this tutorial and the associated open source repo, you can replicate a similar system for other computer vision use cases involving counting, tracking, and monitoring.

Blue Eco Line is pushing the boundaries of conservation innovation by using the latest computer vision technology from Roboflow and Intel. Tracking waste and autonomously removing pollution is a critical step toward healthier waterways around the world.