As MLOps becomes an integral part of more businesses, the demand for professionals who can proficiently manage, deploy, and scale machine learning operations is growing rapidly.

This guide is for those who have experience in MLOps and are preparing for technical interviews aimed at mid-level to senior positions. It explores advanced interview questions that go deep into model governance, scalability, performance optimization, and regulatory compliance, areas where seasoned professionals can showcase their expertise. Through detailed explanations, strategic answer hints, and insightful discussions, this article will help you articulate your experiences and demonstrate your problem-solving skills effectively.

Let’s equip you with the knowledge to not only answer MLOps questions but to stand out in your next job interview.

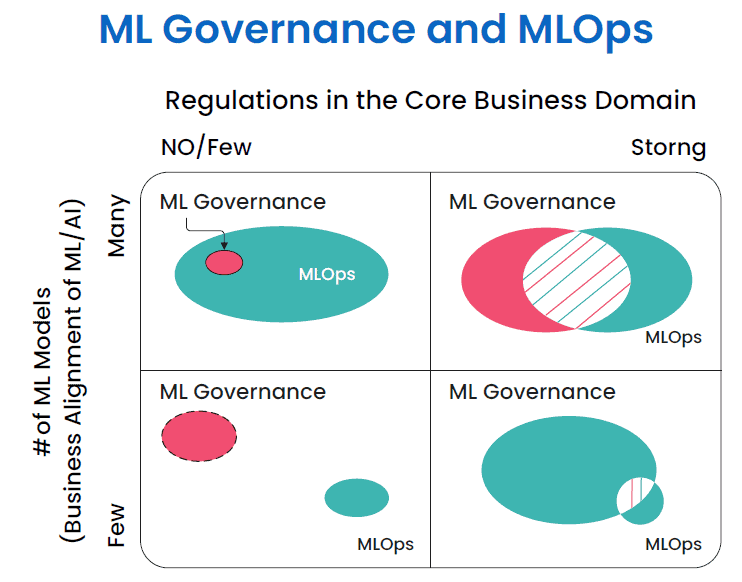

Model Governance in MLOps

Question 1: How do you implement model governance in MLOps?

Model governance not only maintains control but also enhances the reliability and trustworthiness of models in production. It’s crucial for managing risks related to data privacy and operational efficiency, and is a key skill for senior-level roles in MLOps, demonstrating both technical expertise and strategic oversight.

- Model Version Control: Use systems that track model versions along with their datasets and parameters to ensure transparency and allow for rollback if necessary.

- Audit Trails: Keep detailed logs of all model activities, including training and deployment, to aid in troubleshooting and meet regulatory compliance.

- Compliance and Standardization: Establish standards for model processes to adhere to internal and external regulatory requirements.

- Performance Monitoring: Set up ongoing monitoring of model performance to quickly address issues like model drift.

Answer Hints:

- Highlight tools like MLflow or Kubeflow for tracking experiments and managing deployments.

- Emphasize collaboration between data scientists, operations, and IT to ensure effective implementation of governance policies.
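
To make the version-control and audit-trail ideas concrete, here is a minimal in-memory sketch. The `ModelRegistry` class, its method names, and the dataset IDs are hypothetical illustrations, not the API of MLflow, Kubeflow, or any other tool; a real registry would persist this state and enforce permissions.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(payload: dict) -> str:
    """Return a stable SHA-256 hash of a JSON-serializable dict."""
    blob = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


class ModelRegistry:
    """Hypothetical toy registry: model versions plus an append-only audit log."""

    def __init__(self):
        self.versions = {}    # version number -> metadata
        self.audit_log = []   # append-only list of events
        self.production = None

    def _audit(self, action: str, version: int, actor: str) -> None:
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "version": version,
            "actor": actor,
        })

    def register(self, params: dict, dataset_id: str, actor: str) -> int:
        """Record a new version together with its parameters and dataset."""
        version = len(self.versions) + 1
        self.versions[version] = {
            "params": params,
            "dataset_id": dataset_id,
            "fingerprint": fingerprint({"params": params, "dataset_id": dataset_id}),
        }
        self._audit("register", version, actor)
        return version

    def promote(self, version: int, actor: str) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown model version {version}")
        self.production = version
        self._audit("promote", version, actor)

    def rollback(self, actor: str) -> int:
        """Point production back at the previously registered version."""
        if self.production is None or self.production <= 1:
            raise RuntimeError("no earlier version to roll back to")
        self.production -= 1
        self._audit("rollback", self.production, actor)
        return self.production


registry = ModelRegistry()
v1 = registry.register({"max_depth": 4}, dataset_id="sales-2024-01", actor="alice")
v2 = registry.register({"max_depth": 6}, dataset_id="sales-2024-02", actor="alice")
registry.promote(v2, actor="bob")
registry.rollback(actor="bob")  # production now points at v1 again
```

In an interview, the point to draw out is that every state change (register, promote, rollback) leaves a timestamped, attributable record, and that each version is tied to the exact parameters and dataset that produced it.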

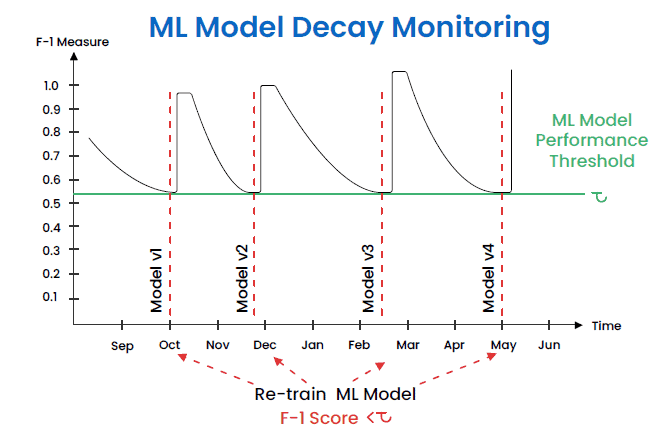

Handling Model Drift

Question 2: What strategies do you use to handle model drift in production?

Addressing model drift is essential for maintaining the accuracy and relevance of models in dynamic environments. It showcases an MLOps professional’s ability to ensure long-term model performance and adaptability.

- Continuous Monitoring: Implement automated systems to continuously assess model performance and detect drift.

- Feedback Loops: Use real-time feedback from model outputs to quickly identify and address issues.

- Model Re-training: Schedule regular updates and re-train models with new data to maintain accuracy and relevance.

Answer Hints:

- Mention tools like Apache Kafka for real-time data streaming and Grafana for monitoring dashboards and alerting.

- Discuss the role of A/B testing in evaluating model updates before full-scale deployment.
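
One way to make "continuous monitoring" tangible is a drift score on a single feature. The sketch below uses the Population Stability Index (PSI), which is one common choice among several (others include the Kolmogorov-Smirnov test). The function names, the 10-bin layout, and the 0.2 threshold are assumptions for illustration; the threshold is a widely quoted rule of thumb rather than a standard.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        total = len(values)
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / total, eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def needs_retraining(expected, actual, threshold=0.2):
    """Flag drift when PSI exceeds the (assumed) threshold."""
    return psi(expected, actual) > threshold


reference = [i / 100 for i in range(1000)]   # training-time feature values
shifted = [x + 3 for x in reference]         # live values after a distribution shift

print(needs_retraining(reference, reference))
print(needs_retraining(reference, shifted))
```

A check like this would typically run on a schedule per feature, with the result feeding an alert or a retraining trigger.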

Scalability and Performance

Question 3: How do you ensure scalability and performance of machine learning models in a production environment?

Scalability and performance are crucial for supporting the growing needs of an organization and demonstrate an MLOps professional’s capability to manage and enhance machine learning infrastructure.

- Resource Management: Utilize dynamic resource allocation to efficiently handle varying loads.

- Load Balancing: Implement load balancing to distribute requests and data processing across multiple servers.

- Efficient Algorithms: Opt for algorithms and data structures that scale well with increased data.

Answer Hints:

- Discuss the use of containerization with Docker and orchestration with Kubernetes for scaling applications.

- Explain how cloud services can be leveraged for elastic scalability and performance optimization.
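
Dynamic resource allocation often comes down to a simple proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, `ceil(currentReplicas * currentMetric / targetMetric)`, but it is a simplification: the real autoscaler also applies tolerances and stabilization windows. The function name and the replica bounds are assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20):
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target: scale out.
scale_out = desired_replicas(4, current_metric=90, target_metric=60)

# 4 replicas at 20% average CPU with a 60% target: scale in.
scale_in = desired_replicas(4, current_metric=20, target_metric=60)

# A large spike is capped at max_replicas.
capped = desired_replicas(10, current_metric=300, target_metric=60)
```

Being able to walk through this arithmetic shows you understand what the autoscaler is doing rather than treating it as a black box.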

Latency vs. Throughput in MLOps

Question 4: Discuss the trade-offs between latency and throughput in MLOps.

Balancing latency and throughput is a critical aspect of optimizing machine learning models for production environments. Here’s how these factors play against each other:

- Latency: Refers to the time it takes for a single data point to be processed through the model. Lower latency is crucial for applications that require real-time decision-making, such as fraud detection or autonomous driving.

- Throughput: Measures how much data the system can process in a given time frame. Higher throughput is essential for applications needing to handle large volumes of data efficiently, like batch processing in data analytics.

Answer Hints:

- When optimizing for latency, consider techniques such as model simplification, using more efficient algorithms, or hardware acceleration.

- For throughput, strategies like model quantization, parallel processing, increasing hardware capacity, or optimizing data pipeline management can be effective. Quantization is generally lossy, so validate accuracy after applying it.
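
Request batching is a simple way to see the trade-off in numbers. The sketch below assumes a toy cost model in which each inference call pays a fixed overhead plus a per-item cost; the 8 ms and 2 ms figures are invented for illustration, and real systems should be measured rather than modeled.

```python
def batch_latency_ms(batch_size, overhead_ms=8.0, per_item_ms=2.0):
    """Time to process one batch under the assumed linear cost model."""
    return overhead_ms + per_item_ms * batch_size


def throughput_per_s(batch_size, overhead_ms=8.0, per_item_ms=2.0):
    """Items processed per second when batches run back to back."""
    return 1000.0 * batch_size / batch_latency_ms(batch_size, overhead_ms, per_item_ms)


def largest_batch_within(latency_budget_ms, overhead_ms=8.0, per_item_ms=2.0):
    """Largest batch size whose latency fits the budget (0 if none fits)."""
    return max(0, int((latency_budget_ms - overhead_ms) // per_item_ms))


for size in (1, 4, 16, 64):
    print(size, batch_latency_ms(size), round(throughput_per_s(size), 1))
```

Under this model, larger batches amortize the fixed overhead and raise throughput, but every item in the batch waits for the whole batch to finish. A latency budget therefore caps the batch size, which is what `largest_batch_within` computes.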

Question 5: Compare and contrast different MLOps platforms you’ve used (e.g., Kubeflow, MLflow, TFX).

Effective use of MLOps platforms involves understanding their strengths and weaknesses in various scenarios. Key points include:

- Kubeflow: Best for end-to-end orchestration of machine learning pipelines on Kubernetes.

- MLflow: Strong for experiment tracking, model versioning, and serving.

- TFX: Ideal for integrating with TensorFlow, providing components for deploying production-ready ML pipelines.

Answer Hints:

- Highlight the integration capabilities of each platform with existing enterprise systems.

- Discuss the learning curve and community support associated with each tool.

Evaluating MLOps Platforms

Question 6: Compare and contrast different MLOps platforms you’ve used (e.g., Kubeflow, MLflow, TFX).

Choosing the right MLOps platform is crucial for the efficient management of machine learning models from development to deployment. Here’s a comparison of three popular platforms:

- Kubeflow: Ideal for users deeply integrated into the Kubernetes ecosystem, Kubeflow offers robust tools for building and deploying scalable machine learning workflows.

- MLflow: Excelling in experiment tracking and model management, MLflow is versatile for managing the ML lifecycle, including model versioning and serving.

- TFX (TensorFlow Extended): Specifically designed to support TensorFlow models, TFX provides the end-to-end components needed to deploy production-ready ML pipelines.

Answer Hints:

- Kubeflow is great for those who need tight integration with Kubernetes’ scaling and management capabilities.

- MLflow’s flexibility makes it suitable for various environments, not tying the user to any particular ML library or framework.

- TFX offers comprehensive support for TensorFlow, making it the go-to for TensorFlow users looking for advanced pipeline capabilities.

Leveraging Apache Spark for MLOps

Question 7: How do you leverage distributed computing frameworks like Apache Spark for MLOps?

Apache Spark is a powerful tool for handling large-scale data processing, which is a cornerstone of effective MLOps practices. Here’s how Spark enhances MLOps:

- Data Processing at Scale: Spark’s ability to process large datasets quickly and efficiently is invaluable for training complex machine learning models that require handling vast amounts of data.

- Stream Processing: With Spark Streaming, you can develop and deploy real-time analytics solutions, crucial for models requiring continuous input and fast response.

- Integration with ML Libraries: Spark ships with MLlib, its built-in machine learning library, which provides a range of algorithms optimized for distributed environments.

Answer Hints:

- Emphasize Spark’s scalability, explaining how it supports both batch and stream processing, which can be crucial for deploying models that need to operate in dynamic environments.

- Discuss the benefit of Spark’s built-in MLlib for machine learning tasks, which simplifies the development of scalable ML models.

Security and Compliance

Question 8: How do you address security concerns when deploying ML models in production?

Addressing security in machine learning deployments involves several strategic measures:

- Data Encryption: Use encryption for data at rest and in transit to protect sensitive information.

- Access Controls: Implement strict access controls and authentication protocols to limit who can interact with the models and data.

- Regular Audits: Conduct regular security audits and vulnerability assessments to identify and mitigate risks.

Answer Hints:

- Mention tools like HashiCorp Vault for managing secrets and AWS Identity and Access Management (IAM) for access controls.

- Discuss the importance of adhering to security best practices and frameworks like the NIST Cybersecurity Framework.
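
The access-control and audit points can be illustrated with a deny-by-default permission check that logs every decision. The role names, permission strings, and the `is_allowed` helper below are hypothetical; in production this logic would normally be delegated to a system such as IAM rather than written by hand.

```python
# Hypothetical role-to-permission mapping; unknown roles get no permissions.
ROLE_PERMISSIONS = {
    "data_scientist": {"model:read", "model:train"},
    "ml_engineer": {"model:read", "model:deploy"},
    "auditor": {"audit:read"},
}

access_log = []  # every decision is recorded, whether allowed or denied


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: allow only permissions explicitly granted to the role."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    access_log.append({"role": role, "permission": permission, "allowed": allowed})
    return allowed


can_deploy = is_allowed("ml_engineer", "model:deploy")        # granted explicitly
cannot_deploy = is_allowed("data_scientist", "model:deploy")  # not in the role's set
```

The two design choices worth naming in an interview are the default-deny behavior for unknown roles and the fact that denied attempts are logged too, since those are often the most interesting entries in an audit.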

Ensuring Compliance with Data Protection Regulations in MLOps

Question 9: Explain how you ensure compliance with data protection regulations (e.g., GDPR) in MLOps.

Ensuring compliance with data protection regulations like GDPR is crucial in MLOps to protect user data and avoid legal penalties. Here’s how this can be achieved:

- Data Anonymization and Encryption: Implement strong data anonymization techniques to remove personally identifiable information (PII) from datasets used in training and testing models. Use encryption to secure data at rest and in transit.

- Access Controls and Auditing: Establish strict access controls to ensure that only authorized personnel have access to sensitive data. Maintain comprehensive audit logs to track access and changes to data, which is essential for compliance.

- Data Minimization and Retention Policies: Adhere to the principle of data minimization by collecting only the data necessary for specific purposes. Implement clear data retention policies to ensure data is not kept longer than necessary.

Answer Hints:

- Highlight the use of technologies like secure enclaves for processing sensitive data and tools like Databricks for implementing and enforcing data governance.

- Discuss the role of continuous monitoring and regular audits to ensure ongoing compliance with data protection laws.
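
As a rough sketch of the first and third bullets, the code below replaces PII fields with keyed hashes and filters records by age. Two caveats: keyed hashing is pseudonymization, which GDPR still treats as personal data, so it is weaker than true anonymization; and the field names, the 365-day window, and the helper names are assumptions made for this example.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

PII_FIELDS = {"email", "name"}  # hypothetical set of PII columns


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Replace PII values with HMAC-SHA256 digests; leave other fields untouched."""
    out = {}
    for field, value in record.items():
        if field in PII_FIELDS:
            out[field] = hmac.new(
                secret_key, str(value).encode("utf-8"), hashlib.sha256
            ).hexdigest()
        else:
            out[field] = value
    return out


def within_retention(record: dict, now: datetime, max_age_days: int = 365) -> bool:
    """True if the record is still inside the (assumed) retention window."""
    return now - record["collected_at"] <= timedelta(days=max_age_days)


record = {
    "email": "ann@example.com",
    "name": "Ann",
    "amount": 12.5,
    "collected_at": datetime(2024, 1, 1, tzinfo=timezone.utc),
}
# In practice the key would come from a secrets manager, never from source code.
safe_record = pseudonymize(record, secret_key=b"demo-key-do-not-use")
```

Because the digest is deterministic for a given key, the same person maps to the same token across datasets, which preserves joins for training while keeping raw identifiers out of the pipeline.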

Optimization and Automation

Question 10: What techniques do you use for hyperparameter optimization at scale?

Optimizing hyperparameters efficiently at scale requires advanced techniques:

- Grid Search and Random Search: For exhaustive or random exploration of the parameter space.

- Bayesian Optimization: For smarter, probability-based exploration of the parameter space, focusing on areas likely to yield improvements.

- Automated Machine Learning (AutoML): Uses algorithms to automatically test and adjust parameters to find optimal settings.

Answer Hints:

- Discuss the use of platforms like Google Cloud’s AI Platform or Azure Machine Learning for implementing these techniques at scale.

- Explain the trade-offs between computation time and model accuracy when choosing optimization techniques.
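
The difference between grid search and random search is easy to show in a few lines. In the sketch below, `objective` is a made-up stand-in for a validation score (a real one would train and evaluate a model), and the parameter names and ranges are assumptions. Bayesian optimization is not shown, since it needs a surrogate model that is better taken from a dedicated library.

```python
import itertools
import random


def objective(lr, depth):
    """Hypothetical validation score that peaks at lr=0.1, depth=6."""
    return -((lr - 0.1) ** 2) * 100 - ((depth - 6) ** 2) * 0.01


def grid_search(grid):
    """Evaluate every combination in the grid and return (best_score, best_params)."""
    keys = list(grid)
    best = None
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = objective(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


def random_search(space, n_trials, seed=0):
    """Sample n_trials random configurations and return (best_score, best_params)."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best = None
    for _ in range(n_trials):
        params = {
            "lr": rng.uniform(*space["lr"]),
            "depth": rng.randint(*space["depth"]),
        }
        score = objective(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


grid_score, grid_params = grid_search({"lr": [0.01, 0.1, 1.0], "depth": [2, 6, 10]})
rand_score, rand_params = random_search({"lr": (0.001, 1.0), "depth": (2, 10)}, n_trials=30)
```

Grid search costs the product of all list lengths, so it grows quickly with each added hyperparameter, while random search has a fixed budget (`n_trials`) regardless of dimensionality. Each trial is independent in both cases, which is what makes them straightforward to parallelize at scale.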

Automating the ML Pipeline End-to-End

Question 11: Describe your approach to automating the ML pipeline end-to-end.

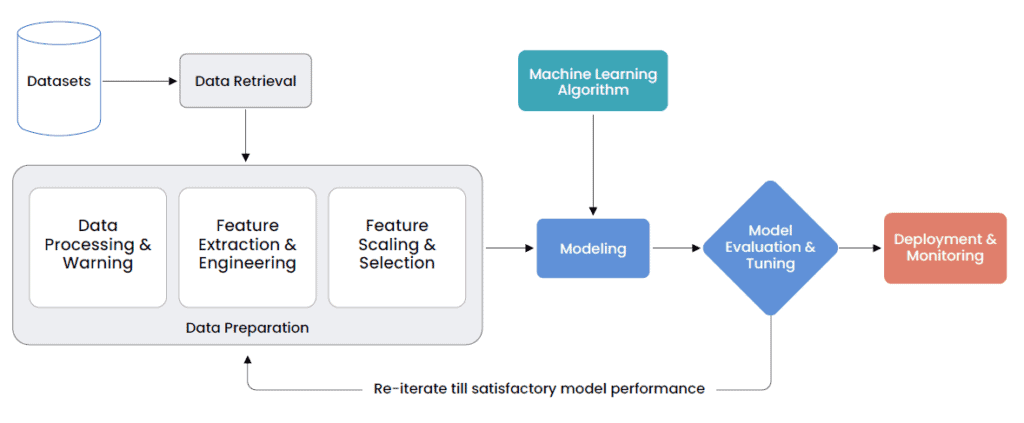

Automating the machine learning pipeline from data collection to model deployment is essential for improving efficiency and reducing errors in production environments. Here’s a structured approach:

- Data Collection and Preparation: Automate the ingestion and preprocessing of data using scripts or tools that clean, transform, and normalize data, preparing it for analysis and model training.

- Model Training and Evaluation: Use automated scripts or workflow orchestration tools to train models on prepared datasets. Automatically evaluate model performance using predefined metrics to ensure models meet the required standards before deployment.

- Model Deployment and Monitoring: Automate the deployment process through continuous integration and continuous deployment (CI/CD) pipelines. Implement automated monitoring to track model performance and health in real time, triggering alerts for any significant deviations.

Answer Hints:

- Discuss the use of tools like Jenkins or GitLab for CI/CD, which streamline the deployment of machine learning models into production.

- Highlight the role of monitoring frameworks like Prometheus or custom dashboards in Kubernetes to oversee model performance continuously.
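
The prepare, train, evaluate, and deploy stages can be sketched as plain functions with a quality gate in front of deployment. Everything here is a toy assumption: the threshold "model", the 0.9 accuracy gate, and the function names are illustrative, and a real pipeline would run these steps in an orchestrator or CI/CD job and evaluate on held-out data.

```python
def prepare(raw_rows):
    """Drop rows with missing values and min-max scale the feature x."""
    rows = [r for r in raw_rows if r["x"] is not None and r["y"] is not None]
    xs = [r["x"] for r in rows]
    lo, hi = min(xs), max(xs)
    span = (hi - lo) or 1.0
    return [{"x": (r["x"] - lo) / span, "y": r["y"]} for r in rows]


def train(rows):
    """Toy 'model': a threshold halfway between the two class means of x."""
    pos = [r["x"] for r in rows if r["y"] == 1]
    neg = [r["x"] for r in rows if r["y"] == 0]
    return {"threshold": (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2}


def evaluate(model, rows):
    """Accuracy of the threshold rule (on the training rows, for brevity only)."""
    correct = sum((r["x"] >= model["threshold"]) == (r["y"] == 1) for r in rows)
    return correct / len(rows)


def run_pipeline(raw_rows, min_accuracy=0.9):
    """Run all stages; 'deploy' only if the model clears the quality gate."""
    rows = prepare(raw_rows)
    model = train(rows)
    accuracy = evaluate(model, rows)
    return {"model": model, "accuracy": accuracy, "deployed": accuracy >= min_accuracy}


clean_data = [
    {"x": 1, "y": 0}, {"x": 2, "y": 0}, {"x": None, "y": 1},
    {"x": 8, "y": 1}, {"x": 9, "y": 1},
]
noisy_data = [
    {"x": 1, "y": 1}, {"x": 9, "y": 0}, {"x": 2, "y": 0}, {"x": 8, "y": 1},
]
good_run = run_pipeline(clean_data)   # passes the gate
bad_run = run_pipeline(noisy_data)    # blocked by the gate
```

The key idea is that deployment is a consequence of a measured result, not a manual step, so a model that fails the gate never reaches production.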

Case Studies and Real-World Scenarios

Question 12: Discuss a complex MLOps project you led. What were the challenges and how did you overcome them?

Sharing a real-world example can illustrate practical problem-solving:

- Scenario Description: Outline the project’s scope, objectives, and the specific MLOps challenges encountered.

- Solutions Implemented: Describe the strategies used to address challenges such as data heterogeneity, scalability issues, or model drift.

- Results and Learnings: Highlight the outcomes achieved and lessons learned from the project.

Answer Hints:

- Emphasize the collaborative aspect of the project, detailing how cross-functional team coordination was crucial.

- Discuss the iterative improvements made based on continuous feedback and monitoring.

Integrating A/B Testing and Continuous Experimentation in MLOps

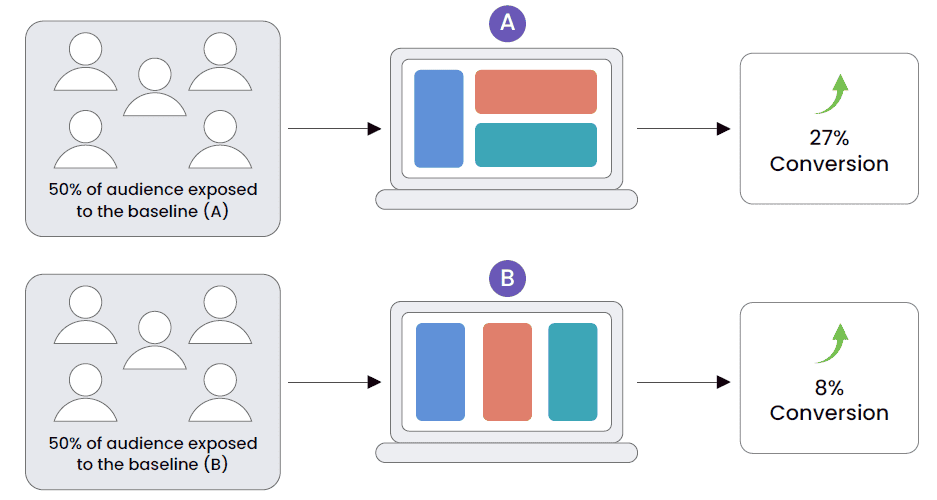

Question 13: How do you integrate A/B testing and continuous experimentation in MLOps?

Integrating A/B testing and continuous experimentation is crucial for optimizing and validating machine learning models in real-world settings. Here’s how this can be effectively implemented:

- Experiment Design: Start by clearly defining the objectives and hypotheses for the A/B tests. Determine what metrics will be used to measure success and how data will be split among different versions of the model.

- Implementation of Testing Framework: Use a robust platform that supports A/B testing and can route traffic between different model versions without disrupting user experience. Tools like TensorFlow Extended (TFX) or Kubeflow can manage deployments and experiments seamlessly.

- Data Collection and Analysis: Ensure that data collected during the tests is clean and reliable. Analyze the performance of each model variant based on predefined metrics, using statistical tools to determine significant differences and make informed decisions.

- Iterative Improvements: Based on the results of A/B testing, continuously refine and retest models. Use insights from testing to enhance features, tune hyperparameters, or redesign parts of the model.

Answer Hints:

- Discuss the importance of using controlled environments and phased rollouts to minimize risks during testing.

- Mention the integration of continuous integration/continuous deployment (CI/CD) pipelines with A/B testing tools to automate the deployment and rollback of different model versions based on test results.
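
Two pieces of an A/B setup lend themselves to a short sketch: splitting traffic deterministically and testing whether the observed difference is significant. The code below uses hash-based bucketing and a two-proportion z-test, which is one standard choice among several. The function names and the example counts are invented for illustration.

```python
import hashlib
import math


def assign_variant(user_id: str, treatment_share: float = 0.5) -> str:
    """Deterministically bucket a user so they always see the same model version."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "B" if bucket < treatment_share else "A"


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical results: model A converts 200 of 2000 users, model B 260 of 2000.
z, p_value = two_proportion_z_test(200, 2000, 260, 2000)
promote_b = z > 0 and p_value < 0.05
```

Hashing the user ID keeps assignments stable across sessions without storing any state, and changing `treatment_share` gives a simple lever for phased rollouts. The 0.05 significance level is a convention; the right threshold and sample size should be fixed during experiment design, before looking at results.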

Best Practices and Trends

Question 14: What are the emerging trends in MLOps and how are you preparing for them?

Staying current with MLOps trends is key to advancing in the field:

- Automation and AI Operations: Increased use of automation in deploying and monitoring machine learning models.

- Federated Learning: This approach trains algorithms across multiple decentralized devices or servers without moving the raw data, which helps preserve privacy and reduces data centralization risks.

- MLOps as a Service (MLOpsaaS): Growing popularity of cloud-based MLOps solutions, offering scalable and flexible model management.

Answer Hints:

- Highlight your ongoing education and training, such as participating in workshops and following industry leaders.

- Discuss how you incorporate these trends into your current projects or plans, demonstrating proactive adaptation.

Advance Your Career with OpenCV University

OpenCV University offers courses tailored for technology enthusiasts at every level:

FREE Courses:

Our Computer Vision Master Bundle is the world’s most comprehensive curation of beginner to expert-level courses in Computer Vision, Deep Learning, and AI.
