AWS has announced that Amazon Lookout for Vision will be discontinued as of October 31, 2025. Until the discontinuation date, Lookout for Vision will remain available to existing customers, but no new customers can use the product. Before the service is deprecated, we recommend that you plan an alternative solution for any automated quality assurance vision systems that run on Lookout.

In this guide, we are going to discuss:

- The timing of the Lookout for Vision deprecation;

- How to export your data from Lookout for Vision; and

- How to migrate from AWS Lookout for Vision and train a new version of your defect detection model.

Without further ado, let’s get started!

AWS Lookout for Vision Discontinuation

On October 10, 2024, AWS announced that Amazon Lookout for Vision will be discontinued.

In the AWS announcement post, AWS noted that “New customers will be unable to access the service effective October 10, 2024, but existing customers will be able to use the service as normal until October 31, 2025.”

This means that anyone using Lookout for Vision can continue to use the product until the discontinuation date, after which the product will no longer be available in its current state.

Lookout for Vision supported two vision project types for defect detection:

- Image classification, where you assign a label to the contents of an image (e.g. contains defect vs. does not contain defect); and

- Image segmentation, which lets you find the precise location of defects in an image.

You can export your data from AWS Lookout for Vision in both classification and segmentation formats. These formats can then be imported into other solutions.

Deploy AWS Lookout for Vision Models on Roboflow

AWS Lookout for Vision offers data export for the images you used to train models deployed with Lookout for Vision. You can use these images to train models on other platforms such as Roboflow.

Roboflow supports importing classification data from AWS Lookout for Vision for all users. If you want to import segmentation data, contact our support team. If you have a business-critical application running, our field engineering team can help you architect a new vision solution, no matter the scale you are operating at.

Below, we walk through the high-level steps to migrate classification data from AWS Lookout for Vision.

Step #1: Export Data from AWS Lookout for Vision

First, you need to export your datasets from Lookout for Vision. You cannot export your model weights, but you can reuse your exported data to train a new model.

To export your datasets, follow the “Export the datasets from a Lookout for Vision project using an AWS SDK” guide from AWS. This guide shows you how to export your data to an AWS S3 bucket.
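If you would rather script part of the export yourself, the sketch below pages through every manifest entry in one dataset using the Lookout for Vision `ListDatasetEntries` API (`list_dataset_entries` in boto3). This is a minimal sketch and not the code from the AWS guide. It assumes you pass in a boto3 `lookoutvision` client, and `fetch_dataset_entries` is a hypothetical helper name. Each entry comes back as a JSON Lines manifest record, which the function parses into a dictionary.

```python
import json


def fetch_dataset_entries(client, project_name, dataset_type="train"):
    """Return every manifest entry in a Lookout for Vision dataset as a dict.

    `client` is assumed to be a boto3 "lookoutvision" client; `dataset_type`
    is "train" or "test". The function name is hypothetical.
    """
    entries = []
    kwargs = {"ProjectName": project_name, "DatasetType": dataset_type}
    while True:
        response = client.list_dataset_entries(**kwargs)
        # Each item in DatasetEntries is one JSON Lines manifest record (a string).
        entries.extend(json.loads(line) for line in response.get("DatasetEntries", []))
        next_token = response.get("NextToken")
        if not next_token:
            break
        # Ask for the next page of results.
        kwargs["NextToken"] = next_token
    return entries
```

For example, `fetch_dataset_entries(boto3.client("lookoutvision"), "my-project", "test")` would collect the test split, where `my-project` is a placeholder project name. Following `NextToken` matters because the API returns results in pages.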

Once you have exported your data to S3, download it as a local ZIP file if your dataset is small (e.g. fewer than 1,000 images). Then, unzip the file.
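After you download the ZIP file, you can unzip it and confirm how many images it contains with Python’s standard `zipfile` module. This is a minimal sketch. `unzip_export` is a hypothetical helper, and the set of image extensions is an assumption, so adjust it to match your export. Only use `extractall` on archives you trust, such as ones from your own bucket.

```python
import zipfile
from pathlib import Path

# File extensions treated as images (an assumption; adjust for your export).
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def unzip_export(zip_path, dest_dir):
    """Extract a downloaded export ZIP and return the image paths it contained."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
    # Walk the extracted tree and keep only files with an image extension.
    return sorted(
        p for p in dest.rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )
```

For example, `len(unzip_export("export.zip", "lookout-export"))` gives the image count, which you can compare against the image count of the original Lookout for Vision dataset.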

If your dataset is large, follow the Roboflow AWS S3 Bucket import guide to learn how to import your annotations directly from S3 to Roboflow. If you use the bucket import, you will need to create a project in Roboflow first, as described at the beginning of the next step.
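To decide which route applies, you can count the exported images in S3 before you download anything. This is a minimal sketch. It assumes a boto3 `s3` client and uses the standard `list_objects_v2` paginator. The helper names, the return labels, and the image extensions are hypothetical. The 1,000-image cutoff comes from the guidance above.

```python
# Cutoff from this guide: below ~1,000 images, download a ZIP;
# otherwise, use the Roboflow S3 bucket import.
SMALL_DATASET_LIMIT = 1000
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")  # assumption; adjust as needed


def count_images_in_s3(s3_client, bucket, prefix=""):
    """Count image objects under a prefix, following list_objects_v2 pagination."""
    paginator = s3_client.get_paginator("list_objects_v2")
    count = 0
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is missing from a page when no keys match the prefix.
        for obj in page.get("Contents", []):
            if obj["Key"].lower().endswith(IMAGE_EXTENSIONS):
                count += 1
    return count


def choose_import_path(image_count, limit=SMALL_DATASET_LIMIT):
    """Return the migration route this guide suggests for a dataset size."""
    return "download-zip" if image_count < limit else "s3-bucket-import"
```

For example, `choose_import_path(count_images_in_s3(boto3.client("s3"), "my-export-bucket", "lookout-export/"))`, where the bucket and prefix are placeholders.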

Step #2: Import Data to Roboflow

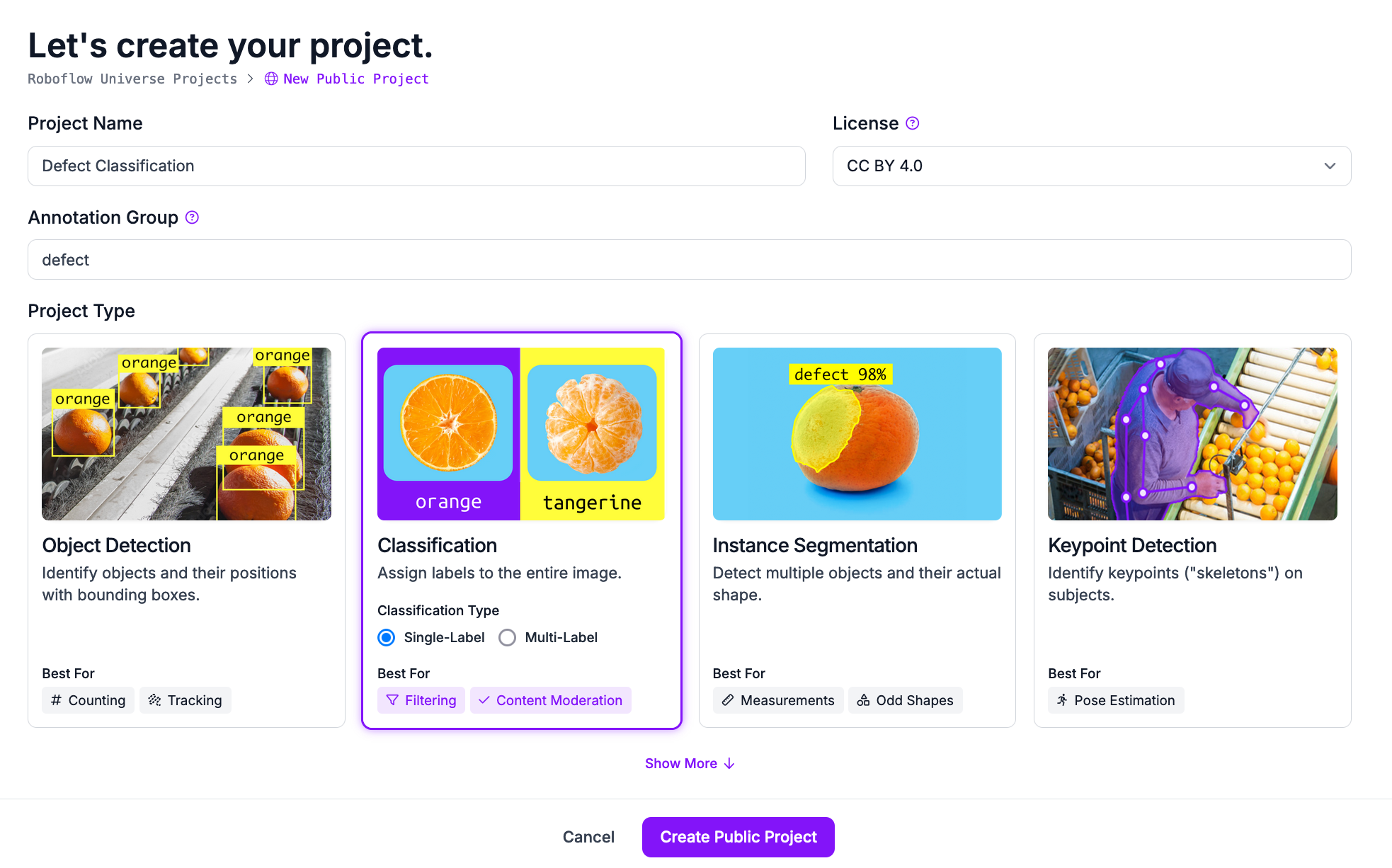

Next, create a free Roboflow account. Create a new project and choose Classification as the project type (Note: segmentation projects can only be imported with help from support):

Then, click “Create Project”.

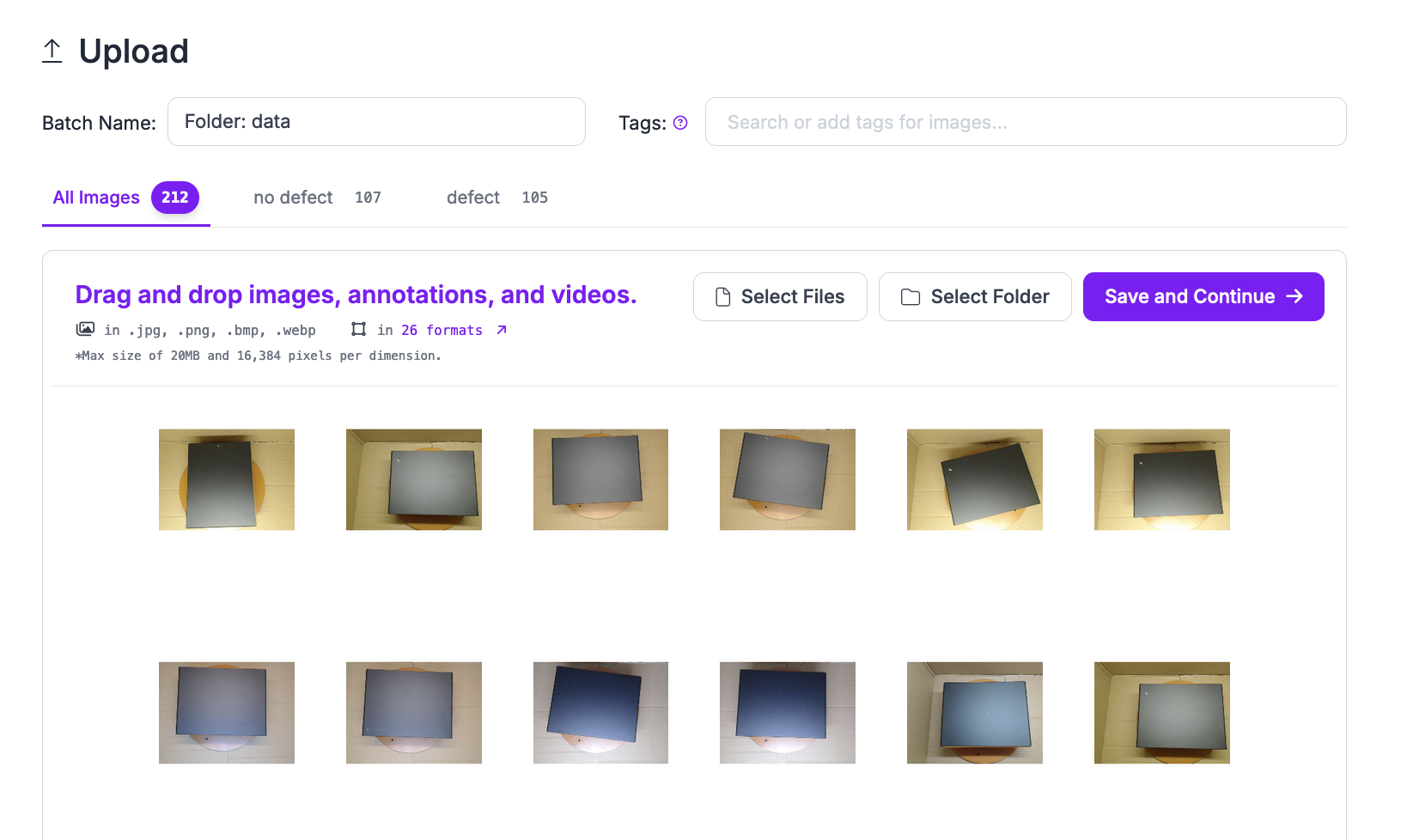

Next, drag and drop the data you exported from AWS Lookout for Vision. When the “Save and Continue” button appears, click it to start uploading your data.

Roboflow will automatically recognize your classification annotations and add them to your dataset. You can also upload raw images without annotations and label them in the Roboflow Annotate interface.

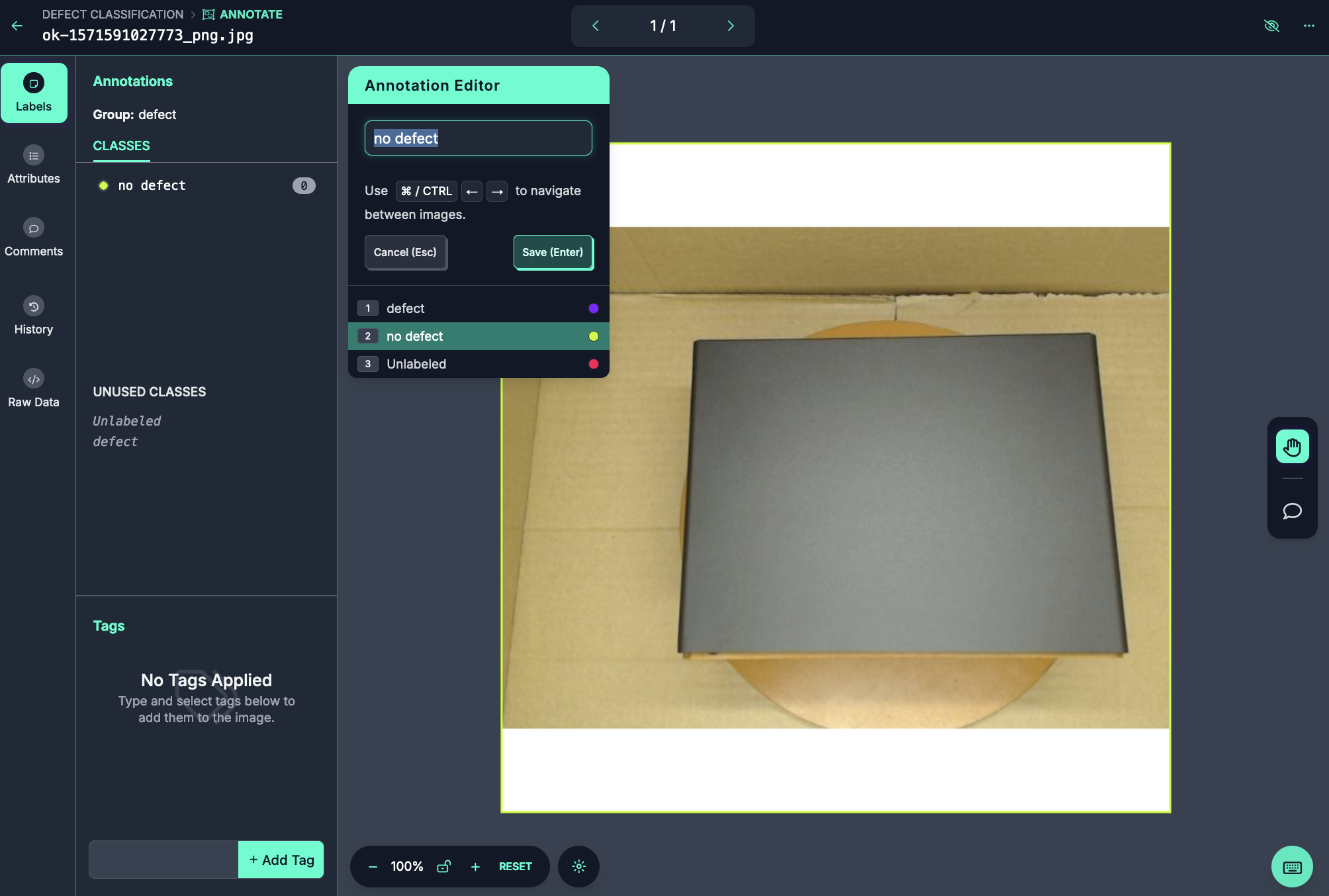

Here is an example of the labeling interface for classification:

If you are working with a segmentation dataset, you can use our SAM-powered Smart Polygon tool to label polygons in a single click.
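If Roboflow does not recognize your classification annotations automatically, one fallback is to rebuild the data as one folder per class, which is a common layout for classification uploads. The sketch below reads a Lookout for Vision manifest file and copies each downloaded image into `out_dir/<class-name>/`. It assumes two things you should verify against your own export: each record has a `source-ref` S3 URI, and it has a `class-name` under `anomaly-label-metadata`, as in the classification manifest format. It also assumes the images were downloaded into `images_dir` with their original file names. `sort_into_class_folders` is a hypothetical helper.

```python
import json
import shutil
from pathlib import Path
from urllib.parse import urlparse


def sort_into_class_folders(manifest_path, images_dir, out_dir):
    """Copy images into out_dir/<class-name>/ based on a JSON Lines manifest.

    Returns a dict mapping each class name to the number of images copied.
    """
    counts = {}
    with open(manifest_path, encoding="utf-8") as manifest:
        for line in manifest:
            if not line.strip():
                continue  # skip blank lines
            record = json.loads(line)
            # source-ref is an S3 URI such as s3://bucket/path/image.jpg
            file_name = Path(urlparse(record["source-ref"]).path).name
            class_name = record["anomaly-label-metadata"]["class-name"]
            target_dir = Path(out_dir) / class_name
            target_dir.mkdir(parents=True, exist_ok=True)
            shutil.copy2(Path(images_dir) / file_name, target_dir / file_name)
            counts[class_name] = counts.get(class_name, 0) + 1
    return counts
```

The returned counts give a quick check that the class balance matches what you had in Lookout for Vision before you upload the folders.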

Step #3: Generate a Dataset Version

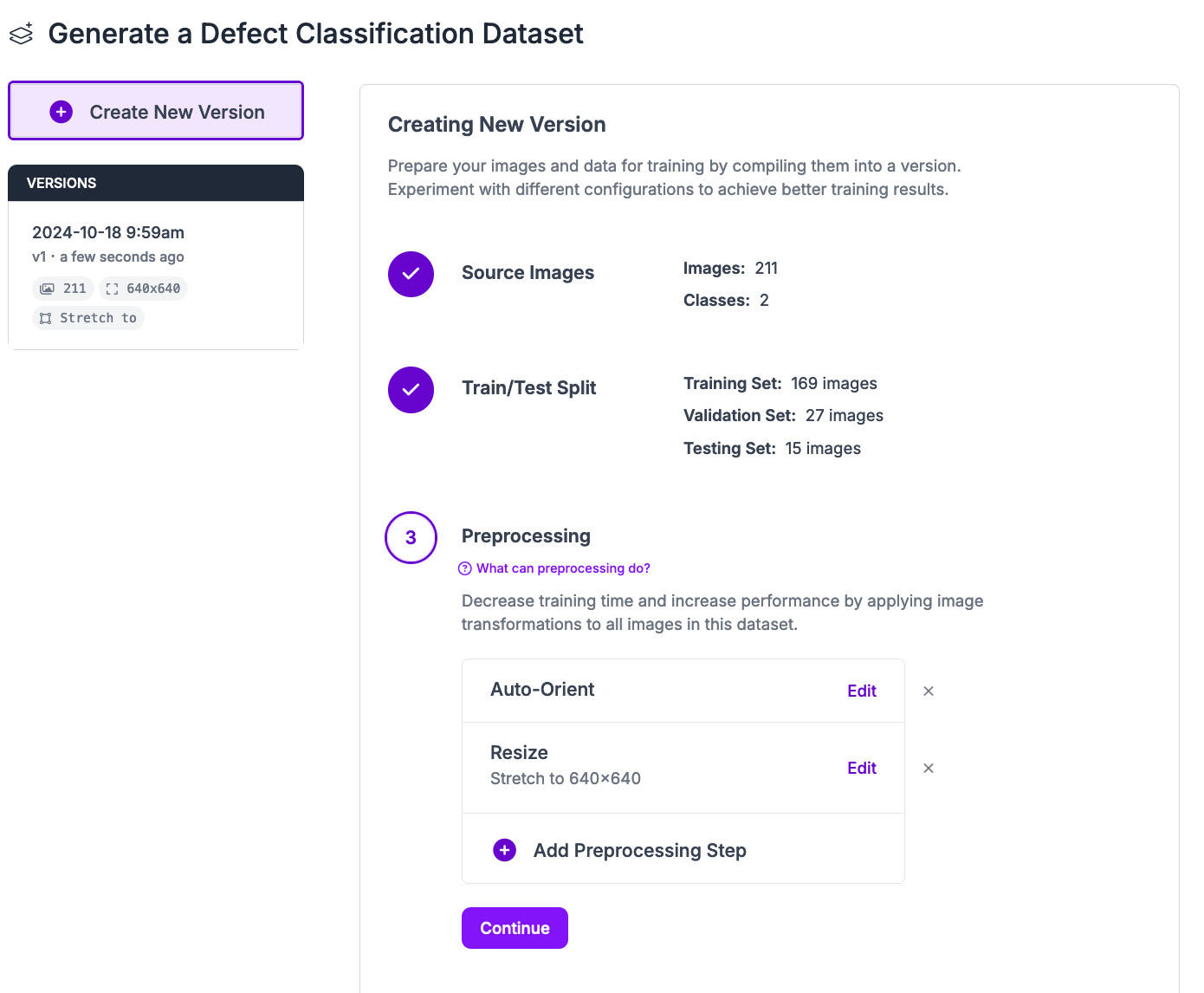

With your dataset in Roboflow, you can now create a dataset version for training.

Click “Generate” in the left sidebar of your Roboflow project. A page will appear where you can create a dataset version. A version is a snapshot of your data frozen in time.

You can apply preprocessing and augmentation steps to the images in a dataset version, which can improve model performance.

For your first model version, we recommend applying the default preprocessing steps and no augmentation steps. In future training jobs, you can experiment with augmentations that may suit your project.

To learn more about choosing preprocessing and augmentation steps, read our preprocessing and augmentation guide.

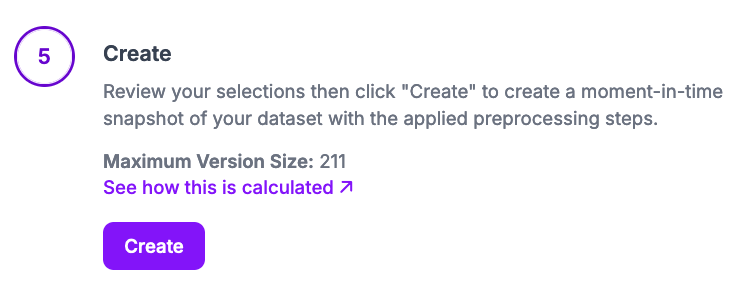

Click “Create” at the bottom of the page to create your dataset version.

Roboflow will then generate your dataset version. This process may take several minutes, depending on the number of images in your dataset.

Step #4: Train a Model

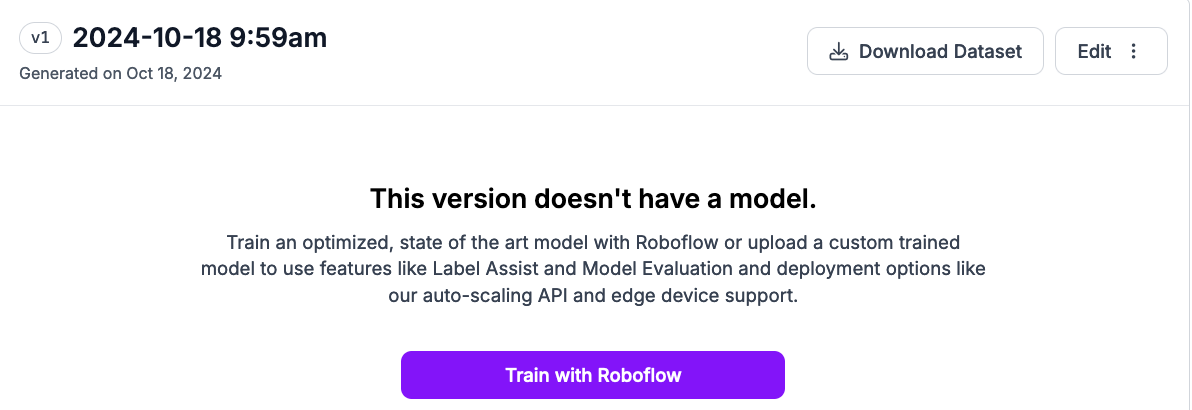

On the page for the dataset version you created, a “Train with Roboflow” button will appear. Use it to train a model in the Roboflow Cloud. You can then use this model through our cloud API or deploy it to the edge (e.g. on an NVIDIA Jetson).

Click “Train with Roboflow”.

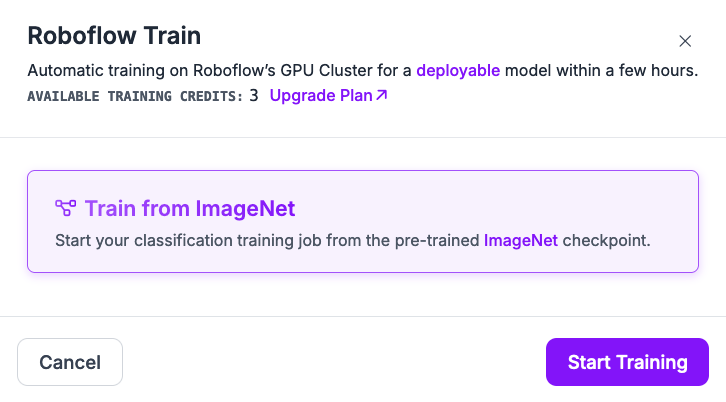

A window will appear where you can configure your training job. Choose to train from the ImageNet checkpoint. Then, click “Start Training”.

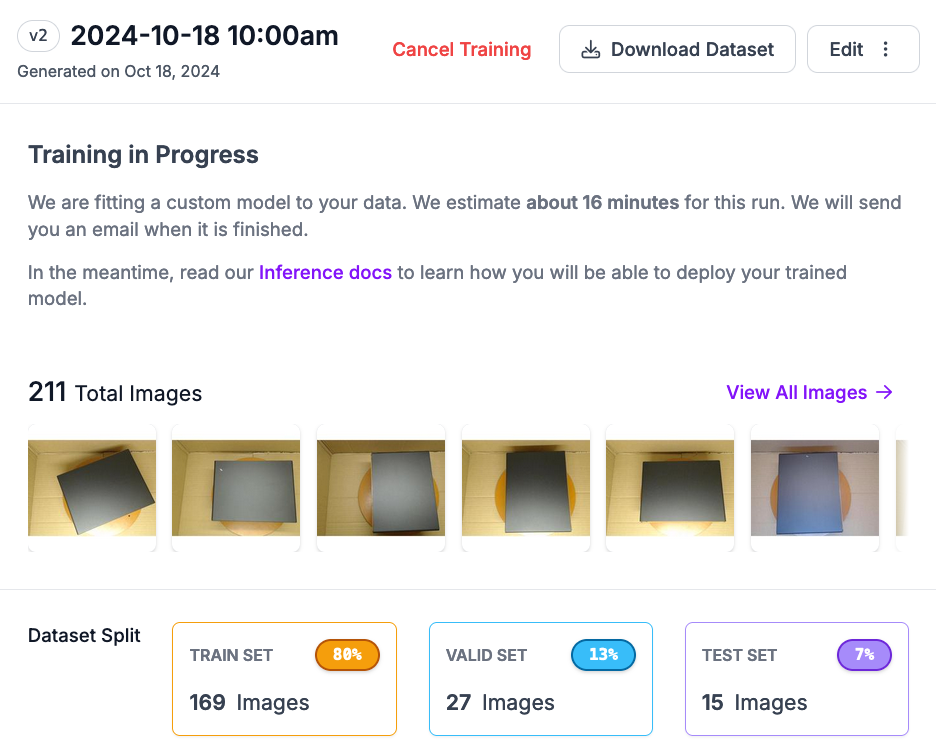

You will receive an estimate of how long your model training is expected to take.

Then, you will see a message indicating that training is in progress.

When your model has finished training, you will receive an email, and the dashboard will update automatically with information about how your model performs.

Step #5: Test the Model

You can test your classification model from the Visualize page. This page includes an interactive widget where you can try your model.

Drag and drop an image to see how your model classifies it:

In the example above, our model correctly classified the image as containing a defect.

A JSON representation of the classification results appears on the right-hand side of the page:

```json
{
  "predictions": [
    {
      "class": "defect",
      "class_id": 1,
      "confidence": 1
    }
  ]
}
```

Step #6: Deploy the Model

You can deploy your model on your own hardware with Roboflow Inference, a high-performance, open source computer vision inference server. Roboflow Inference runs offline, so you can run models in an environment without an internet connection.

You can use Inference to run models trained on or uploaded to Roboflow. This includes models you have trained as well as any of the 50,000+ pre-trained vision models available on Roboflow Universe. You can also run foundation models like CLIP, SAM, and DocTR.

You can run Roboflow Inference either through a pip package or in Docker. Inference works on a range of devices and architectures, including:

- x86 CPU (e.g. a PC)

- ARM CPU (e.g. Raspberry Pi)

- NVIDIA GPUs (e.g. an NVIDIA Jetson, NVIDIA T4)

To learn how to deploy a model with Inference, refer to the Roboflow Inference Deployment Quickstart widget. This widget provides tailored guidance on how to deploy your model based on the hardware you are using.

In this guide, we will show you how to deploy your model on your own hardware. This could be an NVIDIA Jetson or another edge device deployed in a facility.

Click “Deployment” in the left sidebar, then click “Edge”. A code snippet will appear that you can use to deploy the model.

First, install Inference:

```bash
pip install inference
```

Then, copy the code snippet for deploying to the edge from the Roboflow web application.

The code snippet will look like this, except that it will include your project information:

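```python
# import the inference-sdk
from inference_sdk import InferenceHTTPClient

# initialize the client
# (to use a locally running Inference server instead of the hosted API,
# point api_url at it, e.g. "http://localhost:9001", the server's default port)
CLIENT = InferenceHTTPClient(
    api_url="https://detect.roboflow.com",
    api_key="API_KEY"
)

# infer on a local image
result = CLIENT.infer("YOUR_IMAGE.jpg", model_id="defect-classification-xwjdd/2")
print(result)
```

Then, run your code.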

The first time your code runs, Inference will download your model weights. It will then cache them for future use.
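To run the same client over a whole folder of images, such as a batch of parts from a production line, you can loop over the files and keep the top class from each response. This is a minimal sketch. `classify_folder` is a hypothetical helper. It assumes `client` is the `InferenceHTTPClient` created in the snippet above, though any object with a compatible `infer(path, model_id=...)` method will work. It also assumes the response contains the `top` and `confidence` fields shown in the classification results in this guide.

```python
from pathlib import Path


def classify_folder(client, folder, model_id, extensions=(".jpg", ".jpeg", ".png")):
    """Run classification on every image in `folder`.

    Returns a dict mapping file name -> (top class, confidence).
    """
    results = {}
    for image_path in sorted(Path(folder).iterdir()):
        if image_path.suffix.lower() not in extensions:
            continue  # skip non-image files
        response = client.infer(str(image_path), model_id=model_id)
        results[image_path.name] = (response["top"], response["confidence"])
    return results
```

For example, `classify_folder(CLIENT, "line-3-images", "defect-classification-xwjdd/2")`, where `line-3-images` is a placeholder folder name.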

Our model correctly classifies the following image as containing a defect:

The small mark in the middle of the panel is a scratch.

Here are the results, as JSON:

```json
{
  "inference_id": "f7235c10-3da0-46a0-9b3a-a33b26ebd2b4",
  "time": 0.11387634100037758,
  "image": {"width": 640, "height": 640},
  "predictions": [
    {"class": "defect", "class_id": 1, "confidence": 0.9998},
    {"class": "no defect", "class_id": 0, "confidence": 0.0002}
  ],
  "top": "defect",
  "confidence": 0.9998
}
```

The confidence for the `defect` class is 0.9998, which indicates that the model is very confident the image contains a defect.
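On a quality assurance line, you will usually turn this response into a pass/fail decision. This is a minimal sketch. It assumes the response structure shown above and a class named `defect`. The `is_defective` name and the 0.5 threshold are hypothetical choices, and you should tune the threshold against your own validation images.

```python
def is_defective(response, defect_class="defect", threshold=0.5):
    """Return True if the defect class confidence meets the threshold."""
    for prediction in response.get("predictions", []):
        if prediction["class"] == defect_class:
            return prediction["confidence"] >= threshold
    # The defect class did not appear in the predictions at all.
    return False


example = {
    "predictions": [
        {"class": "defect", "class_id": 1, "confidence": 0.9998},
        {"class": "no defect", "class_id": 0, "confidence": 0.0002},
    ],
    "top": "defect",
    "confidence": 0.9998,
}
print(is_defective(example))  # True
```

Reading the per-class confidence, rather than only `top`, lets you set a stricter or looser threshold than the model’s default ranking implies.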

If you want to deploy your model to AWS, you can do so with Inference. We have written a guide on how to deploy YOLO11 to AWS EC2. The same instructions work with any model trained on Roboflow, such as Roboflow Train 3.0 or YOLO-NAS models.

Conclusion

AWS Lookout for Vision is a computer vision solution that will be discontinued on October 31, 2025. In this guide, we walked through how to export your data to AWS S3, download it from S3, and import it into Roboflow. You can then use this data to train and deploy models that run in the cloud and on the edge.

If you need help migrating from AWS Lookout for Vision to Roboflow, contact us.