We’re excited to announce support for format conversion to YOLO11 PyTorch TXT, training YOLO11 models on Roboflow, deploying YOLO11 models with Roboflow Inference, and using YOLO11 models in Workflows.

In this guide, we’re going to walk through all of these features. Without further ado, let’s get started!

Callout: Curious to learn more about YOLO11? Check out our “What is YOLO11?” guide and our “How to Train a YOLO11 Model with Custom Data” tutorial.

Label Data for YOLO11 Models with Roboflow

Roboflow enables you to convert data from 40+ formats to the data format required by YOLO11 (YOLO11 PyTorch TXT). For example, if you have a COCO JSON dataset, you can convert it to the required format for YOLO11 detection, segmentation, and more.

You can see a full list of supported formats on the Roboflow Formats list.
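To make the conversion concrete, here is a minimal sketch of what turning one COCO bounding box into a YOLO-style TXT label line involves. It is an illustration only, not Roboflow's converter: the function names are hypothetical, and it assumes the standard YOLO detection layout of a class index followed by a normalized center x, center y, width, and height. COCO category IDs would also need to be remapped to zero-based class indices, which this sketch leaves out.

```python
def coco_box_to_yolo(bbox, image_width, image_height):
    """Convert a COCO box [x_min, y_min, width, height] in pixels to
    YOLO (x_center, y_center, width, height), each normalized to 0-1."""
    x_min, y_min, box_w, box_h = bbox
    x_center = (x_min + box_w / 2) / image_width
    y_center = (y_min + box_h / 2) / image_height
    return x_center, y_center, box_w / image_width, box_h / image_height


def yolo_label_line(class_index, bbox, image_width, image_height):
    """Format one annotation as a line of a YOLO TXT label file."""
    values = coco_box_to_yolo(bbox, image_width, image_height)
    return f"{class_index} " + " ".join(f"{v:.6f}" for v in values)


# Hypothetical annotation: a 200x100 pixel box in a 400x200 pixel image.
print(yolo_label_line(0, [100, 50, 200, 100], 400, 200))
```

In practice you would not write this by hand, since Roboflow performs the conversion for you on export. The sketch only shows why the two formats are not interchangeable.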

You can also label data in Roboflow and export it to the YOLO11 format for use in training in Colab notebooks. Roboflow has an extensive suite of annotation tools to help speed up your labeling process, including SAM-powered annotation and auto-label. You can also use trained YOLO11 models as a label assistant to help speed up labeling data.

Here is an example showing SAM-powered labeling, where you can hover over an object and click on it to draw a polygon annotation:

Train YOLO11 Models with Roboflow

You can train YOLO11 models on the Roboflow hosted platform, Roboflow Train.

To train a model, first create a project on Roboflow and generate a dataset version. Then, click “Train with Roboflow” on your dataset version dashboard:

A window will appear from which you can choose the type of model you want to train. Select “YOLO11”:

Then, click “Continue”. You will then be asked whether you want to train a Fast, Accurate, or Extra Large model. For testing, we recommend training a Fast model. For production use cases where accuracy is essential, we recommend training Accurate models.

You will then be asked from what training checkpoint you want to start training. By default, we recommend training from our YOLO11 COCO Checkpoint. If you have already trained a YOLO11 model on a previous version of your dataset, you can use that model as a checkpoint. This may help you achieve higher accuracy.

Click “Start Training” to start training your model.

You will receive an estimate for how long we expect the training job to take:

The time your training job takes will vary depending on the number of images in your dataset and several other factors.

Deploy YOLO11 Models with Roboflow

When your model has trained, it will be available for testing in the Roboflow web interface, and for deployment through either the Roboflow cloud REST API or on-device deployment with Inference.

To achieve the lowest latency, we recommend deploying on device with Roboflow Inference. You can deploy on both CPU and GPU devices. If you deploy on a device that supports a CUDA GPU (for example, an NVIDIA Jetson), the GPU will be used to accelerate inference.

To deploy a YOLO11 model on your own hardware, first install Inference:

    pip install inference

You can run Inference in two ways:

- In a Docker container, or;

- Using our Python SDK.

For this guide, we are going to deploy with the Python SDK.

Create a new Python file and add the following code:

    from inference import get_model
    import supervision as sv
    import cv2

    # path to the local image to use for inference
    image_file = "image.jpeg"
    image = cv2.imread(image_file)

    # load a pre-trained yolo11n model
    model = get_model(model_id="yolov11n-640")

    # run inference on our chosen image; image can be a url, a numpy array, a PIL image, etc.
    results = model.infer(image)[0]

    # load the results into the supervision Detections api
    detections = sv.Detections.from_inference(results)

    # create supervision annotators
    bounding_box_annotator = sv.BoundingBoxAnnotator()
    label_annotator = sv.LabelAnnotator()

    # annotate the image with our inference results
    annotated_image = bounding_box_annotator.annotate(
        scene=image, detections=detections)
    annotated_image = label_annotator.annotate(
        scene=annotated_image, detections=detections)

    # display the image
    sv.plot_image(annotated_image)

Above, set your Roboflow workspace ID, model ID, and API key if you want to use a custom model you have trained in your workspace. The model ID goes in the model_id argument, and the API key can be supplied through the ROBOFLOW_API_KEY environment variable.

Also, set image_file to the image on which you want to run inference. The example loads a local file with cv2.imread; the infer method also accepts an image URL.

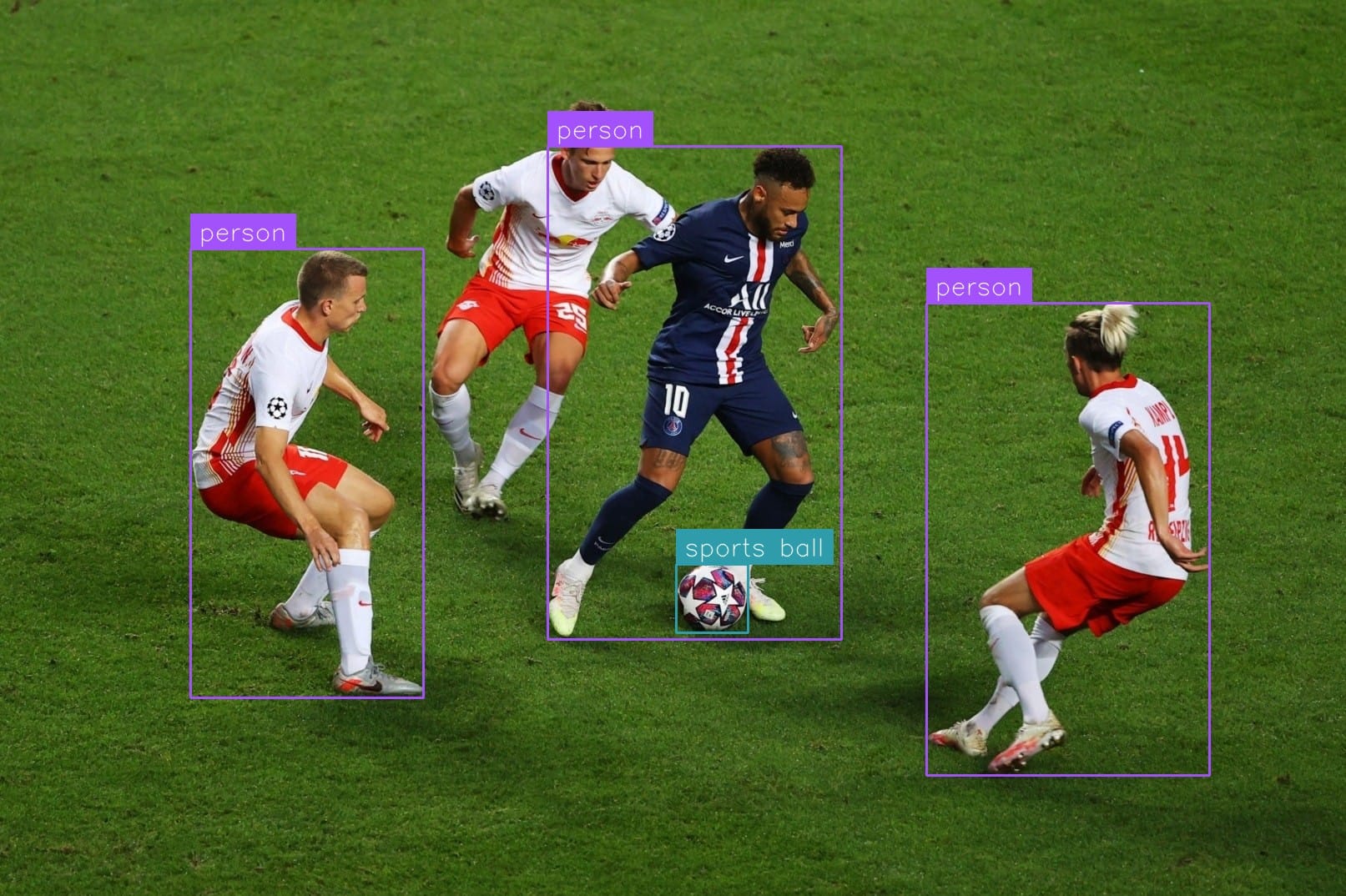

Here is an example of an image running through the model:

The model successfully detected a shipping container in the image, indicated by the purple bounding box surrounding the object of interest.
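If you handle predictions as plain dictionaries, such as the JSON returned by the hosted API, a little post-processing is often useful before drawing or storing them. The sketch below assumes each prediction carries a center point (x, y), a width, a height, a confidence, and a class. The helper names and sample values are made up for illustration.

```python
def to_corners(prediction):
    """Convert a center-based box to (x_min, y_min, x_max, y_max)."""
    half_w = prediction["width"] / 2
    half_h = prediction["height"] / 2
    return (
        prediction["x"] - half_w,
        prediction["y"] - half_h,
        prediction["x"] + half_w,
        prediction["y"] + half_h,
    )


def filter_by_confidence(predictions, threshold=0.5):
    """Keep only predictions at or above the confidence threshold."""
    return [p for p in predictions if p["confidence"] >= threshold]


# Made-up predictions, shaped like a detection response.
sample = [
    {"x": 100, "y": 80, "width": 40, "height": 20, "confidence": 0.91, "class": "container"},
    {"x": 300, "y": 200, "width": 60, "height": 30, "confidence": 0.32, "class": "container"},
]

for prediction in filter_by_confidence(sample, threshold=0.5):
    print(prediction["class"], to_corners(prediction))
```

The right threshold depends on your use case: a higher value trades missed objects for fewer false positives.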

You can also run inference on a video stream. To learn more about running your model on video streams, from RTSP to webcam feeds, refer to the Inference video guide.
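As a rough idea of what running on a stream looks like, here is a minimal sketch using the InferencePipeline interface from the Inference package together with its render_boxes sink. The helper names run_on_stream and parse_video_reference are our own, not part of Inference, and the video guide remains the authoritative reference for the available options.

```python
def parse_video_reference(reference):
    """Turn a webcam index given as text (e.g. "0") into an int.
    File paths and RTSP URLs are returned unchanged."""
    return int(reference) if reference.isdigit() else reference


def run_on_stream(model_id, reference, api_key=None):
    """Run a model on a webcam, video file, or RTSP stream and draw boxes."""
    # Imported here so the helper above works without Inference installed.
    from inference import InferencePipeline
    from inference.core.interfaces.stream.sinks import render_boxes

    pipeline = InferencePipeline.init(
        model_id=model_id,
        video_reference=parse_video_reference(reference),
        on_prediction=render_boxes,  # built-in sink that displays annotated frames
        api_key=api_key,
    )
    pipeline.start()
    pipeline.join()  # block until the stream ends
```

For example, run_on_stream("yolov11n-640", "0") would read from the first webcam, while passing an RTSP URL as the second argument would read from a network camera.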

Use YOLO11 Models in Roboflow Workflows

You can also deploy YOLO11 models in Roboflow Workflows. Workflows enables you to build complex, multi-step computer vision solutions in a web-based application builder.

To use YOLO11 in a Workflow, navigate to the Workflows tab in your Roboflow dashboard. This is accessible from the Workflows link in the dashboard sidebar.

Then, click on “Create a Workflow”.

You will be taken to the Workflows editor where you can configure your application:

Click “Add a Block” to add a block. Then, add an Object Detection Model block.

You will then be taken to a panel where you can choose which model you want to use. Set the model ID associated with your YOLO11 model. You can set any model in your workspace, or a public model.

To visualize your model predictions, add a Bounding Box Visualization block:

You can now test your Workflow!

To test your Workflow, click “Test Workflow”, then drag in an image on which you want to run inference. Click the “Test Workflow” button to run inference on your image:

Our YOLO11 model, fine-tuned for logistics use cases like detecting shipping containers, successfully identified shipping containers.

You can then deploy your Workflow in the cloud or on your own hardware. To learn about deploying your Workflow, click “Deploy Workflow” in the Workflow editor, then choose the deployment option that is most appropriate for your use case.
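For reference, calling a deployed Workflow from Python typically looks something like the sketch below, which uses the InferenceHTTPClient from the inference-sdk package. The workspace name, Workflow ID, and the input name "image" are placeholders we have assumed. The “Deploy Workflow” panel shows the exact snippet for your Workflow, so treat that as the source of truth.

```python
def build_workflow_request(image_path, workspace_name, workflow_id):
    """Assemble the arguments for a Workflow call.
    Assumes the Workflow's image input is named "image"."""
    return {
        "workspace_name": workspace_name,
        "workflow_id": workflow_id,
        "images": {"image": image_path},
    }


def run_workflow(image_path, workspace_name, workflow_id, api_key,
                 api_url="https://detect.roboflow.com"):
    """Send one image to a Workflow and return the raw result."""
    # Imported here so the request builder works without the SDK installed.
    from inference_sdk import InferenceHTTPClient

    client = InferenceHTTPClient(api_url=api_url, api_key=api_key)
    request = build_workflow_request(image_path, workspace_name, workflow_id)
    return client.run_workflow(**request)
```

To target a Workflow running on your own hardware, you would point api_url at your local Inference server instead of the hosted endpoint.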

Conclusion

YOLO11 is a new model architecture developed by Ultralytics, the creators of the popular YOLOv5 and YOLOv8 software.

In this guide, we walked through three ways you can use YOLO11 with Roboflow:

- Train YOLO11 models from the Roboflow web user interface;

- Deploy models on your own hardware with Inference;

- Create Workflows and deploy them with Roboflow Workflows.

To learn more about YOLO11, refer to the Roboflow “What is YOLO11?” guide.