We’re excited to announce the launch of support for video processing in Roboflow Workflows. Roboflow Workflows is a web-based computer vision application builder. With Workflows, you can build complex, multi-step applications in a few minutes.

With the new video processing capabilities, you can create computer vision applications that:

- Track the amount of time an object has spent in a zone;

- Count how many objects have passed over a line, and;

- Visualize dwell time and the number of objects that have passed a line.

In this guide, we’re going to demonstrate how to use the video processing features in Roboflow Workflows. We’ll walk through an example that calculates how long someone is in a zone.

If you want to calculate the number of objects crossing a line, refer to our Count Objects Crossing Lines with Computer Vision guide.

Here is an example of a Workflow running to calculate dwell time in a zone, using an example of skiers on a slope:

We also have a video guide that walks through how to build video applications in Workflows:

Without further ado, let’s get started!

Step #1: Create a Workflow

To get started, we need to create a Workflow.

Create a free Roboflow account. Then, click “Workflows” in the left sidebar. This will take you to your Workflows home page, where you can see all the Workflows you’ve created in your workspace.

Click “Create a Workflow” to create a Workflow.

You will be taken to the Roboflow Workflows editor, from which you can build your application:

We now have an empty Workflow from which we can build an application.

Step #2: Add a Video Input

We’ll need a Video Metadata input. This input can accept webcam streams, video files local to your machine, or RTSP streams.

Click “Input” in your Workflow. Click the camera icon in the Input configuration panel to add video metadata:

This parameter will be used to connect to a camera.

Step #3: Add a Detection Model

We’re going to use a model to detect skiers on a slope. We’ll then use video processing features in Workflows to track objects between frames so we can calculate time spent in a zone.

To add an object detection model to your Workflow, click “Add a Block” and choose the Object Detection Model block:

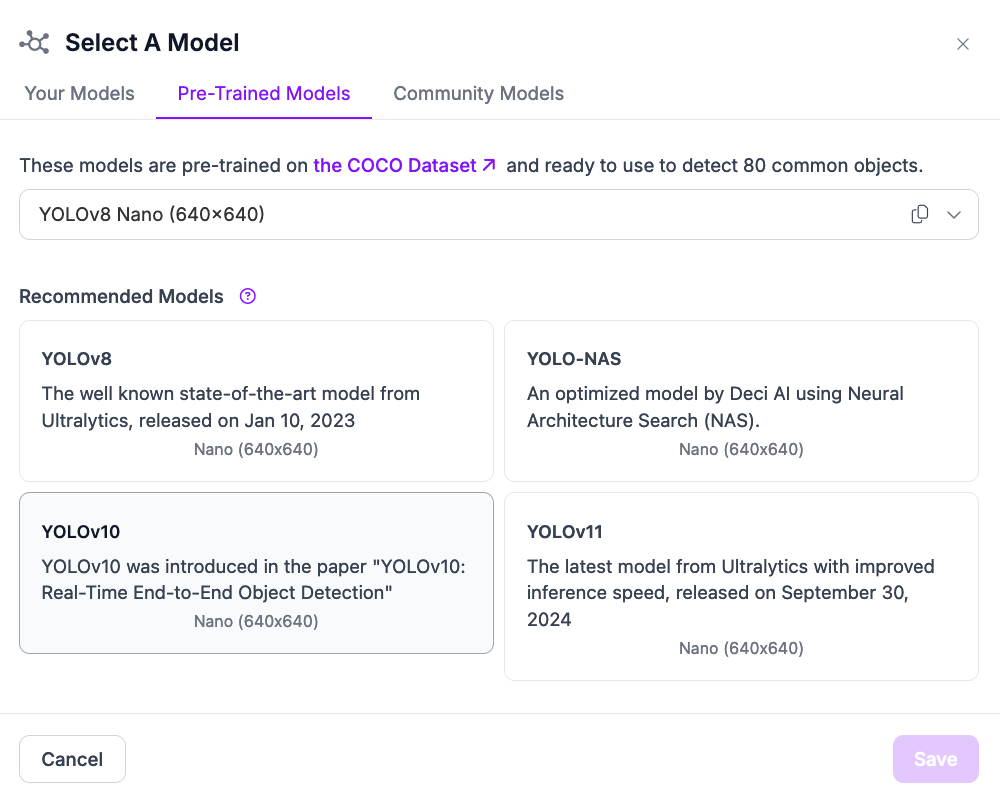

A configuration panel will open from which you can select the model you want to use. You can use models trained on or uploaded to Roboflow, or any model on Roboflow Universe.

For this example, let’s use a YOLO model hosted on Universe that can detect people skiing. Click “Public Models” and choose YOLOv8 Nano:

Step #4: Add Tracking

With a detection model set up, we now need to add object tracking. This will allow us to track objects between frames, a prerequisite for calculating dwell time.

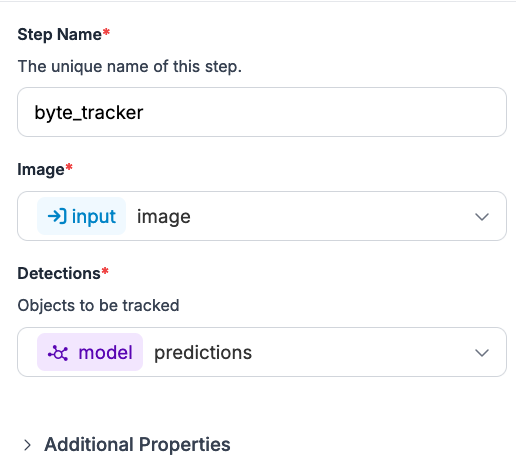

Add a “Byte Tracker” block to your Workflow:

The block should be automatically connected to your object detection model. You can verify this by ensuring the Byte Tracker reads predictions from your model:
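To make the idea of tracking objects between frames concrete, here is a minimal sketch of a greedy tracker that gives each new box the ID of the previous box it overlaps most. This is not how the Byte Tracker block works internally; ByteTrack is considerably more sophisticated. The class name `GreedyIouTracker`, the `iou_threshold` value, and the `(x_min, y_min, x_max, y_max)` box format are assumptions made for illustration.

```python
from itertools import count


def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class GreedyIouTracker:
    """Hypothetical tracker: reuse an ID when a box overlaps a previous box enough."""

    def __init__(self, iou_threshold=0.3):
        self.iou_threshold = iou_threshold
        self.tracks = {}  # tracker_id -> box seen in the previous frame
        self._next_id = count(1)

    def update(self, boxes):
        """Return one tracker ID per box, in the same order as `boxes`."""
        unmatched = dict(self.tracks)
        current = {}
        ids = []
        for box in boxes:
            best_id, best_score = None, self.iou_threshold
            for tracker_id, previous in unmatched.items():
                score = iou(box, previous)
                if score >= best_score:
                    best_id, best_score = tracker_id, score
            if best_id is None:
                best_id = next(self._next_id)  # no overlap: start a new track
            else:
                del unmatched[best_id]  # each previous box is matched at most once
            current[best_id] = box
            ids.append(best_id)
        self.tracks = current  # tracks not seen in this frame are dropped
        return ids


tracker = GreedyIouTracker()
print(tracker.update([(0, 0, 10, 10), (50, 50, 60, 60)]))  # frame 1
print(tracker.update([(51, 50, 61, 60), (1, 0, 11, 10)]))  # frame 2, boxes reordered
```

Calling `update` once per frame returns one ID per box. A box that keeps overlapping its previous position keeps its ID, which is what allows a later step to measure how long that object has been present.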

Step #5: Add Time in Zone

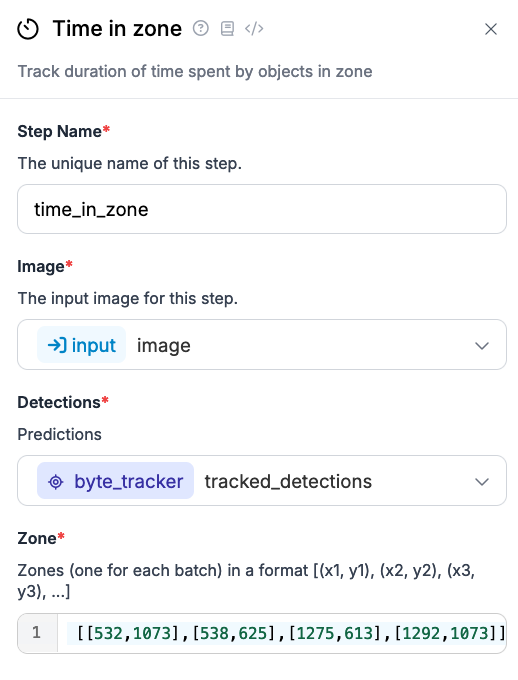

To calculate dwell time (also known as “time in zone”), we can use the Time in Zone block. Add the block to your Workflow:

A configuration panel will appear from which you can configure the Workflow block.

You will need to set the zone in which you want to count objects.
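As a rough illustration of what a time-in-zone calculation involves, the sketch below checks whether the bottom-center of each tracked box lies inside a polygon and reports how many seconds have passed since that object entered. It is a sketch under stated assumptions, not the Time in Zone block’s implementation: the names `point_in_polygon` and `DwellTimer`, the bottom-center anchor, and the fixed `fps` value are all hypothetical.

```python
def point_in_polygon(point, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon's vertices."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


class DwellTimer:
    """Hypothetical helper: seconds each tracked object has spent in a zone."""

    def __init__(self, polygon, fps):
        self.polygon = polygon
        self.fps = fps
        self.entered_at = {}  # tracker_id -> frame index when it entered

    def update(self, frame_index, detections):
        """`detections` is an iterable of (tracker_id, (x_min, y_min, x_max, y_max))."""
        times = {}
        for tracker_id, (x_min, y_min, x_max, y_max) in detections:
            anchor = ((x_min + x_max) / 2, y_max)  # bottom-center of the box
            if point_in_polygon(anchor, self.polygon):
                start = self.entered_at.setdefault(tracker_id, frame_index)
                times[tracker_id] = (frame_index - start) / self.fps
            else:
                self.entered_at.pop(tracker_id, None)  # left the zone: reset
        return times


zone = [(0, 0), (100, 0), (100, 100), (0, 100)]
timer = DwellTimer(zone, fps=10)
timer.update(0, [(1, (10, 10, 20, 20))])
print(timer.update(30, [(1, (10, 10, 20, 20))]))  # 30 frames later at 10 fps
```

The timer depends on stable tracker IDs, which is why object tracking has to be in place before dwell time can be measured.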

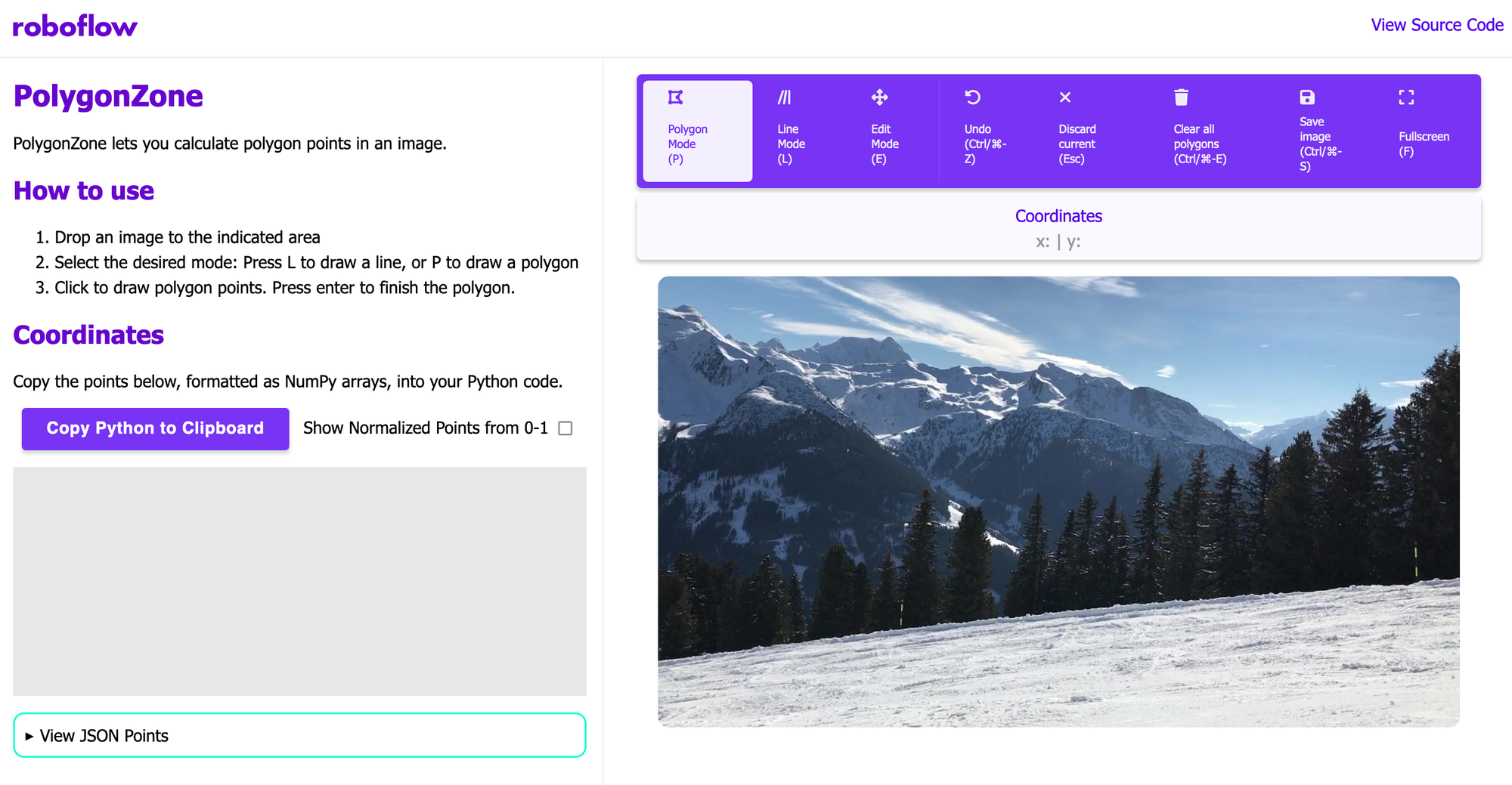

You can calculate the coordinates of a zone using Polygon Zone, a web interface for calculating polygon coordinates. Open Polygon Zone, then drag in an image with the exact resolution of the input frame from your video.

If you have a static video, you can retrieve a frame of the exact resolution with the ffmpeg command:

```bash
ffmpeg -i video.mp4 -vf "select=eq(n\,0)" -q:v 3 output_image.jpg
```

Above, replace video.mp4 with the file you will use as a reference.

For example, if your input video is 1920×1080, your input image to Polygon Zone should be the same resolution.

Then, use the polygon annotation tool to add points. You can create as many zones as you want. To finish a zone, press the Enter key.

The NumPy coordinates are formatted in x0,y0,x1,y1 form. You can copy these into the Workflows editor.
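Zone coordinates are pixel positions, so they only line up with your video when the reference image and the video share a resolution. If you drew a zone on an image of a different size, one option is to rescale the points. The helper below is a hypothetical sketch; the name `scale_polygon` and the example sizes are assumptions, not part of Polygon Zone or Workflows.

```python
def scale_polygon(polygon, source_size, target_size):
    """Rescale (x, y) points drawn on a source image to a target resolution.

    Sizes are (width, height) tuples in pixels.
    """
    source_w, source_h = source_size
    target_w, target_h = target_size
    return [
        (round(x * target_w / source_w), round(y * target_h / source_h))
        for x, y in polygon
    ]


# Zone drawn on a 1920x1080 frame, rescaled for a 1280x720 stream.
zone = [(300, 150), (1500, 150), (1500, 900), (300, 900)]
print(scale_polygon(zone, (1920, 1080), (1280, 720)))
# → [(200, 100), (1000, 100), (1000, 600), (200, 600)]
```

Drawing the zone on a frame taken directly from the video avoids this step entirely.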

Step #6: Add Visualizations

By default, Workflows does not show any visual representation of the results from your Workflow. You need to add this manually.

For testing, we recommend adding three visualization blocks:

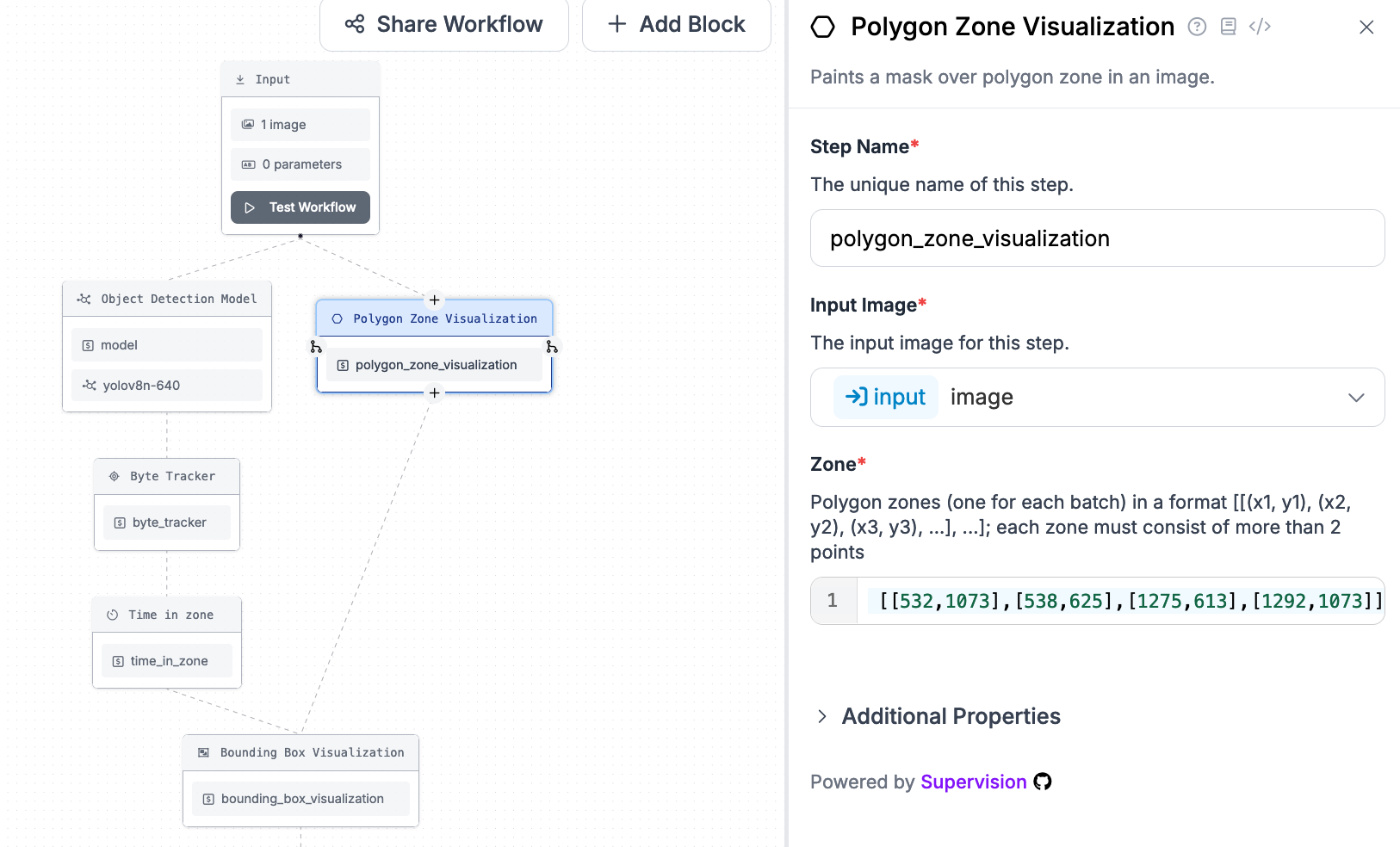

- Polygon zone visualization, which lets you see the polygon zone you’ve drawn;

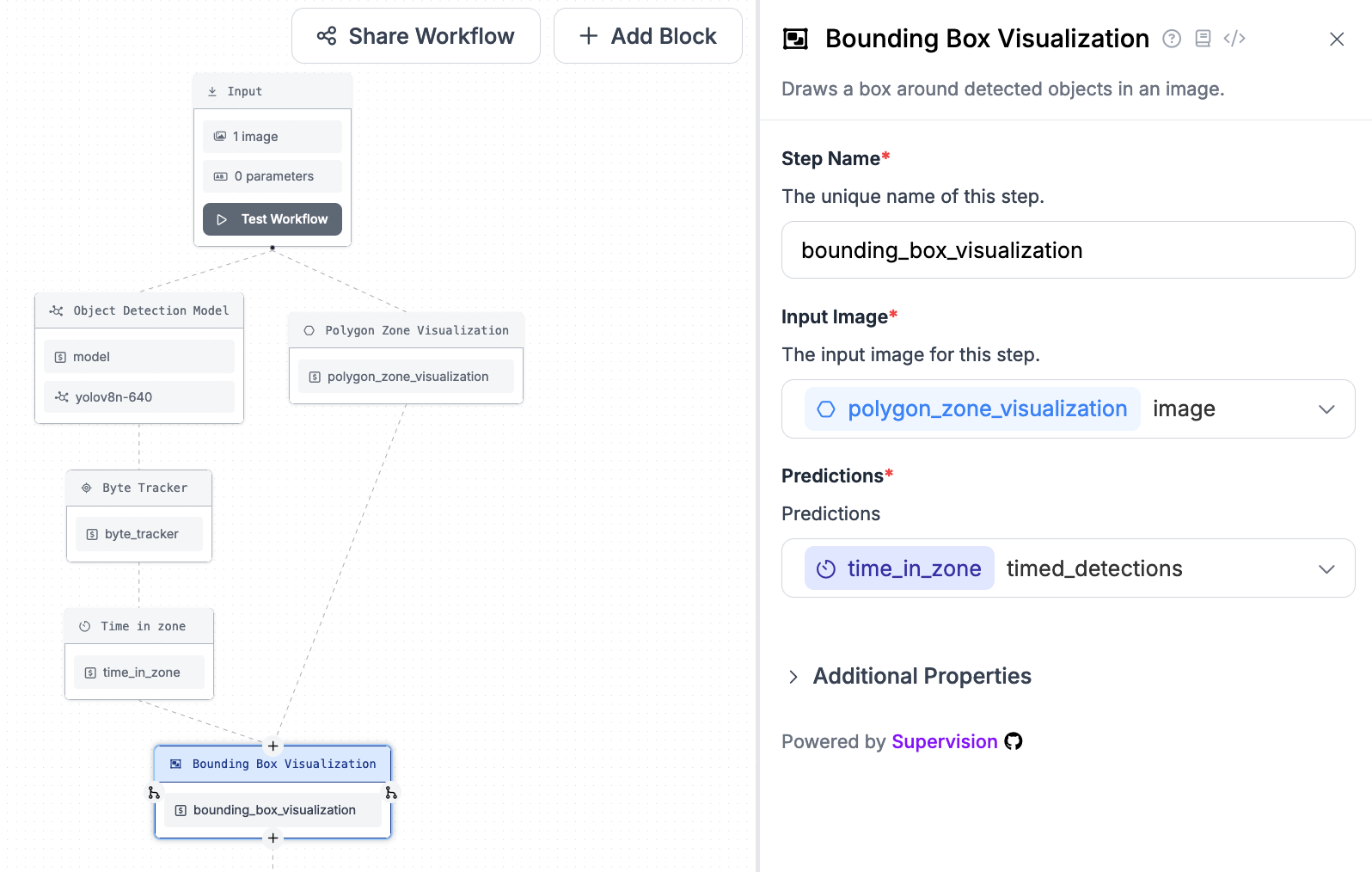

- Bounding box visualization, which displays bounding boxes corresponding to detections from an object detection model, and;

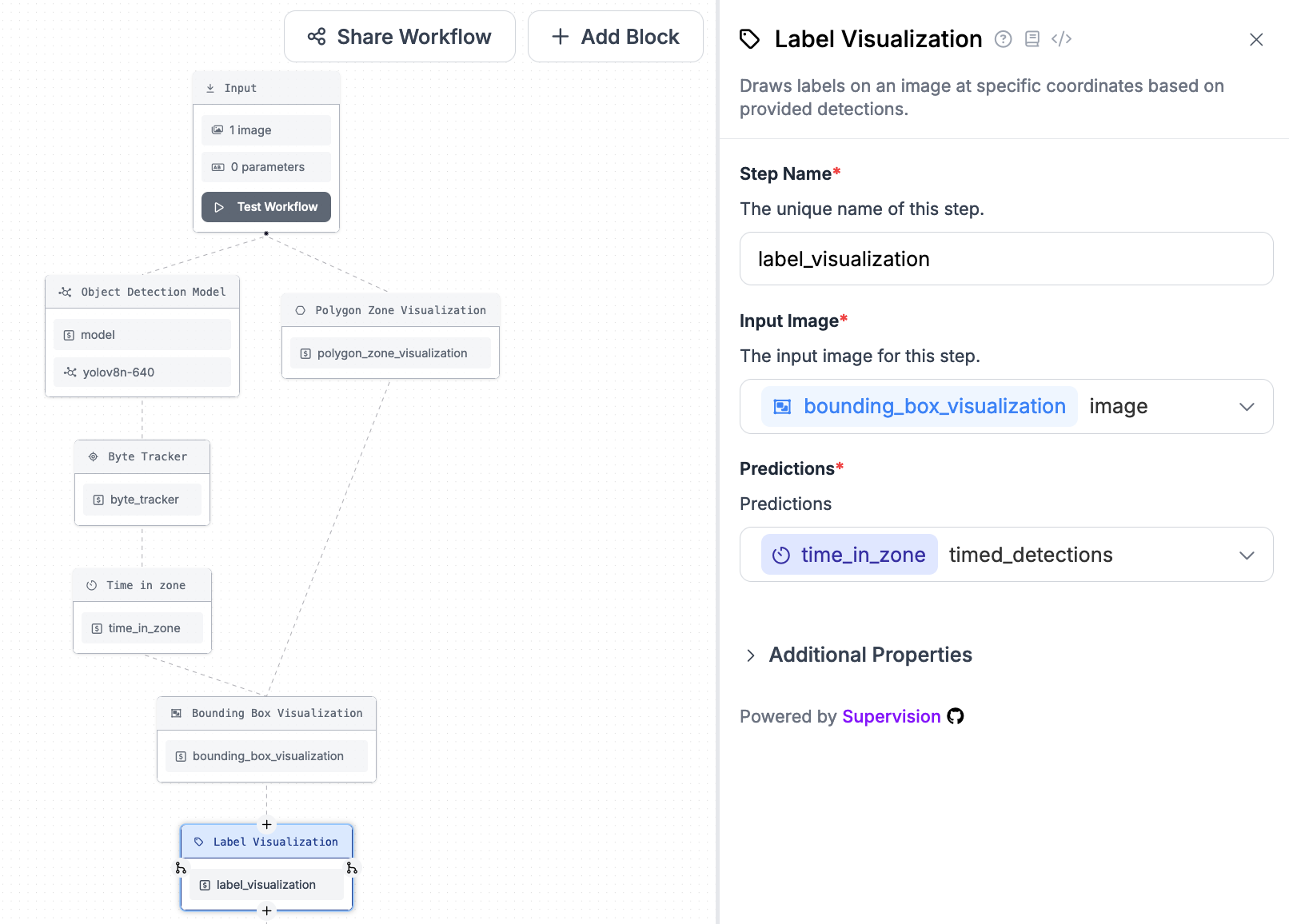

- Label visualization, which shows the labels that correspond with each bounding box.

The polygon zone visualization should be connected to your input image, like this:

Add the coordinates of the zone you defined in the Time in Zone block to the Zone value in the polygon zone visualization.

Set up a bounding box visualization to read the image from your polygon zone visualization and use the predictions from the Time in Zone block:

Set up the label visualization to read the time in zone detections and use the bounding box visualization image:

Once you have added these visualizations, you are ready to test your Workflow.

Step #7: Test Workflow

To test your Workflow, you will need to run it on your own hardware.

To do this, you will need to have Roboflow Inference, our on-device deployment software, installed.

Run the following command to install Inference:

```bash
pip install inference
```

Then, create a new Python file and add the following code:

```python
import argparse
import os

import cv2
from inference import InferencePipeline

# Read the Roboflow API key from the environment.
API_KEY = os.environ["ROBOFLOW_API_KEY"]


def main(
    video_reference: str,
    workspace_name: str,
    workflow_id: str,
) -> None:
    pipeline = InferencePipeline.init_with_workflow(
        api_key=API_KEY,
        workspace_name=workspace_name,
        video_reference=video_reference,
        on_prediction=my_sink,
        workflow_id=workflow_id,
        max_fps=30,
    )
    pipeline.start()  # start the pipeline
    pipeline.join()  # wait for the pipeline thread to finish


def my_sink(result, video_frame):
    # Display the output of the label visualization block for each frame.
    visualization = result["label_visualization"].numpy_image
    cv2.imshow("Workflow Image", visualization)
    cv2.waitKey(1)


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument("--video_reference", type=str, required=True)
    parser.add_argument("--workspace_name", type=str, required=True)
    parser.add_argument("--workflow_id", type=str, required=True)
    args = parser.parse_args()
    main(
        video_reference=args.video_reference,
        workspace_name=args.workspace_name,
        workflow_id=args.workflow_id,
    )
```

This code will create a command-line interface that you can use to test your Workflow.
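The script reads the key with `os.environ["ROBOFLOW_API_KEY"]`, which raises a bare `KeyError` when the variable is missing. If you would prefer a clearer message, a small check like the following could replace that line. The helper name `require_api_key` is hypothetical and not part of Inference.

```python
import os


def require_api_key(env=None, name="ROBOFLOW_API_KEY"):
    """Return the API key from the environment, or fail with a readable error."""
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set. Export it before running the script.")
    return key
```

In the script, this would be used as `API_KEY = require_api_key()`. An empty value is treated the same as a missing one.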

First, export your Roboflow API key into your environment:

```bash
export ROBOFLOW_API_KEY=""
```

Run the script like this:

```bash
python3 app.py --video_reference=0 --workspace_name=workspace --workflow_id=workflow-id
```

Above, set:

- video_reference to the ID associated with the webcam on which you want to run inference. By default, this is 0. You can also specify an RTSP URL or the name of a video file on which you want to run inference.

- workspace_name to your Roboflow workspace name.

- workflow_id to your Roboflow Workflow ID.
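Because `--video_reference` is parsed as a string, a webcam ID such as `0` reaches the pipeline as the string `"0"`. This guide does not say whether Inference converts it for you, so if you would rather pass an integer device ID explicitly, a sketch like the following could serve as the argument’s `type`. The function name `parse_video_reference` and the example RTSP URL are assumptions for illustration.

```python
import argparse


def parse_video_reference(value: str):
    """Return an int for webcam IDs like "0"; leave URLs and file paths as strings."""
    value = value.strip()
    return int(value) if value.isdigit() else value


parser = argparse.ArgumentParser()
parser.add_argument("--video_reference", type=parse_video_reference, required=True)

# Parse example argument lists instead of sys.argv so the sketch runs anywhere.
webcam = parser.parse_args(["--video_reference", "0"])
stream = parser.parse_args(["--video_reference", "rtsp://example.local/stream"])
print(webcam.video_reference, stream.video_reference)
```

With this in place, a webcam ID arrives as an integer while RTSP URLs and file names pass through unchanged.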

You can retrieve your workspace name and Workflow ID from the Workflows web editor. To retrieve this information, click “Deploy Workflow” in the top right corner of the Workflows web editor, then copy these values from any of the default code snippets.

You only need to copy the workspace name and Workflow ID. You do not need to copy the full code snippet, as we have already written the requisite code in the last step.

Run the script to test your Workflow.

Here is an example showing the Workflow running on a video:

Our Workflow successfully tracks the time each person spends in the drawn zone.

Conclusion

Roboflow Workflows is a web-based computer vision application builder. Workflows includes dozens of blocks you can use to build your application, from detection and segmentation models to prediction cropping and object tracking.

In this guide, we walked through how to build a Workflow that uses the new video processing features in Roboflow Workflows.

We created a Workflow, set up an object detection model, configured object tracking, then used the Time in Zone block to define a zone in which we wanted to calculate dwell time. We then created Workflow visualizations and ran the Workflow on our own hardware.

To learn more about Workflows and how to use Workflows on static images, refer to our Roboflow Workflows introduction.