
Historically, object detection was one of the first deep learning technologies to become viable for consumer use. This was largely thanks to the incredible work of Joseph Redmon and his research team in developing the first generations of You Only Look Once (YOLO) models. Their powerful framework enabled people with more casual computing setups to get started with YOLO object detection, and to begin integrating the AI framework into their existing applications.

This tradition was continued with the development of YOLOv5 and YOLOv8 at Ultralytics. They have continued to push the envelope in developing SOTA object detection models with increasingly better options for interfacing with the framework. The latest of these comes with the YOLO package from Ultralytics, which makes it much easier to integrate YOLO with existing Python code and run it in Notebooks.

In this article, we’re excited to introduce the YOLOv8 Web UI. Inspired by the work of Automatic1111 and their many contributors to develop an all-in-one application for running Stable Diffusion, we sought to develop a single Gradio application interface for YOLOv8 that can serve all of the model’s key functionalities. In our new application, users can label their images, view those images in ordered galleries, train the full gamut of YOLOv8 models, and generate image label predictions on image and video inputs, all in one easy-to-use application!

Follow this tutorial to learn how to run the application in Gradient.

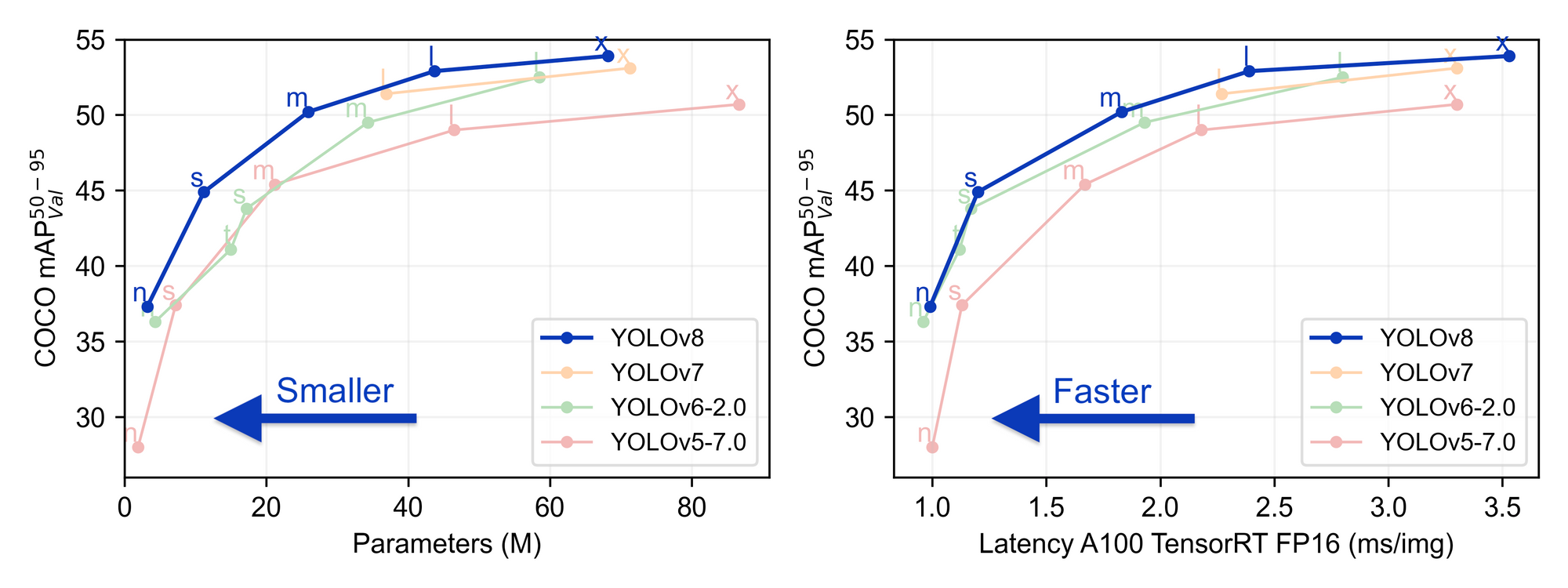

YOLOv8 is the latest object detection model from the YOLO family and Ultralytics. YOLOv8 offers SOTA object detection in a package that is significantly simpler to use than previous iterations. Additionally, YOLOv8 represents a significant step forward in detection accuracy.

To learn more about YOLOv8, be sure to read some of the other pieces on this blog covering the topic.

We’ve made the application available for anyone on GitHub, and from there, it’s simple to launch a Gradient Notebook to run the app. Alternatively, we can launch the application in a standalone Deployment and turn it into a proper API.

Run it in a Notebook


To run the application in a Gradient Notebook, first click the Run on Gradient link here or at the top of the page. This will open the YOLO Notebook on a Free GPU machine.

Once in the Notebook, navigate to the first code cell and execute it to install the dependencies:

    !pip install -r requirements.txt

Then execute the following cell to spin up the application. Click the live link to access the app in any web browser!

    !python app-final.py

Run it in a Deployment

To deploy this application with Gradient, we simply need to fill in the required values on the Deployment creation page. Open up the Gradient console, and navigate to the Deployments tab. Hit ‘Create’ to start a new Deployment.

On this page, we can fill in the spec so that it holds the following values:

    enabled: true
    image: paperspace/yolo:v1.01
    port: 7860
    command:
      - python
      - app-final.py
    env:
      - name: GRADIO_SERVER_NAME
        value: 0.0.0.0
    resources:
      replicas: 1
      instanceType: A100-80G

Be sure to declare the environment variable GRADIO_SERVER_NAME as ‘0.0.0.0’. Otherwise, the application will point the server to the default value, 127.0.0.1, which only accepts connections from inside the container, and the app will not function as intended.
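The effect of this environment variable can be illustrated with a short sketch. This is not Gradio's own code: `resolve_server_name` and `is_externally_reachable` are hypothetical helpers that mimic the lookup, assuming the server falls back to the loopback address `127.0.0.1` when the variable is unset.

```python
import os

LOOPBACK = "127.0.0.1"  # assumed default: reachable only from the same machine


def resolve_server_name(env=None):
    """Return the host to bind to (hypothetical helper, not Gradio's API)."""
    env = os.environ if env is None else env
    return env.get("GRADIO_SERVER_NAME", LOOPBACK)


def is_externally_reachable(host):
    """Loopback hosts only accept connections that originate locally."""
    return not (host == "localhost" or host.startswith("127."))


if __name__ == "__main__":
    host = resolve_server_name()
    reachable = is_externally_reachable(host)
    print(f"binding to {host}; accepts outside traffic: {reachable}")
```

Binding to `0.0.0.0` listens on every network interface, which is what lets the Deployment's endpoint forward outside requests to the app on port 7860.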

Once we have filled in the right information, hit ‘Deploy’ at the bottom of the page, and you will be redirected to the Deployment’s details page. Here we can get the API endpoint URL and edit the existing YAML spec, if we need to. The Deployment will take a minute or two to spin up, and we’ll know it’s ready when the green ‘Ready’ symbol is displayed on the page.

Now that we have spun up the application, let’s walk through its capabilities.

Label images

The first tab is the image labeling tab. Here we are able to submit images either in bulk or in single uploads. There we can name the directory to hold our data. If there is no existing directory by that name, generating labels will trigger the creation of a new directory and a data.yaml file with the corresponding paths to the training, testing, and validation images. The values in the label input are separated by semicolons, and correspond to the label indexes in the data.yaml file. This will allow us to train on the images, per the required YOLOv8 file structure.
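As a rough illustration of how semicolon-separated labels can map to class indexes, here is a minimal sketch. It is not the app's actual code: `parse_labels` and `build_data_yaml` are hypothetical helpers, and the split folder names (`images/train`, `images/val`, `images/test`) are assumptions based on the common Ultralytics dataset layout.

```python
from pathlib import Path


def parse_labels(raw):
    """Split 'cat;dog' into ['cat', 'dog'], dropping blanks and whitespace."""
    return [name.strip() for name in raw.split(";") if name.strip()]


def build_data_yaml(dataset_dir, raw_labels):
    """Render a data.yaml body; a label's list position is its class index."""
    names = parse_labels(raw_labels)
    lines = [
        f"path: {Path(dataset_dir).as_posix()}",
        "train: images/train",  # assumed split folder names
        "val: images/val",
        "test: images/test",
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(names)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_data_yaml("datasets/pets", "cat;dog"))
```

With the input `cat;dog`, class 0 is `cat` and class 1 is `dog`, so the order in which labels are typed determines the indexes written into every label file.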

We can iteratively select images from the dropdown by uploading them with the ‘Bulk upload’ radio option. This will open up a file uploader and dropdown. Load the images in, refresh the dropdown, and then select each image one by one to load it into the sketchpad. Single images can also be uploaded directly by clicking on the sketchpad.

Using the sketchpad object here, we can draw over existing images to create the bounding boxes for our images. These are automatically detected. Upon generating the labels, a copy of the image is moved to the assigned split directory’s image folder, and a corresponding labels text file will be exported to the labels directory.
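The exported label files follow the YOLO text format, where each line holds a class index followed by the box center, width, and height, all normalized by the image size. The sketch below shows that conversion for a box given in pixels. `to_yolo_line` is a hypothetical helper for illustration, and the way the app extracts boxes from the sketchpad drawing is not shown here.

```python
def to_yolo_line(class_index, box, image_width, image_height):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    # Centers and sizes are divided by the image dimensions to land in [0, 1].
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return f"{class_index} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


if __name__ == "__main__":
    # A 200x200 px box in a 400x500 px image, labeled as class 1.
    print(to_yolo_line(1, (100, 50, 300, 250), 400, 500))
```

Because the coordinates are normalized, the same label file stays valid if the image is later resized for training.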

Once we’re done labeling our images, we can look at them using the gallery tab.

View your images in galleries

In the Gallery tab, we can view our labeled images. The bounding boxes are not currently applied to these images, but we aim to add that feature in the future. For now, it’s an effective way to see which images have been submitted to which directory.
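Outside the app, the same overview can be approximated with a few lines of standard-library Python. This is only a sketch: `images_by_split` is a hypothetical helper, and it assumes the dataset directory holds an `images` folder with one subfolder per split.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def images_by_split(dataset_dir):
    """Map each split folder under <dataset_dir>/images to its sorted image names."""
    root = Path(dataset_dir) / "images"
    gallery = {}
    if not root.is_dir():
        return gallery
    for split_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        gallery[split_dir.name] = sorted(
            f.name for f in split_dir.iterdir() if f.suffix.lower() in IMAGE_SUFFIXES
        )
    return gallery


if __name__ == "__main__":
    # "datasets/pets" is a placeholder path; a missing directory prints nothing.
    for split, names in images_by_split("datasets/pets").items():
        print(f"{split}: {len(names)} images")
```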

Run training

The training tab lets us run YOLOv8 training on the images we have labeled. We can use the radio buttons and sliders to adjust the model type, number of training epochs, and batch size. The training will output the results of a validation test and the path to the best model, best.pt.
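For readers who prefer code to sliders, the same three settings can be passed to the Ultralytics Python package directly. The sketch below is not the app's implementation: `build_train_config` and `run_training` are hypothetical helpers, and the `data.yaml` path is a placeholder. It assumes the `ultralytics` package is installed when `run_training` is called.

```python
MODEL_SIZES = ("n", "s", "m", "l", "x")  # YOLOv8 nano through extra-large


def build_train_config(size, epochs, batch, data="data.yaml"):
    """Validate UI-style inputs and return (weights_file, train_kwargs)."""
    if size not in MODEL_SIZES:
        raise ValueError(f"unknown model size: {size!r}")
    if epochs < 1 or batch < 1:
        raise ValueError("epochs and batch must be positive")
    return f"yolov8{size}.pt", {"data": data, "epochs": epochs, "batch": batch}


def run_training(size, epochs, batch, data="data.yaml"):
    """Train, then return validation metrics; requires the ultralytics package."""
    from ultralytics import YOLO  # imported lazily so the helper above works alone

    weights, kwargs = build_train_config(size, epochs, batch, data)
    model = YOLO(weights)   # loads pretrained weights as the starting point
    model.train(**kwargs)   # the best checkpoint is saved as best.pt in the run folder
    return model.val()      # evaluates on the validation split from data.yaml


if __name__ == "__main__":
    print(build_train_config("n", epochs=10, batch=16))
```

Smaller sizes such as `n` train faster and fit on modest GPUs, while `l` and `x` trade speed for accuracy.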

Generate predicted image and video labels

Finally, now that we have trained our model, we can use it to infer labels on input images and videos. The interface in the app allows for directly uploading these inputs, or they can be submitted as a URL. After running prediction, the bottom of the page will show the metrics output and the input image or video, now with any detected bounding boxes overlaid.
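A comparable prediction step can be sketched with the Ultralytics Python package, which accepts both local paths and URLs as a source. This is an illustration rather than the app's code: `describe_source` and `run_prediction` are hypothetical helpers, and `run_prediction` assumes the `ultralytics` package is installed and that a trained weights file such as best.pt is available.

```python
from urllib.parse import urlparse


def describe_source(source):
    """Label an input as 'url' or 'file' (hypothetical helper for illustration)."""
    scheme = urlparse(source).scheme.lower()
    return "url" if scheme in ("http", "https") else "file"


def run_prediction(weights, source):
    """Run inference on an image or video; requires the ultralytics package."""
    from ultralytics import YOLO  # lazy import keeps describe_source usable alone

    model = YOLO(weights)
    # save=True writes a copy of the input with the predicted boxes drawn on it
    return model.predict(source=source, save=True)


if __name__ == "__main__":
    for item in ("https://example.com/cat.jpg", "videos/street.mp4"):
        print(item, "->", describe_source(item))
```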

We hope that this application will make it simple for anyone to get started with YOLO on Gradient. In the future, we plan to update this GUI with additional features like in-app RoboFlow dataset acquisition and live object detection on streaming video, such as a webcam feed.

Be sure to check out our repo and add in your own features!