MLPerf stays the definitive measurement for AI efficiency as an unbiased, third-party benchmark. NVIDIA’s AI platform has persistently proven management throughout each coaching and inference because the inception of MLPerf, together with the MLPerf Inference 3.zero benchmarks launched at this time.

“Three years in the past once we launched A100, the AI world was dominated by laptop imaginative and prescient. Generative AI has arrived,” mentioned NVIDIA founder and CEO Jensen Huang.

“That is precisely why we constructed Hopper, particularly optimized for GPT with the Transformer Engine. Right now’s MLPerf 3.zero highlights Hopper delivering 4x extra efficiency than A100.

“The subsequent degree of Generative AI requires new AI infrastructure to coach massive language fashions with nice vitality effectivity. Clients are ramping Hopper at scale, constructing AI infrastructure with tens of hundreds of Hopper GPUs linked by NVIDIA NVLink and InfiniBand.

“The trade is working onerous on new advances in secure and reliable Generative AI. Hopper is enabling this important work,” he mentioned.

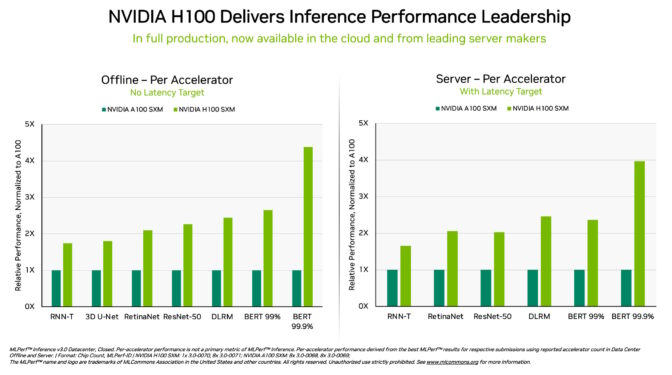

The newest MLPerf outcomes present NVIDIA taking AI inference to new ranges of efficiency and effectivity from the cloud to the sting.

Particularly, NVIDIA H100 Tensor Core GPUs operating in DGX H100 methods delivered the very best efficiency in each check of AI inference, the job of operating neural networks in manufacturing. Due to software program optimizations, the GPUs delivered as much as 54% efficiency beneficial properties from their debut in September.

In healthcare, H100 GPUs delivered a 31% efficiency improve since September on 3D-UNet, the MLPerf benchmark for medical imaging.

Powered by its Transformer Engine, the H100 GPU, primarily based on the Hopper structure, excelled on BERT, a transformer-based massive language mannequin that paved the way in which for at this time’s broad use of generative AI.

Generative AI lets customers shortly create textual content, photographs, 3D fashions and extra. It’s a functionality firms from startups to cloud service suppliers are quickly adopting to allow new enterprise fashions and speed up present ones.

A whole lot of hundreds of thousands of individuals at the moment are utilizing generative AI instruments like ChatGPT — additionally a transformer mannequin — anticipating prompt responses.

At this iPhone second of AI, efficiency on inference is significant. Deep studying is now being deployed practically all over the place, driving an insatiable want for inference efficiency from manufacturing facility flooring to on-line advice methods.

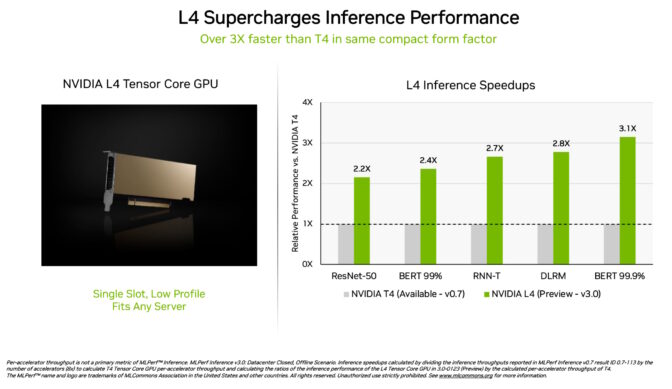

L4 GPUs Velocity Out of the Gate

NVIDIA L4 Tensor Core GPUs made their debut within the MLPerf checks at over 3x the velocity of prior-generation T4 GPUs. Packaged in a low-profile type issue, these accelerators are designed to ship excessive throughput and low latency in virtually any server.

L4 GPUs ran all MLPerf workloads. Due to their assist for the important thing FP8 format, their outcomes have been notably gorgeous on the performance-hungry BERT mannequin.

Along with stellar AI efficiency, L4 GPUs ship as much as 10x quicker picture decode, as much as 3.2x quicker video processing and over 4x quicker graphics and real-time rendering efficiency.

Introduced two weeks in the past at GTC, these accelerators are already out there from main methods makers and cloud service suppliers. L4 GPUs are the most recent addition to NVIDIA’s portfolio of AI inference platforms launched at GTC.

Software program, Networks Shine in System Check

NVIDIA’s full-stack AI platform confirmed its management in a brand new MLPerf check.

The so-called network-division benchmark streams information to a distant inference server. It displays the favored situation of enterprise customers operating AI jobs within the cloud with information saved behind company firewalls.

On BERT, distant NVIDIA DGX A100 methods delivered as much as 96% of their most native efficiency, slowed partially as a result of they wanted to attend for CPUs to finish some duties. On the ResNet-50 check for laptop imaginative and prescient, dealt with solely by GPUs, they hit the total 100%.

Each outcomes are thanks, largely, to NVIDIA Quantum Infiniband networking, NVIDIA ConnectX SmartNICs and software program similar to NVIDIA GPUDirect.

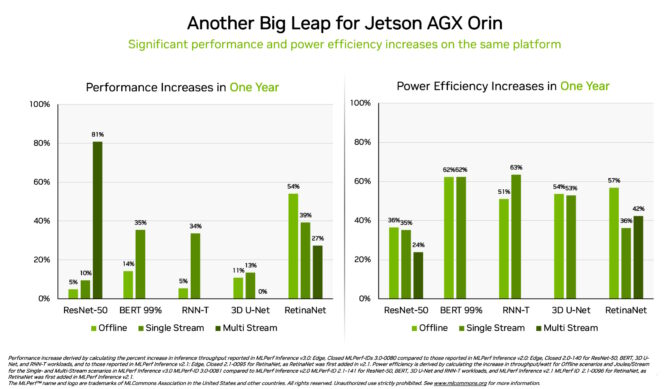

Orin Exhibits 3.2x Beneficial properties on the Edge

Individually, the NVIDIA Jetson AGX Orin system-on-module delivered beneficial properties of as much as 63% in vitality effectivity and 81% in efficiency in contrast with its outcomes a yr in the past. Jetson AGX Orin provides inference when AI is required in confined areas at low energy ranges, together with on methods powered by batteries.

For purposes needing even smaller modules drawing much less energy, the Jetson Orin NX 16G shined in its debut within the benchmarks. It delivered as much as 3.2x the efficiency of the prior-generation Jetson Xavier NX processor.

A Broad NVIDIA AI Ecosystem

The MLPerf outcomes present NVIDIA AI is backed by the trade’s broadest ecosystem in machine studying.

Ten firms submitted outcomes on the NVIDIA platform on this spherical. They got here from the Microsoft Azure cloud service and system makers together with ASUS, Dell Applied sciences, GIGABYTE, H3C, Lenovo, Nettrix, Supermicro and xFusion.

Their work reveals customers can get nice efficiency with NVIDIA AI each within the cloud and in servers operating in their very own information facilities.

NVIDIA companions take part in MLPerf as a result of they comprehend it’s a worthwhile software for patrons evaluating AI platforms and distributors. Ends in the most recent spherical exhibit that the efficiency they ship at this time will develop with the NVIDIA platform.

Customers Want Versatile Efficiency

NVIDIA AI is the one platform to run all MLPerf inference workloads and situations in information middle and edge computing. Its versatile efficiency and effectivity make customers the actual winners.

Actual-world purposes usually make use of many neural networks of various sorts that usually must ship solutions in actual time.

For instance, an AI software may have to know a consumer’s spoken request, classify a picture, make a advice after which ship a response as a spoken message in a human-sounding voice. Every step requires a unique sort of AI mannequin.

The MLPerf benchmarks cowl these and different standard AI workloads. That’s why the checks guarantee IT resolution makers will get efficiency that’s reliable and versatile to deploy.

Customers can depend on MLPerf outcomes to make knowledgeable shopping for choices, as a result of the checks are clear and goal. The benchmarks take pleasure in backing from a broad group that features Arm, Baidu, Fb AI, Google, Harvard, Intel, Microsoft, Stanford and the College of Toronto.

Software program You Can Use

The software program layer of the NVIDIA AI platform, NVIDIA AI Enterprise, ensures customers get optimized efficiency from their infrastructure investments in addition to the enterprise-grade assist, safety and reliability required to run AI within the company information middle.

All of the software program used for these checks is offered from the MLPerf repository, so anybody can get these world-class outcomes.

Optimizations are repeatedly folded into containers out there on NGC, NVIDIA’s catalog for GPU-accelerated software program. The catalog hosts NVIDIA TensorRT, utilized by each submission on this spherical to optimize AI inference.

Learn this technical weblog for a deeper dive into the optimizations fueling NVIDIA’s MLPerf efficiency and effectivity.