This article was contributed by Timothy Malche, an assistant professor in the Department of Computer Applications at Manipal University Jaipur.

Automated fault identification is an emerging application of computer vision that’s revolutionizing pipeline monitoring and maintenance. Using automated fault detection systems, businesses can better manage critical infrastructure for the transportation of petroleum products, natural gas, water, sewage, chemicals, and more.

In this guide, we’ll talk through how to build a physical pipeline monitoring system with computer vision. We’ll:

- Define a problem statement;

- Choose a dataset with which we will train our model;

- Define the hardware required to collect images;

- Train a YOLOv8 model to identify various defects in a pipeline, and;

- Test our model.

Without further ado, let’s begin!

Understanding the Problem

Using computer vision algorithms, businesses can analyze images and video streams for various signs of physical pipeline degradation, including:

- Cracks

- Corrosion

- Leakages

This technology offers numerous advantages, such as increased efficiency, cost savings, enhanced safety, and data-driven decision-making.

By continuously monitoring pipelines, identifying defects, and giving real-time feedback, automated pipeline fault detection using computer vision can help ensure the safe and reliable operation of pipelines and reduce the risk of environmental catastrophes.

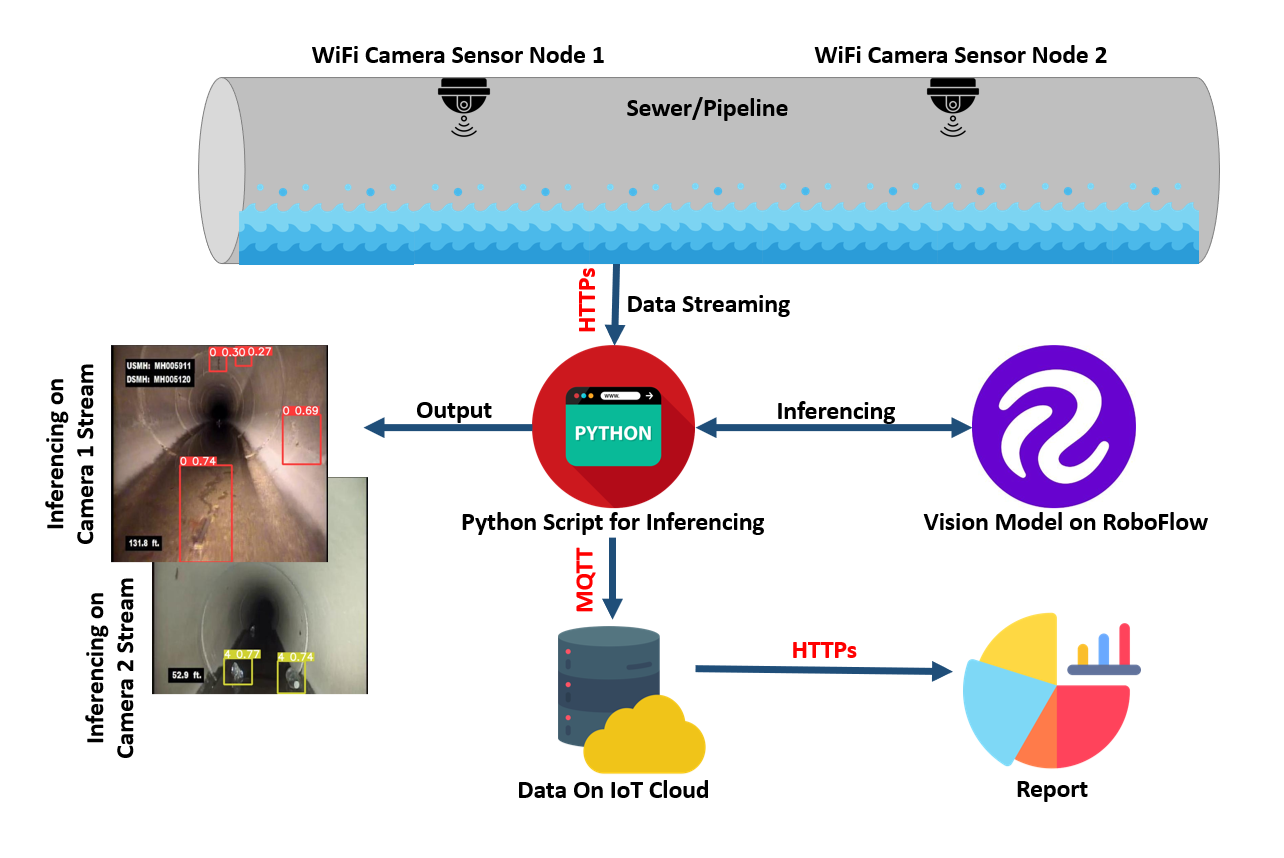

To solve this problem, we’ll build a system that uses computer vision to identify issues with the pipeline. We’ll build logic on top of this model to save data when issues are identified in a pipeline. Here is a diagram of the system we’re going to build:

Why Use Computer Vision for Pipeline Inspection?

Compared with conventional manual checks, computer vision is a more efficient, robust, and cost-effective way of finding pipeline defects.

First, continuous inspection using computer vision allows for the early discovery of defects before they become significant. This results in reduced downtime and savings on expensive repairs or even pipeline failures.

Additionally, computer vision models can identify flaws in real time, giving instant feedback and allowing for prompt action. This helps businesses keep pipelines in good condition, lowering the risk of environmental catastrophes and ensuring continuous service delivery.

Here are a few more benefits of using computer vision for pipeline inspection:

- Worker safety: A computer vision system can identify faults reliably, independent of environmental conditions or operator bias, which reduces the chance that defects are missed. It can also help identify potential hazards before they cause accidents, protecting both workers and the environment.

- Predictive maintenance: Computer vision-assisted pipeline fault identification can provide data and insights that can be used for predictive maintenance. Operators can detect possible faults in pipeline data by analyzing trends over time, reducing downtime and maintenance costs.

- Regulatory compliance: Using computer vision to identify pipeline faults can assist pipeline owners in meeting regulatory requirements for pipeline safety and environmental protection. Constant monitoring and fault detection help operators demonstrate compliance.

- Decision-making: This method generates a significant amount of data that can be used to make data-driven decisions. By analyzing pipeline data over time, operators can spot patterns and make informed choices about maintenance and repairs, reducing costs and increasing efficiency.

Automated Pipeline Inspection System Architecture

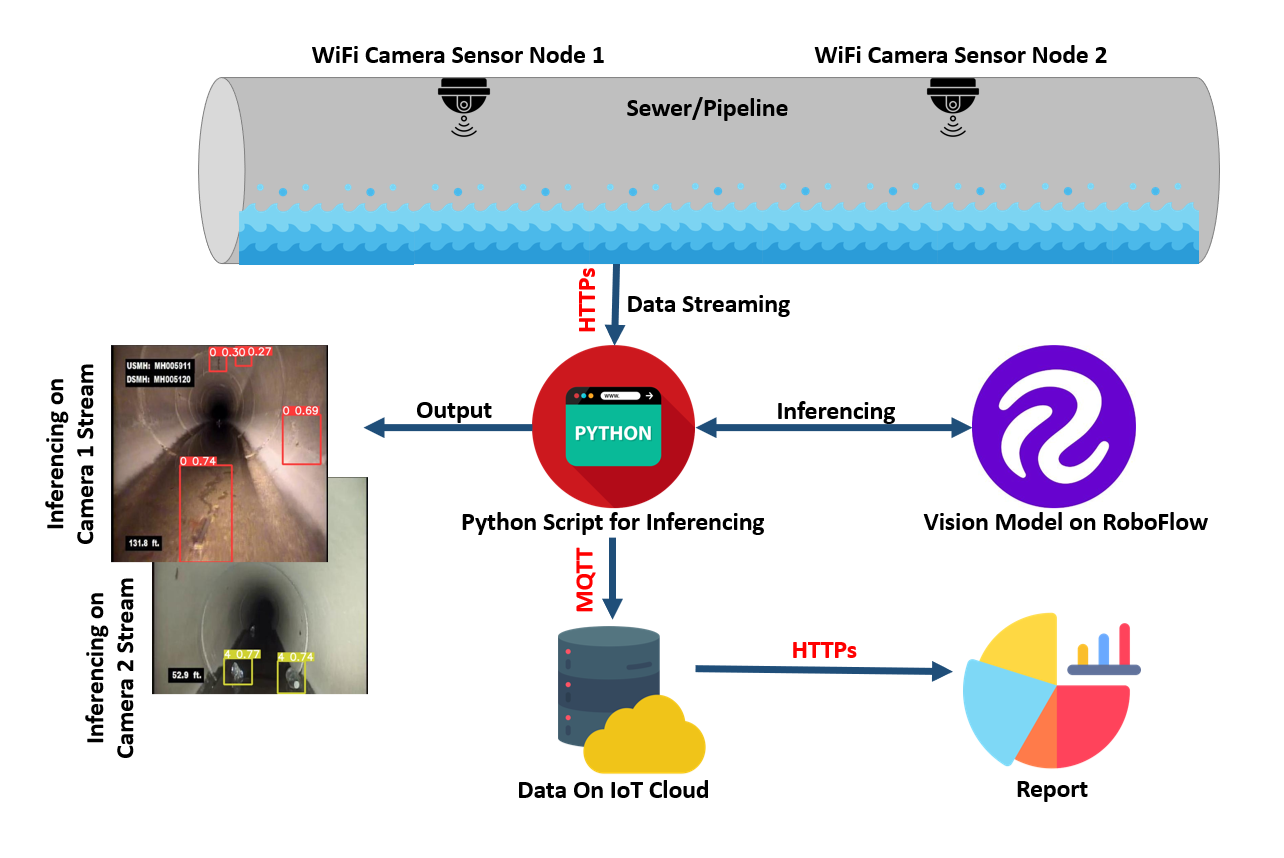

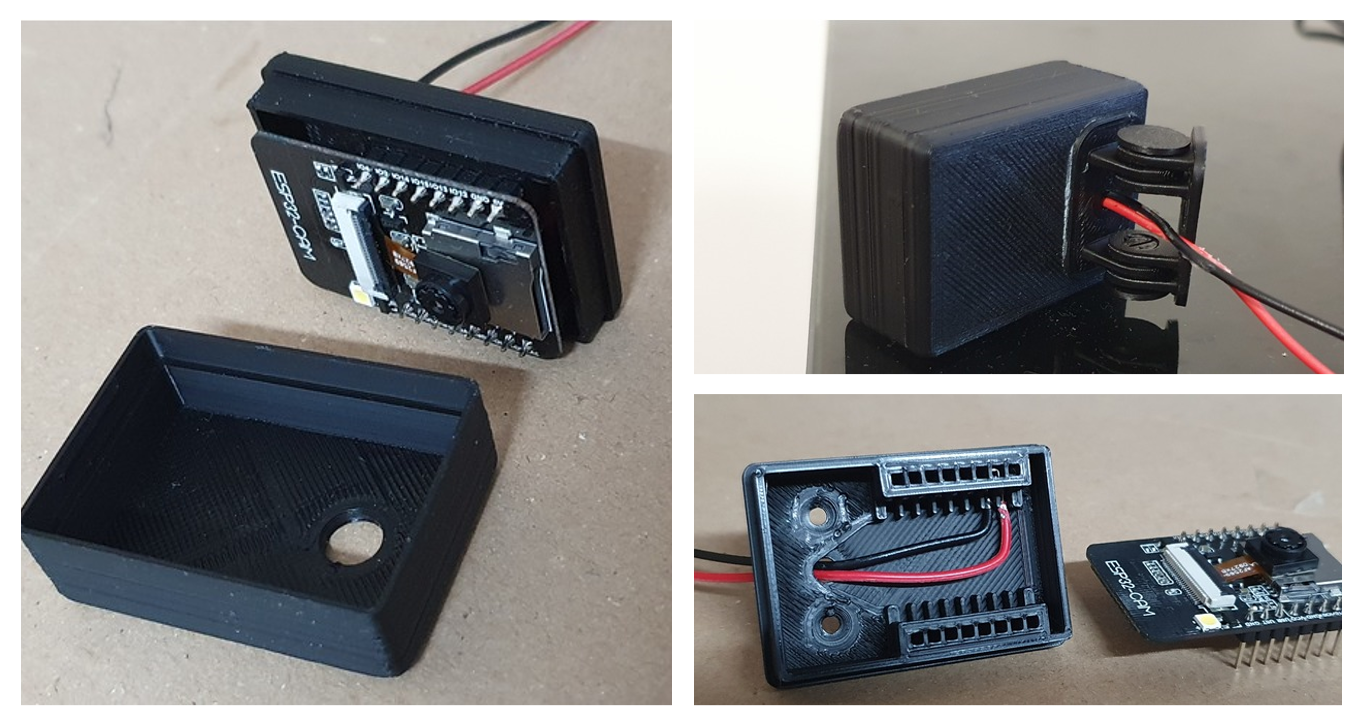

The pipeline defect detection system that we’ll build in this article is made up of a number of low-cost, low-power, resource-constrained camera nodes placed at various points along the pipeline to be inspected.

The camera nodes are spaced a set distance apart and positioned to provide a continuous view of the pipeline.

The camera nodes can stream footage over Wi-Fi or another wireless technology so that it can be accessed from a remote location. The video stream is then fed into the computer vision algorithm, which supports decision-making. The inferencing results are uploaded to the IoT server to make data available for analysis and reporting.

In this project, the video stream captured by the ESP32 camera node is transmitted over HTTP to a Python script running on the Raspberry Pi gateway.
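As a rough sketch of the receiving side, the snippet below pulls complete JPEG frames out of a byte buffer. It assumes the ESP32 camera serves a Motion JPEG (MJPEG) stream, which is common for ESP32 camera firmware but is not stated in this article, and the function name `extract_jpeg_frames` is hypothetical.

```python
from typing import List, Tuple

JPEG_START = b"\xff\xd8"  # JPEG start-of-image marker
JPEG_END = b"\xff\xd9"    # JPEG end-of-image marker


def extract_jpeg_frames(buffer: bytes) -> Tuple[List[bytes], bytes]:
    """Split a byte buffer into complete JPEG frames plus leftover bytes.

    Assumption: frames arrive back to back as in an MJPEG stream and
    contain no embedded thumbnails with their own markers.
    """
    frames = []
    while True:
        start = buffer.find(JPEG_START)
        if start == -1:
            # No frame start yet; keep the last byte in case a marker
            # was split across two network chunks.
            return frames, buffer[-1:]
        end = buffer.find(JPEG_END, start + 2)
        if end == -1:
            # Frame started but is incomplete; wait for more data.
            return frames, buffer[start:]
        frames.append(buffer[start:end + 2])
        buffer = buffer[end + 2:]
```

In practice, each chunk read from the HTTP response would be appended to the leftover bytes and passed back into the function, and every returned frame would be handed to the model for inference.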

Once the video stream is received at the gateway, the stream is processed and analyzed using a machine learning model trained with YOLOv8 and uploaded to Roboflow.

After the analysis is complete, the results are sent to an IoT server via the MQTT protocol. The Raspberry Pi gateway acts as an MQTT client and sends the results to the IoT server using the publish-subscribe messaging pattern. Using HTTP and MQTT to transmit data in this project allows for efficient communication between devices in a distributed IoT network.
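To make the publish step more concrete, here is a minimal sketch of how the gateway might package one inference result for MQTT. The topic layout (`pipeline/<node>/faults`), the payload fields, and both function names are assumptions made for illustration. The publishing helper relies on the third-party `paho-mqtt` package and is imported lazily so the message builder can be used without it.

```python
import json
import time


def build_fault_message(node_id, predictions, timestamp=None):
    """Return a (topic, payload) pair describing one inference result."""
    topic = f"pipeline/{node_id}/faults"  # hypothetical topic layout
    payload = json.dumps({
        "node": node_id,
        "timestamp": time.time() if timestamp is None else timestamp,
        "fault_count": len(predictions),
        "faults": [
            {"class": p["class"], "confidence": round(p["confidence"], 3)}
            for p in predictions
        ],
    })
    return topic, payload


def publish_fault_message(topic, payload, broker_host):
    """Publish one message to the broker (requires paho-mqtt)."""
    import paho.mqtt.publish as publish

    # QoS 1 asks the broker to acknowledge delivery at least once.
    publish.single(topic, payload=payload, qos=1, hostname=broker_host)
```

Any client subscribed to the same topic on the IoT server would then receive the JSON payload each time the gateway publishes a result.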

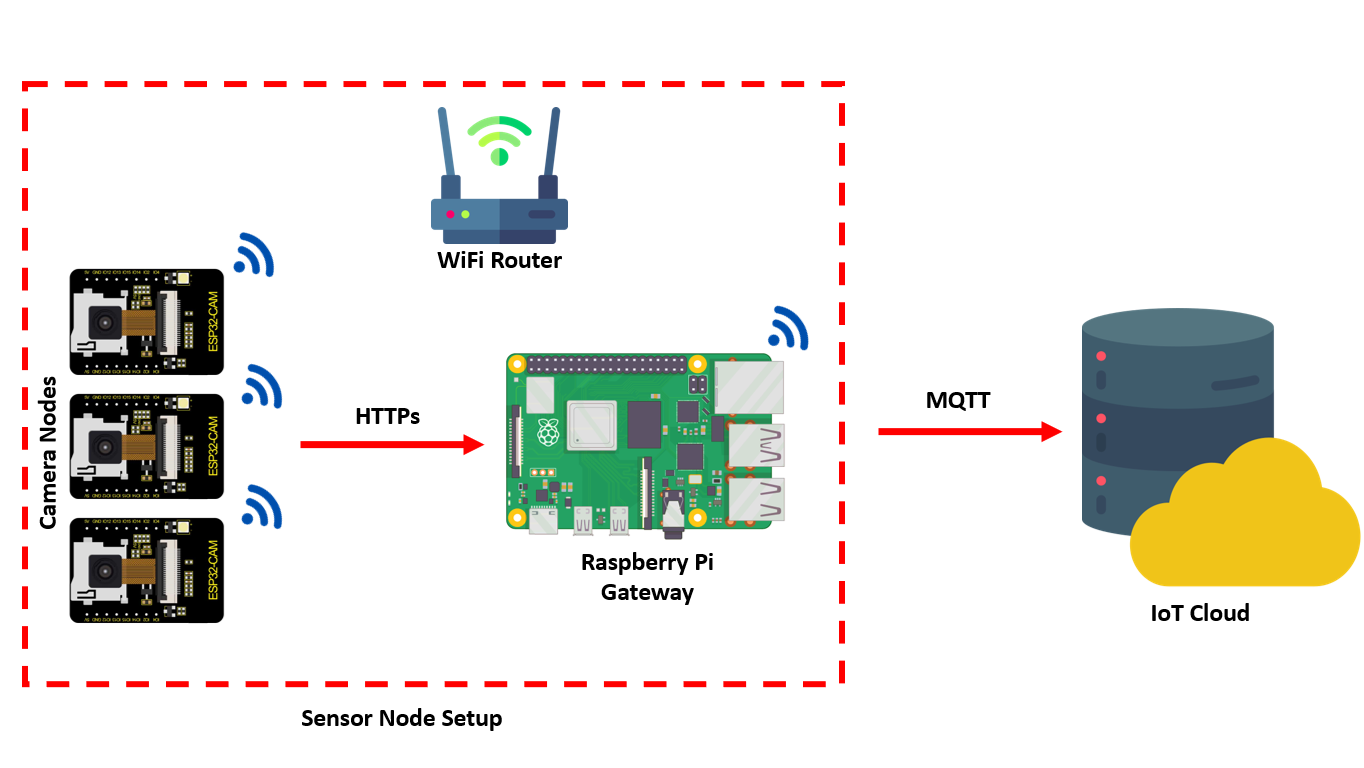

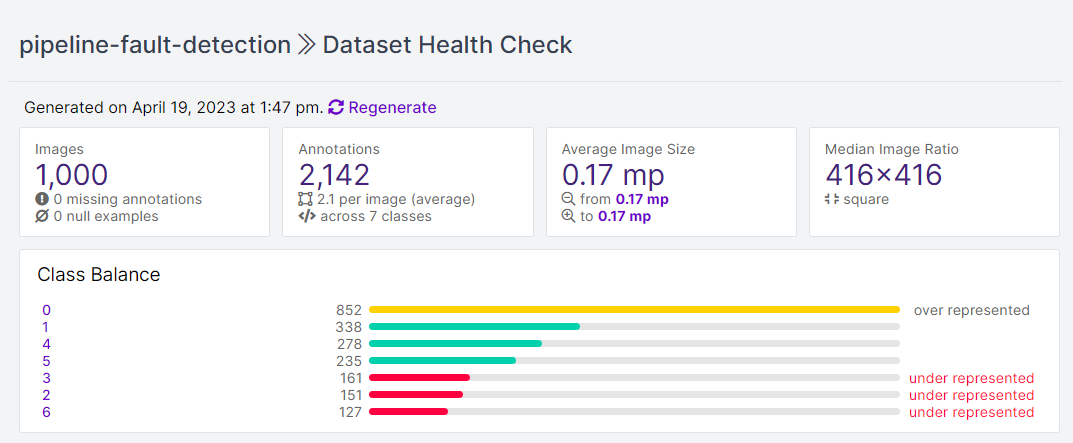

Choosing a Dataset

For this project, we’ll use the “Storm drain” pipeline dataset hosted on Roboflow Universe. The dataset consists of 1,000 images and is labeled with the following classes:

- 0: Crack

- 1: Utility intrusion

- 2: Debris

- 3: Joint offset

- 4: Obstacle

- 5: Hole

- 6: Buckling
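Because the model reports classes by numeric ID, a small lookup table is handy when reading predictions. The sketch below mirrors the class list above. The names `CLASS_NAMES` and `class_name` are illustrative, and the helper accepts both integers and numeric strings because the hosted model's JSON output shown later in this post reports the class as a string such as `'4'`.

```python
# Class IDs and labels as listed for the dataset above.
CLASS_NAMES = {
    0: "Crack",
    1: "Utility intrusion",
    2: "Debris",
    3: "Joint offset",
    4: "Obstacle",
    5: "Hole",
    6: "Buckling",
}


def class_name(class_id):
    """Translate a class ID (int or numeric string) into its label."""
    return CLASS_NAMES.get(int(class_id), f"Unknown ({class_id})")
```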

Roboflow provides a Health Check feature that can be used to get more insight into the dataset, such as class balance, dimension insights, an annotation heat map, and a histogram of object counts. This helps in understanding the dataset and in making decisions about preprocessing and augmentation.
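If you want a quick offline look at class balance, the same idea can be approximated locally. The sketch below assumes the dataset has been exported in YOLO text format, where each label file holds one object per line and the first field is the class ID. It is not how Health Check itself is implemented, and the function names are hypothetical.

```python
from collections import Counter
from pathlib import Path


def count_classes(label_lines):
    """Count objects per class ID from YOLO-format label lines."""
    counts = Counter()
    for line in label_lines:
        fields = line.split()
        if fields:  # skip blank lines
            counts[int(fields[0])] += 1
    return counts


def count_classes_in_dir(labels_dir):
    """Sum class counts over every .txt label file in a directory."""
    counts = Counter()
    for label_file in sorted(Path(labels_dir).glob("*.txt")):
        counts += count_classes(label_file.read_text().splitlines())
    return counts
```

A heavily skewed result, for example far more cracks than buckling examples, is a hint that augmentation or additional data collection may be worth considering.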

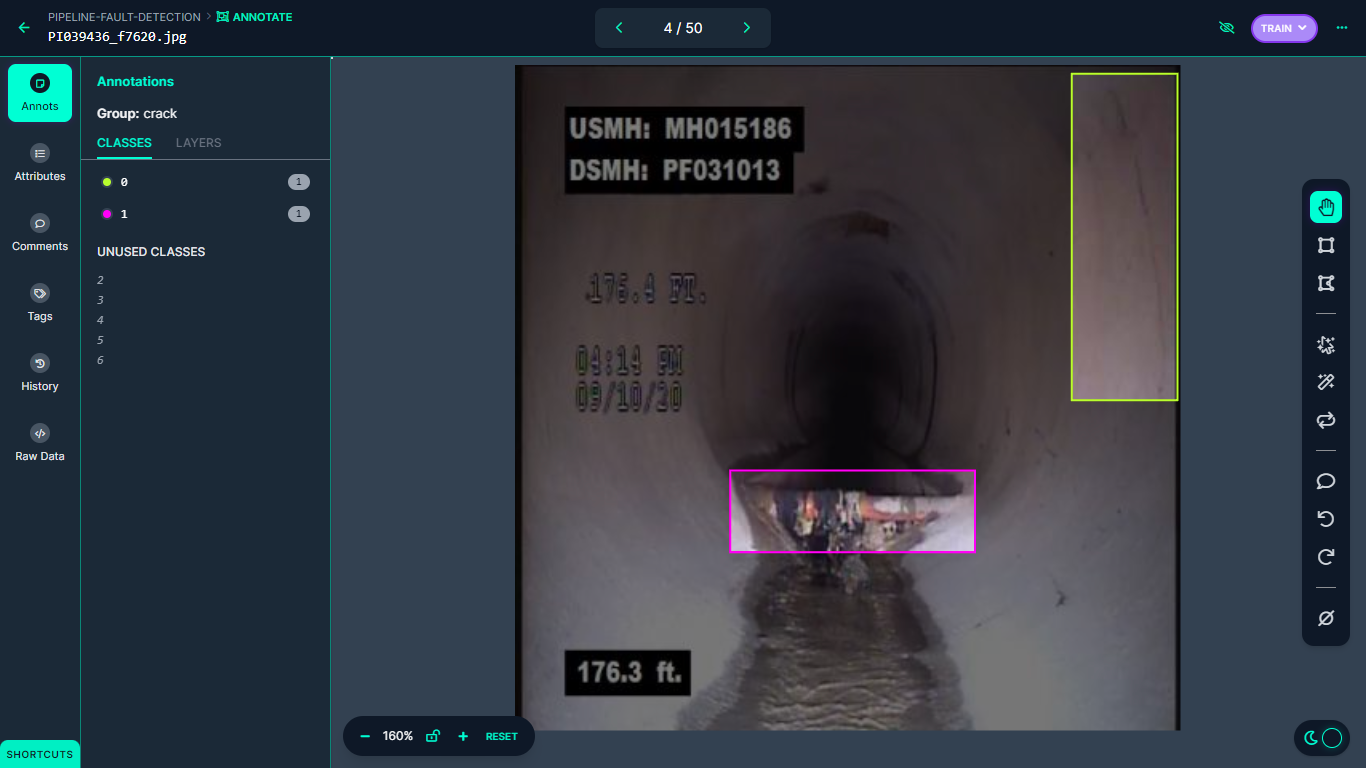

The following figure shows an example labelled image in our dataset:

Building the Automated Pipeline Inspection System

To collect images, we’ll use the following pieces of technology:

- ESP32 Camera

- Li-Po 3.7 V battery

- Raspberry Pi 4

Together, this technology forms a “node” that could be part of a network of multiple sensors monitoring a pipeline.

With the hardware in place, we need to build a software component that can identify the aforementioned issues in a pipeline.

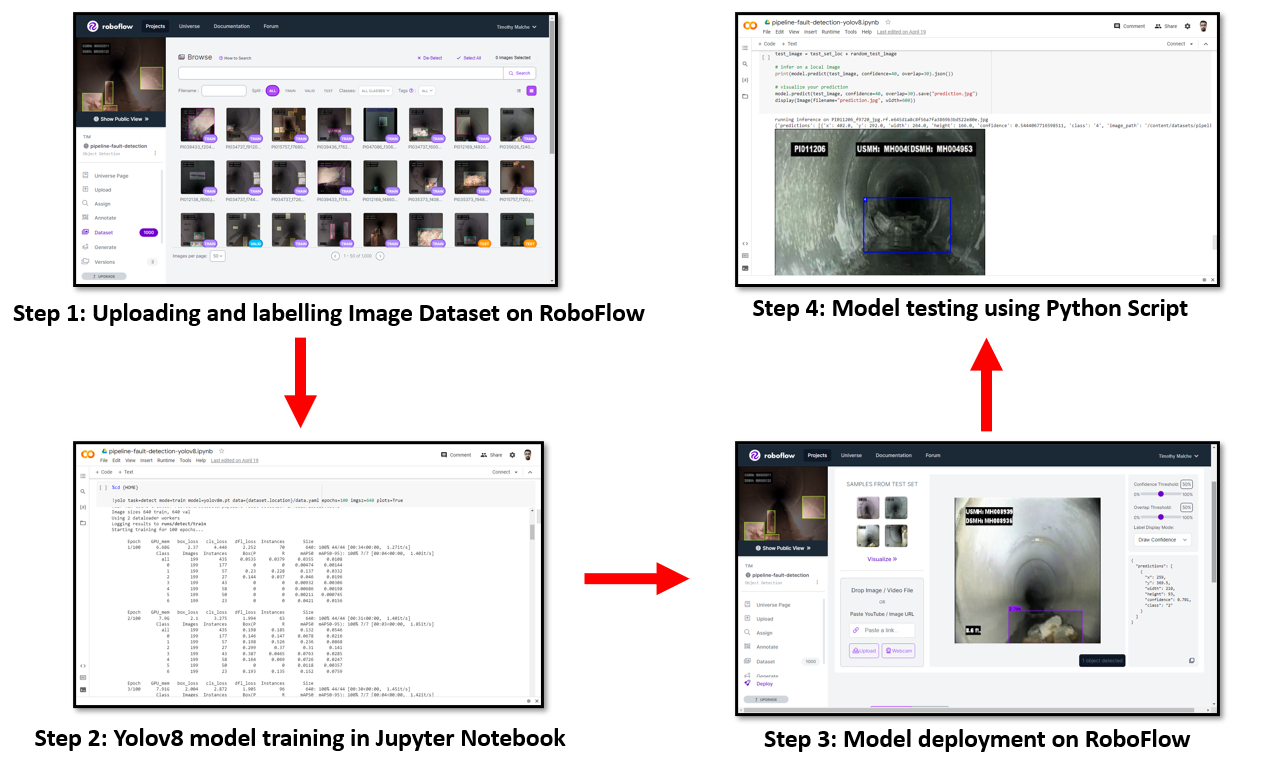

To build the software, we will go through the following steps:

- Upload and label the image dataset

- Train a YOLOv8 model in a Jupyter Notebook (find the example Colab notebook for this project)

- Deploy the model to Roboflow

- Test the model with a Python script

Step 1: Upload and Label Images

An image dataset was collected and uploaded to Roboflow, then annotated using Roboflow Annotate for different classes of damage: crack, utility intrusion, debris, joint offset, obstacle, hole, and buckling. This annotation process enables the computer vision model to learn to detect and classify the different types of damage.

Step 2: Train a YOLOv8 Model

After annotation, a Jupyter Notebook was used to train the YOLOv8 object detection model. Once the YOLOv8 model is trained, the trained weights are deployed back to Roboflow, making the trained model available for inferencing via Roboflow’s hosted inference API.
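The training code itself is not reproduced in this post, so the following is only a sketch of what the notebook step could look like with the Ultralytics Python API. The starting checkpoint (`yolov8s.pt`), the 25 epochs, and the 640-pixel image size are illustrative defaults rather than the settings used here. The sketch also assumes the dataset was exported in YOLOv8 format with a `data.yaml` file at its root, and the helper names are hypothetical.

```python
import os


def build_train_args(dataset_dir, epochs=25, imgsz=640):
    """Collect training arguments for a YOLOv8-format dataset export."""
    return {
        "data": os.path.join(dataset_dir, "data.yaml"),  # assumed export layout
        "epochs": epochs,
        "imgsz": imgsz,
    }


def train_yolov8(dataset_dir, weights="yolov8s.pt", **overrides):
    """Fine-tune a YOLOv8 checkpoint (requires the ultralytics package)."""
    from ultralytics import YOLO  # imported lazily; third-party dependency

    model = YOLO(weights)
    return model.train(**build_train_args(dataset_dir, **overrides))
```

With Ultralytics defaults, a first detection run writes its outputs, including the trained weights, under `runs/detect/train/`.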

Step 3: Deploy the Model to Roboflow

After deploying the model, a Python script is used to access the model via API, and live inferencing is done on new and unseen data. The script feeds new images to the YOLOv8 model, which then identifies and localizes the different types of damage present in the image. The output of the inferencing process is used to generate a report, which can be used for analysis.

Roboflow offers a simple interface to upload the trained model and make it ready to use. The model can be deployed from Python code to the Roboflow platform using the code given below:

    project.version(dataset.version).deploy(model_type="yolov8", model_path=f"{HOME}/runs/detect/train/")

Remember to set the model_path parameter to the folder in which you have saved your trained weights.
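Before deploying, it can be worth confirming that the folder really contains trained weights. The check below is a small sketch that assumes the default Ultralytics run layout, in which the best checkpoint is written to `weights/best.pt` inside the run folder. The helper name `find_best_weights` is hypothetical.

```python
from pathlib import Path


def find_best_weights(model_path):
    """Return the path to weights/best.pt under model_path, or None if missing."""
    candidate = Path(model_path) / "weights" / "best.pt"
    return candidate if candidate.is_file() else None
```

If the function returns `None`, the training run probably wrote to a different folder (for example `train2`), and model_path should be adjusted before calling deploy.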

Step 4: Test the Model

Once the model is deployed, it can be tested with the following Python code against the test set in the dataset or by feeding it a real-time image.

    # assumes `project` and `dataset` were created earlier in the notebook
    import os
    import random

    from IPython.display import Image, display

    # load model
    model = project.version(dataset.version).model

    # choose random test set image
    test_set_loc = dataset.location + "/test/images/"
    random_test_image = random.choice(os.listdir(test_set_loc))
    print("running inference on " + random_test_image)
    test_image = test_set_loc + random_test_image

    # infer on a local image
    print(model.predict(test_image, confidence=40, overlap=30).json())

    # visualize your prediction
    model.predict(test_image, confidence=40, overlap=30).save("prediction.jpg")
    display(Image(filename="prediction.jpg", width=600))

In the code above, inference is done using the trained model deployed on Roboflow. The model is loaded using project.version(dataset.version).model.

First, a random image from the test set is chosen by selecting a file at random from the test_set_loc directory. The name of the chosen image file is then printed to the console.

Next, the model.predict function is called with the path of the chosen image as input. The confidence and overlap parameters are set to 40 and 30, respectively. These parameters control the threshold for accepting predicted bounding boxes and the degree of overlap allowed between bounding boxes, respectively. The json() method is called on the returned prediction object so we can read the predictions as structured data.
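To build some intuition for these two thresholds, here is a generic sketch of confidence filtering followed by overlap suppression (non-maximum suppression). It illustrates the general technique and is not Roboflow's server-side implementation. It treats both thresholds as percentages, compares boxes regardless of class, and expects boxes as center `x`/`y` plus `width`/`height`. The function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two center-format boxes."""
    ax1, ay1 = a["x"] - a["width"] / 2, a["y"] - a["height"] / 2
    ax2, ay2 = a["x"] + a["width"] / 2, a["y"] + a["height"] / 2
    bx1, by1 = b["x"] - b["width"] / 2, b["y"] - b["height"] / 2
    bx2, by2 = b["x"] + b["width"] / 2, b["y"] + b["height"] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a["width"] * a["height"] + b["width"] * b["height"] - inter
    return inter / union if union > 0 else 0.0


def filter_predictions(predictions, confidence=40, overlap=30):
    """Keep confident boxes, dropping any that overlap a stronger box too much."""
    candidates = sorted(
        (p for p in predictions if p["confidence"] * 100 >= confidence),
        key=lambda p: p["confidence"],
        reverse=True,
    )
    kept = []
    for p in candidates:
        # Accept a box only if it does not overlap an already kept box
        # by more than the allowed percentage.
        if all(iou(p, k) * 100 <= overlap for k in kept):
            kept.append(p)
    return kept
```

Raising the confidence value discards more low-scoring boxes, while raising the overlap value lets more closely spaced boxes survive.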

The model.predict function is called again on the same image, this time with the save method, to save the image with the predicted bounding boxes drawn on it as a new file named prediction.jpg. Finally, the saved image is displayed in the notebook.

The code above is an example of how to perform object detection on a single image using a trained model in Roboflow.

Running inference on PI011206_f9720_jpg.rf.e645d1a8c8f56a7fa3869b3bd522e80e.jpg, the code generates the following output:

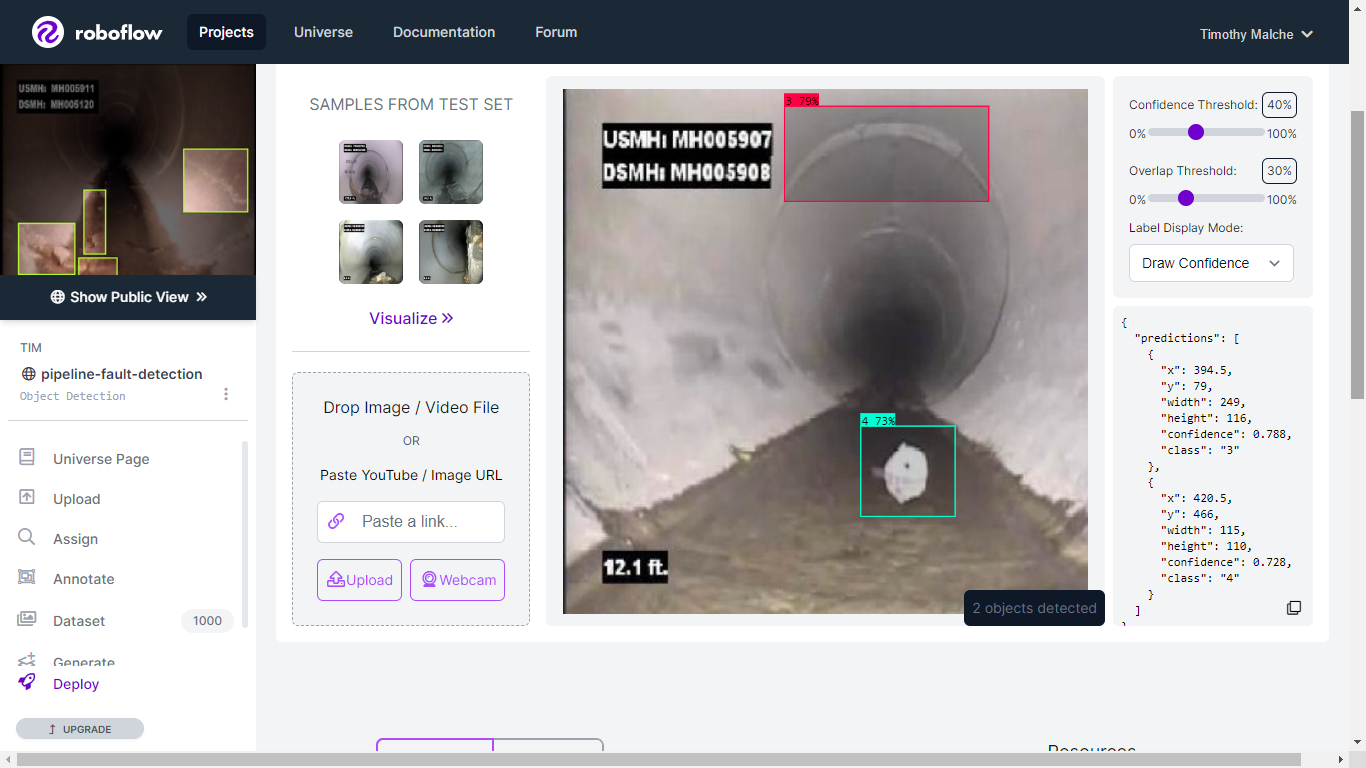

    {'predictions': [{'x': 402.0, 'y': 292.0, 'width': 264.0, 'height': 166.0, 'confidence': 0.5444067716598511, 'class': '4', 'image_path': '/content/datasets/pipeline-fault-detection-1/test/images/PI011206_f9720_jpg.rf.e645d1a8c8f56a7fa3869b3bd522e80e.jpg', 'prediction_type': 'ObjectDetectionModel'}], 'image': {'width': '640', 'height': '640'}}

Since the model is uploaded to a Roboflow project space (pipeline-fault-detection), it can be used for inferencing on the Roboflow Deploy page. The following image shows inferencing using the uploaded YOLOv8 model on Roboflow.

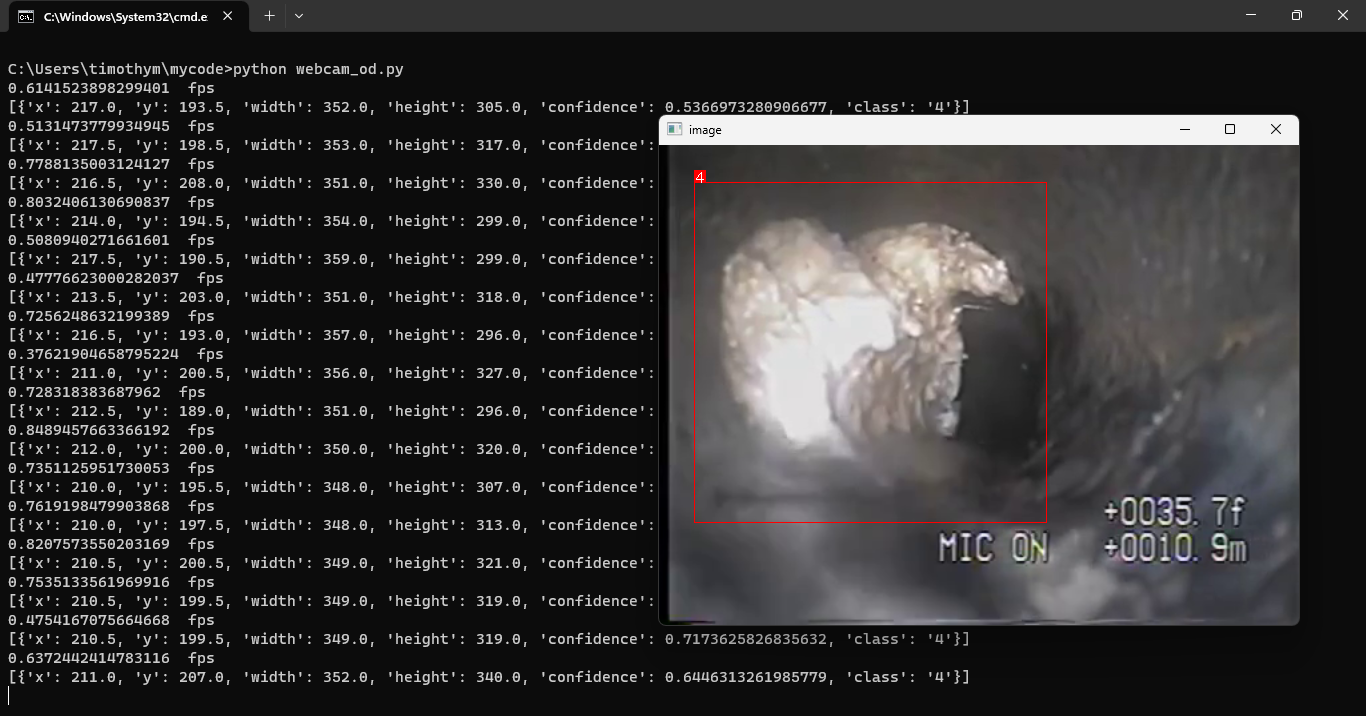
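When working with the JSON predictions in your own code, note that `x` and `y` give the center of each bounding box rather than its top-left corner. The sketch below converts a prediction to corner coordinates, which is the form most drawing and cropping functions expect. The helper name `to_corners` is hypothetical.

```python
def to_corners(pred):
    """Convert a center-format prediction to (x1, y1, x2, y2) corners."""
    half_w = pred["width"] / 2
    half_h = pred["height"] / 2
    return (
        pred["x"] - half_w,
        pred["y"] - half_h,
        pred["x"] + half_w,
        pred["y"] + half_h,
    )
```

Applied to the prediction in the output above (center 402, 292 with width 264 and height 166), this gives a box spanning from (270, 209) to (534, 375).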

Finally, the main application can be built using the deployed model from Roboflow in a Python script. A detailed tutorial is available here. The following is the output from the Python script. The model has detected class 4 (which maps to Obstacle according to the ontology we defined earlier in the post) in the live video stream.
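The "save data when issues are identified" logic mentioned at the start of this post can be as simple as appending a record whenever a frame yields at least one prediction. The following is a minimal sketch under that assumption. The JSON Lines file format and the function name `log_detections` are choices made for illustration, not part of the linked tutorial.

```python
import json
import time


def log_detections(predictions, log_path, timestamp=None):
    """Append one JSON line for a frame that contains detections.

    Returns True if a record was written and False if the frame was clean.
    """
    if not predictions:
        return False
    record = {
        "timestamp": time.time() if timestamp is None else timestamp,
        "predictions": predictions,
    }
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
    return True
```

Called once per analyzed frame with the `predictions` list from the model's JSON output, this builds up a log that can later be used for reporting and trend analysis.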

Conclusion

By leveraging computer vision, pipeline fault detection can be automated, allowing businesses to monitor pipelines for issues in real time without manual inspection. The YOLOv8 object detection model trained on images annotated using Roboflow can detect different types of damage, such as cracks, utility intrusions, debris, joint offsets, obstacles, holes, and buckling.