Zero-shot computer vision models, where a model can return predictions in a set of classes without being trained on specific data for your use case, have many applications in computer vision pipelines. You can use zero-shot models to evaluate if an image collection pipeline should send an image to a datastore, to annotate data for common classes, to track objects, and more.

To guide a zero-shot model on what you’d like it to identify, you can use a prompt or set of prompts to feed the model. A “prompt” refers to a text instruction that a model will encode and use to influence what the model should return.

However, choosing the right prompt (the instruction describing the object to identify) for a task is difficult. One approach is to guess what prompt may work best given the contents of your dataset. This may work for models that identify common objects. However, this tactic is less appropriate if you are building a model that should identify more nuanced features in image data.

This is where CVevals comes in: our tool for finding a statistically strong prompt to use with zero-shot models. You can use this tool to find the best prompt to:

- Use Grounding DINO for automated object detection;

- Automatically classify some data in your dataset, and;

- Classify an image to determine whether it should be processed further (useful in scenarios where you have limited compute or storage resources available on a device and want to prioritize what image data you save).

In this post, we’re going to show how to compare prompts for Grounding DINO, a zero-shot object detection model. We’ll decide on five prompts for a basketball player detection dataset and use CVevals, a computer vision model evaluation package maintained by Roboflow, to determine the prompt that will identify basketball players the best.

By the end of this post, we will know what prompt will best label our data automatically with Grounding DINO.

Without further ado, let’s get started!

Step 1: Install CVevals

Before we can evaluate prompts, we need to install CVevals. CVevals is available as a repository on GitHub that you can install on your local machine.

To install CVevals, execute the following lines of code in your terminal:

git clone https://github.com/roboflow/evaluations.git

cd evaluations

pip install -r requirements.txt

pip install -e .

Since we’ll be working with Grounding DINO in this post, we’ll need to install the model. We have a helper script that installs and configures Grounding DINO in the cvevals repository. Run the following commands in the root cvevals folder to install the model:

chmod +x ./dinosetup.sh

./dinosetup.sh

Step 2: Configure an Evaluator

The CVevals repository comes with a range of examples for evaluating computer vision models. Out of the box, we have comparisons for evaluating prompts for CLIP and Grounding DINO. You can also customize our CLIP comparison to work with BLIP, ALBEF, and BLIPv2.

For this tutorial, we’ll focus on Grounding DINO, a zero-shot object detection model, since our task relates to object detection. To learn more about Grounding DINO, check out our deep dive into Grounding DINO on YouTube.

Open up the examples/dino_compare_example.py file and scroll down to the section where a variable called `evals` is declared. The variable looks like this by default:

evals = [
    {"classes": [{"ground_truth": "", "inference": ""}], "confidence": 0.5},
]

Ground truth should be exactly equal to the name of the label in our dataset. Inference is the prompt we want to run on Grounding DINO.

Note that you can only evaluate prompts for one class at a time using this script.
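A malformed entry is easy to miss in a long list, so a quick sanity check before a long run can save time. The following is a minimal sketch and not part of CVevals: `validate_evals` is a hypothetical helper that assumes the dictionary layout shown above, and it reads the one-class-at-a-time constraint as "one class pair per entry, and the same ground truth label across entries".

```python
def validate_evals(evals):
    """Return a list of human-readable problems found in an evals list.

    Hypothetical helper (not part of CVevals); assumes the dictionary
    layout used in examples/dino_compare_example.py.
    """
    problems = []
    ground_truths = set()
    for index, entry in enumerate(evals):
        classes = entry.get("classes", [])
        # Assumption: each entry carries exactly one ground truth/prompt pair.
        if len(classes) != 1:
            problems.append(f"entry {index}: expected 1 class, found {len(classes)}")
            continue
        pair = classes[0]
        if pair.get("ground_truth"):
            ground_truths.add(pair["ground_truth"])
        else:
            problems.append(f"entry {index}: ground_truth is empty")
        if not pair.get("inference"):
            problems.append(f"entry {index}: inference prompt is empty")
        confidence = entry.get("confidence")
        if not isinstance(confidence, (int, float)) or not 0 <= confidence <= 1:
            problems.append(f"entry {index}: confidence should be between 0 and 1")
    # Assumption: all entries should target the same dataset label.
    if len(ground_truths) > 1:
        problems.append(f"more than one ground truth label: {sorted(ground_truths)}")
    return problems


default_evals = [
    {"classes": [{"ground_truth": "", "inference": ""}], "confidence": 0.5},
]
for problem in validate_evals(default_evals):
    print(problem)
```

Run against the default value, the check reports the two empty fields, which is a reminder to fill them in before starting a comparison.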

We’re going to experiment with five prompts:

- player

- a sports player

- referee

- basketball player

- person

For each prompt, we need to set a confidence level that must be met in order for a prediction to be processed. For this example, we’ll set a value of 0.5. However, we can use this parameter later to figure out how increasing and decreasing the confidence level affects the predictions returned by Grounding DINO.
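One way to explore the effect of the confidence level is to evaluate the same prompt at several thresholds. The sketch below is an illustration only: `confidence_sweep` is a hypothetical helper, the thresholds are arbitrary, and it assumes the entry layout used by the `evals` variable. It makes no assumption about how the script labels entries that share a prompt in its output.

```python
def confidence_sweep(ground_truth, prompt, thresholds):
    """Build one evals entry per confidence threshold for a single prompt.

    Hypothetical helper (not part of CVevals).
    """
    return [
        {
            "classes": [{"ground_truth": ground_truth, "inference": prompt}],
            "confidence": threshold,
        }
        for threshold in thresholds
    ]


# Illustrative thresholds; pick values that suit your dataset.
sweep_evals = confidence_sweep("Player", "basketball player", [0.3, 0.4, 0.5, 0.6, 0.7])
for entry in sweep_evals:
    print(entry["confidence"], entry["classes"][0]["inference"])
```

The resulting list could be assigned to `evals` in place of a hand-written one when you want to study thresholds rather than prompts.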

Let’s replace the default evals with the ones we specified above:

evals = [
    {"classes": [{"ground_truth": "Player", "inference": "player"}], "confidence": 0.5},
    {"classes": [{"ground_truth": "Player", "inference": "a sports player"}], "confidence": 0.5},
    {"classes": [{"ground_truth": "Player", "inference": "referee"}], "confidence": 0.5},
    {"classes": [{"ground_truth": "Player", "inference": "basketball player"}], "confidence": 0.5},
    {"classes": [{"ground_truth": "Player", "inference": "person"}], "confidence": 0.5},
]

For reference, here is an example image in our validation dataset:

Step 3: Run the Comparison

To run a comparison, we need to retrieve the following pieces of information from our Roboflow account:

- Our Roboflow API key;

- The model ID associated with our model;

- The workspace ID associated with our model, and;

- Our model version number.

We have documented how to retrieve these values in our API documentation.

With the values listed above ready, we can start a comparison. To do so, we can run the Grounding DINO prompt comparison example that we edited in the last section with the following arguments:

python3 examples/dino_compare_example.py \
    --eval_data_path=<path_to_eval_data> \
    --roboflow_workspace_url=<workspace_url> \
    --roboflow_project_url=<project_url> \
    --roboflow_model_version=<model_version> \
    --config_path=<path_to_dino_config_file> \
    --weights_path=<path_to_dino_weights_file>

The config_path value should be GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py and the weights_path value should be GroundingDINO/weights/groundingdino_swint_ogc.pth, assuming you are running the script from the root cvevals folder and have Grounding DINO installed using the installation helper script above.

Here’s an example of a completed command:

python3 examples/dino_compare_example.py \
    --eval_data_path=/Users/james/src/webflow-scripts/evaluations/basketball-players \
    --roboflow_workspace_url=roboflow-universe-projects \
    --roboflow_project_url=basketball-players-fy4c2 \
    --roboflow_model_version=16 \
    --config_path=GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py \
    --weights_path=GroundingDINO/weights/groundingdino_swint_ogc.pth

In this example, we’re using the Basketball Players dataset on Roboflow Universe. You can evaluate any dataset on Roboflow Universe or any dataset associated with your Roboflow account.
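Because the command has six arguments and two of them are file paths that only resolve from the root cvevals folder, it can help to assemble and check the command before running it. The following is a small sketch under stated assumptions: `build_command` and `missing_paths` are hypothetical helpers (not part of CVevals), the script is assumed to be the examples/dino_compare_example.py file edited earlier, and the `basketball-players` data path is a placeholder. The sketch only prints the command; it does not run it.

```python
import shlex
from pathlib import Path


def build_command(eval_data_path, workspace_url, project_url, model_version,
                  config_path, weights_path,
                  script="examples/dino_compare_example.py"):
    """Assemble the comparison command as an argument list (hypothetical helper)."""
    return [
        "python3", script,
        f"--eval_data_path={eval_data_path}",
        f"--roboflow_workspace_url={workspace_url}",
        f"--roboflow_project_url={project_url}",
        f"--roboflow_model_version={model_version}",
        f"--config_path={config_path}",
        f"--weights_path={weights_path}",
    ]


def missing_paths(*paths):
    """Return the paths that do not exist relative to the current directory."""
    return [str(path) for path in paths if not Path(path).exists()]


config = "GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py"
weights = "GroundingDINO/weights/groundingdino_swint_ogc.pth"
command = build_command(
    "basketball-players",  # placeholder path to the evaluation data
    "roboflow-universe-projects",
    "basketball-players-fy4c2",
    16,
    config,
    weights,
)

for path in missing_paths(config, weights):
    print(f"Not found (are you in the root cvevals folder?): {path}")
print(shlex.join(command))
```

Once the printed command looks right, you could paste it into a terminal or pass the list to `subprocess.run`.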

Now we can run the command above. If you are running the command for the first time, you will be asked to log in to your Roboflow account if you have not already logged in using our Python package. The script will guide you through authenticating.

Once authenticated, this command will:

- Download your dataset from Roboflow;

- Run inference on each image in your validation dataset for each prompt you specified;

- Compare the ground truth from your Roboflow dataset with the Grounding DINO predictions, and;

- Return statistics about the performance of each prompt, and information on the best prompt as measured by the prompt that resulted in the highest F1 score.
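To make the last step concrete, here is a sketch of how an F1 score is derived from detection counts and how a best prompt could be picked from it. This uses the standard definitions of precision, recall, and F1; it is not CVevals' own code, and how CVevals matches predictions to ground truth boxes is not covered here. The prompt names and counts are made up purely for illustration and are not results from this post.

```python
def precision_recall_f1(true_positives, false_positives, false_negatives):
    """Compute precision, recall, and F1 from detection counts."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Made-up (TP, FP, FN) counts for two hypothetical prompts.
counts = {
    "prompt A": (80, 20, 20),
    "prompt B": (60, 10, 40),
}
scores = {prompt: precision_recall_f1(*c) for prompt, c in counts.items()}

# The best prompt is the one with the highest F1 score.
best_prompt = max(scores, key=lambda prompt: scores[prompt][2])

for prompt, (precision, recall, f1) in scores.items():
    print(f"{prompt}: precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
print("best:", best_prompt)
```

F1 is the harmonic mean of precision and recall, so a prompt that finds most objects but also produces many false detections is penalized, as is one that is precise but misses many objects.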

The length of time it takes for the Grounding DINO evaluation process to complete will depend on the number of images in your validation dataset, the number of prompts you are evaluating, whether you have a CUDA-enabled GPU available, and the specifications of your computer.
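For a rough sense of the workload before starting, you can count inference calls and check for a GPU. This is a sketch under assumptions: it treats the run as one inference call per image per prompt, the image count of 100 is a made-up figure, and the GPU check relies on PyTorch's `torch.cuda.is_available()`, returning `False` if PyTorch is not installed.

```python
def count_inference_calls(num_images, num_prompts):
    """Assume one inference call per image per prompt."""
    return num_images * num_prompts


def cuda_available():
    """Report whether PyTorch can see a CUDA-enabled GPU."""
    try:
        import torch  # optional here; only needed for this check
    except ImportError:
        return False
    return torch.cuda.is_available()


# 100 validation images is a made-up figure; 5 is the number of prompts in this post.
calls = count_inference_calls(num_images=100, num_prompts=5)
print(f"{calls} inference calls; CUDA available: {cuda_available()}")
```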

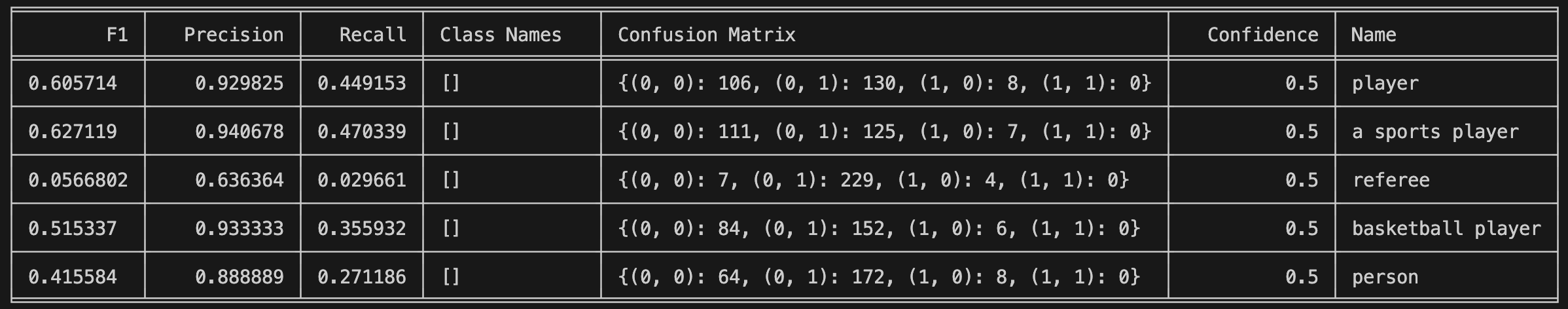

After some time, you will see a results table appear. Here are the results from our model:

With this information, we can see that “a sports player” is the best prompt out of the ones we tested, as measured by the prompt with the highest F1 score. They both perform equally. We could use this knowledge to automatically label some data in our dataset, knowing that it will perform well for our use case.

If your evaluation returns poor results across prompts, this may indicate:

- Your prompt is too specific for Grounding DINO to identify (a key limitation with zero-shot object detectors), or;

- You need to experiment with different prompts.

We now know we can run Grounding DINO with the “a sports player” prompt to automatically label some of our data. After doing so, we should review our annotations in an interactive annotation tool like Roboflow Annotate to ensure our annotations are accurate and to make any required changes.

You can learn more about how to annotate with Grounding DINO in our Grounding DINO guide.

Conclusion

In this guide, we have demonstrated how to compare zero-shot model prompts with the CVevals Python utility. We compared five Grounding DINO prompts on a basketball dataset to find the one that resulted in the highest F1 score.

CVevals has out-of-the-box support for evaluating CLIP and Grounding DINO prompts, and you can use existing code with some modification to evaluate BLIP, ALBEF, and BLIPv2 prompts. See the examples folder in CVevals for more example code.

Now you have the tools you need to find the best zero-shot prompt for your use case.

We have also written a guide showing how to evaluate the performance of custom Roboflow models using CVevals that we recommend reviewing if you are interested in diving deeper into model evaluation with CVevals.