Ultralytics recently released support for the Segment Anything Model (SAM), making it easier for users to perform tasks such as instance segmentation and text-to-mask predictions. Combining the power of YOLOv8 with SAM is extremely useful for turning bounding boxes into segmentation masks.

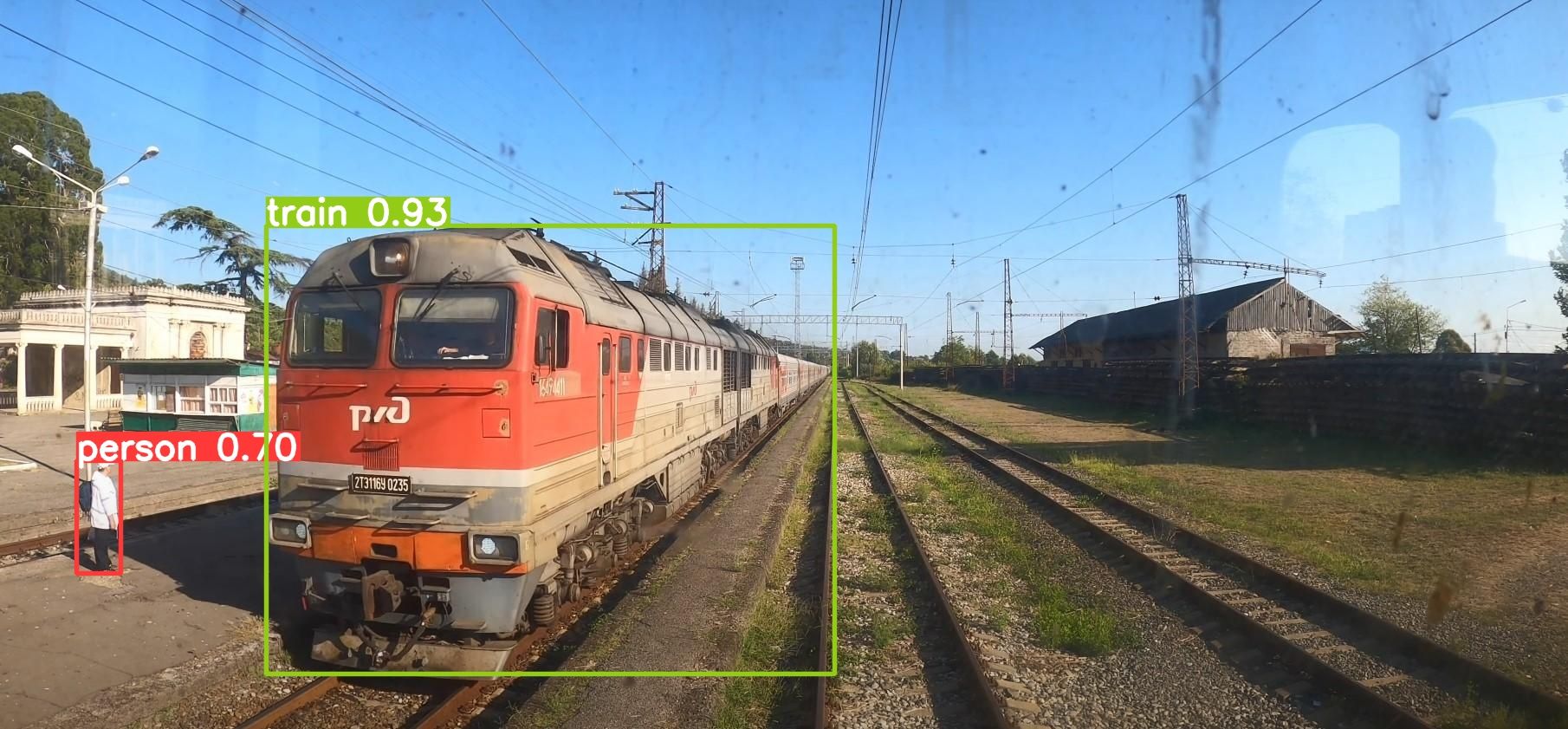

In the field of computer vision, object detection and instance segmentation are crucial tasks that enable machines to understand and interact with visual data. The ability to accurately identify and isolate objects in an image has numerous practical applications, from autonomous vehicles to medical imaging.

In this blog post, we'll explore how to convert bounding boxes to segmentation masks and remove the background of images using a Jupyter notebook with the help of Roboflow and Ultralytics YOLOv8.


Benefits of Segmentation Masks Instead of Bounding Boxes

Imagine you have a dataset of images containing objects of interest, with each image annotated with bounding boxes. While bounding boxes provide positional information about objects, they lack the fine details required for more advanced computer vision tasks like instance segmentation or background removal.

Converting bounding boxes to segmentation masks allows us to extract accurate object boundaries and separate objects from the background, opening up new opportunities for analysis and manipulation.
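To make the difference concrete, here is a minimal NumPy sketch (not part of the original notebook) that rasterizes a synthetic circular object and its tight bounding box, then measures how much of the box is actually background. The disk shape and the `disk_mask`/`box_mask` helpers are illustrative assumptions.

```python
import numpy as np

def disk_mask(h, w, cx, cy, r):
    """Binary mask of a filled circle centred at (cx, cy) with radius r (hypothetical helper)."""
    ys, xs = np.mgrid[0:h, 0:w]
    return (xs - cx) ** 2 + (ys - cy) ** 2 <= r ** 2

def box_mask(h, w, x0, y0, x1, y1):
    """Binary mask filling an axis-aligned box with inclusive pixel corners (hypothetical helper)."""
    mask = np.zeros((h, w), dtype=bool)
    mask[y0:y1 + 1, x0:x1 + 1] = True
    return mask

# A synthetic round "object" in a 200x200 image
obj = disk_mask(200, 200, cx=100, cy=100, r=60)

# Tight bounding box of the object, as pixel corners (x_min, y_min, x_max, y_max)
ys, xs = np.nonzero(obj)
x0, y0, x1, y1 = xs.min(), ys.min(), xs.max(), ys.max()
box = box_mask(200, 200, x0, y0, x1, y1)

# Share of the box area that does not belong to the object
background_fraction = 1 - obj.sum() / box.sum()
print(f"{background_fraction:.1%} of the box is background")
```

Even for this simple convex shape, roughly a fifth of the box is background; a mask keeps only the object's pixels.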

Using Roboflow, YOLOv8, and SAM to Create Instance Segmentation Datasets

To tackle the challenge of converting bounding boxes to segmentation masks, we'll use the Roboflow and Ultralytics libraries, together with Meta AI's segment-anything package, within a Jupyter notebook environment. Roboflow simplifies data preparation and annotation, while Ultralytics provides state-of-the-art object detection models and utilities.

Setting Up the Notebook

First, install the required packages:

```bash
pip install roboflow ultralytics 'git+https://github.com/facebookresearch/segment-anything.git'
```

Next, we import the necessary packages and set up the notebook environment. The code snippet below demonstrates the initial setup:

```python
import ultralytics
from IPython.display import display, Image
from roboflow import Roboflow
import cv2
import sys
import numpy as np
import matplotlib.pyplot as plt

# Set the device for GPU acceleration
device = "cuda"

# Check the Ultralytics version and that setup completed
ultralytics.checks()

# Keep first_run = True for the first run (installs SAM and downloads the
# ViT-H checkpoint), then set it to False for later runs
first_run = True
if first_run:
    !{sys.executable} -m pip install 'git+https://github.com/facebookresearch/segment-anything.git'
    !wget https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth
```
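The `first_run` flag has to be flipped by hand. A possible alternative, sketched here as an assumption rather than taken from the original notebook, is to download the SAM checkpoint only when the file is missing. `ensure_checkpoint` is a hypothetical helper; it reuses the checkpoint URL above and the standard library's `urllib.request.urlretrieve`, which is passed in as a parameter so it can be swapped out.

```python
import os
import urllib.request

SAM_URL = "https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth"

def ensure_checkpoint(path, url=SAM_URL, download=urllib.request.urlretrieve):
    """Download `url` to `path` unless the file already exists (hypothetical helper).

    Returns True if a download happened, False if the file was already there.
    """
    if os.path.exists(path):
        return False
    # Download to a temporary name first so an interrupted transfer
    # does not leave a truncated file that looks like a finished checkpoint
    tmp_path = path + ".part"
    download(url, tmp_path)
    os.replace(tmp_path, path)
    return True

# In the notebook (downloads a multi-gigabyte file on the first call only):
# ensure_checkpoint("sam_vit_h_4b8939.pth")
```

Because the check is based on the file itself, the same cell can be re-run safely without editing a flag.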

Loading the Dataset

Next, load a dataset with the Roboflow API, which handles accessing and managing datasets. The following code snippet demonstrates how to download a specific version of a project in YOLOv8 format:

```python
from roboflow import Roboflow

rf = Roboflow(api_key="YOUR_API_KEY")
project = rf.workspace("vkr-v2").project("vkrrr")
dataset = project.version(5).download("yolov8")
```

Running YOLOv8 Inference

To perform object detection with YOLOv8, we run the following code:

```python
from ultralytics import YOLO

# Load the pretrained YOLOv8 nano model
model = YOLO('yolov8n.pt')

# Run object detection on the image, keeping detections with confidence above 0.25
results = model.predict(source='PATH_TO_IMAGE', conf=0.25)
```
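If your images already carry box annotations, as in the dataset downloaded above, you could also use those boxes as SAM prompts instead of YOLOv8 predictions. YOLOv8-format exports store one `.txt` file per image, with one `class x_center y_center width height` line per object, all normalized to the range 0 to 1. The sketch below uses a hypothetical helper, not part of the Roboflow or Ultralytics APIs, to convert such lines into the pixel `[x_min, y_min, x_max, y_max]` layout that SAM's box prompt expects.

```python
def yolo_labels_to_xyxy(label_text, img_w, img_h):
    """Convert YOLO label lines to (class_id, [x0, y0, x1, y1]) in pixels (hypothetical helper).

    Each line is "class x_center y_center width height", with coordinates
    normalized by the image width and height.
    """
    boxes = []
    for line in label_text.strip().splitlines():
        parts = line.split()
        if len(parts) != 5:
            # Skip blank lines and polygon (segmentation) rows
            continue
        class_id = int(parts[0])
        cx, cy, w, h = (float(v) for v in parts[1:])
        x0 = (cx - w / 2) * img_w
        y0 = (cy - h / 2) * img_h
        x1 = (cx + w / 2) * img_w
        y1 = (cy + h / 2) * img_h
        boxes.append((class_id, [x0, y0, x1, y1]))
    return boxes

# One object centred in a 640x480 image, spanning half of each side
example = yolo_labels_to_xyxy("0 0.5 0.5 0.5 0.5", img_w=640, img_h=480)
print(example)  # → [(0, [160.0, 120.0, 480.0, 360.0])]
```

Each returned box can then be wrapped in `np.array(...)` and passed to SAM the same way as the YOLOv8 box below.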

Once we have the results from YOLOv8, we can extract the bounding box coordinates for the detected objects:

```python
# predict() returns one Results object per image; we passed a single image
result = results[0]
boxes = result.boxes

# xyxy holds [x_min, y_min, x_max, y_max] in pixels; take the first detection
bbox = boxes.xyxy.tolist()[0]
print(bbox)
```

This prints:

```
[746.568603515625, 40.80133056640625, 1142.08056640625, 712.3660888671875]
```

Convert Bounding Box to Segmentation Mask using SAM

Let's load the SAM model and set it up for inference:

```python
from segment_anything import sam_model_registry, SamAutomaticMaskGenerator, SamPredictor

# Read the image and convert it from OpenCV's BGR channel order to RGB
image = cv2.cvtColor(cv2.imread('PATH_TO_IMAGE'), cv2.COLOR_BGR2RGB)

sam_checkpoint = "sam_vit_h_4b8939.pth"
model_type = "vit_h"

# Build the ViT-H SAM model from the downloaded checkpoint and move it to the device
sam = sam_model_registry[model_type](checkpoint=sam_checkpoint)
sam.to(device=device)

# Compute the image embedding once so that prompts can be evaluated against it
predictor = SamPredictor(sam)
predictor.set_image(image)
```

The following helper functions draw masks, points, and boxes with Matplotlib:

```python
def show_mask(mask, ax, random_color=False):
    # Semi-transparent overlay: a random color or the default blue, with alpha 0.6
    if random_color:
        color = np.concatenate([np.random.random(3), np.array([0.6])], axis=0)
    else:
        color = np.array([30/255, 144/255, 255/255, 0.6])
    h, w = mask.shape[-2:]
    mask_image = mask.reshape(h, w, 1) * color.reshape(1, 1, -1)
    ax.imshow(mask_image)

def show_points(coords, labels, ax, marker_size=375):
    # Positive points (label 1) in green, negative points (label 0) in red
    pos_points = coords[labels == 1]
    neg_points = coords[labels == 0]
    ax.scatter(pos_points[:, 0], pos_points[:, 1], color='green', marker='*', s=marker_size, edgecolor='white', linewidth=1.25)
    ax.scatter(neg_points[:, 0], neg_points[:, 1], color='red', marker='*', s=marker_size, edgecolor='white', linewidth=1.25)

def show_box(box, ax):
    # Convert [x0, y0, x1, y1] to an origin plus width and height for Rectangle
    x0, y0 = box[0], box[1]
    w, h = box[2] - box[0], box[3] - box[1]
    ax.add_patch(plt.Rectangle((x0, y0), w, h, edgecolor='green', facecolor=(0, 0, 0, 0), lw=2))
```

Next, let's convert the bounding box coordinates to a segmentation mask using the SAM model:

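```python
# SamPredictor expects the box prompt as a NumPy array in XYXY pixel format
input_box = np.array(bbox)

masks, _, _ = predictor.predict(
    point_coords=None,
    point_labels=None,
    box=input_box[None, :],
    multimask_output=False,
)

# Overlay the predicted mask and the prompt box on the image
plt.figure(figsize=(10, 10))
plt.imshow(image)
show_mask(masks[0], plt.gca())
show_box(input_box, plt.gca())
plt.axis('off')
plt.show()
```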
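Before trusting a generated mask, for example when building an instance segmentation dataset, it can help to check that it actually fills the prompt box. One possible check, sketched below under the assumption that the mask is a 2-D boolean array like `masks[0]`, derives the mask's tight bounding box and computes its intersection over union (IoU) with the prompt box. `mask_to_xyxy`, `box_iou`, and any acceptance threshold you choose are hypothetical, not part of the SAM API.

```python
import numpy as np

def mask_to_xyxy(mask):
    """Tight [x0, y0, x1, y1] box around the True pixels of a 2-D mask (hypothetical helper).

    x1/y1 are one past the last pixel, matching continuous box coordinates.
    Returns None for an empty mask.
    """
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    return [float(xs.min()), float(ys.min()), float(xs.max() + 1), float(ys.max() + 1)]

def box_iou(a, b):
    """Intersection over union of two [x0, y0, x1, y1] boxes (hypothetical helper)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Synthetic check: a mask covering rows 20-59 and columns 10-49
demo_mask = np.zeros((100, 100), dtype=bool)
demo_mask[20:60, 10:50] = True
demo_box = [10.0, 20.0, 50.0, 60.0]
print(mask_to_xyxy(demo_mask), box_iou(mask_to_xyxy(demo_mask), demo_box))
```

In the notebook, `box_iou(mask_to_xyxy(masks[0]), bbox)` would give a single score per mask; a low value suggests that SAM segmented only part of the object or spilled outside the box.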

BONUS: Background Removal

Finally, we can remove the background from the image using the segmentation mask. The following code snippet demonstrates the process:

```python
segmentation_mask = masks[0]

# Convert the segmentation mask to a binary (0/1) mask
binary_mask = np.where(segmentation_mask > 0.5, 1, 0)

# Create a white background with the same shape as the image
white_background = np.ones_like(image) * 255

# Keep image pixels inside the mask and white pixels outside it
new_image = white_background * (1 - binary_mask[..., np.newaxis]) + image * binary_mask[..., np.newaxis]

plt.imshow(new_image.astype(np.uint8))
plt.axis('off')
plt.show()
```

The resulting image shows the detected object on a plain white background.
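A white background is only one option. To place the cut-out on a transparent background instead, one approach (a sketch, not taken from the original post) is to append the mask as an alpha channel, producing an RGBA array. It assumes `image` is an RGB `uint8` array and the mask is a boolean array with the same height and width, as in the code above. `cutout_rgba` is a hypothetical helper.

```python
import numpy as np

def cutout_rgba(image, mask):
    """Return an RGBA image with alpha 255 inside the mask and 0 elsewhere (hypothetical helper)."""
    if image.shape[:2] != mask.shape:
        raise ValueError("mask must match the image height and width")
    alpha = mask.astype(np.uint8) * 255
    # Stack the alpha plane behind the three color channels: (H, W, 3) -> (H, W, 4)
    return np.dstack([image, alpha])

# Tiny synthetic example: a 2x2 red image in which only the top-left pixel is kept
demo_image = np.zeros((2, 2, 3), dtype=np.uint8)
demo_image[..., 0] = 255
demo_mask = np.array([[True, False], [False, False]])
rgba = cutout_rgba(demo_image, demo_mask)
print(rgba.shape, rgba[..., 3].tolist())  # → (2, 2, 4) [[255, 0], [0, 0]]
```

In the notebook this would be `cutout_rgba(image, masks[0])`. Note that saving the result with OpenCV would first require reordering the channels with `cv2.cvtColor(rgba, cv2.COLOR_RGBA2BGRA)` and writing a format that supports alpha, such as PNG.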

Conclusion

In this blog post, we have explored how to convert bounding boxes to segmentation masks and remove an image's background using a Jupyter notebook.

By combining Roboflow for dataset management, Ultralytics YOLOv8 for object detection, and SAM for mask generation, we can detect objects, generate segmentation masks, and manipulate images with ease. This opens up possibilities for more advanced computer vision tasks, such as building instance segmentation datasets and removing backgrounds, and helps us extract valuable insights from visual data.