Introduction

Image matting technology is a computer vision and image processing technique that separates the foreground objects from the background in an image. The goal of image matting is to accurately compute the transparency, or alpha value, of each pixel in the image, indicating how much of the pixel belongs to the foreground and how much to the background.
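The role of alpha can be made concrete with the standard compositing equation I = α·F + (1 - α)·B, where F is the foreground color, B the background color, and α in [0, 1] the per-pixel opacity. Matting is the inverse problem: recovering α (and F) from the observed image I. The NumPy sketch below shows only the forward direction; the function name `composite` is our own and not part of any library.

```python
import numpy as np

def composite(foreground: np.ndarray, background: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """Blend two (H, W, 3) float images using an (H, W) alpha matte in [0, 1].

    Implements I = alpha * F + (1 - alpha) * B for every pixel.
    """
    a = alpha[..., None]  # add a channel axis so alpha broadcasts over RGB
    return a * foreground + (1.0 - a) * background

# Toy 1x3 image: an opaque, a half-transparent, and a fully transparent pixel
fg = np.ones((1, 3, 3))    # white foreground
bg = np.zeros((1, 3, 3))   # black background
alpha = np.array([[1.0, 0.5, 0.0]])
blended = composite(fg, bg, alpha)
print(blended[0, :, 0])    # red channel of each pixel: 1.0, 0.5, 0.0
```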

Various applications commonly use image matting, including image and video editing, which require precise object extraction. It makes it possible to create realistic composites, seamlessly integrating objects from one image into another while preserving intricate details like hair or fur. Advanced image matting methods often rely on machine learning and deep learning models to improve the accuracy of the matting process. These models analyze the image's visual characteristics, including color, texture, and lighting, to estimate the alpha values and separate foreground and background elements.

Image matting involves accurately estimating the foreground object in an image. People use this technique in various applications, one notable example being the background blur effect during video calls or when capturing portrait selfies on smartphones. ViTMatte is the latest addition to the 🤗 Transformers library, a state-of-the-art model designed for image matting. ViTMatte uses a vision transformer (ViT) as its backbone, complemented by a lightweight decoder, which makes it capable of distinguishing intricate details such as individual hairs.
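Background blur, the video-call example mentioned above, can be sketched once an alpha matte is available: each pixel of the frame is blended with a blurred copy of the frame, weighted by alpha. The NumPy sketch below is illustrative only. The helper names `box_blur` and `blur_background` are hypothetical, the matte is assumed to come from a model such as ViTMatte, and the simple box blur stands in for the Gaussian blur a real application would more likely use.

```python
import numpy as np

def box_blur(img: np.ndarray, radius: int) -> np.ndarray:
    """Mean filter over a (2r+1) x (2r+1) window for an (H, W, C) float image."""
    k = 2 * radius + 1
    padded = np.pad(img, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    # Vertical pass via cumulative sums: each output row averages k padded rows
    c = np.cumsum(padded, axis=0)
    c = np.concatenate([np.zeros_like(c[:1]), c], axis=0)
    rows = (c[k:] - c[:-k]) / k
    # Horizontal pass: the same trick along the width axis
    c = np.cumsum(rows, axis=1)
    c = np.concatenate([np.zeros_like(c[:, :1]), c], axis=1)
    return (c[:, k:] - c[:, :-k]) / k

def blur_background(image: np.ndarray, alpha: np.ndarray, radius: int = 7) -> np.ndarray:
    """Keep the foreground sharp and blur whatever the matte marks as background."""
    blurred = box_blur(image, radius)
    a = alpha[..., None]  # (H, W) -> (H, W, 1) so it broadcasts over channels
    return a * image + (1.0 - a) * blurred

# Synthetic frame with a rectangular "person" matte
rng = np.random.default_rng(0)
frame = rng.random((48, 64, 3))
matte = np.zeros((48, 64))
matte[12:36, 16:48] = 1.0
result = blur_background(frame, matte, radius=5)
```

Pixels with alpha = 1 come through unchanged, pixels with alpha = 0 are replaced by their blurred version, and soft matte values in between (such as along hair) produce a gradual transition.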

Learning Objectives

In this article, we'll delve into:

- The ViTMatte model and a demonstration of it for image matting.

- A step-by-step guide using code.

This article was published as a part of the Data Science Blogathon.


Understanding the ViTMatte Model

ViTMatte's architecture is built upon a vision transformer (ViT), which serves as the model's backbone. The key advantage of this design is that the backbone does the heavy lifting and benefits from large-scale self-supervised pre-training, which results in high performance for image matting. ViTMatte was introduced in the paper "ViTMatte: Boosting Image Matting with Pretrained Plain Vision Transformers" by Jingfeng Yao, Xinggang Wang, Shusheng Yang, and Baoyuan Wang. The model uses plain Vision Transformers (ViTs) to tackle image matting, which involves accurately estimating the foreground object in images and videos.

The main contributions of ViTMatte, as stated in the paper's abstract, are as follows:

- Hybrid Attention Mechanism: It uses a hybrid attention mechanism combined with a convolutional “neck.” This approach helps ViTs strike a balance between performance and computation in matting.

- Detail Capture Module: To better capture the details crucial for matting, ViTMatte introduces a detail capture module. This module consists of lightweight convolutions that complement the detailed information matting requires.
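The hybrid attention idea can be illustrated with a toy NumPy sketch. In window attention, each patch token attends only to tokens in its own local window; in global attention, every token attends to every other token. Keeping most blocks windowed and only a few global reduces computation. This is not ViTMatte's code: the attention below has no learned projections, and the 8x8 window and the "last block of every group of three" schedule for a 12-block backbone are assumptions chosen for illustration.

```python
from typing import List, Optional

import numpy as np

def window_partition(tokens: np.ndarray, window: int) -> np.ndarray:
    """Split an (H, W, C) token grid into (num_windows, window * window, C)."""
    h, w, c = tokens.shape
    assert h % window == 0 and w % window == 0, "grid must divide into windows"
    x = tokens.reshape(h // window, window, w // window, window, c)
    x = x.transpose(0, 2, 1, 3, 4)  # bring the two window-index axes together
    return x.reshape(-1, window * window, c)

def self_attention(x: np.ndarray) -> np.ndarray:
    """Softmax attention over the token axis, batched over the leading axis.

    Q, K and V are the tokens themselves (no learned projections) to keep it short.
    """
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(x.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ x

def attention_pairs(grid_h: int, grid_w: int, window: Optional[int]) -> int:
    """Query-key pairs scored by one block: global attention if window is None."""
    n = grid_h * grid_w
    if window is None:
        return n * n
    per_window = window * window
    return (n // per_window) * per_window ** 2

# Hypothetical 12-block schedule: global attention in the last block of each group of 3
schedule: List[str] = ["global" if (i + 1) % 3 == 0 else "window" for i in range(12)]

# A 512x512 input with 16x16 patches gives a 32x32 token grid
tokens = np.random.default_rng(0).standard_normal((32, 32, 8))
local = self_attention(window_partition(tokens, 8))   # 16 windows of 64 tokens each
full = self_attention(tokens.reshape(1, -1, 8))       # one "window" of all 1024 tokens
print(attention_pairs(32, 32, 8), attention_pairs(32, 32, None))  # 65536 1048576
```

Under these assumed numbers, a windowed block scores 16 times fewer query-key pairs than a global one, which is why only a handful of global blocks are kept to let information flow across the whole image.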

ViTMatte inherits several traits from ViTs, including various pretraining strategies, a streamlined architectural design, and flexible inference strategies.

State-of-the-Art Performance

The model was evaluated on Composition-1k and Distinctions-646, two benchmarks for image matting. On both, ViTMatte achieved state-of-the-art performance and outperformed previous matting methods by a large margin. This underscores ViTMatte's potential in the field of image matting.

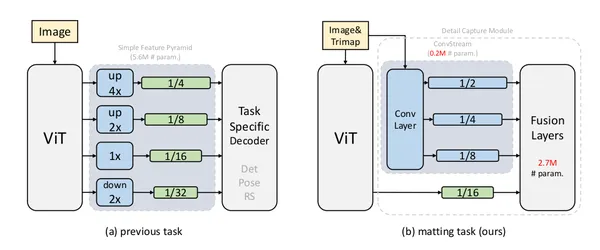

The diagram above, taken from the paper, shows an overview of ViTMatte and other applications of plain vision transformers. The previous approach used a simple feature pyramid designed by ViTDet. Figure (b) presents a new adaptation strategy for image matting called ViTMatte, which uses simple convolution layers to extract information from the image and makes use of the feature map generated by the vision transformer (ViT).
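The way the ViT feature map and the convolutional detail branch meet is easiest to see through the resolutions involved. The helper below is a hypothetical bookkeeping sketch based on our reading of the paper, not ViTMatte's code. It assumes 16x16 patches for the ViT, three stride-2 convolutions in the detail branch, and a decoder that upsamples the ViT features by 2x at each step, fusing them with the detail map of the same size and finishing at full resolution with the input itself.

```python
from typing import List, Tuple

def decoder_plan(height: int, width: int, patch: int = 16,
                 num_convs: int = 3) -> List[Tuple[str, Tuple[int, int]]]:
    """List (stage, resolution) pairs for a ViT backbone plus a conv detail branch."""
    assert height % patch == 0 and width % patch == 0, "pad the input first"
    # Detail branch: the input itself, then one map per stride-2 convolution
    details = [(height // 2 ** s, width // 2 ** s) for s in range(num_convs + 1)]
    h, w = height // patch, width // patch
    plan = [("vit_features", (h, w))]
    # Decoder: upsample 2x, then fuse with the detail map of the same size
    while (h, w) != (height, width):
        h, w = h * 2, w * 2
        assert (h, w) in details, "assumes patch == 2 ** (num_convs + 1)"
        plan.append((f"fuse@1/{height // h}", (h, w)))
    return plan

for stage, resolution in decoder_plan(512, 512):
    print(stage, resolution)
```

For a 512x512 input this lists a 32x32 ViT feature map followed by fusion steps at 64, 128, 256, and 512 pixels, which illustrates why the coarse ViT features alone are not enough and the convolutional branch supplies the fine detail.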

Practical Implementation

Let's dive into the practical implementation of ViTMatte. We will walk through the steps needed to use ViTMatte and harness its capabilities. We cannot proceed with this article without acknowledging Niels Rogge for his constant efforts for the HF family: ViTMatte's contribution to HF is attributed to Niels (https://huggingface.co/nielsr). The original code for ViTMatte can be found at https://github.com/hustvl/ViTMatte. Let us dive into the code!

Setting Up the Environment

To get started with ViTMatte, you first need to set up your environment. Find this tutorial's code here: https://github.com/inuwamobarak/ViTMatte. Begin by installing the 🤗 Transformers library, which includes the ViTMatte model. Use the following code for installation:

```
!pip install -q git+https://github.com/huggingface/transformers.git
```

Loading the Image and Trimap

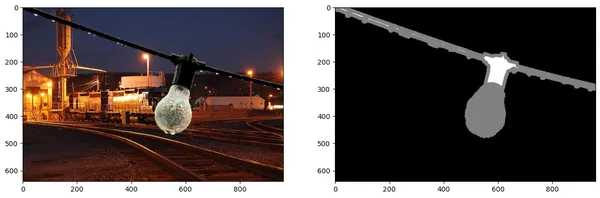

In image matting, we manually label a hint map known as a trimap, which marks the foreground in white, the background in black, and the unknown regions in gray. The ViTMatte model requires both the input image and the trimap to perform image matting.
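When no hand-drawn trimap is available, a common shortcut is to derive one from a rough binary mask (for example, the output of a segmentation model): erode the mask to get confident foreground, dilate it to bound the background, and mark the band in between as unknown. The NumPy sketch below uses the common 0/128/255 encoding; the function names and the `radius` parameter are hypothetical.

```python
import numpy as np

def dilate(mask: np.ndarray, radius: int) -> np.ndarray:
    """Binary dilation of an (H, W) bool mask with a (2r+1) x (2r+1) square."""
    h, w = mask.shape
    padded = np.pad(mask, radius, mode="constant", constant_values=False)
    out = np.zeros((h, w), dtype=bool)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out |= padded[dy:dy + h, dx:dx + w]  # OR over every shifted copy
    return out

def make_trimap(mask: np.ndarray, radius: int = 10) -> np.ndarray:
    """Build a 0/128/255 trimap from a binary foreground mask."""
    mask = mask.astype(bool)
    sure_fg = ~dilate(~mask, radius)   # erosion: pixels well inside the mask
    maybe_fg = dilate(mask, radius)    # anything within `radius` of the mask
    trimap = np.full(mask.shape, 128, dtype=np.uint8)  # unknown by default
    trimap[sure_fg] = 255              # white: definite foreground
    trimap[~maybe_fg] = 0              # black: definite background
    return trimap

mask = np.zeros((20, 20), dtype=bool)
mask[5:15, 5:15] = True
trimap = make_trimap(mask, radius=2)
```

The `radius` sets the width of the gray band; a wider band gives the model more room to resolve fine structures such as hair, at the cost of more uncertain pixels to estimate.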

The code below demonstrates how to load an image and its corresponding trimap:

```python
# Import necessary libraries
import matplotlib.pyplot as plt
from PIL import Image
import requests

# Load the image and its trimap
url = "https://github.com/hustvl/ViTMatte/blob/main/demo/bulb_rgb.png?raw=true"
image = Image.open(requests.get(url, stream=True).raw).convert("RGB")
url = "https://github.com/hustvl/ViTMatte/blob/main/demo/bulb_trimap.png?raw=true"
trimap = Image.open(requests.get(url, stream=True).raw).convert("L")  # single-channel trimap

# Display the image and trimap side by side
plt.figure(figsize=(15, 15))
plt.subplot(1, 2, 1)
plt.imshow(image)
plt.subplot(1, 2, 2)
plt.imshow(trimap, cmap="gray")
plt.show()
```

Loading the ViTMatte Model

Next, let's load the ViTMatte model and its processor from the 🤗 Hub. The processor handles the image preprocessing, while the model itself performs the image matting.
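To make the preprocessing step less opaque, here is a rough NumPy approximation of what a ViTMatte-style processor does: normalize the RGB image, rescale the trimap to [0, 1], stack the two into a 4-channel array, and pad the height and width up to a multiple of 32. This is a hypothetical sketch, not the source of `VitMatteImageProcessor`; the mean/std of 0.5 and the padding divisor of 32 are assumptions to check against the checkpoint's preprocessor configuration.

```python
import numpy as np

def prepare_inputs(image: np.ndarray, trimap: np.ndarray,
                   mean: float = 0.5, std: float = 0.5, divisor: int = 32) -> np.ndarray:
    """Approximate ViTMatte-style preprocessing (assumed values, not the library's code).

    image: (H, W, 3) uint8 RGB; trimap: (H, W) uint8 with 0/128/255 labels.
    Returns a (1, 4, H', W') float32 array with H' and W' rounded up to `divisor`.
    """
    rgb = (image.astype(np.float32) / 255.0 - mean) / std   # normalize the image
    tri = trimap.astype(np.float32)[..., None] / 255.0       # rescale trimap to [0, 1]
    x = np.concatenate([rgb, tri], axis=-1)                 # (H, W, 4)
    h, w = x.shape[:2]
    pad_h, pad_w = (-h) % divisor, (-w) % divisor           # round up to a multiple
    x = np.pad(x, ((0, pad_h), (0, pad_w), (0, 0)))         # zero-pad bottom and right
    return x.transpose(2, 0, 1)[None]                       # channels first, batch axis

batch = prepare_inputs(np.zeros((50, 70, 3), np.uint8), np.full((50, 70), 128, np.uint8))
print(batch.shape)  # (1, 4, 64, 96)
```

Comparing `batch.shape` with `processor(images=image, trimaps=trimap, return_tensors="pt").pixel_values.shape` for your own image is a quick way to test these assumptions.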

```python
# Import the ViTMatte classes
from transformers import VitMatteImageProcessor, VitMatteForImageMatting

# Load the processor and model
processor = VitMatteImageProcessor.from_pretrained("hustvl/vitmatte-small-distinctions-646")
model = VitMatteForImageMatting.from_pretrained("hustvl/vitmatte-small-distinctions-646")
```

Running a Forward Pass

Now that the image, the trimap, and the model are in place, let's run a forward pass to get the predicted alpha values. These alpha values represent the transparency of each pixel in the image.

# Import needed libraries

import torch # Carry out a ahead cross

with torch.no_grad(): outputs = mannequin(pixel_values) # Extract the alpha values

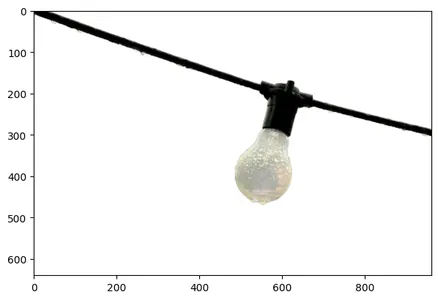

alphas = outputs.alphas.flatten(0, 2)Visualizing the Foreground

To visualize the foreground object, we can use the following code, which extracts the foreground from the image using the predicted alpha values and places it on a white background:

```python
# Import necessary libraries
from PIL import Image
from torchvision.transforms import functional as F

# Define a function that composites the foreground onto a white background
def cal_foreground(image: Image.Image, alpha: Image.Image):
    image = image.convert("RGB")
    alpha = alpha.convert("L")
    alpha = F.to_tensor(alpha).unsqueeze(0)  # (1, 1, H, W), values in [0, 1]
    image = F.to_tensor(image).unsqueeze(0)  # (1, 3, H, W), values in [0, 1]
    # Keep foreground pixels and fill the rest with white (1.0)
    foreground = image * alpha + (1 - alpha)
    foreground = foreground.squeeze(0).permute(1, 2, 0).numpy()  # (H, W, 3) for matplotlib
    return foreground

# Calculate and display the foreground
fg = cal_foreground(image, prediction)
plt.figure(figsize=(7, 7))
plt.imshow(fg)
plt.show()
```

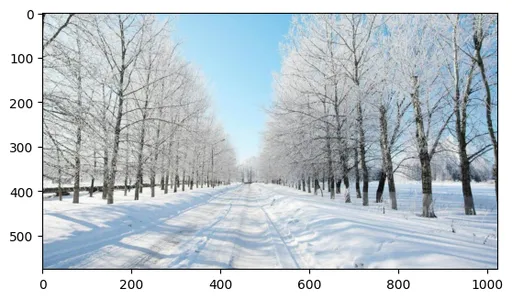
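Instead of flattening the foreground onto white, you can keep the matte as an alpha channel and save a transparent cutout. The helper below (a hypothetical name, not part of any library) works on NumPy arrays, so the PIL image and the predicted matte would first be converted with `np.asarray(image)` and `np.asarray(prediction)`.

```python
import numpy as np

def to_rgba(rgb: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """Attach an alpha matte to an RGB image as a fourth channel.

    rgb: (H, W, 3) uint8; alpha: (H, W) uint8 in 0-255 or float in [0, 1].
    """
    if alpha.dtype != np.uint8:
        # Map a float matte in [0, 1] to 0-255 with rounding and clipping
        alpha = np.clip(np.rint(alpha * 255.0), 0, 255).astype(np.uint8)
    if rgb.shape[:2] != alpha.shape:
        raise ValueError(f"size mismatch: {rgb.shape[:2]} vs {alpha.shape}")
    return np.dstack([rgb.astype(np.uint8), alpha])  # (H, W, 4)

cutout = to_rgba(np.zeros((2, 3, 3), np.uint8), np.array([[0.0, 0.5, 1.0], [1.0, 1.0, 1.0]]))
```

The result can be written to disk with `Image.fromarray(cutout).save("cutout.png")`, since PIL treats an (H, W, 4) uint8 array as an RGBA image.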

Background Replacement

One impressive use of image matting is replacing the background with a new one. The code below shows how to merge the predicted alpha matte with a new background image:

```python
# Load the new background image
url = "https://github.com/hustvl/ViTMatte/blob/main/demo/new_bg.jpg?raw=true"
background = Image.open(requests.get(url, stream=True).raw).convert("RGB")
plt.imshow(background)
plt.show()

# Define a function to merge the foreground with the new background
def merge_new_bg(image, background, alpha):
    image = image.convert("RGB")
    bg = background.convert("RGB")
    alpha = alpha.convert("L")
    image = F.to_tensor(image)                    # (3, H, W)
    bg = F.to_tensor(bg)
    bg = F.resize(bg, list(image.shape[-2:]))     # match the image's height and width
    alpha = F.to_tensor(alpha)                    # (1, H, W), broadcasts over channels
    new_image = image * alpha + bg * (1 - alpha)
    new_image = new_image.permute(1, 2, 0).numpy()  # (H, W, 3) for matplotlib
    return new_image

# Merge with the new background
new_image = merge_new_bg(image, background, prediction)
plt.figure(figsize=(7, 7))
plt.imshow(new_image)
plt.show()
```

Find the full code at https://github.com/inuwamobarak/ViTMatte, and don't forget to follow my GitHub. ViTMatte is a powerful addition to image matting, making it easier to accurately estimate foreground objects in images and videos. Video conferencing tools like Zoom could use this technology to remove backgrounds effectively.

Conclusion

ViTMatte is a groundbreaking addition to the world of image matting, making it easier than ever to accurately estimate foreground objects in images and videos. By leveraging pretrained vision transformers, ViTMatte delivers state-of-the-art results. By following the steps outlined in this article, you can harness ViTMatte's capabilities for better image matting and explore creative applications like background replacement. Whether you're a developer, a researcher, or simply curious about the latest advancements in computer vision, ViTMatte is a valuable tool to have.

Key Takeaways:

- ViTMatte is a model that uses plain Vision Transformers (ViTs) to excel at image matting, accurately estimating the foreground object in images and videos.

- ViTMatte incorporates a hybrid attention mechanism and a detail capture module to strike a balance between performance and computation, making it efficient and robust for image matting.

- ViTMatte has achieved state-of-the-art performance on benchmark datasets, outperforming previous image matting methods by a large margin.

- It inherits properties from ViTs, including pretraining strategies, architectural design, and flexible inference strategies.

Frequently Asked Questions

A1: Image matting is the process of accurately estimating the foreground object in images and videos. It is crucial for applications such as background blur in video calls and portrait photography.

A2: ViTMatte leverages plain Vision Transformers (ViTs) and innovative attention mechanisms to achieve state-of-the-art performance and adaptability in image matting.

A3: ViTMatte's key contributions include introducing ViTs to image matting, a hybrid attention mechanism, and a detail capture module. It inherits the strengths of ViTs and achieves state-of-the-art performance on benchmark datasets.

A4: ViTMatte was contributed to Hugging Face by Niels Rogge (nielsr), based on the work of Yao et al. (2023), and the original code can be found here: https://github.com/hustvl/ViTMatte.

A5: Image matting with ViTMatte opens up creative possibilities such as background replacement, artistic effects, and video conferencing features like virtual backgrounds. Developers and researchers can explore new ways of enhancing images and videos.


The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.

ViTMatte: Unveiling the Cutting-Edge in Image Matting Technology