Microsoft has unveiled the next in its suite of smaller, more nimble artificial intelligence (AI) models targeted at more specific use cases.

Earlier this year, Microsoft unveiled Phi-1, the first of what it calls small language models (SLMs); they have far fewer parameters than their large language model (LLM) predecessors. For example, the GPT-3 LLM, the basis for ChatGPT, has 175 billion parameters. GPT-4, OpenAI’s latest LLM, reportedly has about 1.7 trillion parameters. Phi-1 was followed by Phi-1.5, which, by comparison, has 1.3 billion parameters.

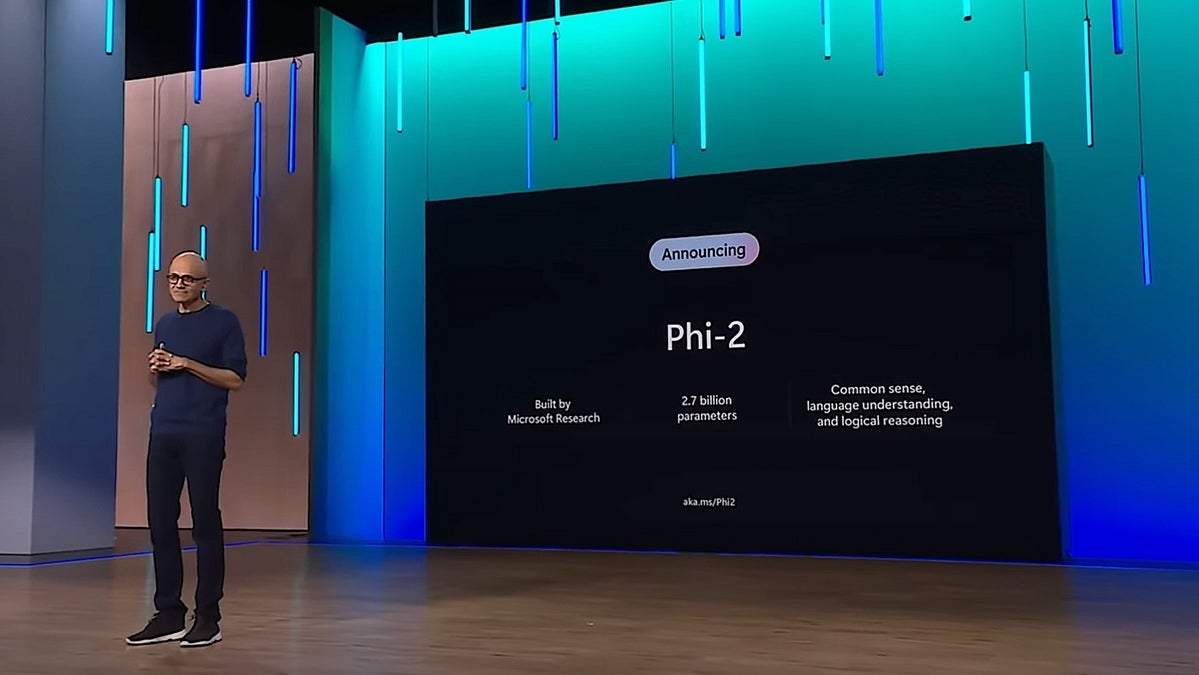

Phi-2 is a 2.7-billion-parameter language model that the company claims can outperform LLMs up to 25 times larger.
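A little arithmetic makes the size gap concrete. The sketch uses only the parameter counts cited in this article; the memory figures rest on an illustrative assumption (weights stored as 16-bit floats at 2 bytes per parameter, with activations, caches and other runtime overhead ignored), not on anything Microsoft has published.

```python
# Back-of-the-envelope size comparison using parameter counts cited in the article.
# ASSUMPTION: weights stored as fp16 (2 bytes each); runtime overhead is ignored.

BYTES_PER_PARAM_FP16 = 2

PARAMS = {
    "Phi-1.5": 1.3e9,
    "Phi-2": 2.7e9,
    "GPT-3": 175e9,
}


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Approximate memory needed just to hold the weights, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


# "Up to 25 times larger" than Phi-2, per Microsoft's claim.
largest_rival = 25 * PARAMS["Phi-2"]

for name, n in PARAMS.items():
    print(f"{name:8s} {n / 1e9:7.1f}B params  ~{weight_memory_gb(n):6.1f} GB of fp16 weights")
print(f"25x Phi-2 = {largest_rival / 1e9:.1f}B params (~{weight_memory_gb(largest_rival):.0f} GB)")
```

Under these assumptions, Phi-2's weights need roughly 5.4 GB, while a model 25 times larger (about 67.5 billion parameters) needs roughly 135 GB, which is the difference between fitting on a single consumer GPU and needing several data-center accelerators.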

Microsoft is a major investor in and partner of OpenAI, the developer of ChatGPT, which launched a little more than a year ago. Microsoft uses the technology behind ChatGPT as the basis for its Copilot generative AI assistant.

LLMs used for generative AI (genAI) applications such as ChatGPT or Bard can consume vast amounts of processor cycles and, because of their size, can be costly and time-consuming to train for specific use cases. Smaller, more industry- or business-focused models can often provide better results tailored to business needs.

“Eventually, scaling of GPU chips will fail to keep up with increases in model size,” said Avivah Litan, a vice president and distinguished analyst with Gartner Research. “So, continuing to make models bigger and bigger is not a viable option.”

Today, there’s a growing trend to shrink LLMs to make them more affordable and capable of being trained for domain-specific tasks, such as online chatbots for financial services clients or genAI applications that can summarize electronic healthcare records.

Smaller, more domain-specific language models trained on targeted data will eventually challenge the dominance of today’s leading LLMs, including OpenAI’s GPT-4, Meta AI’s Llama 2, and Google’s PaLM 2.

Dan Diasio, Ernst & Young’s Global Artificial Intelligence Consulting Leader, noted that there’s currently a backlog of GPU orders. A chip shortage creates problems not only for tech firms making LLMs, but also for user companies seeking to tweak models or build their own proprietary LLMs.

“As a result, the costs of fine-tuning and building a specialized corporate LLM are quite high, thus driving the trend toward knowledge enhancement packs and building libraries of prompts that contain specialized knowledge,” Diasio said.

Because Phi-2 is compact, Microsoft is pitching it as an “ideal playground for researchers,” including for exploration around mechanistic interpretability, safety improvements, or fine-tuning experimentation on a variety of tasks. Phi-2 is available in the Azure AI Studio model catalog.

“If we want AI to be adopted by every business, not just the billion-pound multinationals, then it needs to be cost-effective,” said Victor Botev, a former AI research engineer at Chalmers University and CTO and co-founder of start-up Iris.ai, which uses AI to accelerate scientific research.

The release of Microsoft’s Phi-2 is significant, Botev said. “Microsoft has managed to challenge traditional scaling laws with a smaller-scale model that focuses on ‘textbook-quality’ data. It’s a testament to the fact that there’s more to AI than just increasing the size of the model,” he said.

“While it’s unclear what data the model was trained on and how, there are several innovations that can allow models to do more with less.”

LLMs of all sizes are refined through processes such as fine-tuning, which feeds queries and the correct responses into the models so the algorithm can respond more accurately, and prompt engineering, the craft of wording inputs to get better outputs. Today, there are even marketplaces for lists of prompts, such as the 100 best prompts for ChatGPT.

But the more data ingested into LLMs, the greater the potential for bad and inaccurate outputs. GenAI tools are basically next-word predictors, meaning flawed information fed into them can yield flawed results. (LLMs have already made some high-profile mistakes and can produce “hallucinations,” where the next-word generation engines go off the rails and produce bizarre responses.)
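The "flawed in, flawed out" point can be illustrated with a deliberately tiny next-word predictor. Real LLMs use neural networks over subword tokens, not word counts, so this bigram model is only an illustrative stand-in, and the training sentences are made up for the example. It predicts whichever word most often followed the previous one in its training text, so repeated bad data outvotes good data.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    model: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            model[current][nxt] += 1
    return model


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training data: one correct sentence, then the same corpus
# polluted with a repeated error.
clean = ["the capital of france is paris"]
flawed = clean + ["the capital of france is lyon", "the capital of france is lyon"]

clean_model = train_bigram(clean)
flawed_model = train_bigram(flawed)

print(predict_next(clean_model, "is"))   # → paris
print(predict_next(flawed_model, "is"))  # → lyon
```

The model has no notion of truth; it simply reproduces the statistics of what it ingested, which is why curating training data matters as much as adding more of it.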

“If the data itself is well structured and promotes reasoning, there’s less scope for any model to hallucinate,” Botev said. “Coding language can also be used as the training data, as it’s more reason-based than text.

“We must use domain-specific, structured knowledge to make sure language models ingest, process, and reproduce information on a factual basis,” he continued. “Taking this further, knowledge graphs can assess and demonstrate the steps a language model takes to arrive at its outputs, essentially generating a possible chain of thought. Less room for interpretation in this training means models are more likely to be guided to factually accurate answers.

“Smaller models with high performance like Phi-2 represent the way forward.”
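Botev’s point about graphs of structured knowledge exposing the steps behind an answer can be sketched with a toy graph of facts taken from this article. This is not how any language model reasons internally, and the triple format and relation names are hypothetical; it only shows how an explicit graph makes each step of a "chain of thought" inspectable. A breadth-first search returns the sequence of facts linking two entities, or `None` if no chain exists.

```python
from __future__ import annotations

from collections import deque

# Hypothetical toy knowledge graph of (subject, relation, object) triples,
# built from facts stated in this article.
TRIPLES = [
    ("Phi-2", "developed_by", "Microsoft"),
    ("Microsoft", "partner_of", "OpenAI"),
    ("OpenAI", "developer_of", "ChatGPT"),
]


def build_graph(triples: list[tuple[str, str, str]]) -> dict[str, list[tuple[str, str]]]:
    """Index outgoing (relation, object) edges by subject."""
    graph: dict[str, list[tuple[str, str]]] = {}
    for subj, rel, obj in triples:
        graph.setdefault(subj, []).append((rel, obj))
    return graph


def explain_path(graph, start: str, goal: str) -> list[tuple[str, str, str]] | None:
    """Breadth-first search returning the chain of facts from start to goal, or None."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for rel, nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None


graph = build_graph(TRIPLES)
for subj, rel, obj in explain_path(graph, "Phi-2", "ChatGPT"):
    print(f"{subj} --{rel}--> {obj}")
```

Because every hop is a stored fact, a reviewer can check each link in the chain, which is the kind of traceability Botev argues reduces room for hallucination.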