Huawei’s MindSpore is an open-source deep learning framework for training and inference, written in C++. Notably, users can deploy the framework across mobile, edge, and cloud applications. Released under the Apache 2.0 license, MindSpore allows users to use, modify, and distribute the software.

MindSpore offers a comprehensive developer platform to develop, deploy, and scale artificial intelligence models. It lowers the barrier to entry by providing a unified programming interface, Python compatibility, and visual tools.

In this blog article, we’ll explore MindSpore in depth:

- Understanding the Architecture

- Reviewing Optimization Techniques

- Exploring Adaptability

- Ease of Development

- Upsides and Business Risks

About us: Viso Suite is the most powerful end-to-end computer vision platform. Our no-code solution enables teams to rapidly build real-world computer vision using the latest deep learning models out of the box. Book a demo.

What is MindSpore?

At its core, the MindSpore open-source project is a solution that combines ease of development with advanced capabilities. It accelerates AI research and prototype development. The integrated approach promotes collaboration, innovation, and responsible AI practices with deep learning algorithms.

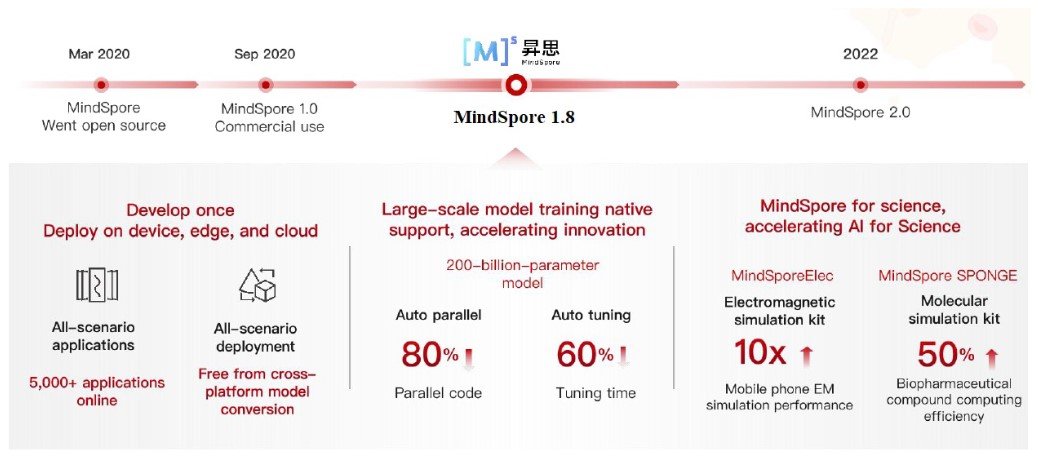

Today, MindSpore is widely used for research and prototyping projects across ML vision, NLP, and audio tasks. A key benefit is its all-scenario approach: develop once and deploy anywhere.

MindSpore Architecture: Understanding Its Core

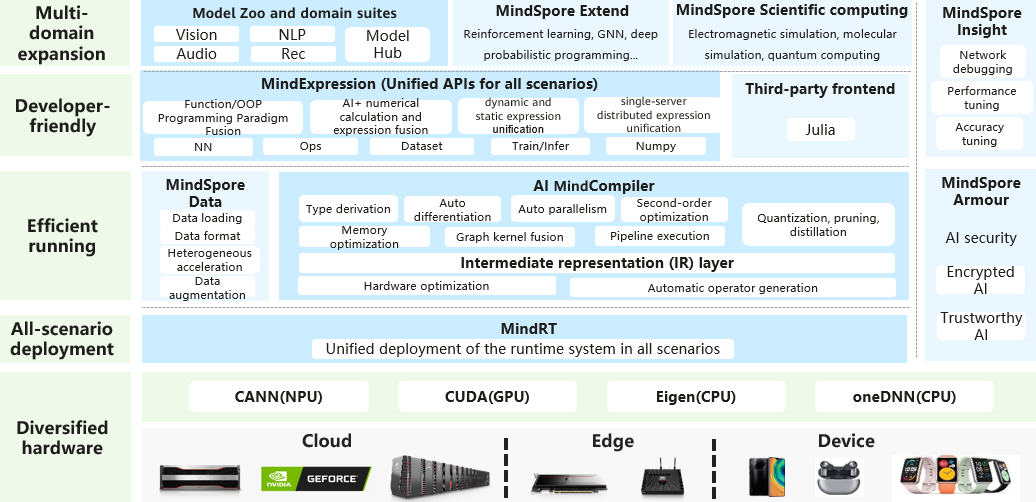

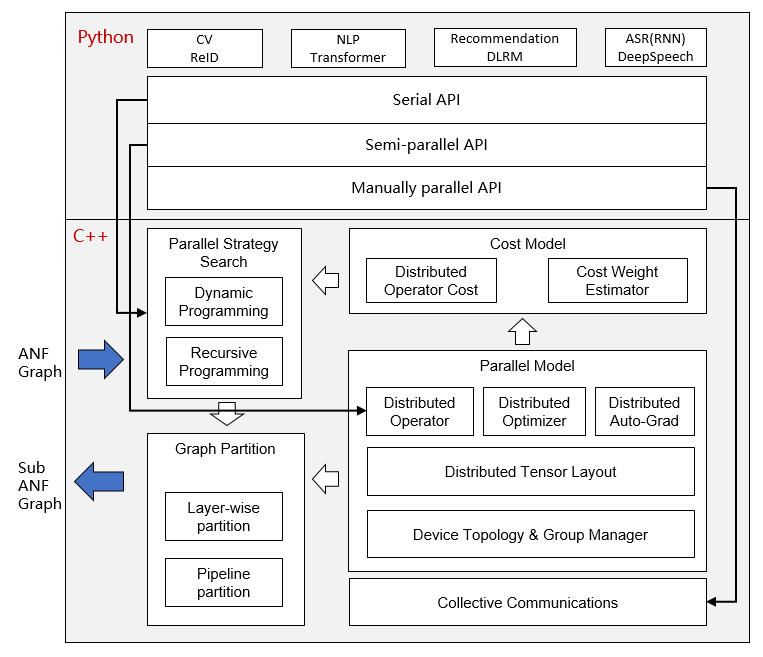

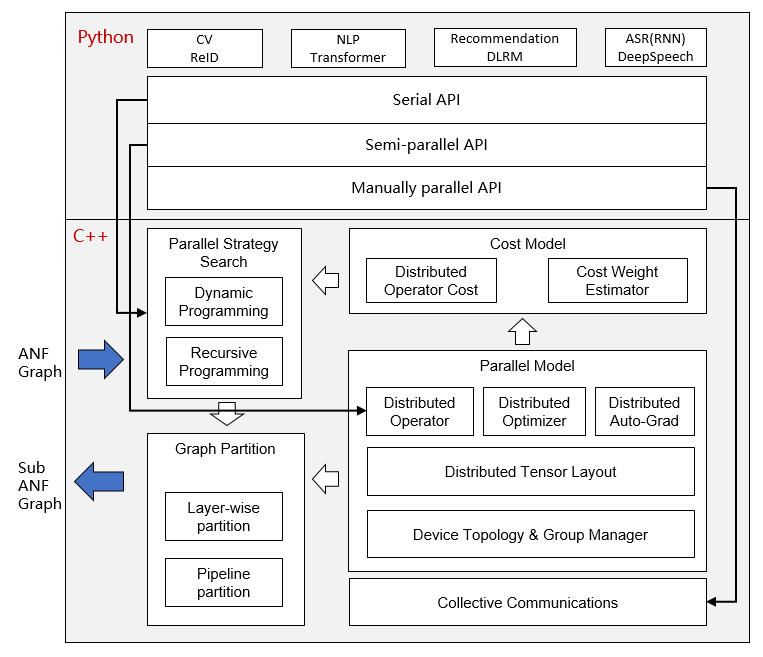

Huawei’s MindSpore has a modular and efficient architecture for neural network training and inference.

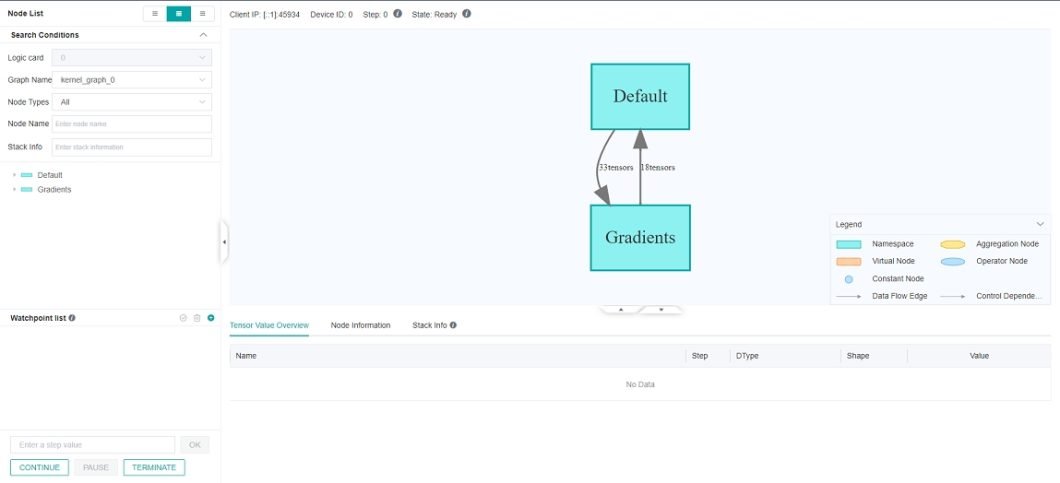

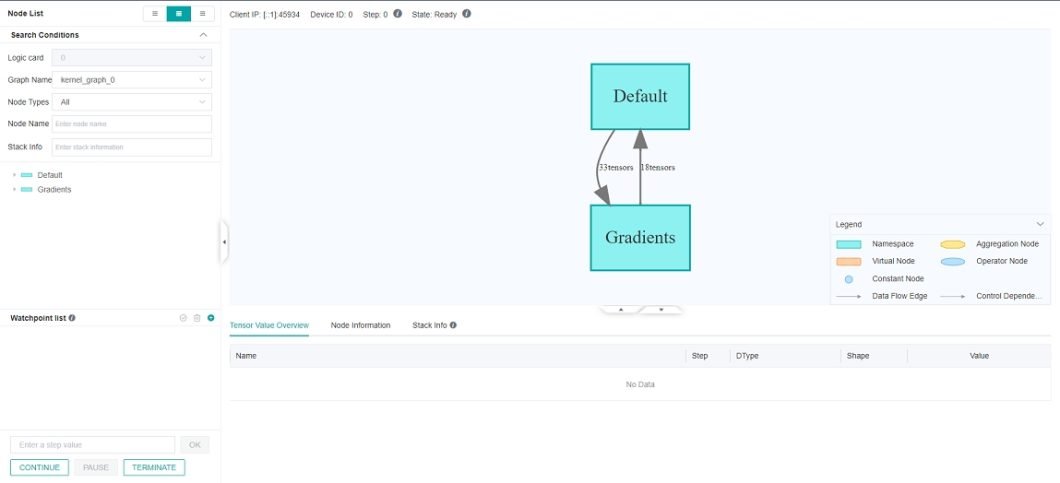

- Computational Graph. The Computational Graph is a dynamic and flexible representation of neural network operations. This graph forms the backbone of model execution, promoting flexibility and adaptability during the training and inference phases.

- Execution Engine. The Execution Engine translates the computational graph into actionable commands. With a focus on optimization, it ensures seamless and efficient execution of neural network tasks across AI hardware architectures.

- Operators and Kernels. The MindSpore architecture contains a library of operators and kernels, each optimized for specific hardware platforms. These components form the building blocks of neural network operations, contributing to the framework’s speed and efficiency.

- Model Parallelism. MindSpore implements an automatic parallelism approach that integrates data parallelism, model parallelism, and hybrid parallelism. Each operator is split across clusters, enabling efficient parallel operations without exposing users to complex implementation details. With a commitment to a Python-based development environment, users can focus on the top-level API while benefiting from automatic parallelism.

- Source Transformation (ST). Evolving from the functional programming framework, ST performs automatic differential transformation on the intermediate expression during the compilation process. Source Transformation supports complex control flow scenarios, higher-order functions, and closures.

Source Transformation

MindSpore uses a method called automatic differentiation based on Source Transformation (ST). This approach is specifically designed to enhance the performance of neural networks by enabling automatic differentiation of control flows and incorporating static compilation optimization. Simply put, it makes complex neural network computations more efficient.

The core of MindSpore’s automatic differentiation lies in its similarity to the symbolic differentiation found in basic algebra. It uses a system called Intermediate Representation (IR), which acts as a middle step in calculations. This representation mirrors the concept of composite functions in algebra, where each basic function in the IR corresponds to a primitive operation. This alignment allows MindSpore to construct and manage complex control flows in computations, akin to handling intricate algebraic functions.

To better understand this, consider how derivatives are calculated in basic algebra. MindSpore’s automatic differentiation, through the use of Intermediate Representations, simplifies the process of dealing with complex mathematical functions.

Each step in the IR corresponds to a fundamental algebraic operation, enabling the framework to efficiently handle more sophisticated and complex tasks. This makes MindSpore not only powerful for neural network optimization but also versatile in handling a wide range of complex computational scenarios.
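To make the analogy with symbolic differentiation concrete, here is a minimal sketch in plain Python. It is not MindSpore’s IR or API: the node classes (`Const`, `Var`, `Add`, `Mul`) and the helpers `diff` and `evaluate` are hypothetical names chosen for illustration. The point is only that a derivative can be produced by transforming one expression tree into another, one primitive operation at a time.

```python
from dataclasses import dataclass


# Hypothetical toy IR: each node type stands for one primitive operation.
@dataclass(frozen=True)
class Const:
    value: float


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Add:
    left: object
    right: object


@dataclass(frozen=True)
class Mul:
    left: object
    right: object


def diff(node, wrt):
    """Transform an expression tree into the tree of its derivative w.r.t. `wrt`."""
    if isinstance(node, Const):
        return Const(0.0)
    if isinstance(node, Var):
        return Const(1.0 if node.name == wrt else 0.0)
    if isinstance(node, Add):
        # Sum rule: (u + v)' = u' + v'
        return Add(diff(node.left, wrt), diff(node.right, wrt))
    if isinstance(node, Mul):
        # Product rule: (u * v)' = u' * v + u * v'
        return Add(Mul(diff(node.left, wrt), node.right),
                   Mul(node.left, diff(node.right, wrt)))
    raise TypeError(f"unsupported node: {node!r}")


def evaluate(node, env):
    """Evaluate an expression tree given variable values in `env`."""
    if isinstance(node, Const):
        return node.value
    if isinstance(node, Var):
        return env[node.name]
    if isinstance(node, Add):
        return evaluate(node.left, env) + evaluate(node.right, env)
    if isinstance(node, Mul):
        return evaluate(node.left, env) * evaluate(node.right, env)
    raise TypeError(f"unsupported node: {node!r}")


# f(x) = x * x + 3 * x, so f'(x) = 2 * x + 3
x = Var("x")
f = Add(Mul(x, x), Mul(Const(3.0), x))
df = diff(f, "x")

print(evaluate(f, {"x": 2.0}))   # 10.0
print(evaluate(df, {"x": 2.0}))  # 7.0
```

Because `diff` returns another tree rather than a number, the result can itself be optimized or differentiated again, which is the property that source-transformation approaches rely on.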

A key takeaway from MindSpore’s architecture is its modular design. This enables users to customize neural networks for a variety of tasks, from image recognition to natural language processing (NLP). This adaptability means that the framework can integrate into many environments, making it applicable to a wide range of computer vision applications.

Optimization Techniques

Optimization techniques are essential to MindSpore because they improve model performance and resource efficiency. For AI applications where computational demands are high, MindSpore’s optimization strategies help neural networks run smoothly, delivering high-performance results while conserving valuable resources.

- Quantization. MindSpore uses quantization, which lowers the precision of numerical values within neural networks. By reducing the bit-width of data representations, the framework not only conserves memory but also speeds up computation.

- Pruning. Through pruning, MindSpore removes unnecessary neural connections to reduce model complexity. This technique increases the sparsity of neural networks, resulting in a reduced memory footprint and faster inference. Consequently, MindSpore produces leaner models while aiming to preserve predictive accuracy.

- Weight Sharing. MindSpore takes an innovative approach to parameter sharing, which optimizes model storage and speeds up computation. By reusing shared weights, it makes more efficient use of memory and accelerates the training process.

- Dynamic Operator Fusion. Dynamic Operator Fusion combines multiple operations for improved computational efficiency. By merging sequential operations into a single, optimized kernel, the deep learning framework minimizes memory overhead, enabling faster and more efficient neural network execution.

- Adaptive Learning Rate. Adaptive algorithms dynamically adjust learning rates during model training. MindSpore adapts to the dynamic nature of neural network training and overcomes challenges posed by varying gradients. This supports better convergence and model accuracy.
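Two of the techniques above, quantization and pruning, can be illustrated with a short plain-Python sketch. This is not MindSpore’s implementation or API: the function names (`quantize_int8`, `dequantize`, `prune_by_magnitude`) and the sample weights are assumptions made for illustration. The sketch uses symmetric per-tensor int8 quantization and simple magnitude-based pruning, which are common textbook variants.

```python
def quantize_int8(values):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer codes."""
    return [q * scale for q in quantized]


def prune_by_magnitude(values, sparsity):
    """Set the smallest-magnitude fraction `sparsity` of the weights to zero."""
    k = int(len(values) * sparsity)
    smallest = sorted(range(len(values)), key=lambda i: abs(values[i]))[:k]
    pruned = list(values)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights, chosen only to keep the arithmetic easy to follow.
weights = [0.02, -1.27, 0.5, -0.01, 0.9, 0.0]

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
pruned = prune_by_magnitude(weights, sparsity=0.5)

print(codes)   # [2, -127, 50, -1, 90, 0]
print(pruned)  # [0.0, -1.27, 0.5, 0.0, 0.9, 0.0]
```

Each weight is stored as an 8-bit code plus one shared `scale`, and the round-trip error per weight is at most half of `scale`. Pruning, by contrast, trades exact values for zeros that sparse storage and kernels can skip.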

Native Support for Hardware Acceleration

Hardware acceleration is a game-changer for AI performance. MindSpore leverages native support for various hardware architectures, such as GPUs and NPUs, including Huawei’s Ascend processors, optimizing model execution and overall AI efficiency.

The Power of GPUs and Ascend Processors

MindSpore integrates with GPUs and Ascend processors, leveraging their parallel processing capabilities. This integration improves both training and inference by optimizing the execution of neural networks, establishing MindSpore as a solution for computation-intensive AI tasks.

Distributed Training

MindSpore’s scalability shows in how its native support for hardware acceleration extends to distributed training. This allows neural network training to scale across multiple devices. In turn, the shortened development lifecycle makes MindSpore suitable for large-scale AI initiatives.
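The idea behind scaling training across devices can be sketched in plain Python as data-parallel training: each device computes a gradient on its own shard of the batch, and the gradients are averaged before the shared weight is updated. This is a simplified illustration, not MindSpore’s distributed API. The functions `local_gradient` and `all_reduce_mean`, the toy dataset, and the learning rate are all assumptions.

```python
def local_gradient(w, shard):
    """Gradient of mean squared error for the model y_hat = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(grads):
    """Stand-in for a collective operation that averages gradients across devices."""
    return sum(grads) / len(grads)


# Toy dataset generated by y = 2 * x, split evenly across two simulated devices.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
shards = [data[0:2], data[2:4]]

w = 0.0
learning_rate = 0.05
for step in range(200):
    grads = [local_gradient(w, shard) for shard in shards]  # one per device
    w -= learning_rate * all_reduce_mean(grads)             # same update everywhere

print(round(w, 6))  # 2.0
```

With equally sized shards, the averaged gradient equals the gradient over the full batch, so every device applies the same update as single-device training would.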

Model Parallelism

MindSpore incorporates advanced features like model parallelism, which distributes neural network computations across different hardware devices. By partitioning the workload, MindSpore optimizes computational efficiency, using diverse hardware resources to their full potential.

The model parallelism approach ensures not only optimal resource use but also faster AI model development. This provides a significant boost to performance and scalability in complex computing environments.
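As a rough illustration of partitioning a workload across devices, the plain-Python sketch below splits the weight matrix of a single layer by columns, lets each simulated device compute its slice of the output, and concatenates the slices. It does not use MindSpore, and the helper names (`matmul`, `split_columns`, `model_parallel_matmul`) are hypothetical.

```python
def matmul(x, w):
    """Multiply a vector x (length n) by a matrix w (n rows, m columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def split_columns(w, parts):
    """Split a matrix into `parts` column blocks, one per simulated device."""
    m = len(w[0])
    size = (m + parts - 1) // parts  # ceiling division
    return [[row[start:start + size] for row in w] for start in range(0, m, size)]


def model_parallel_matmul(x, w, parts):
    """Compute x @ w by giving each device one column block of w."""
    outputs = []
    for shard in split_columns(w, parts):
        # On real hardware, each shard would live on its own device and
        # these products would run concurrently.
        outputs.extend(matmul(x, shard))
    return outputs


x = [1.0, 2.0]
w = [[1.0, 2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0, 8.0]]

print(matmul(x, w))                    # [11.0, 14.0, 17.0, 20.0]
print(model_parallel_matmul(x, w, 2))  # [11.0, 14.0, 17.0, 20.0]
```

Each device only needs to hold its own block of the weights, which is what allows models too large for one device’s memory to be spread over several.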

Real-Time Inference with FPGA Integration

In real-time AI applications, MindSpore’s native support extends to FPGA integration. This integration with Field-Programmable Gate Arrays (FPGAs) facilitates fast, low-latency inference. In turn, this positions MindSpore as a solid choice for applications demanding rapid and precise predictions.

Elevating Edge Computing with MindSpore

MindSpore extends its native support for hardware acceleration to edge computing. This integration ensures efficient AI processing on resource-constrained devices, bringing AI capabilities closer to the data source and enabling intelligent edge applications.

Ease of ML Development

The MindSpore platform provides a single programming interface that helps streamline the development of computer vision models. In turn, this allows users to work across different hardware architectures without extensive code changes.

By building on the popularity of the Python programming language in the AI community, MindSpore ensures compatibility, making it accessible to a broad spectrum of developers. The use of Python, together with MindSpore’s features, is intended to accelerate the development cycle.

Visual Interface and No-Code Elements

MindSpore allows developers to design and deploy models with a no-code approach, which supports collaboration between domain experts and AI developers. It also facilitates visual model design through tools like ModelArts. This makes it possible for developers to visualize and speed up the development of complex data processing and custom-trained models.

If you need no code for the entire lifecycle of computer vision applications, check out our end-to-end platform Viso Suite.

Operators and Models

Moreover, MindSpore has compiled a repository of pre-built operators and models. For prototyping, developers can move ahead quickly even when they start from scratch on new models. This is especially evident for common tasks in computer vision, natural language processing, audio AI, and more. The platform incorporates auto-differentiation, which automates the computation of gradients in the training process and simplifies the implementation of advanced machine learning models.

Integration with industry-standard deep learning frameworks like TensorFlow or PyTorch allows developers to use existing models, meaning that they can transition to MindSpore smoothly.

The MindSpore Hub offers a centralized repository for models, datasets, and scripts, fostering a collaborative ecosystem where developers can access and share resources. Designed with cloud-native principles, MindSpore puts the power of cloud resources in users’ hands. In doing so, Huawei improves scalability and speeds up model deployment in cloud environments.

Upsides and Risks to Consider

Popular in the Open-Source Community

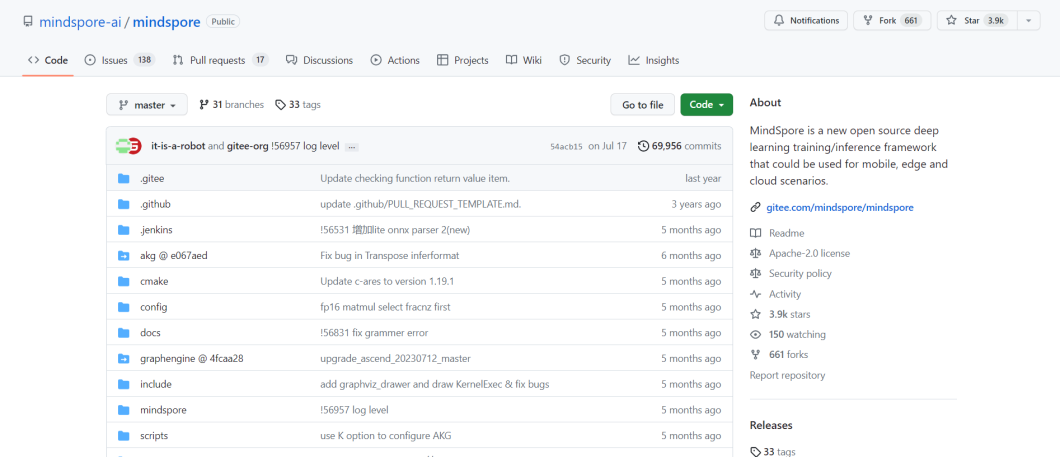

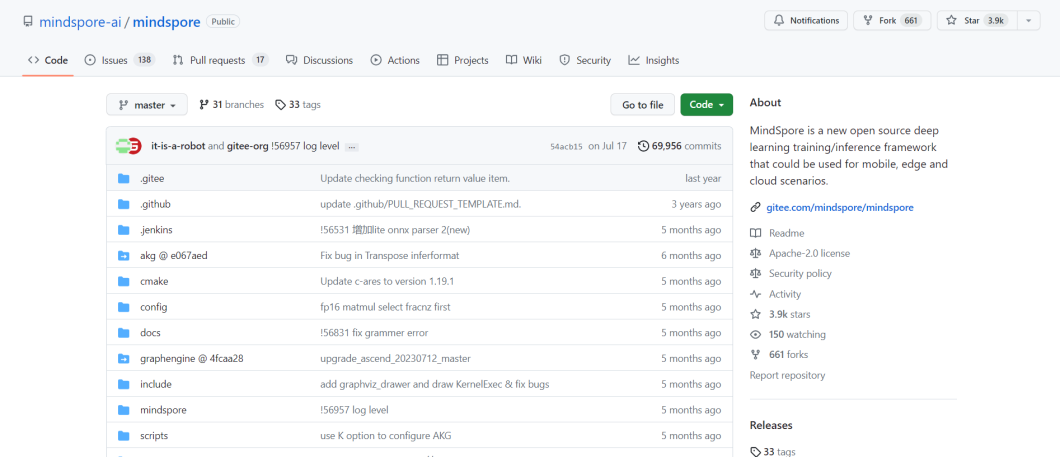

MindSpore actively contributes to the open-source AI community by promoting collaboration and knowledge sharing. By engaging with developers and data scientists worldwide, Huawei pushes the AI hardware and software application ecosystem forward. Today, the framework’s repository on GitHub has more than 465 open-source contributors and 4,000 stars.

The modular architecture of MindSpore gives users the flexibility to customize their approach to various ML tasks. The integrated set of tools is continuously maintained and strengthened by novel optimization techniques, automatic parallelism, and hardware acceleration that improve model performance.

Potential Risks for Business Users

In 2019, the U.S. took action against Huawei, citing security concerns and implementing export controls on U.S. technology sales to the company. These measures were driven by fears that Huawei’s extensive presence in global telecommunications networks could be exploited for espionage by the Chinese government.

EU officials have also expressed reservations about Huawei, labeling it a “high-risk supplier.” Margrethe Vestager, the Competition Commissioner, confirmed the European Commission’s intent to adjust Horizon Europe rules to align with its assessment of Huawei as a high-risk entity.

The Commission’s concerns regarding Huawei and ZTE, another Chinese telecoms company, have led it to endorse member states’ efforts to limit and exclude these suppliers from mobile networks. To date, ten member states have imposed restrictions on telecoms supplies, driven by concerns over espionage and overreliance on Chinese technology.

While Brussels lacks the authority to prevent Huawei components from entering member state networks, it is taking steps to protect its own communications by avoiding Huawei and ZTE components. Additionally, it plans to review EU funding programs in light of the high-risk status.

Beyond direct security threats, there is also the potential for supplier and insurance risks that could emerge when businesses or their customers are exposed.

What’s Next?

At viso.ai, we power the leading computer vision platform trusted by enterprise customers around the world to build and scale real-world computer vision applications. A business alternative to MindSpore, Viso Suite enables ML teams to build highly custom computer vision systems and integrate existing cameras.

Explore the Viso Suite Platform features and request a demo for your team.

To learn more about deep learning frameworks, check out the following articles: