NeurIPS 2023, the conference and workshop on Neural Information Processing Systems, took place from December 10th through 16th. The conference showcased the latest in machine learning and artificial intelligence. This year’s conference featured 3,584 papers that advance machine learning across many domains. NeurIPS also announced its 2023 award-winning papers to highlight what the organizers consider the top research papers.

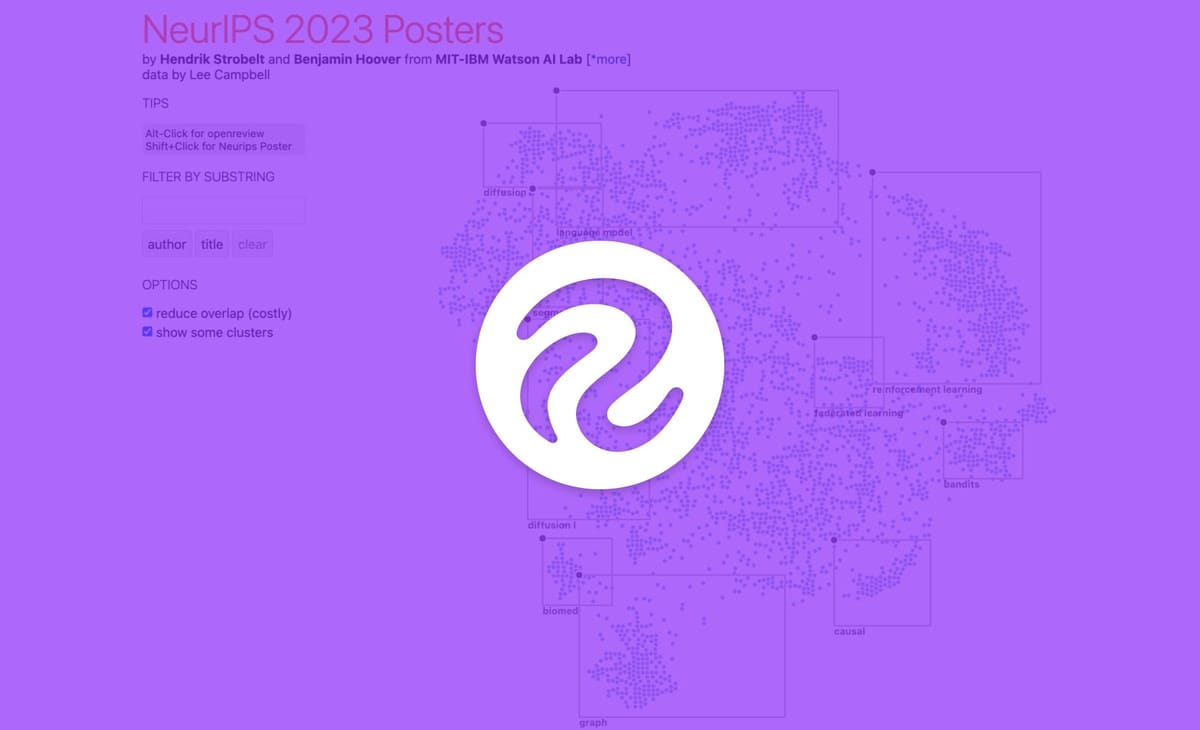

With the explosion of groundbreaking papers, our team used a powerful NeurIPS 2023 papers visualization tool to find the latest advances in computer vision and multimodality from the conference. From there, we read through the results to find the most impactful papers to share with you.

In this blog post, we highlight 11 important papers from NeurIPS 2023 and share general trends that look to be setting the stage for 2024 and beyond. Let’s begin!

SEEM is a promptable, interactive model for segmenting everything everywhere in an image using a novel decoding mechanism. This mechanism enables diverse prompting for all types of segmentation tasks. The goal is to create a universal segmentation interface that behaves like large language models (LLMs).

Massive web datasets play a key role in the success of large vision-language models like CLIP and Flamingo. This study shows how generated captions can increase the utility of web-scraped data points that have nondescript text. By exploring different strategies for mixing raw and generated captions, the authors outperform the best filtering methods, reducing noisy data without sacrificing data diversity.
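To make the idea of a caption mixing strategy concrete, here is a minimal sketch of one simple rule. It keeps the raw web caption when its image-text similarity score is high enough and falls back to a generated caption otherwise. The `Sample` type, the precomputed `raw_score` values, and the `threshold` parameter are hypothetical. The paper compares several mixing strategies, and this is only one illustrative option.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    image_id: str
    raw_caption: str        # caption scraped alongside the image
    generated_caption: str  # caption produced by an image captioning model
    raw_score: float        # assumed: precomputed image-text similarity of the raw caption


def mix_captions(samples, threshold=0.3):
    """Return (image_id, caption) pairs, preferring raw captions that score well."""
    mixed = []
    for s in samples:
        if s.raw_score >= threshold:
            # The raw caption looks informative, so keep it to preserve diversity.
            mixed.append((s.image_id, s.raw_caption))
        else:
            # The raw caption looks nondescript. Swap in the generated caption
            # instead of filtering the image out entirely.
            mixed.append((s.image_id, s.generated_caption))
    return mixed


data = [
    Sample("a", "golden retriever catching a frisbee", "a dog jumping for a frisbee", 0.41),
    Sample("b", "IMG_0042.jpg", "a red bicycle leaning on a wall", 0.05),
]
print(mix_captions(data))
# [('a', 'golden retriever catching a frisbee'), ('b', 'a red bicycle leaning on a wall')]
```

Unlike pure filtering, every image survives under this rule. That is the property the paragraph above credits for reducing noise without sacrificing diversity.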

Recent works have composed foundation models for visual reasoning, using large language models (LLMs) to produce programs that can be executed by pre-trained vision-language models. However, abstract concepts like “left” can also be grounded in 3D, temporal, and action data, as in moving to your left. This paper proposes the Logic-Enhanced FoundaTion Model (LEFT), a unified framework that learns to ground and reason with concepts across domains.
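LEFT learns how to ground concepts like “left” in each domain, and its learned modules are not reproduced here. As a toy illustration of the general pattern only, the sketch below executes an LLM-style program over a 2D scene. A hand-coded `left_of` stands in for a learned concept. The nested-tuple program format, the `Obj` type, and the operation names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Obj:
    name: str
    x: float  # assumed convention: horizontal position from the viewer's perspective


def left_of(a: Obj, b: Obj) -> bool:
    # Hand-coded stand-in for a learned "left" concept.
    return a.x < b.x


def execute(program, scene):
    """Evaluate a tiny nested-tuple program (hypothetical DSL) over a list of objects."""
    op = program[0]
    if op == "scene":
        return list(scene)
    if op == "filter_name":
        _, name, sub = program
        return [o for o in execute(sub, scene) if o.name == name]
    if op == "left_of":
        _, sub, anchor_prog = program
        anchors = execute(anchor_prog, scene)
        return [o for o in execute(sub, scene) if any(left_of(o, a) for a in anchors)]
    if op == "count":
        return len(execute(program[1], scene))
    raise ValueError(f"unknown op: {op}")


scene = [Obj("cup", 0.2), Obj("cup", 0.8), Obj("plate", 0.5)]
# "How many cups are to the left of the plate?"
program = ("count", ("left_of", ("filter_name", "cup", ("scene",)),
                     ("filter_name", "plate", ("scene",))))
print(execute(program, scene))  # 1
```

To ground the concept in another domain, a system like LEFT would swap the body of the concept function (for example, a learned 3D or temporal classifier) while the program itself stays the same.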

Vision-Language Models (VLMs) have demonstrated impressive zero-shot transfer capabilities in image-level visual perception. However, these models have shown limited performance in instance-level tasks that demand precise localization and recognition.

This paper introduces a new zero-shot framework that leverages pixel-level annotations obtained from a generalist segmentation model for fine-grained visual prompting. The authors find that blurring everything outside a target mask is exceptionally effective, and they call this approach Fine-Grained Visual Prompting (FGVP). FGVP demonstrates superior performance in zero-shot comprehension of referring expressions on the RefCOCO, RefCOCO+, and RefCOCOg benchmarks.
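The core image operation in FGVP is blurring everything outside a target mask before a vision-language model looks at the image. The sketch below shows only that operation, on a small grayscale image stored as nested lists. In practice, the mask would come from a segmentation model, and a Gaussian blur from an image library would be more typical. The box blur and the function names are assumptions, not the paper's code.

```python
def box_blur(image, radius=1):
    """Mean filter over a (2*radius+1)^2 window, averaging only in-bounds pixels."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += image[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def blur_outside_mask(image, mask, radius=1):
    """Keep pixels where the mask is truthy; replace all others with blurred values."""
    blurred = box_blur(image, radius)
    return [
        [image[y][x] if mask[y][x] else blurred[y][x] for x in range(len(image[0]))]
        for y in range(len(image))
    ]


image = [[0, 9, 0],
         [9, 0, 9],
         [0, 9, 0]]
mask = [[False, False, False],
        [False, True, False],
        [False, False, False]]
result = blur_outside_mask(image, mask)
print(result[1][1], result[0][0])  # 0 4.5
```

The target region stays sharp while its surroundings lose detail. This nudges the vision-language model toward the masked instance without removing the context around it.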

This paper presents a large multimodal wine dataset for studying the relations between visual perception, language, and flavor.

The paper proposes a low-dimensional concept embedding algorithm that combines human experience with automatic machine similarity kernels. The authors demonstrate that this shared concept embedding space improves upon separate embedding spaces for coarse flavor classification (alcohol percentage, country, grape, price, rating) and aligns with the intricate human perception of flavor.
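The paper's algorithm is not reproduced here. As a rough illustration of the underlying idea, the sketch below blends a human similarity kernel with a machine similarity kernel. It then factorizes the result into low-dimensional coordinates using power iteration with deflation, a generic technique swapped in for illustration. The blending weight `alpha`, the function names, and the toy kernels are hypothetical.

```python
import math
import random


def blend_kernels(k_human, k_machine, alpha=0.5):
    """Weighted sum of two n x n similarity matrices (alpha weights the human kernel)."""
    n = len(k_human)
    return [[alpha * k_human[i][j] + (1 - alpha) * k_machine[i][j] for j in range(n)]
            for i in range(n)]


def top_eigenpair(matrix, iters=300, seed=0):
    """Power iteration: dominant eigenvalue and unit eigenvector of a symmetric PSD matrix."""
    rng = random.Random(seed)
    n = len(matrix)
    v = [rng.random() + 0.1 for _ in range(n)]
    for _ in range(iters):
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w)) or 1.0
        v = [x / norm for x in w]
    eigval = sum(v[i] * sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n))
    return eigval, v


def embed(kernel, dims=2):
    """Coordinates X (n x dims) such that X times X-transpose approximates the kernel."""
    n = len(kernel)
    residual = [row[:] for row in kernel]
    coords = [[] for _ in range(n)]
    for d in range(dims):
        lam, v = top_eigenpair(residual, seed=d)
        for i in range(n):
            coords[i].append(math.sqrt(max(lam, 0.0)) * v[i])
        # Deflate: subtract the component just found so the next pass finds the next one.
        residual = [[residual[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords


# Toy kernels for four wines: 0 and 1 taste alike, as do 2 and 3.
k_human = [[1.0, 0.9, 0.1, 0.1], [0.9, 1.0, 0.1, 0.1],
           [0.1, 0.1, 1.0, 0.9], [0.1, 0.1, 0.9, 1.0]]
k_machine = [[1.0, 0.8, 0.2, 0.2], [0.8, 1.0, 0.2, 0.2],
             [0.2, 0.2, 1.0, 0.8], [0.2, 0.2, 0.8, 1.0]]
coords = embed(blend_kernels(k_human, k_machine, alpha=0.5), dims=2)
print(coords)
```

In the resulting 2D space, wines that both kernels consider similar land close together. Varying `alpha` trades human judgments off against machine similarity.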

The authors present an LLM-based framework for vision-centric tasks, termed VisionLLM. This framework provides a unified perspective on vision and language tasks by treating images as a foreign language and aligning vision-centric tasks with language tasks that can be flexibly defined and managed using language instructions. The model achieves over 60% mAP on COCO, on par with detection-specific models.
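Treating detection as a language task means the model emits boxes as text, which must then be parsed back into structured results. The sketch below assumes a hypothetical output format, `<label> (x1, y1, x2, y2)`, with coordinates discretized into integer bins, and converts it to pixel boxes. VisionLLM's actual output tokenization is not reproduced here.

```python
import re
from typing import List, Tuple

# Hypothetical format: "<label> (x1, y1, x2, y2)" with coordinates in [0, num_bins).
PATTERN = re.compile(r"<(\w+)>\s*\((\d+),\s*(\d+),\s*(\d+),\s*(\d+)\)")


def parse_detections(text: str, width: int, height: int, num_bins: int = 512
                     ) -> List[Tuple[str, Tuple[float, float, float, float]]]:
    """Convert language-style detection output into (label, pixel box) pairs."""
    scale_x, scale_y = width / num_bins, height / num_bins
    results = []
    for label, *coords in PATTERN.findall(text):
        x1, y1, x2, y2 = (int(c) for c in coords)
        # Map each bin index back to pixel units for this image size.
        results.append((label, (x1 * scale_x, y1 * scale_y, x2 * scale_x, y2 * scale_y)))
    return results


output = "<person> (64, 32, 256, 480) <dog> (300, 256, 448, 500)"
print(parse_detections(output, width=1024, height=512))
# [('person', (128.0, 32.0, 512.0, 480.0)), ('dog', (600.0, 256.0, 896.0, 500.0))]
```

Discretizing coordinates keeps the output vocabulary finite, which is what lets a language decoder emit boxes at all. Parsing is the step that turns that text back into something a detection metric like mAP can score.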

Multi-modal Queried Object Detection (MQ-Det) is an efficient architecture and pre-training strategy designed to use two kinds of category queries. Textual descriptions offer open-set generalization, and visual exemplars offer rich description granularity. MQ-Det targets real-world detection with both open-vocabulary categories and varying levels of granularity.

MQ-Det incorporates vision queries into existing, well-established detectors that are queried by language only. It significantly improves the state-of-the-art open-set detector GLIP by +7.8% AP on the LVIS benchmark via multimodal queries without any downstream fine-tuning, and by +6.3% AP on average across 13 few-shot downstream tasks.
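MQ-Det's learned fusion module is not reproduced here. The sketch below only illustrates the general idea of a multimodal class query. It averages a few exemplar embeddings, blends them with the class-name text embedding through a fixed gate, and scores a region feature against each query by cosine similarity. The embeddings, the gate value, and the function names are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def mean(vectors: List[Vector]) -> Vector:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def multimodal_query(text_emb: Vector, exemplar_embs: List[Vector], gate: float = 0.5) -> Vector:
    """Blend a text query with the mean of visual exemplars; gate=0 gives a text-only query."""
    if not exemplar_embs:
        return normalize(text_emb)
    visual = normalize(mean(exemplar_embs))
    text = normalize(text_emb)
    return normalize([(1 - gate) * t + gate * v for t, v in zip(text, visual)])


def classify_region(region_emb: Vector, queries: Dict[str, Vector]) -> str:
    """Return the class whose query has the highest cosine similarity with the region."""
    region = normalize(region_emb)
    return max(queries, key=lambda name: sum(r * q for r, q in zip(region, queries[name])))


queries = {
    # "mug" gets two visual exemplars; "bowl" falls back to its text query alone.
    "mug": multimodal_query([1.0, 0.0, 0.0], [[0.6, 0.8, 0.0], [0.5, 0.9, 0.1]]),
    "bowl": multimodal_query([0.0, 0.0, 1.0], []),
}
print(classify_region([0.4, 0.9, 0.0], queries))  # mug
```

Setting the gate to zero recovers a purely language-queried detector. This mirrors, in spirit, how MQ-Det adds vision queries on top of an existing text-queried model rather than replacing it.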

LoRA is a novel Logical Reasoning Augmented VQA dataset that requires formal and complex description logic reasoning based on a food-and-kitchen knowledge base. The authors created 200,000 diverse description logic reasoning questions based on the SROIQ Description Logic, along with realistic kitchen scenes and ground truth answers. They then evaluate the zero-shot performance of state-of-the-art large vision-and-language models on LoRA.
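Full SROIQ reasoning needs a dedicated description logic reasoner. The flavor of a single reasoning step can still be shown with a tiny hypothetical food-and-kitchen taxonomy. To answer “Is there any dairy product on the table?”, the reasoner must infer that cheddar is a dairy product through a chain of subclass axioms. The concept names, axioms, and scene below are invented for illustration, and they cover only atomic subsumption, a small fragment of SROIQ.

```python
from typing import Dict, List, Set

# Hypothetical TBox: each concept maps to its direct superclasses.
SUBCLASS_OF: Dict[str, Set[str]] = {
    "Cheddar": {"Cheese"},
    "Cheese": {"DairyProduct"},
    "Milk": {"DairyProduct", "Beverage"},
    "DairyProduct": {"Food"},
    "Apple": {"Fruit"},
    "Fruit": {"Food"},
}


def ancestors(concept: str) -> Set[str]:
    """All concepts that subsume `concept` (transitive closure of SUBCLASS_OF)."""
    seen: Set[str] = set()
    stack = [concept]
    while stack:
        for parent in SUBCLASS_OF.get(stack.pop(), set()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def exists_instance_of(scene: List[str], target: str) -> bool:
    """Does any object in the scene belong to `target`, directly or by subsumption?"""
    return any(obj == target or target in ancestors(obj) for obj in scene)


table = ["Apple", "Cheddar"]  # objects on the table, typed by their most specific concept
print(exists_instance_of(table, "DairyProduct"))  # True
print(exists_instance_of(table, "Beverage"))      # False
```

Even this small fragment shows why such questions are hard for vision-language models. The answer is not visible in the image alone and depends on chaining facts from the knowledge base.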

Vocabulary-free Image Classification (VIC) aims to assign an input image a class that resides in an unconstrained, language-induced semantic space, without the prerequisite of a known vocabulary. VIC is a challenging task because the semantic space is extremely large, containing millions of concepts, including fine-grained categories.

The paper proposes Category Search from External Databases (CaSED), a method that exploits a pre-trained vision-language model and an external vision-language database to address VIC in a training-free manner. Experiments on benchmark datasets show that CaSED outperforms other complex vision-language frameworks while remaining efficient, with far fewer parameters, paving the way for future research in this direction.
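At a high level, a CaSED-style pipeline has three steps. It retrieves captions related to the image from an external database, extracts candidate category names from them, and scores those candidates with the vision-language model. The sketch below mimics that flow with toy 3-dimensional embeddings. The caption database, the `NAME_EMB` table standing in for a text encoder, the `IGNORED_WORDS` filter standing in for proper noun extraction, and the image-to-name scoring rule are all hypothetical simplifications. The actual method's extraction and scoring are more involved.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical external database: caption -> caption embedding.
CAPTION_DB: Dict[str, Vector] = {
    "a flamingo standing in shallow water": [0.9, 0.1, 0.0],
    "pink flamingo at the zoo": [0.8, 0.2, 0.1],
    "a red sports car on a highway": [0.0, 0.1, 0.9],
}
# Hypothetical text-encoder outputs for words that survive the filter below.
NAME_EMB: Dict[str, Vector] = {
    "flamingo": [1.0, 0.1, 0.0], "water": [0.3, 0.9, 0.0], "zoo": [0.4, 0.6, 0.2],
    "car": [0.0, 0.0, 1.0], "highway": [0.1, 0.3, 0.9],
}
# Hypothetical non-category words; a real system would parse noun phrases instead.
IGNORED_WORDS = {"a", "in", "at", "the", "on", "standing", "shallow", "pink", "red", "sports"}


def classify_without_vocabulary(image_emb: Vector, k: int = 2) -> str:
    # 1. Retrieve the k captions closest to the image embedding.
    captions = sorted(CAPTION_DB, key=lambda c: cosine(image_emb, CAPTION_DB[c]), reverse=True)[:k]
    # 2. Extract candidate category names from the retrieved captions.
    candidates = {w for c in captions for w in c.split() if w not in IGNORED_WORDS}
    # 3. Pick the candidate whose name embedding best matches the image.
    return max(candidates, key=lambda name: cosine(image_emb, NAME_EMB[name]))


print(classify_without_vocabulary([0.85, 0.15, 0.05]))  # flamingo
```

The candidate set is built per image from retrieved text rather than fixed in advance. This is what lets the approach avoid a predefined vocabulary without any training.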

Zero-shot Human-Object Interaction (HOI) detection aims to identify both seen and unseen HOI categories. CLIP4HOI is built on the vision-language model CLIP and prevents the model from overfitting to seen human-object pairs. Humans and objects are identified independently, and all feasible human-object pairs are processed by a Human-Object interactor for pairwise proposal generation.

Experiments on prevalent benchmarks show that CLIP4HOI outperforms previous approaches on both rare and unseen categories and sets a series of state-of-the-art records under a variety of zero-shot settings.
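Detecting humans and objects independently and then pairing them amounts to enumerating candidate pairs. The minimal sketch below assumes a hypothetical `Detection` type and a simple feasibility filter: a minimum confidence score and a maximum distance between box centers. CLIP4HOI's own Human-Object interactor and its CLIP-based interaction scoring are not reproduced.

```python
import math
from dataclasses import dataclass
from itertools import product
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


@dataclass
class Detection:
    label: str
    score: float
    box: Box


def center(box: Box) -> Tuple[float, float]:
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def pair_proposals(detections: List[Detection], min_score: float = 0.3,
                   max_dist: float = 300.0) -> List[Tuple[Detection, Detection]]:
    """Pair every confident human with every other confident detection that is close enough."""
    kept = [d for d in detections if d.score >= min_score]
    humans = [d for d in kept if d.label == "person"]
    pairs = []
    for human, obj in product(humans, kept):
        if human is obj:
            continue  # a detection cannot interact with itself
        (hx, hy), (ox, oy) = center(human.box), center(obj.box)
        # Hypothetical feasibility check: drop pairs whose box centers are far apart.
        if math.hypot(hx - ox, hy - oy) <= max_dist:
            pairs.append((human, obj))
    return pairs


dets = [
    Detection("person", 0.9, (0, 0, 100, 200)),
    Detection("bicycle", 0.8, (50, 100, 250, 250)),
    Detection("cup", 0.2, (10, 10, 20, 20)),      # filtered out: low confidence
    Detection("kite", 0.7, (900, 0, 950, 50)),    # filtered out: too far away
]
print([(h.label, o.label) for h, o in pair_proposals(dets)])  # [('person', 'bicycle')]
```

Each surviving pair would then be scored against interaction descriptions. Because pairs are formed before any category is assigned, unseen human-object combinations still get a chance to be proposed.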

In-context learning is the ability to configure a model’s behavior with different prompts. This paper provides a mechanism for in-context learning of scene understanding tasks: nearest neighbor retrieval from a prompt of annotated features.

The resulting model, Hummingbird, performs various scene understanding tasks without modification while approaching the performance of specialists that have been fine-tuned for each task. Hummingbird can be configured to perform new tasks much more efficiently than fine-tuned models, raising the possibility of scene understanding in the interactive assistant regime.
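Nearest neighbor retrieval from a prompt of annotated features can be sketched as a k-nearest-neighbor vote. Each query patch feature takes the majority label among its k closest annotated prompt features. Hummingbird's actual retrieval operates on learned patch features and is more elaborate. The plain Euclidean kNN, the toy 2D features, and the function names below are simplifying assumptions.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Feature = Sequence[float]


def knn_label(query: Feature, prompt: List[Tuple[Feature, str]], k: int = 3) -> str:
    """Label a query feature by majority vote over its k nearest annotated prompt features."""
    nearest = sorted(prompt, key=lambda item: math.dist(query, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def segment(query_patches: List[Feature], prompt: List[Tuple[Feature, str]], k: int = 3) -> List[str]:
    """Assign a label to every patch; changing the prompt changes the task, with no retraining."""
    return [knn_label(q, prompt, k) for q in query_patches]


prompt = [
    ((0.0, 0.1), "sky"), ((0.1, 0.0), "sky"), ((0.2, 0.1), "sky"),
    ((0.9, 1.0), "road"), ((1.0, 0.9), "road"), ((0.8, 0.9), "road"),
]
print(segment([(0.05, 0.05), (0.95, 0.95)], prompt))  # ['sky', 'road']
```

Reconfiguring the model for a new task only requires a new annotated prompt, such as relabeling the same features with a different set of categories. That is the efficiency advantage over fine-tuning described above.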

NeurIPS is one of the premier conferences in machine learning and artificial intelligence. With over 3,000 papers in the NeurIPS 2023 corpus, there is an overwhelming number of developments and breakthroughs that will shape the future of AI in 2024 and beyond.

Several exciting trends emerged this year that we think will continue to grow in importance and enable new computer vision use cases:

- Multimodal model performance: GPT-4 with Vision, Gemini, and many open source multimodal models are pushing model performance into a realm where real business applications can be built on multimodal models. With more teams focusing on improving performance, new benchmarks for measuring performance, and new datasets being created, 2024 could be the year multimodal models become as useful as today’s widely adopted language models.

- Prompting for multimodality: LLMs took off in 2023 because they are relatively easy to interact with using text, and it looks like multimodal models are next. Visual data is complex, and finding ways to engage with that data to get the results you want is a new frontier being explored. As more ways of interacting are developed, more real-world use cases will be unlocked. You can use the open source repo Maestro to try out prompting techniques.

- Visual logic and reasoning: 2023 had many breakthroughs in segmenting and understanding objects within an image. With these problems largely addressed, the next step is understanding the relationships between objects and how their interactions communicate information. Much like how LLMs can reason and improve outputs by understanding context, multimodal models will become more useful when they better understand the context of a given scene.

We’re grateful to everyone who worked to submit papers to NeurIPS 2023 and excited to help developers turn this research into real-world applications in 2024!