On February 21st, 2024, Chien-Yao Wang, I-Hau Yeh, and Hong-Yuan Mark Liao released the “YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information” paper, which introduces a new computer vision model architecture: YOLOv9. Later, the source code was made available, allowing anyone to train their own YOLOv9 models.

According to the project research team, YOLOv9 achieves a higher mAP than existing popular YOLO models such as YOLOv8, YOLOv7, and YOLOv5 when benchmarked on the MS COCO dataset.

In this guide, we are going to show how to train a YOLOv9 model on a custom dataset. We will walk through an example of training a vision model to identify football players on a field. That said, you can use any dataset you want with this guide.

Without further ado, let’s get started!

What’s YOLOv9?

YOLOv9 is a computer vision model developed by Chien-Yao Wang, I-Hau Yeh, and Hong-Yuan Mark Liao. Hong-Yuan Mark Liao and Chien-Yao Wang also worked on YOLOv4, YOLOR, and YOLOv7, other popular model architectures. YOLOv9 introduces two new architectures: YOLOv9 and GELAN, both of which are usable from the yolov9 Python repository released with the paper.

Using YOLOv9, you can train an object detection model. Segmentation, classification, and other task types are not supported at the time of writing.

YOLOv9 comes in four models, ordered by parameter count:

- v9-S

- v9-M

- v9-C

- v9-E

The weights for v9-S and v9-M are not available at the time of writing this guide.

The smallest of the models achieves 46.8% AP on the validation set of the MS COCO dataset, while the largest model achieves 55.6%. According to the authors, this sets a new state of the art for object detection performance. The chart below shows the findings from the YOLOv9 research team.

YOLOv9 does not have an official license at the time of writing this guide. As of February 22nd, 2024, a lead researcher noted, “I think it should be GPL3, I will check and update the license file.” This suggests a license will be set soon.

How to Install YOLOv9

YOLOv9 is packaged as a series of scripts that you run directly. At the time of writing, there is no official Python package or wrapper that you can use to interact with the model.

To use YOLOv9, you need to download the project repository. Then, you can either run a training job or run inference from an existing COCO checkpoint.

This tutorial assumes you are working in Google Colab. Adjust commands as necessary if you are working on your local machine outside of a notebook environment.
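Outside a notebook, the `!` and `%cd` prefixes used in this guide are not available. A minimal sketch, assuming you call the repository scripts from plain Python with the standard `subprocess` module: the hypothetical helpers `build_command` and `run_script` (our names, not part of the YOLOv9 repository) turn keyword arguments into the `--flag value` form that `detect.py` and `train.py` take.

```python
import subprocess
import sys


def build_command(script, **flags):
    """Return an argument list like [python, "detect.py", "--conf", "0.1"].

    Hypothetical helper: each keyword becomes a --flag followed by its value,
    and underscores become dashes (min_items -> --min-items).
    """
    cmd = [sys.executable, script]
    for name, value in flags.items():
        cmd.extend(["--" + name.replace("_", "-"), str(value)])
    return cmd


def run_script(script, repo_dir, **flags):
    """Run a YOLOv9 repository script with repo_dir as the working directory."""
    cmd = build_command(script, **flags)
    # check=True raises CalledProcessError if the script exits with a non-zero status
    subprocess.run(cmd, cwd=repo_dir, check=True)
```

For example, `run_script("detect.py", "yolov9", weights="../weights/gelan-c.pt", conf=0.1, source="../data/dog.jpeg", device="cpu")` would mirror the notebook command from the parent directory on a machine without a GPU. Flags that take no value (on/off switches) would need extra handling, since this sketch always emits a value after each flag.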

There is a bug in YOLOv9 that prevents you from running inference on an image, but the Roboflow team is maintaining an unofficial fork with a patch until the fix is released.

First, let’s set a HOME directory with which to work. Run this from the directory that should contain the repository (in Colab, /content), because the rest of this guide expects the repository at {HOME}/yolov9:

import os

HOME = os.getcwd()

print(HOME)

Then, install YOLOv9 from our patched fork by running the following commands:

!git clone https://github.com/SkalskiP/yolov9.git

%cd {HOME}/yolov9

!pip install -r requirements.txt -q

Next, you need to download the model weights. Only the v9-C and v9-E weights, along with the matching GELAN-C and GELAN-E weights, are available at the moment. You can download them using the following commands:

!mkdir -p {HOME}/weights

!wget -P {HOME}/weights -q https://github.com/WongKinYiu/yolov9/releases/download/v0.1/yolov9-c.pt

!wget -P {HOME}/weights -q https://github.com/WongKinYiu/yolov9/releases/download/v0.1/yolov9-e.pt

!wget -P {HOME}/weights -q https://github.com/WongKinYiu/yolov9/releases/download/v0.1/gelan-c.pt

!wget -P {HOME}/weights -q https://github.com/WongKinYiu/yolov9/releases/download/v0.1/gelan-e.pt

You can now use the scripts in the project repository to run inference on and train YOLOv9 models.
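Where `wget` is not available (for example, on Windows or in a plain Python script), the same downloads can be done with the standard library. A minimal sketch, assuming the checkpoints stay published as v0.1 release assets of the WongKinYiu/yolov9 repository; `weight_urls` and `download_weights` are hypothetical helper names.

```python
import urllib.request
from pathlib import Path

# Release location of the v0.1 checkpoints (assumed stable)
BASE_URL = "https://github.com/WongKinYiu/yolov9/releases/download/v0.1"
WEIGHT_FILES = ["yolov9-c.pt", "yolov9-e.pt", "gelan-c.pt", "gelan-e.pt"]


def weight_urls(names=WEIGHT_FILES):
    """Return (file name, URL) pairs for the checkpoints to fetch."""
    return [(name, f"{BASE_URL}/{name}") for name in names]


def download_weights(dest_dir, names=WEIGHT_FILES):
    """Download each checkpoint into dest_dir, skipping files already present."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    for name, url in weight_urls(names):
        target = dest / name
        if target.exists():
            continue  # the checkpoints are large, so avoid re-downloading them
        urllib.request.urlretrieve(url, target)  # follows GitHub's redirect
    return dest
```

Calling `download_weights("weights", ["gelan-c.pt"])` fetches only the checkpoint used for training later in this guide.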

Run Inference on a YOLOv9 Model

Let’s run inference using the GELAN-C COCO checkpoint on an example image. Create a new data directory and download an example image into your notebook. You can use our dog image as an example, or any other image you want.

!mkdir -p {HOME}/data

!wget -P {HOME}/data -q https://media.roboflow.com/notebooks/examples/dog.jpeg

SOURCE_IMAGE_PATH = f"{HOME}/data/dog.jpeg"

We can now run inference on our image:

!python detect.py --weights {HOME}/weights/gelan-c.pt --conf 0.1 --source {HOME}/data/dog.jpeg --device 0

from IPython.display import Image

Image(filename=f"{HOME}/yolov9/runs/detect/exp/dog.jpeg", width=600)

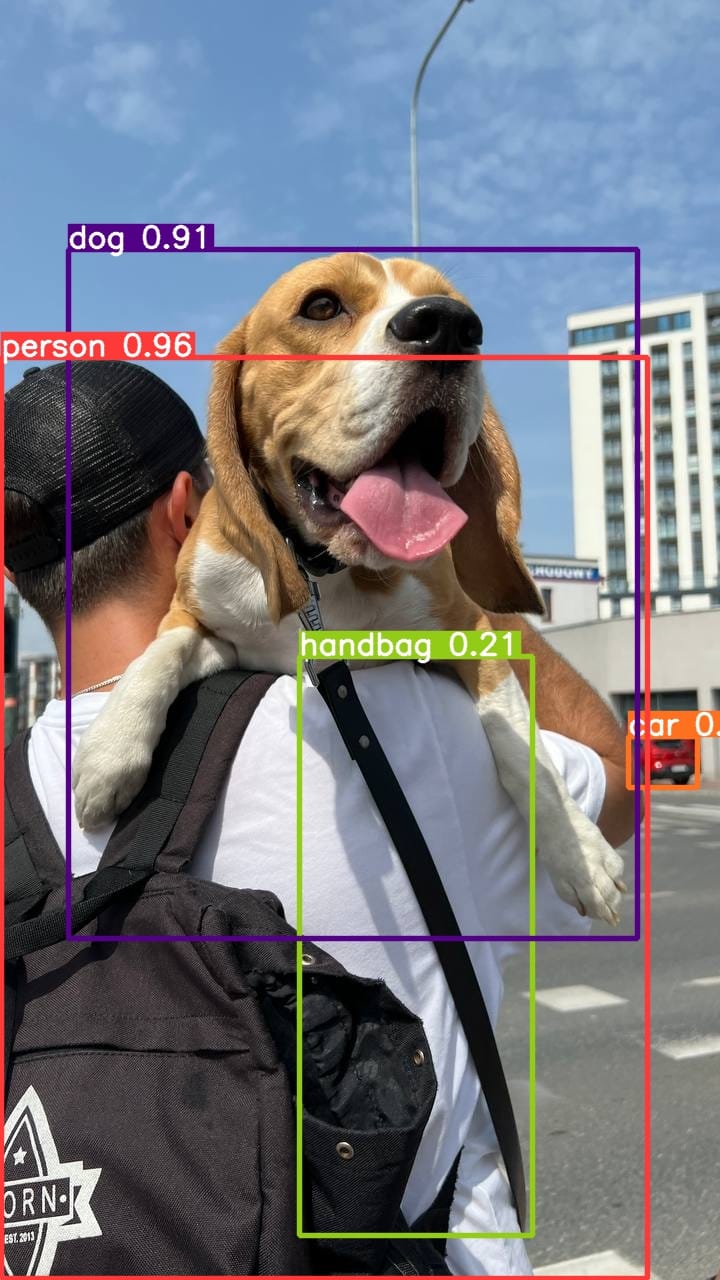

Our mannequin returns:

Our model successfully identified a person, a dog, and a car in the image. That said, it misidentified a strap as a handbag and failed to detect the backpack.
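The command above uses `--conf 0.1`, a permissive threshold that lets low-confidence boxes such as the strap through. A minimal sketch for inspecting detections numerically, assuming you re-run `detect.py` with `--save-txt --save-conf` (switches inherited from the YOLOv5 codebase; confirm with `python detect.py --help` in your checkout) so that it writes one `class x_center y_center width height confidence` line per box, normalized to the image size. `Detection`, `parse_detections`, and `filter_by_confidence` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    class_id: int
    x_center: float  # normalized to [0, 1]
    y_center: float
    width: float
    height: float
    confidence: float


def parse_detections(text):
    """Parse lines of 'class xc yc w h conf' into Detection objects."""
    detections = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 6:
            continue  # skip blank lines or lines saved without a confidence value
        cls, xc, yc, w, h, conf = parts
        detections.append(Detection(int(float(cls)), float(xc), float(yc),
                                    float(w), float(h), float(conf)))
    return detections


def filter_by_confidence(detections, threshold):
    """Keep only detections at or above the confidence threshold."""
    return [d for d in detections if d.confidence >= threshold]
```

Raising the threshold after the fact, rather than re-running inference, makes it cheap to see which false positives disappear and which true detections you would lose along with them.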

Let’s try the v9-E model, the model with the most parameters:

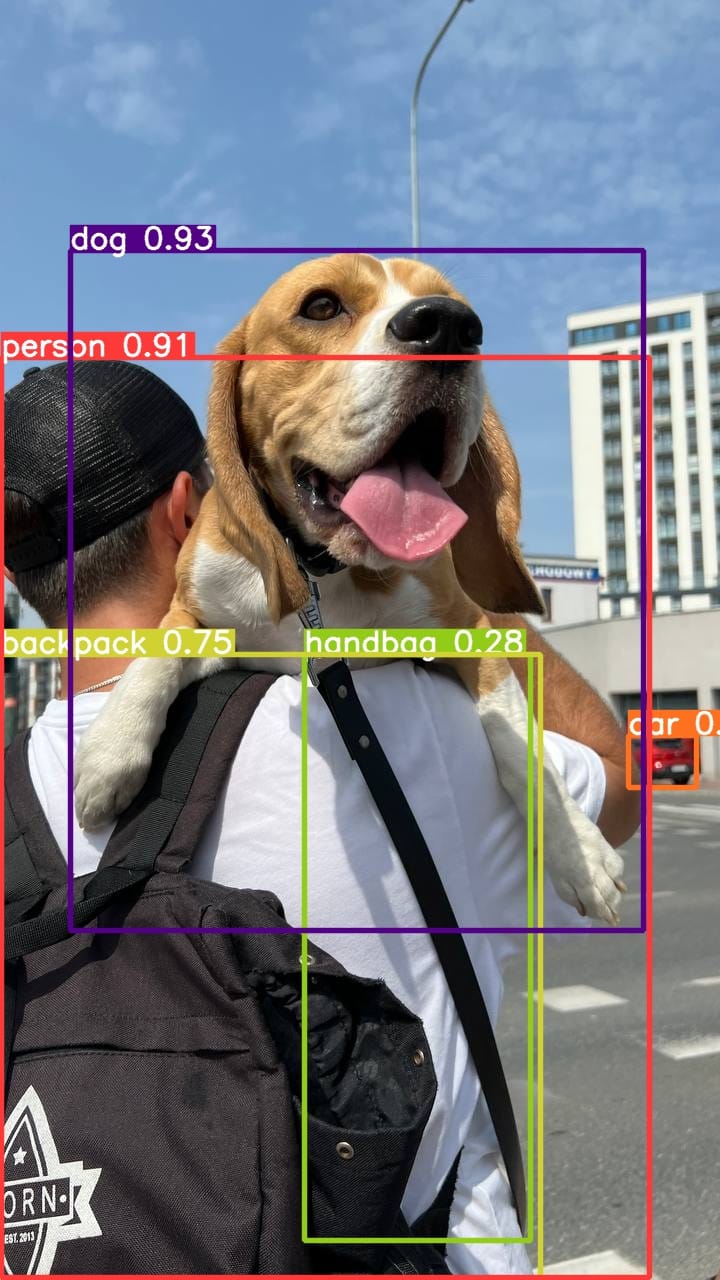

!python detect.py --weights {HOME}/weights/yolov9-e.pt --conf 0.1 --source {HOME}/knowledge/canine.jpeg --device 0Picture(filename=f"{HOME}/yolov9/runs/detect/exp2/canine.jpeg", width=600)Our mannequin returns:

The model successfully identified the person, dog, car, and backpack.

How to Train a YOLOv9 Model

You can train a YOLOv9 model using the train.py file in the YOLOv9 project directory.

Step #1: Download a Dataset

To start training a model, you will need a dataset. For this guide, we are going to use a dataset of football players. The resulting model will be able to identify football players on a field.

If you do not have a dataset, check out Roboflow Universe, a community where over 200,000 computer vision datasets have been shared publicly. You can find datasets covering everything from book spines to football players to solar panels.

Run the following code to download the dataset we will work with in this guide:

%cd {HOME}/yolov9

!pip install -q roboflow

import roboflow

roboflow.login()

rf = roboflow.Roboflow()

project = rf.workspace("roboflow-jvuqo").project("football-players-detection-3zvbc")

dataset = project.version(1).download("yolov7")

When you run this code, you will be asked to authenticate with Roboflow. Follow the link that appears in your terminal to authenticate. If you don’t have an account, you will be taken to a page where you can create one. Then, click the link again to authenticate with the Python package.

This code downloads a dataset in the YOLOv7 format, which is compatible with the YOLOv9 model.

You can use any dataset formatted in the YOLOv7 format with this guide.
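In the YOLOv7 (YOLO text) format, each image has a `.txt` label file with one line per object: an integer class index followed by the box center, width, and height, each divided by the image width or height so they fall in [0, 1]. A minimal sketch of converting a pixel-space corner box into such a line, plus a sanity check for existing lines; `to_yolo_line` and `is_valid_label_line` are hypothetical helpers, and `num_classes` should match the `nc` value in your dataset's `data.yaml`.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel box given by its corners to a normalized YOLO label line."""
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def is_valid_label_line(line, num_classes):
    """Check one label line: class index below num_classes, four values in [0, 1]."""
    parts = line.split()
    if len(parts) != 5:
        return False
    try:
        class_id = int(parts[0])
        coords = [float(p) for p in parts[1:]]
    except ValueError:
        return False
    return 0 <= class_id < num_classes and all(0.0 <= c <= 1.0 for c in coords)
```

For a hypothetical 1920x1080 frame with a box from (960, 540) to (1056, 756), `to_yolo_line(2, 960, 540, 1056, 756, 1920, 1080)` yields `2 0.525000 0.600000 0.050000 0.200000`.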

Step #2: Use the YOLOv9 Python Script to Train a Model

Let’s train a model on our dataset for 20 epochs. We will do so using the GELAN-C architecture, one of the two architectures released as part of the YOLOv9 GitHub repository. GELAN-C is fast to train, and its inference times are fast, too.

You can do so using the following code:

%cd {HOME}/yolov9

!python train.py \
--batch 16 --epochs 20 --img 640 --device 0 --min-items 0 --close-mosaic 15 \
--data {dataset.location}/data.yaml \
--weights {HOME}/weights/gelan-c.pt \
--cfg models/detect/gelan-c.yaml \
--hyp hyp.scratch-high.yaml
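To see what the numbers in that command imply, here is a back-of-the-envelope sketch. It assumes a hypothetical training-set size, counts batches (not optimizer steps, since the training script may accumulate gradients over several batches), and reads `--close-mosaic 15` as turning off mosaic augmentation for the final 15 epochs; that reading is our assumption, so check `train.py` in your checkout.

```python
import math


def training_schedule(num_train_images, batch_size=16, epochs=20, close_mosaic=15):
    """Summarize a run: batches per epoch, total batches, and the first
    (0-indexed) epoch trained without mosaic augmentation."""
    batches_per_epoch = math.ceil(num_train_images / batch_size)
    total_batches = batches_per_epoch * epochs
    # Assumption: mosaic is disabled for the last `close_mosaic` epochs
    mosaic_off_from = max(epochs - close_mosaic, 0)
    return {
        "batches_per_epoch": batches_per_epoch,
        "total_batches": total_batches,
        "mosaic_off_from_epoch": mosaic_off_from,
    }
```

With a hypothetical 612 training images, `training_schedule(612)` gives 39 batches per epoch, 780 batches in total, and mosaic switched off from epoch 5, so only the first five of the 20 epochs would use mosaic under this reading.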

Your model will start training. You will see training metrics for each epoch as the model trains.

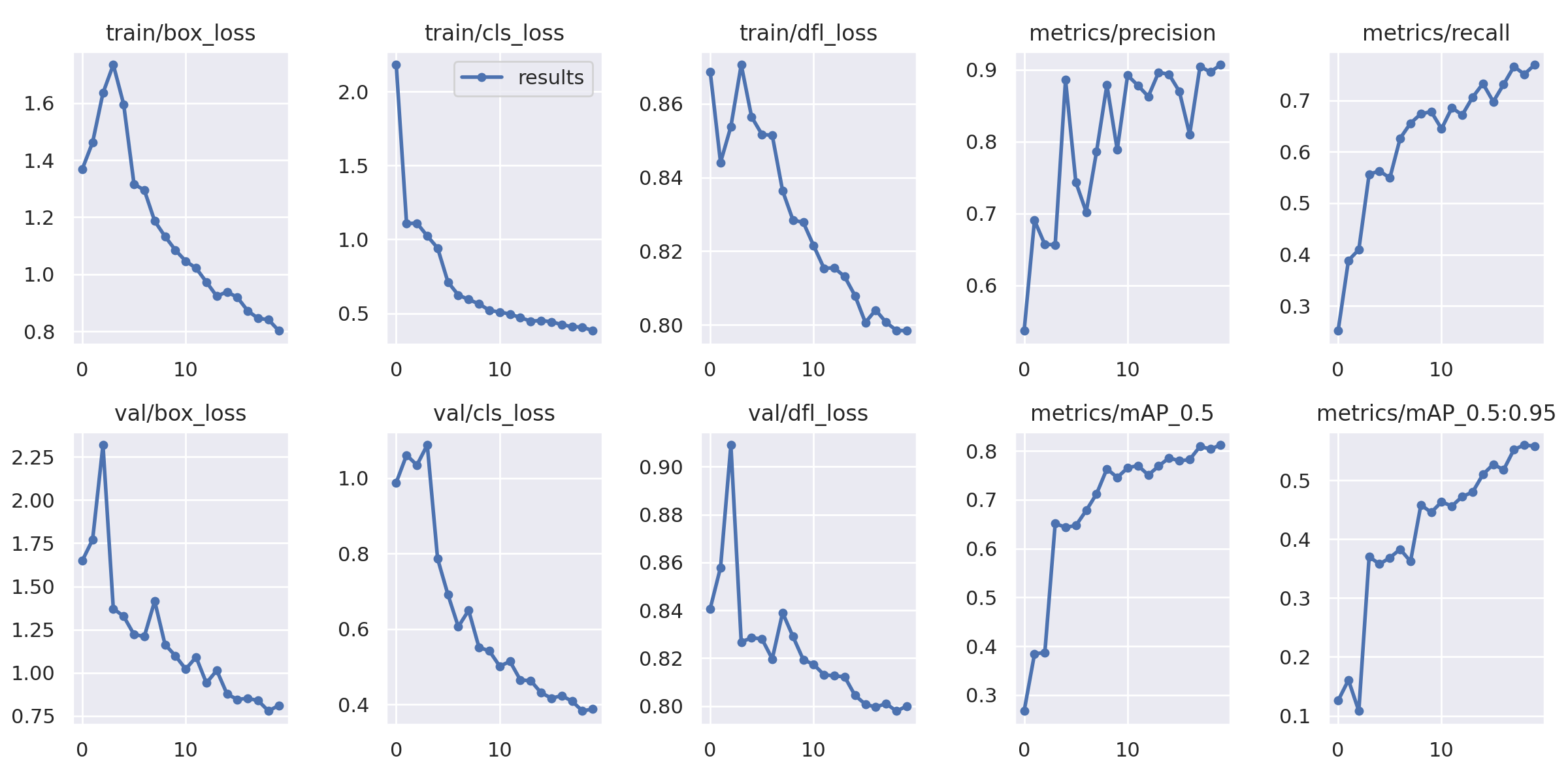

Once your model has finished training, you can evaluate the results using the graphs generated by YOLOv9.

Run the following code to view your training graphs:

Image(filename=f"{HOME}/yolov9/runs/train/exp/results.png", width=1000)

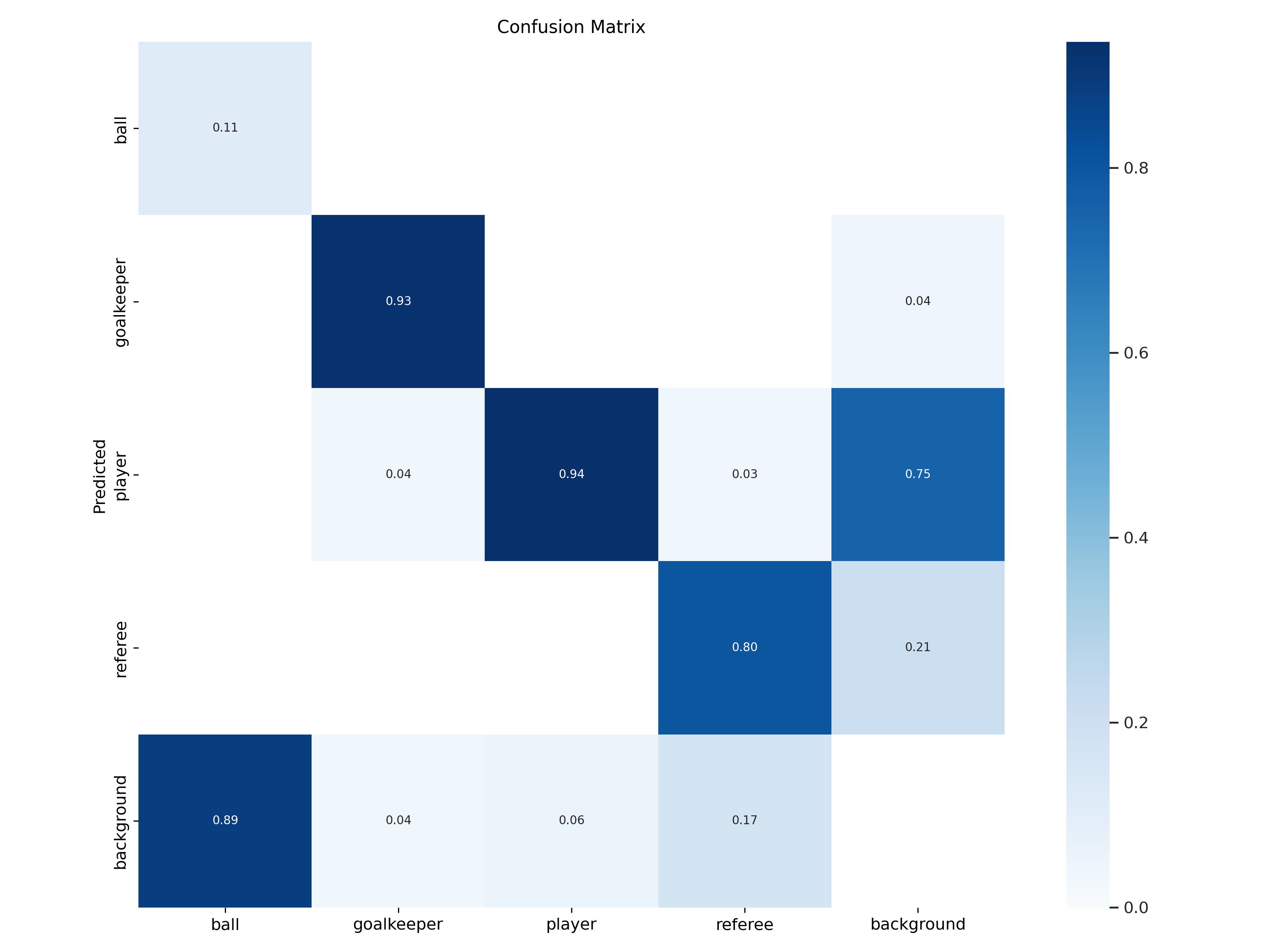
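Besides the plot, the run directory usually holds a `results.csv` with one row per epoch; this is an assumption based on the YOLOv5-style training code YOLOv9 builds on. A minimal sketch that finds the best epoch for one metric column. Header cells in such files may be padded with spaces, so the sketch strips them; the default column name `metrics/mAP_0.5:0.95` is an assumption, so print the header of your own file and pass the right name.

```python
import csv
import io


def best_epoch(csv_text, metric="metrics/mAP_0.5:0.95"):
    """Return (epoch, value) for the row with the highest value of `metric`."""
    reader = csv.reader(io.StringIO(csv_text))
    header = [cell.strip() for cell in next(reader)]
    col = header.index(metric)  # raises ValueError if the column is missing
    epoch_col = header.index("epoch")
    best = None
    for row in reader:
        if not row:
            continue  # ignore trailing empty lines
        epoch = int(row[epoch_col].strip())
        value = float(row[col].strip())
        if best is None or value > best[1]:
            best = (epoch, value)
    return best
```

In the notebook, something like `best_epoch(open(f"{HOME}/yolov9/runs/train/exp/results.csv").read())` would apply it to the run above.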

Run the following code to view your confusion matrix:

Image(filename=f"{HOME}/yolov9/runs/train/exp/confusion_matrix.png", width=1000)
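A confusion matrix is easier to act on once reduced to per-class precision and recall. A minimal sketch, assuming a square matrix of raw counts (not the normalized values a plot may display) where rows are predicted classes and columns are true classes; check the axis labels on your own plot. If the matrix includes an extra background row and column, keeping it as the last class still gives correct numbers for the real classes, though the background entry itself is not meaningful. `per_class_precision_recall` is a hypothetical helper.

```python
def per_class_precision_recall(matrix):
    """Compute (precision, recall) per class from a confusion matrix.

    matrix[p][t] counts objects predicted as class p whose true class is t.
    Precision divides by the row total; recall divides by the column total.
    """
    n = len(matrix)
    results = []
    for k in range(n):
        true_pos = matrix[k][k]
        predicted_k = sum(matrix[k])                    # everything predicted as k
        actual_k = sum(matrix[p][k] for p in range(n))  # everything truly k
        precision = true_pos / predicted_k if predicted_k else 0.0
        recall = true_pos / actual_k if actual_k else 0.0
        results.append((precision, recall))
    return results
```

For `[[8, 2], [1, 9]]`, class 0 has precision 8/10 and recall 8/9, while class 1 has precision 9/10 and recall 9/11.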

Run the following code to see predictions from your model on a batch of images from your validation set:

Image(filename=f"{HOME}/yolov9/runs/train/exp/val_batch0_pred.jpg", width=1000)
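The paths above hard-code the `exp` run directory, which only matches your first run. Like the YOLOv5 code it builds on, the repository appears to write each new run to a fresh directory (`exp`, `exp2`, `exp3`, ...), so hard-coded names go stale. A minimal sketch that picks the run with the highest index instead; `run_index` and `latest_run` are hypothetical helpers, and the naming pattern is an assumption based on the directories this guide uses.

```python
import re
from pathlib import Path


def run_index(name, prefix="exp"):
    """Map 'exp' -> 1, 'exp2' -> 2, and so on; return None for other names."""
    match = re.fullmatch(re.escape(prefix) + r"(\d*)", name)
    if match is None:
        return None
    return int(match.group(1)) if match.group(1) else 1


def latest_run(runs_dir, prefix="exp"):
    """Return the run directory with the highest index, or None if there is none."""
    runs = [p for p in Path(runs_dir).iterdir()
            if p.is_dir() and run_index(p.name, prefix) is not None]
    if not runs:
        return None
    return max(runs, key=lambda p: run_index(p.name, prefix))
```

For example, `Image(filename=str(latest_run(f"{HOME}/yolov9/runs/train") / "results.png"), width=1000)` shows the graphs from the most recent training run. Sorting by index rather than modification time avoids surprises when you later edit files inside an older run.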

Step #3: Run Inference on the Custom Model

Now that we have a trained model, we can run inference. To do so, we can use the detect.py file in the YOLOv9 repository.

Run the following code to run inference on all images in your validation set:

!python detect.py \
--img 1280 --conf 0.1 --device 0 \
--weights {HOME}/yolov9/runs/train/exp/weights/best.pt \
--source {dataset.location}/valid/images

import glob

from IPython.display import Image, display

# This is the third detect.py run in this guide, so its results land in runs/detect/exp3
for image_path in glob.glob(f'{HOME}/yolov9/runs/detect/exp3/*.jpg')[:3]:
    display(Image(filename=image_path, width=600))
    print("\n")

We trained our model on images with a size of 640, which lets us train with fewer computational resources. During inference, we increase the image size to 1280, which can yield more accurate results, particularly for small objects such as distant players.
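A rough way to see why a larger inference size can help with small objects: YOLO-style preprocessing resizes each image so that it fits inside the requested size while preserving its aspect ratio (letterboxing). The sketch below applies that scale factor to a hypothetical 1920x1080 broadcast frame and a 30-pixel-tall player; both sizes are illustrative assumptions, not measurements from this dataset.

```python
def letterbox_scale(img_w, img_h, size):
    """Scale factor that fits an img_w x img_h image inside a size x size square."""
    return min(size / img_w, size / img_h)


def apparent_height(object_px, img_w, img_h, size):
    """Height in pixels an object occupies after letterbox resizing."""
    return object_px * letterbox_scale(img_w, img_h, size)


for size in (640, 1280):
    h = apparent_height(30, 1920, 1080, size)
    print(f"--img {size}: a 30 px player is about {h:.0f} px tall")
```

At 640 the player shrinks to about 10 pixels; at 1280 it keeps about 20. The trade-off is cost: doubling the side length quadruples the number of pixels, so inference at 1280 takes roughly four times the compute of inference at 640.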

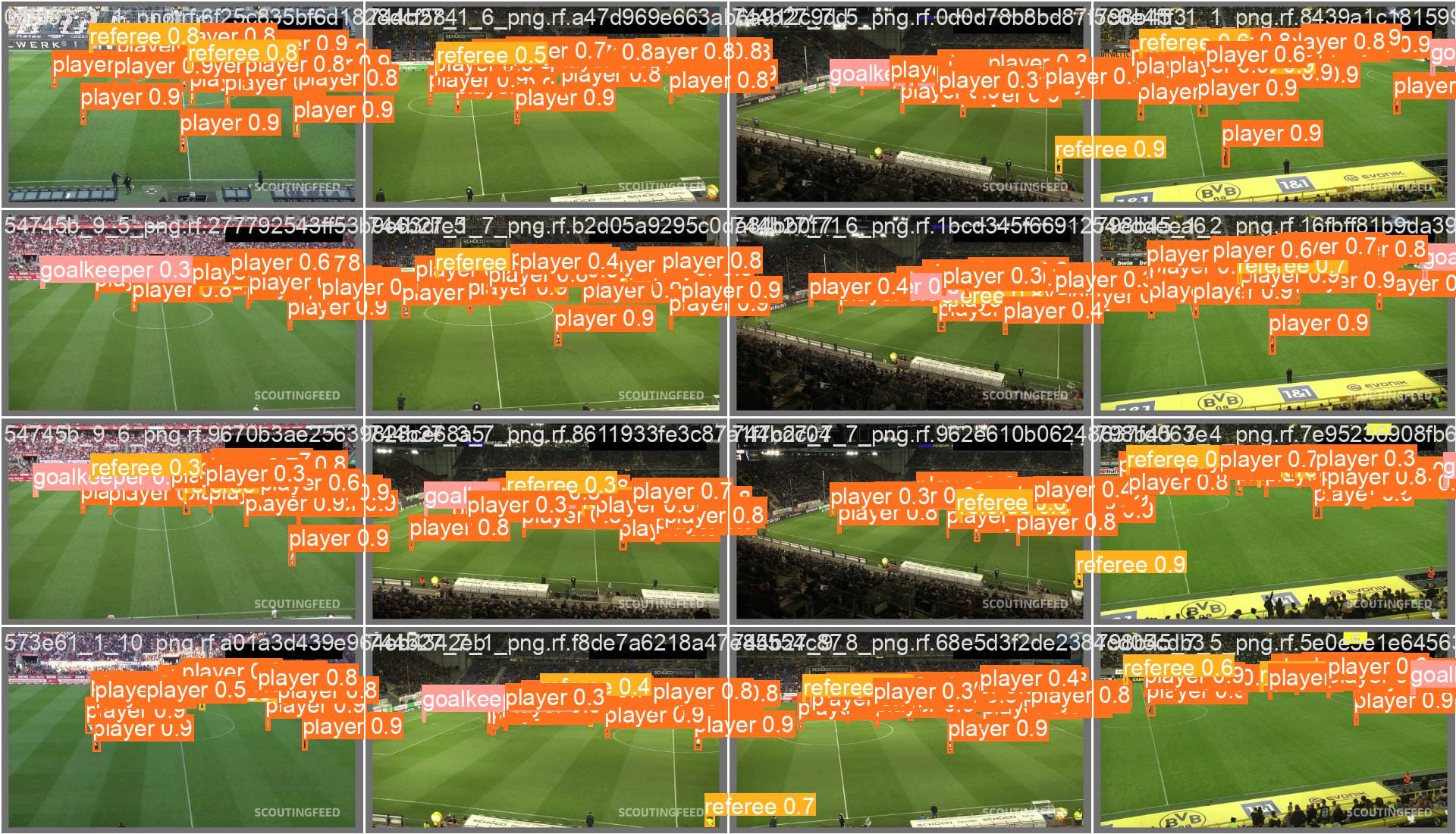

Listed below are three examples of outcomes from our mannequin:

Our model successfully identified players, referees, and goalkeepers.

Conclusion

YOLOv9, released by Chien-Yao Wang, I-Hau Yeh, and Hong-Yuan Mark Liao, is a new computer vision model architecture. You can train object detection models using the YOLOv9 architecture.

In this guide, we demonstrated how to run inference on and train a YOLOv9 model on a custom dataset. We cloned the YOLOv9 project code, downloaded the model weights, then ran inference using the default COCO weights. We then trained a fine-tuned model using a football players detection dataset. We reviewed training graphs and the confusion matrix, then tested the model on images from the validation set.