The latest installment in the YOLO series, YOLOv9, was released on February 21st, 2024. Since its inception in 2015, the YOLO (You Only Look Once) object-detection algorithm has been closely followed by tech enthusiasts, data scientists, ML engineers, and more, gaining a massive following thanks to its open-source nature and community contributions. With every new release, the YOLO architecture becomes easier to use and much faster, lowering the barriers to entry for people around the world.

YOLO was introduced in a research paper by J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, marking a step forward in real-time object detection and far outpacing the earlier Region-based Convolutional Neural Network (R-CNN) approach in speed. It is a single-pass algorithm: one neural network predicts bounding boxes and class probabilities using the full image as input.

What’s YOLOv9?

YOLOv9 is the latest version of YOLO, released in February 2024 by Chien-Yao Wang, I-Hau Yeh, and Hong-Yuan Mark Liao. It is an improved real-time object detection model that aims to surpass all convolution-based and transformer-based methods.

YOLOv9 is released in four models, ordered by parameter count: v9-S, v9-M, v9-C, and v9-E. To improve accuracy, it introduces programmable gradient information (PGI) and the Generalized Efficient Layer Aggregation Network (GELAN). PGI prevents information loss and ensures reliable gradient updates, and GELAN optimizes lightweight models with gradient path planning.

Currently, the only computer vision task supported by YOLOv9 is object detection.

YOLO Version History

Before diving into the YOLOv9 specifics, let's briefly recap the other YOLO versions available today.

YOLOv1

YOLOv1 achieved a mean average precision (mAP) of 63.4 at an inference speed of 45 FPS on the open-source Pascal VOC 2007 dataset, far faster than R-CNN-based detectors. With YOLOv1, object detection is treated as a regression task: bounding boxes and class probabilities are predicted from a single pass over an image.
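Because YOLOv1 regresses everything in one pass, its output is a fixed-size tensor. The sketch below assumes the Pascal VOC settings from the YOLOv1 paper (a 7 × 7 grid, 2 boxes per cell, 20 classes) and a simplified box encoding where (x, y) are offsets inside a cell and (w, h) are already relative to the whole image; the function names are ours, not from any library.

```python
from typing import Tuple


def yolov1_output_shape(S: int = 7, B: int = 2, C: int = 20) -> Tuple[int, int, int]:
    """Each of the S x S grid cells predicts B boxes (x, y, w, h, confidence)
    plus C class probabilities, so the output is S x S x (B * 5 + C)."""
    return (S, S, B * 5 + C)


def cell_to_image_box(row: int, col: int, x: float, y: float, w: float, h: float,
                      S: int = 7) -> Tuple[float, float, float, float]:
    """Convert one box predicted by grid cell (row, col) into image-normalized
    (center_x, center_y, width, height). Assumes x, y are offsets within the
    cell and w, h are relative to the full image, all in [0, 1]."""
    center_x = (col + x) / S
    center_y = (row + y) / S
    return (center_x, center_y, w, h)


print(yolov1_output_shape())                         # (7, 7, 30)
print(cell_to_image_box(3, 3, 0.5, 0.5, 0.2, 0.4))   # (0.5, 0.5, 0.2, 0.4)
```

The 30 channels per cell come from 2 × 5 box values plus 20 class scores, which is why a single forward pass is enough to read out every detection.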

YOLOv2

Released in 2016, YOLOv2 can detect 9000+ object categories. It introduced anchor boxes: predefined bounding boxes called priors that the model uses to pin down the ideal position of an object. YOLOv2 achieved 76.8 mAP at 67 FPS on the VOC 2007 dataset.
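With anchor boxes, the network no longer predicts box sizes from scratch; it predicts offsets relative to a prior. A minimal sketch of the YOLOv2-style decoding, assuming all values are in grid-cell units, (cx, cy) is the top-left corner of the cell, and (pw, ph) is the anchor's width and height:

```python
import math
from typing import Tuple


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def decode_yolov2_box(tx: float, ty: float, tw: float, th: float,
                      cx: float, cy: float, pw: float, ph: float
                      ) -> Tuple[float, float, float, float]:
    """Turn raw outputs (tx, ty, tw, th) into a box (bx, by, bw, bh)."""
    bx = sigmoid(tx) + cx    # sigmoid keeps the predicted center inside its cell
    by = sigmoid(ty) + cy
    bw = pw * math.exp(tw)   # exp rescales the anchor prior's width
    bh = ph * math.exp(th)   # ...and height, always staying positive
    return bx, by, bw, bh


# Zero raw outputs give a box centered in its cell with exactly the anchor's size.
print(decode_yolov2_box(0.0, 0.0, 0.0, 0.0, cx=3, cy=4, pw=1.5, ph=2.0))  # (3.5, 4.5, 1.5, 2.0)
```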

YOLOv3

The authors released YOLOv3 in 2018, which boasted higher accuracy than previous versions, with an mAP of 28.2 at 22 milliseconds. YOLOv3 uses Darknet-53 as its backbone, and to predict classes it uses independent logistic classifiers trained with binary cross-entropy (BCE) loss instead of a softmax.
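The difference between a softmax and independent logistic classifiers is that a softmax forces the class scores to compete and sum to 1, while per-class sigmoids score each class as its own yes/no question, so overlapping labels can both be likely. A small pure-Python sketch of both, plus the BCE loss (function names are illustrative):

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)                                # subtract max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def independent_sigmoids(logits: List[float]) -> List[float]:
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]


def binary_cross_entropy(probs: List[float], targets: List[int], eps: float = 1e-7) -> float:
    """Mean BCE over classes; each class is treated as a separate binary problem."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)              # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(probs)


logits = [2.0, 2.0, -1.0]                          # e.g. two overlapping labels and one unrelated class
print(sum(softmax(logits)))                        # ~1.0: classes compete
print(sum(independent_sigmoids(logits)))           # > 1: both overlapping labels can be "on"
```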

YOLOv4

In 2020, Alexey Bochkovskiy et al. released YOLOv4, introducing the concepts of a Bag of Freebies (BoF) and a Bag of Specials (BoS). BoF is a set of training-time techniques, such as data augmentation, that increase accuracy at no additional inference cost, while BoS significantly improves accuracy with a slight increase in inference cost. The model achieved 43.5 mAP at 65 FPS on the COCO dataset.

YOLOv5

Without an official research paper, Ultralytics also released YOLOv5 in 2020. The model is easy to train since it is implemented in PyTorch. Its architecture uses a Cross Stage Partial (CSP) backbone for better gradient flow and lower computational cost. YOLOv5 uses YAML files instead of CFG files for model configuration.

YOLOv6

YOLOv6 is another unofficial version, released in 2022 by Meituan, a Chinese shopping platform. The company targeted the model at industrial applications, with better performance than its predecessor. The changes resulted in YOLOv6n reaching an mAP of 37.5 at 1187 FPS on the COCO dataset and YOLOv6s reaching 45 mAP at 484 FPS.

YOLOv7

In July 2022, a group of researchers released the open-source model YOLOv7, the fastest and most accurate real-time object detector at the time, with up to 56.8% AP at frame rates ranging from 5 to 160 FPS. YOLOv7 is based on the Extended Efficient Layer Aggregation Network (E-ELAN), which improves training by letting the model learn diverse features with efficient computation.

YOLOv8

YOLOv8 has no official paper (as with YOLOv5) but boasts higher accuracy and faster speed for state-of-the-art performance. For instance, YOLOv8m reaches 50.2 mAP at 1.83 milliseconds on the MS COCO dataset, measured on an A100 with TensorRT. YOLOv8 also ships as a Python package with a CLI, making it easy to use and develop.

YOLOv9 Architecture

To address the information bottleneck (information lost during the feed-forward process), the YOLOv9 creators propose a new concept: programmable gradient information (PGI). The model generates reliable gradients via an auxiliary reversible branch. Deep features still execute the target task, and the auxiliary branch avoids the semantic loss caused by multi-path features.
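The information bottleneck can be stated with mutual information I. As a sketch in our own notation (which may differ from the paper's), for successive layer transformations f_θ and g_φ applied to an input X, the data-processing inequality says that what the features retain about X can only shrink with depth. A reversible transformation r_ψ with inverse v_ζ is the exception, because X can be reconstructed from its output and so nothing is lost:

```latex
I(X, X) \;\ge\; I\big(X, f_\theta(X)\big) \;\ge\; I\big(X, g_\phi(f_\theta(X))\big)

X = v_\zeta\big(r_\psi(X)\big) \;\Longrightarrow\; I(X, X) = I\big(X, r_\psi(X)\big) = I\big(X, v_\zeta(r_\psi(X))\big)
```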

The authors achieved the best training results by applying PGI propagation at different semantic levels. The reversible architecture of PGI is built on the auxiliary branch, which can be removed at inference time, so it adds no inference cost. Since PGI can freely select a loss function suitable for the target task, it also overcomes the problems encountered by masked modeling.
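To make "reversible" concrete, here is a toy additive coupling layer in the style of RevNet/NICE. This is a swapped-in illustration of a reversible function, not YOLOv9's actual auxiliary branch. The input is split into two halves, one half is shifted by an arbitrary function of the other, and the original input can always be recovered exactly, so no information is lost no matter what the function computes:

```python
from typing import Callable, List, Tuple

Vector = List[float]


def couple(x1: Vector, x2: Vector, f: Callable[[Vector], Vector]) -> Tuple[Vector, Vector]:
    """Forward pass: y1 = x1, y2 = x2 + f(x1). f can be any function, even a non-invertible one."""
    y2 = [a + b for a, b in zip(x2, f(x1))]
    return list(x1), y2


def uncouple(y1: Vector, y2: Vector, f: Callable[[Vector], Vector]) -> Tuple[Vector, Vector]:
    """Inverse pass: x1 = y1, x2 = y2 - f(y1). Exact (up to float rounding) because y1 == x1."""
    x2 = [a - b for a, b in zip(y2, f(y1))]
    return list(y1), x2


f = lambda v: [3 * a * a - 1 for a in v]   # arbitrary, non-invertible "layer"
y1, y2 = couple([1, 2], [5, -3], f)
print(uncouple(y1, y2, f))                 # ([1, 2], [5, -3]): the input is fully recovered
```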

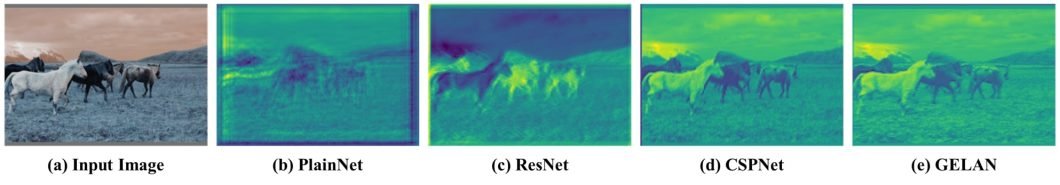

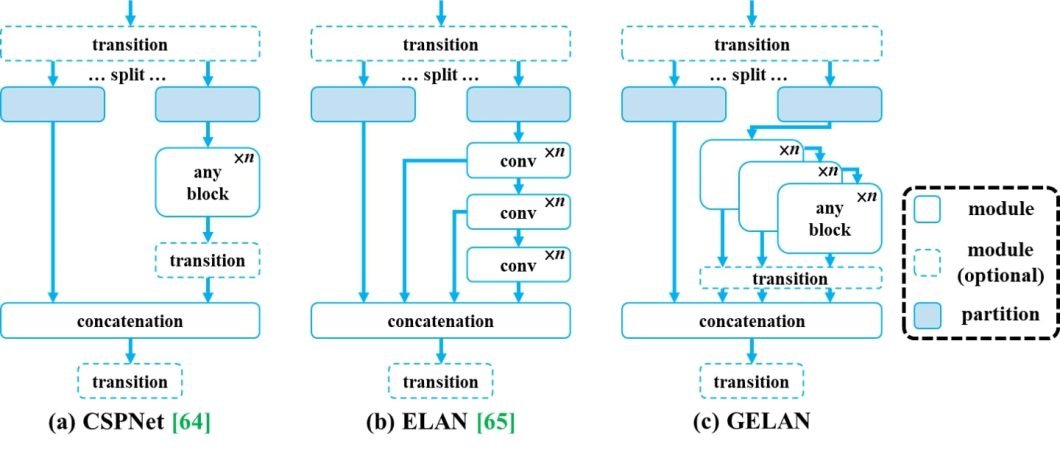

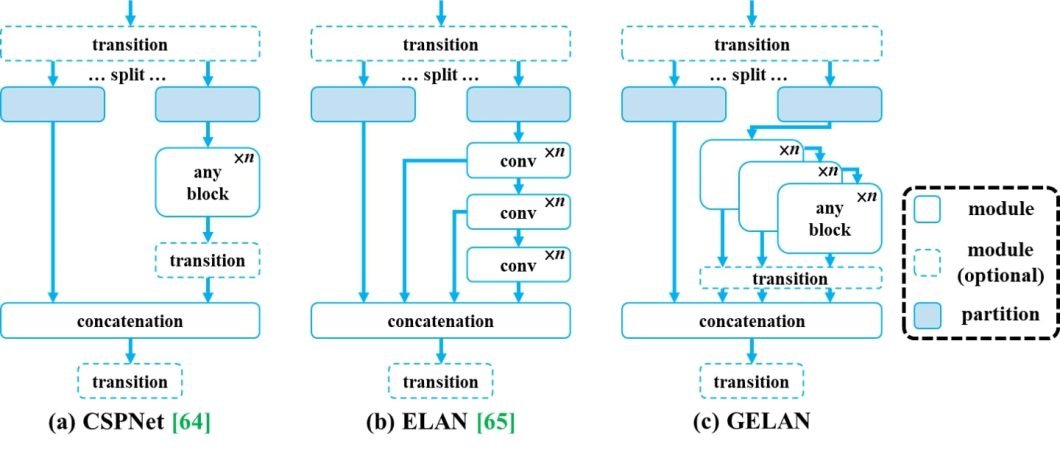

The proposed PGI mechanism can be applied to deep neural networks of various sizes. In the paper, the authors designed a generalized ELAN (GELAN) that simultaneously takes into account the number of parameters, computational complexity, accuracy, and inference speed. The design lets users choose any appropriate computational block for different inference devices.
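The "choose any computational block" idea can be sketched as a toy aggregation pattern, loosely modeled on the split, stack, concatenate, and fuse structure of GELAN-style layers. Everything here is an assumption for illustration: a feature map is reduced to a plain list with one value per channel, and `blocks` and `fuse` are arbitrary callables standing in for real convolutional modules.

```python
from typing import Callable, List, Sequence

Feature = List[float]                      # toy stand-in: one value per channel
Block = Callable[[Feature], Feature]


def gelan_like(x: Feature, blocks: Sequence[Block],
               fuse: Block = lambda f: f) -> Feature:
    """Toy GELAN-style aggregation (illustrative only, not the official code)."""
    half = len(x) // 2
    # CSP-style split: both halves are kept for the final concatenation.
    parts: List[Feature] = [x[:half], x[half:]]
    # ELAN-style stacking: each block consumes the previous output,
    # and every intermediate output is kept for aggregation.
    for block in blocks:
        parts.append(block(parts[-1]))
    # Concatenate along the channel axis, then fuse (a 1x1 conv in a real network).
    concatenated = [v for part in parts for v in part]
    return fuse(concatenated)


double = lambda f: [2 * v for v in f]      # any callable can serve as a "block"
print(gelan_like([1.0, 2.0, 3.0, 4.0], [double, double]))
# [1.0, 2.0, 3.0, 4.0, 6.0, 8.0, 12.0, 16.0]
```

Because the aggregation logic never inspects what a block does, swapping in a heavier or lighter block changes the cost profile without changing the topology, which is the flexibility the paragraph above describes.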

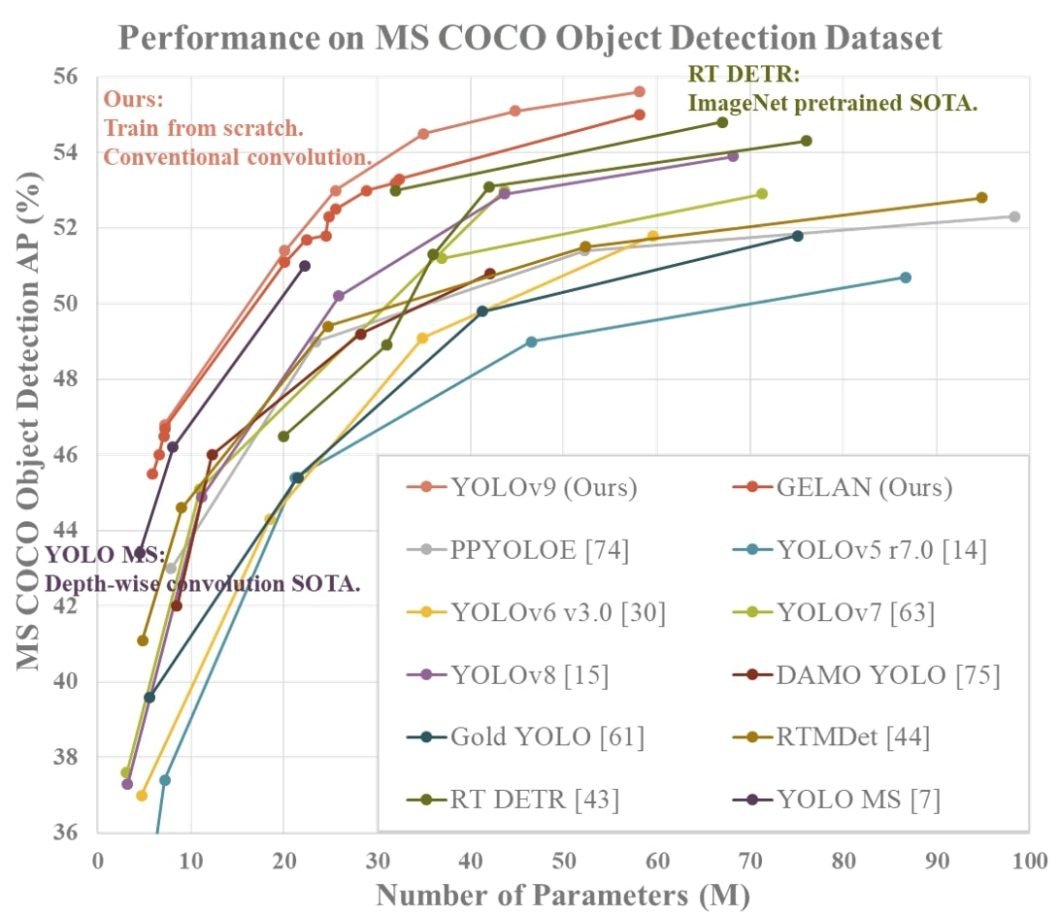

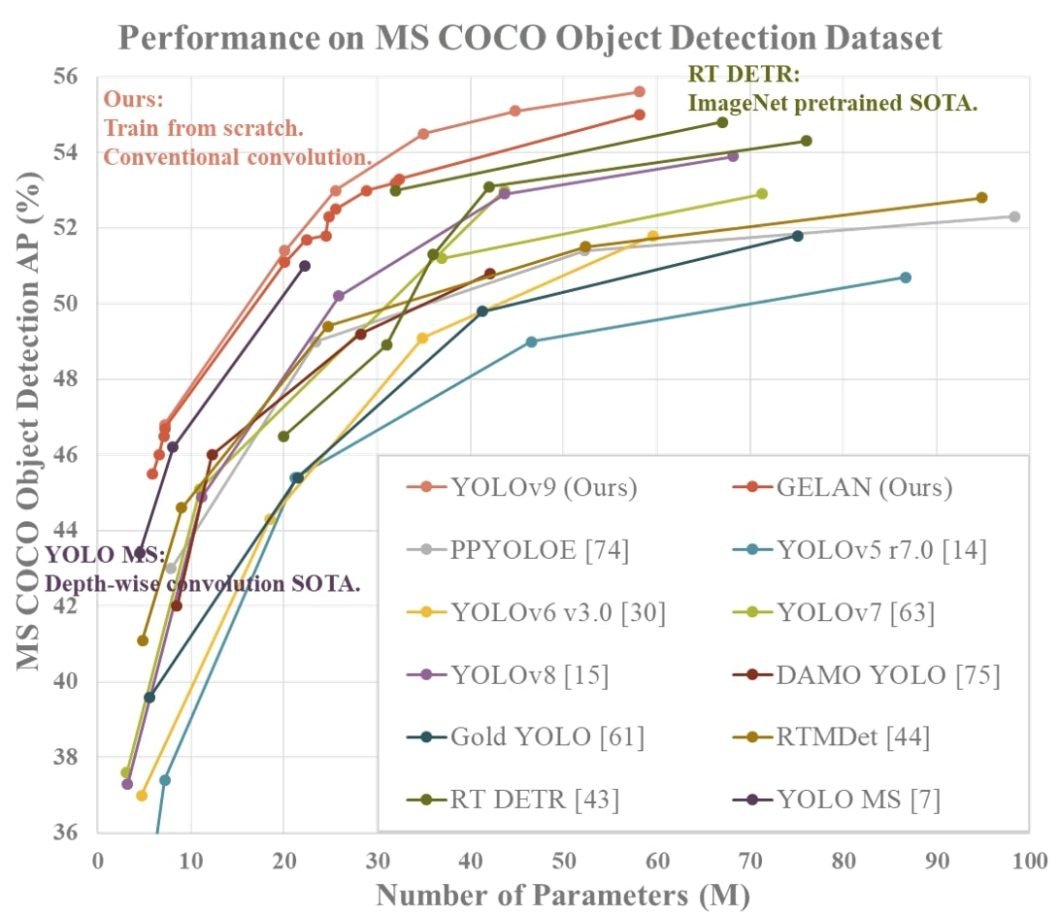

Using the proposed PGI and GELAN, the authors designed YOLOv9. They ran their experiments on the MS COCO dataset, and the results verified that YOLOv9 achieved the top performance in all cases.

Research Contributions

- A theoretical analysis of deep neural network architecture from the perspective of reversible functions. Based on this analysis, the authors designed PGI and auxiliary reversible branches and achieved excellent results.

- PGI solves the problem that deep supervision can only be used for very deep neural network architectures. It therefore allows new lightweight architectures to be put to practical, everyday use.

- GELAN uses only conventional convolution, yet achieves higher parameter utilization than designs based on depth-wise convolution. This makes it light, fast, and accurate.

- Combining PGI and GELAN, YOLOv9's object detection performance on the MS COCO dataset greatly surpasses existing real-time object detectors in all aspects.
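The GELAN contribution contrasts conventional and depth-wise convolution. For reference, here is a quick sketch of the raw weight counts of the two layer types using the standard formulas (biases optional). Fewer weights is not the same thing as better parameter utilization, which concerns how much accuracy each parameter buys; the arithmetic only shows the parameter budget depth-wise designs save and that GELAN's conventional convolutions spend.

```python
def conv_params(k: int, c_in: int, c_out: int, bias: bool = False) -> int:
    """Weights in a standard k x k convolution: every output channel sees every input channel."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def depthwise_separable_params(k: int, c_in: int, c_out: int, bias: bool = False) -> int:
    """A depth-wise k x k conv (one filter per input channel) followed by a 1 x 1 point-wise conv."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


print(conv_params(3, 64, 128))                 # 73728
print(depthwise_separable_params(3, 64, 128))  # 8768
```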

YOLOv9 License

YOLOv9 was not released with an official license. In the following days, however, WongKinYiu updated the official license to GPL-3.0. Both YOLOv7 and YOLOv9 have been released under WongKinYiu's repository.

Benefits of YOLOv9

YOLOv9 emerges as a powerful model, offering innovative features that will play an important role in the further development of object detection, and possibly even image segmentation and classification down the road. It is faster and more flexible than its predecessors, and its other advantages include:

- Handling the information bottleneck and adapting deep supervision to lightweight neural network architectures by introducing Programmable Gradient Information (PGI).

- Creating GELAN, a practical and effective neural network. GELAN has proven strong, stable performance in object detection tasks across different convolution and depth settings. It could be widely adopted as a model suited to various inference configurations.

- By combining PGI and GELAN, YOLOv9 has shown strong competitiveness. Its design allows the deep model to reduce the number of parameters by 49% and the amount of computation by 43% compared with YOLOv8, while still gaining 0.6% Average Precision on the MS COCO dataset.

- YOLOv9 is superior to RT-DETR and YOLO-MS in terms of accuracy and efficiency. It sets new standards for lightweight model performance by using conventional convolution for better parameter utilization.

| Model | #Param. (M) | FLOPs (G) | AP50:95 (val) | APS (val) | APM (val) | APL (val) |
|---|---|---|---|---|---|---|
| YOLOv7 [63] | 36.9 | 104.7 | 51.2% | 31.8% | 55.5% | 65.0% |
| + AF [63] | 43.6 | 130.5 | 53.0% | 35.8% | 58.7% | 68.9% |
| + GELAN | 41.7 | 127.9 | 53.2% | 36.2% | 58.5% | 69.9% |
| + DHLC [34] | 58.1 | 192.5 | 55.0% | 38.0% | 60.6% | 70.9% |
| + PGI | 58.1 | 192.5 | 55.6% | 40.2% | 61.0% | 71.4% |

The table above shows average precision (AP) on the MS COCO validation set as components (AF, GELAN, DHLC, PGI) are added step by step to a YOLOv7 baseline.
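Reading the table as a sequence of steps makes the cost of each addition easier to see. This small sketch copies the table's numbers and computes each row's change relative to the previous row; note that the PGI step adds no parameters or FLOPs while still raising AP, which matches the claim that PGI's auxiliary branch adds no inference cost.

```python
from typing import List, Tuple

# (step, params in M, FLOPs in G, AP50:95 val in %), copied from the table above.
ROWS: List[Tuple[str, float, float, float]] = [
    ("YOLOv7", 36.9, 104.7, 51.2),
    ("+ AF", 43.6, 130.5, 53.0),
    ("+ GELAN", 41.7, 127.9, 53.2),
    ("+ DHLC", 58.1, 192.5, 55.0),
    ("+ PGI", 58.1, 192.5, 55.6),
]


def step_deltas(rows: List[Tuple[str, float, float, float]]) -> List[Tuple[str, float, float, float]]:
    """Change in params, FLOPs, and AP for each step versus the previous row (rounded to 0.1)."""
    out = []
    for prev, cur in zip(rows, rows[1:]):
        out.append((cur[0],
                    round(cur[1] - prev[1], 1),
                    round(cur[2] - prev[2], 1),
                    round(cur[3] - prev[3], 1)))
    return out


for name, d_params, d_flops, d_ap in step_deltas(ROWS):
    print(f"{name:8s} params {d_params:+.1f}M  FLOPs {d_flops:+.1f}G  AP {d_ap:+.1f}")
```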

YOLOv9 Applications

YOLOv9 is a flexible computer vision model that you can use in many real-world applications. Here are a few common use cases.

- Logistics and distribution: Object detection can help estimate product inventory levels to ensure sufficient stock and provide information about consumer behavior.

- Autonomous vehicles: Self-driving cars can use YOLOv9 object detection to help navigate the road safely.

- People counting: Retailers and shopping malls can train the model to detect real-time foot traffic in their stores, measure queue length, and more.

- Sports analytics: Analysts can use the model to track player movements on a sports field and gather insights about team performance.
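For a use case like people counting, the detector's output still needs a little post-processing. A minimal sketch, assuming a hypothetical `Detection` record (label, confidence, pixel box); real detectors return their own formats, so the field names and the 0.5 threshold are illustrative choices, not part of YOLOv9.

```python
from typing import Iterable, NamedTuple, Tuple


class Detection(NamedTuple):
    """Hypothetical post-processed detection; (x1, y1, x2, y2) box in pixels."""
    label: str
    confidence: float
    box: Tuple[float, float, float, float]


def count_people(detections: Iterable[Detection], min_conf: float = 0.5) -> int:
    """Foot traffic in one frame: confident 'person' detections."""
    return sum(1 for d in detections if d.label == "person" and d.confidence >= min_conf)


def queue_length(detections: Iterable[Detection],
                 zone: Tuple[float, float, float, float], min_conf: float = 0.5) -> int:
    """Count people whose box center lies inside a rectangular queue zone (x1, y1, x2, y2)."""
    zx1, zy1, zx2, zy2 = zone
    n = 0
    for d in detections:
        if d.label != "person" or d.confidence < min_conf:
            continue
        x1, y1, x2, y2 = d.box
        cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
        if zx1 <= cx <= zx2 and zy1 <= cy <= zy2:
            n += 1
    return n
```

Using the box center rather than full overlap keeps people who are only partly inside the zone from being double-counted across neighboring zones.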

YOLOv9: Essential Takeaways

The YOLO models are the standard in the object detection space thanks to their strong performance and wide applicability. Here are our first conclusions about YOLOv9:

- Ease of use: YOLOv9 is already on GitHub, so users can get started with it quickly through the CLI or from Python.

- YOLOv9 tasks: YOLOv9 is efficient for real-time object detection, with improved accuracy and speed.

- YOLOv9 improvements: YOLOv9's main improvements include a decoupled head with anchor-free detection and mosaic data augmentation that is turned off during the last ten training epochs.
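Turning mosaic off near the end of training amounts to a simple epoch schedule. A minimal sketch with 0-indexed epochs; the parameter name `close_mosaic` mirrors a comparable training argument in the Ultralytics trainer (default 10), but this function is our own illustration, not code from the YOLOv9 repository. The usual rationale is that the final epochs then train on un-stitched images closer to what the model sees at inference.

```python
def mosaic_enabled(epoch: int, total_epochs: int, close_mosaic: int = 10) -> bool:
    """True if mosaic augmentation should be applied in this 0-indexed epoch.
    Mosaic is switched off for the final `close_mosaic` epochs;
    close_mosaic=0 keeps it on for the whole run."""
    return epoch < total_epochs - close_mosaic


schedule = [mosaic_enabled(e, 100) for e in range(100)]
print(schedule.index(False))   # 90: first epoch trained without mosaic
print(sum(schedule))           # 90 epochs with mosaic, 10 without
```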

In the future, we look forward to seeing whether the creators extend YOLOv9 to a wider range of computer vision tasks.
