Introduction

In the fast-evolving landscape of artificial intelligence, 2024 has brought notable developments, one being TikTok's introduction of "Depth Anything." This Monocular Depth Estimation (MDE) model was developed in collaboration with institutions such as the University of Hong Kong and Zhejiang Lab. It stands out for its use of a large dataset comprising 1.5 million labeled and 62 million unlabeled images. Depth Anything was recently open-sourced and sets a new bar for depth perception from a single image. Let's explore its architecture, its practical implementation, and the creation of a user-friendly web app using Python and Flask.

Learning Objectives

- Gain insight into the concept of Monocular Depth Estimation models and their significance in computer vision tasks.

- Learn how to implement a Monocular Depth Estimation pipeline using the Depth Anything model.

- Explore the process of developing a functional web application for Monocular Depth Estimation using Python and Flask.

- Understand how to improve user interaction and interface design in web applications through Flask and styling techniques.

- Address common questions about depth estimation, Flask web app development, image processing, and the use of Hugging Face models.

This article was published as a part of the Data Science Blogathon.


Journey to Depth Anything

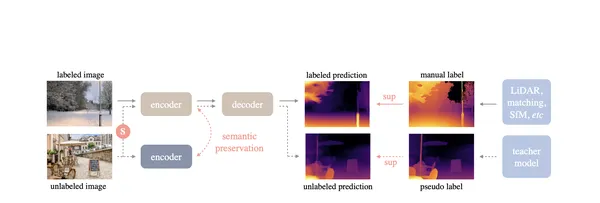

One way to innovate in the field is to look at older models and try to improve them, either by applying more training or by enhancing earlier architectures. The journey toward Depth Anything began with a critical review of existing depth estimation methods, which highlighted limitations related to data coverage. The model's success centered on the potential of unlabeled datasets, and the authors took an unconventional route to exploit it.

Initially, the authors trained a teacher model on a labeled dataset. They then tried to guide a student model using the teacher, together with unlabeled datasets pseudo-labeled by the teacher. However, this approach did not work: the two models ended up producing similar outputs, so the student gained nothing from the extra data.

To address this, the authors introduced a more challenging optimization target for the student. The student had to learn from unlabeled images subjected to color jittering, distortions, Gaussian blurring, and spatial distortion. This forced the model to learn more robust, invariant representations.
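To make these strong perturbations concrete, here is a minimal NumPy sketch applied to the student's unlabeled input. It assumes images are H×W×3 float arrays in [0, 1]. The function names, jitter strength and blur range are illustrative assumptions. The CutMix-style rectangle mixing stands in for the "spatial distortion"; it is not the authors' training code. The teacher's pseudo labels are assumed to come from the clean images, and the same mask combines them so they line up with the mixed student input.

```python
import numpy as np


def color_jitter(img, rng, strength=0.4):
    """Randomly rescale contrast and brightness of an HxWx3 float image in [0, 1]."""
    contrast = 1.0 + rng.uniform(-strength, strength)
    brightness = 1.0 + rng.uniform(-strength, strength)
    mean = img.mean(axis=(0, 1), keepdims=True)  # per-channel mean
    out = ((img - mean) * contrast + mean) * brightness
    return np.clip(out, 0.0, 1.0)


def gaussian_blur(img, sigma):
    """Separable Gaussian blur per channel, with edge padding to keep the size."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()  # normalize so flat regions keep their value
    padded = np.pad(img, ((radius, radius), (radius, radius), (0, 0)), mode="edge")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 0, padded)  # blur vertically
    return np.apply_along_axis(conv, 1, rows)    # then horizontally


def cutmix(img_a, img_b, rng, ratio=0.5):
    """Paste a random rectangle of img_b into img_a; return the mix and its mask."""
    h, w = img_a.shape[:2]
    cut_h, cut_w = int(h * np.sqrt(ratio)), int(w * np.sqrt(ratio))
    top = rng.integers(0, h - cut_h + 1)
    left = rng.integers(0, w - cut_w + 1)
    mask = np.zeros((h, w), dtype=bool)
    mask[top:top + cut_h, left:left + cut_w] = True
    return np.where(mask[..., None], img_b, img_a), mask


def strong_perturb(img, other, rng):
    """Jitter and blur two unlabeled images, then CutMix them for the student."""
    img = gaussian_blur(color_jitter(img, rng), sigma=rng.uniform(0.1, 2.0))
    other = gaussian_blur(color_jitter(other, rng), sigma=rng.uniform(0.1, 2.0))
    return cutmix(img, other, rng)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img_a, img_b = rng.random((64, 64, 3)), rng.random((64, 64, 3))
    # Stand-ins for teacher predictions on the clean, unperturbed images.
    label_a, label_b = rng.random((64, 64)), rng.random((64, 64))
    student_input, mask = strong_perturb(img_a, img_b, rng)
    student_target = np.where(mask, label_b, label_a)  # labels mixed with the same mask
    print(student_input.shape, student_target.shape)
```

Because the teacher labels the clean images while the student sees perturbed ones, the student cannot simply copy the teacher's behavior.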

Architecture of Depth Anything

Now let us look at the model's architecture. Depth Anything consists of a DINOv2 encoder for feature extraction, followed by a DPT decoder. Training happened in two stages. First, the teacher model was trained on labeled images. Then the student model was trained jointly on labeled images and unlabeled images pseudo-labeled by a large Vision Transformer (ViT-L) teacher.

Highlights of Depth Anything's Improvements

Let us dive into the key advancements of the Depth Anything implementation. The highlights below cover zero-shot relative depth estimation, zero-shot metric depth estimation, and results after fine-tuning.

Zero-Shot Relative Depth Estimation

Depth Anything achieves zero-shot relative depth estimation that exceeds the capabilities of existing models such as MiDaS v3.1 (BEiT-L-512). In practice, this means it is more accurate at determining the relative depth order among pixels.
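Relative depth predictions are only defined up to an unknown scale and shift. A common way to compare them with ground truth, used in MiDaS-style evaluations (assumed here, not taken from this article), is to fit a per-image scale and shift by least squares before measuring error. Below is a minimal NumPy sketch of that idea; `align_scale_shift` and `abs_rel` are hypothetical helper names.

```python
import numpy as np


def align_scale_shift(pred, target, mask=None):
    """Fit scale s and shift t (least squares) so that s * pred + t matches target."""
    if mask is None:
        mask = np.ones(target.shape, dtype=bool)
    p, g = pred[mask], target[mask]
    A = np.stack([p, np.ones_like(p)], axis=1)  # columns: prediction, constant term
    (s, t), *_ = np.linalg.lstsq(A, g, rcond=None)
    return s * pred + t, s, t


def abs_rel(pred, target):
    """Mean absolute relative error."""
    return float(np.mean(np.abs(pred - target) / target))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    gt = rng.uniform(1.0, 10.0, size=(8, 8))
    pred = 0.5 * gt - 0.2  # right structure, wrong scale and shift
    aligned, s, t = align_scale_shift(pred, gt)
    print(round(float(s), 6), round(float(t), 6), abs_rel(aligned, gt))
```

A prediction that differs from the ground truth only by scale and shift scores near-zero error after alignment. An unrelated prediction does not.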

Zero-Shot Metric Depth Estimation

The model also excels at zero-shot metric depth estimation, outperforming established baselines such as ZoeDepth. Metric depth means predicting the actual distance of each point in a scene from the camera, which treats every pixel as a separate regression problem.
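To illustrate what "each pixel as a regression problem" means in evaluation, the sketch below computes per-pixel metrics commonly reported for metric depth benchmarks: AbsRel, RMSE, and δ<1.25. It assumes depth maps in meters, with 0 marking missing ground truth. The `metric_depth_errors` name and the `min_depth` threshold are illustrative assumptions, not part of the article.

```python
import numpy as np


def metric_depth_errors(pred, target, min_depth=1e-3):
    """Standard per-pixel metric-depth errors over pixels with valid ground truth."""
    valid = target > min_depth  # skip pixels where ground truth is missing (often 0)
    p = np.clip(pred[valid], min_depth, None)  # avoid dividing by zero predictions
    g = target[valid]
    ratio = np.maximum(p / g, g / p)
    return {
        "abs_rel": float(np.mean(np.abs(p - g) / g)),
        "rmse": float(np.sqrt(np.mean((p - g) ** 2))),
        # fraction of pixels with max(pred/gt, gt/pred) below 1.25
        "delta1": float(np.mean(ratio < 1.25)),
    }


if __name__ == "__main__":
    gt = np.array([[1.0, 2.0], [4.0, 0.0]])    # last pixel has no ground truth
    pred = np.array([[1.0, 3.0], [4.0, 5.0]])
    print(metric_depth_errors(pred, gt))
```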

Optimum Nice-Tuning and Benchmarking

When fine-tuned on datasets such as NYUv2 and KITTI, Depth Anything delivers strong benchmark results. This showcases its adaptability and reliability across diverse scenarios.

Technical Implementation of Depth Anything

As we have seen, Depth Anything is a cutting-edge MDE model based on the DPT architecture. Trained on a dataset of roughly 62 million images, it achieves state-of-the-art results for both relative and absolute depth estimation. Let us look at the practical use of this model.

Pipeline API

The pipeline API makes Depth Anything convenient to use by abstracting away the complexity. In just a few lines of code, you can perform depth estimation with the pre-trained model:

```python
!pip install --upgrade git+https://github.com/huggingface/transformers.git

from PIL import Image
import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)
image  # display the input image in the notebook

from transformers import pipeline

# Load pipeline
pipe = pipeline(task="depth-estimation", model="LiheYoung/depth-anything-small-hf")

# Load image
url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

# Inference: "depth" is a PIL image of the predicted depth map
depth = pipe(image)["depth"]
depth  # display the depth map
```
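The pipeline returns a dictionary. Besides the ready-made `depth` image, it also includes the raw prediction under `predicted_depth` as a PyTorch tensor. If you want to control the visualization yourself, a minimal sketch is to min-max normalize that array into an 8-bit grayscale image. `depth_to_image` is a hypothetical helper, and the pipeline's own internal normalization may differ in detail.

```python
import numpy as np
from PIL import Image


def depth_to_image(predicted_depth):
    """Min-max normalize a depth array to an 8-bit grayscale PIL image."""
    d = np.squeeze(np.asarray(predicted_depth, dtype=np.float32))  # drop size-1 dims
    lo, hi = float(d.min()), float(d.max())
    scaled = (d - lo) / (hi - lo) if hi > lo else np.zeros_like(d)
    # A 2-D uint8 array becomes a mode "L" (grayscale) image.
    return Image.fromarray((scaled * 255).round().astype(np.uint8))
```

In the notebook, you could call it as `depth_to_image(pipe(image)["predicted_depth"].cpu().numpy())`.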

The code above is meant to run in a Jupyter Notebook, for example on Google Colab. However, notebooks are geared toward research and running inference. Building a useful software product requires writing a functional application.

In the next step, we will integrate this pipeline API into a fully functional web app. Users will be able to visit it and generate the MDE for their own images. These users could be photographers, lab scientists, or researchers. We will build locally, so open your favorite code editor; I will be using VS Code. Don't worry about computer resources: the small model used here needs less than 2GB of RAM. The complete project can be cloned here.

Let us now build the project from scratch.

Step 1: Flask App

You can start by creating a virtual environment. A virtual environment lets you install the application's requirements and build it on your machine in isolation. Use one unless you have a reason not to.

Create a base file for your app. Name it app.py and paste the code below into it.

```python
from flask import Flask, render_template, request
from PIL import Image
from transformers import pipeline
import requests
from io import BytesIO
import base64

app = Flask(__name__)

# Load the depth-estimation pipeline once, when the app starts
pipe = pipeline(task="depth-estimation", model="LiheYoung/depth-anything-small-hf")


@app.route('/')
def index():
    # Landing page with the URL/upload form
    return render_template('index.html')


@app.route('/estimate_depth', methods=['POST'])
def estimate_depth():
    # Get the selected input type (url or upload)
    input_type = request.form.get('input_type', 'url')
    url = None  # Initialize url variable
    original_image_base64 = None  # Initialize original_image_base64 variable

    if input_type == 'url':
        # Get the image URL from the form
        url = request.form.get('image_url', '')
        # Check if the URL is provided
        if not url:
            return "Please provide an image URL."
        # Download and load the image
        response = requests.get(url, timeout=10)
        response.raise_for_status()
        image = Image.open(BytesIO(response.content)).convert("RGB")
    elif input_type == 'upload':
        # Get the uploaded file
        uploaded_file = request.files.get('file_upload')
        # Check if a file is uploaded (an empty file field is falsy)
        if not uploaded_file:
            return "Please upload an image."
        # Read the image from the file
        image = Image.open(uploaded_file.stream).convert("RGB")
        # Convert the original image to base64 so the result page can show it
        original_image_base64 = image_to_base64(image)
    else:
        return "Invalid input type"

    # Inference
    depth = pipe(image)["depth"]
    # Convert the depth image to base64
    depth_base64 = image_to_base64(depth)
    # Display the original image alongside its depth map
    return render_template('result.html', input_type=input_type, image_url=url,
                           original_image_base64=original_image_base64,
                           depth_base64=depth_base64)


def image_to_base64(image):
    # Encode a PIL image as a base64 PNG string for embedding in HTML
    buffered = BytesIO()
    image.save(buffered, format="PNG")
    return base64.b64encode(buffered.getvalue()).decode('utf-8')


if __name__ == "__main__":
    app.run(debug=True)
```

This is where we embed the pipeline code we used earlier from Hugging Face transformers. I've added comments throughout for clarity in case you are new to Flask. Flask makes it quick to develop web apps on a server. We have two routes: one for the index page, which is the landing page, and another for the page where we will see our results.
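The app's `image_to_base64` helper produces the string that result.html wraps in a `data:image/png;base64,...` URI. The round trip below is a small check of that encoding in isolation. `image_to_data_uri` and `data_uri_to_image` are hypothetical names used for illustration; they are not part of the app.

```python
import base64
from io import BytesIO

from PIL import Image


def image_to_data_uri(image):
    """Encode a PIL image as a PNG data URI, as the result template does."""
    buffered = BytesIO()
    image.save(buffered, format="PNG")
    encoded = base64.b64encode(buffered.getvalue()).decode("utf-8")
    return f"data:image/png;base64,{encoded}"


def data_uri_to_image(uri):
    """Decode a base64 PNG data URI back into a PIL image."""
    header, encoded = uri.split(",", 1)
    if not header.startswith("data:image/png;base64"):
        raise ValueError("expected a base64-encoded PNG data URI")
    return Image.open(BytesIO(base64.b64decode(encoded)))
```

Pasting the URI into a browser's address bar is a quick way to confirm that the depth image encodes correctly.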

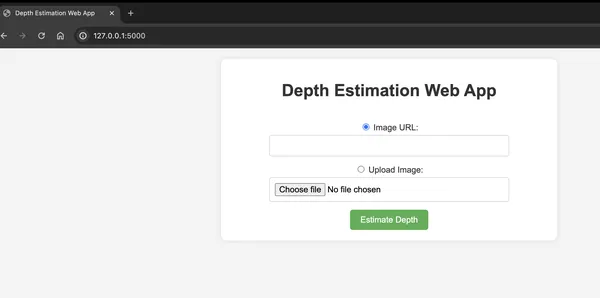

Step 2: Home Page

We need two pages for our app, both HTML pages. Create a directory called 'templates' (the folder Flask's render_template looks in by default) and add a file called 'index.html' to it. This is the landing page users see first. Here they decide whether to upload an image from their computer or device or to use an image URL. We have added some styling to make it more visual. Here is our index.html.

<!DOCTYPE html>

<html lang="en">

<head> <meta charset="UTF-8"> <meta title="viewport" content material="width=device-width, initial-scale=1.0"> <title>Depth Estimation Internet App</title> <fashion> physique { font-family: 'Arial', sans-serif; text-align: heart; margin: 20px; background-color: #f4f4f4; } h1 { coloration: #333; } .container { show: flex; flex-direction: column; align-items: heart; max-width: 600px; margin: Zero auto; background-color: white; padding: 20px; border-radius: 10px; box-shadow: Zero 0 10px rgba(0, 0, 0, 0.1); } type { margin-top: 20px; } label { font-size: 16px; coloration: #333; margin-bottom: 5px; show: block; } enter[type="text"], enter[type="file"] { width: calc(100% - 22px); padding: 10px; font-size: 16px; margin-bottom: 15px; box-sizing: border-box; border: 1px strong #ccc; border-radius: 5px; show: inline-block; } button { padding: 10px 20px; background-color: #4CAF50; coloration: white; border: none; border-radius: 5px; cursor: pointer; font-size: 16px; transition: background-color 0.3s; } button:hover { background-color: #45a049; } </fashion>

</head>

<physique> <div class="container"> <h1>Depth Estimation Internet App</h1> <type motion="/estimate_depth" methodology="publish" enctype="multipart/form-data"> <label> <enter kind="radio" title="input_type" worth="url" checked> Picture URL: </label> <enter kind="textual content" id="image_url" title="image_url"> <label> <enter kind="radio" title="input_type" worth="add"> Add Picture: </label> <enter kind="file" id="file_upload" title="file_upload" settle for="picture/*"> <button kind="submit">Estimate Depth</button> </type> </div>

</physique>

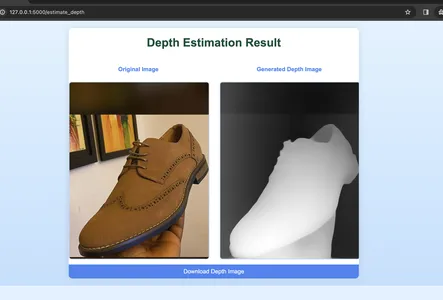

</html>Step 3: Outcomes

When users submit their image, they should be taken to a new page that shows their predicted depth. We have added styling and tags to hold the image output. We will call this page result.html. Add this file to the templates directory.

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Depth Estimation Result</title>
    <style>
        body {
            font-family: 'Arial', sans-serif; text-align: center; margin: 0;
            background: linear-gradient(180deg, #e0f3ff, #c1e0ff); /* Gradient background */
        }
        h1 { color: #0e5039; margin-bottom: 10px; }
        .container {
            display: flex; flex-direction: column; align-items: center;
            max-width: 800px; margin: 20px auto;
            background: #ffffff; /* White background */
            border-radius: 10px;
            box-shadow: 0 0 20px rgba(0, 0, 0, 0.1); /* Subtle shadow effect */
            overflow: hidden;
        }
        p { font-size: 16px; color: #333; margin-bottom: 5px; }
        .image-container { display: flex; justify-content: space-between; margin-top: 20px; overflow: hidden; }
        .image-container div { width: 48%; }
        img {
            max-width: 100%; height: auto; margin-top: 20px;
            border: 2px solid #bdd7ff; /* Light border color */
            border-radius: 8px;
            background-color: #f0f7ff; /* Light blue background for images */
            transition: transform 0.3s ease-in-out;
        }
        img:hover { transform: scale(1.05); /* Enlarge image on hover */ }
        .download-button {
            text-decoration: none; padding: 10px;
            background-color: #4285f4; /* Google Blue */
            color: #ffffff; border-radius: 5px; cursor: pointer;
            transition: background-color 0.3s ease; margin-top: 10px;
            display: block; /* Make the button take the full width */
            width: 100%; /* Set button width to 100% */
        }
        .download-button:hover { background-color: #357ae8; /* Darker shade on hover */ }
    </style>
</head>
<body>
    <div class="container">
        <h1>Depth Estimation Result</h1>
        <div class="image-container">
            <div>
                <p style="font-weight: bold; color: #4285f4;">Original Image</p>
                {% if input_type == 'url' %}
                    <img src="{{ image_url }}" alt="Original Image">
                {% elif input_type == 'upload' %}
                    <img src="data:image/png;base64,{{ original_image_base64 }}" alt="Original Image">
                {% endif %}
            </div>
            <div>
                <p style="font-weight: bold; color: #4285f4;">Generated Depth Image</p>
                <img src="data:image/png;base64,{{ depth_base64 }}" alt="Depth Image Preview">
            </div>
        </div>
        <!-- Add the download button below the images -->
        <a class="download-button" href="data:image/png;base64,{{ depth_base64 }}" download="depth_image.png">
            Download Depth Image
        </a>
    </div>
</body>
</html>
```

With this, you are ready to start your app. Open the terminal, go to the project directory, and run 'python app.py'. This will start your app. Go to http://127.0.0.1:5000/ in your browser and you will see the landing page.

Select an image from your device or enter an image URL, then click the 'Estimate Depth' button. This should take you to the results page.

This is how you can use Depth Anything in your own project. Beyond the pipeline API, the model also supports manual usage, which lets you adapt it to your specific needs. For further customization, see DepthAnythingConfig; the DepthAnythingForDepthEstimation class encapsulates the model's depth estimation capabilities. You can use the Hugging Face documentation to learn more; the links are available at the bottom of this article. Developers can integrate Depth Anything into their projects for monocular depth tasks with little effort.

Using Docker for the Web App

Docker is a platform for automating deployment inside containers. Here's why we want to use Docker for this Flask app:

- Isolation: Docker encapsulates an application and its dependencies, ensuring it runs consistently across different environments. This avoids the "it works on my machine" problem.

- Environment Reproducibility: You can define your app's environment in a Dockerfile, which specifies dependencies such as libraries and configurations. This ensures every instance runs in the same environment, reducing compatibility issues.

- Scalability: Docker makes it easy to scale your application horizontally by running containers in parallel. This is useful for web applications with varying loads.

- Easy Deployment: Docker lets you package your application and its dependencies into a container, which simplifies deployment and minimizes the risk of errors.

By containerizing the Flask app with Docker, you create a consistent and reproducible environment, making it easier to manage dependencies, deploy the application, and scale it.

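Go to your app's root directory and create a file named "Dockerfile". Note that it has no file extension. Paste the configuration below. The Dockerfile expects a requirements.txt file in the same directory that lists the app's dependencies. At minimum, that means the packages app.py imports (flask, transformers, pillow, requests) plus a deep learning backend such as torch.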

```dockerfile
# Use an official Python runtime as a parent image
FROM python:3.11-slim

# Set the working directory to /app
WORKDIR /app

# Copy the current directory contents into /app
COPY . /app

# Install the packages listed in requirements.txt
RUN pip install --no-cache-dir -r requirements.txt

# Make port 5000 available to the world outside this container
EXPOSE 5000

# Define environment variable
ENV FLASK_APP=app.py

# Run app.py when the container launches
CMD ["flask", "run", "--host=0.0.0.0"]
```

Also, just as a .gitignore file keeps files out of Git, we don't want Docker to copy some files, such as Git metadata, into the image. Create a .dockerignore file, again in the app's root directory, and include the following.

```
__pycache__
.git
*.pyc
*.pyo
*.pyd
venv
```

After creating the files above, go to the terminal and do the following:

- Build the Docker image: `docker build -t depth-estimation-app .`

- Run the Docker container: `docker run -p 5000:5000 depth-estimation-app`

Make sure to run these commands in the directory where your Flask app and the Dockerfile are located. The build command creates an image called depth-estimation-app from all the files in the current directory, hence the full stop at the end of the line. Finally, the run command starts a container from that image and maps port 5000 on your machine to port 5000 inside the container.

Real-Life Use Cases for Depth Anything

- Autonomous Vehicles: Depth estimation helps vehicles understand their surroundings, aiding navigation and obstacle avoidance.

- Augmented Reality (AR): AR can use depth estimation to seamlessly integrate virtual objects into the real-world environment, providing users with immersive and interactive experiences.

- Medical Imaging: Depth estimation is used in medical imaging to assist with tasks such as surgical planning, organ segmentation, and 3D reconstruction from 2D medical images.

- Surveillance and Security: Surveillance systems can leverage depth estimation for object detection and tracking, improving the accuracy of security monitoring.

- Robotics: Robots can use depth estimation to perceive their surroundings, which enables them to navigate and interact with objects in their environments.

- Photography and Image Editing: Depth information extends what image editing tools can do, letting users apply effects selectively based on how far objects are from the camera.
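As an illustration of the photography use case, the sketch below uses a depth map to blur the background while keeping the foreground sharp, a simple "portrait mode." It assumes the depth map is a grayscale PIL image such as the pipeline's `depth` output, where brighter pixels are closer; this holds for Depth Anything's relative inverse-depth output, but treat it as an assumption for other models. The function name, threshold, and blur radius are illustrative choices.

```python
import numpy as np
from PIL import Image, ImageFilter


def blur_background(image, depth, threshold=0.5, radius=6):
    """Keep 'near' pixels sharp and blur the rest (assumes brighter depth = closer)."""
    depth = depth.convert("L").resize(image.size)
    near = np.array(depth) >= threshold * 255  # threshold is a fraction of 0-255
    mask = Image.fromarray((near * 255).astype(np.uint8))  # 255 where near
    blurred = image.filter(ImageFilter.GaussianBlur(radius))
    # Image.composite takes pixels from the first image where the mask is 255.
    return Image.composite(image, blurred, mask)
```

With the Flask app's pipeline, usage could look like `blur_background(image, pipe(image)["depth"]).save("portrait.png")`. A hard threshold gives a sharp cut-off; feathering the mask (for example by blurring it) would soften the transition.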

Conclusion

Depth Anything represents a leap forward in Monocular Depth Estimation, addressing challenges related to data coverage in zero-shot scenarios. Its open-source release to the broader AI community demonstrates a commitment to advancing the field and fostering collaboration. As we explore the capabilities of Depth Anything further, its potential impact on industries that rely on depth perception becomes increasingly clear.

Key Takeaways

- TikTok's "Depth Anything" is a Monocular Depth Estimation (MDE) model that leverages a combination of 1.5 million labeled and 62 million unlabeled images.

- The model's success relied on unlabeled datasets and an unconventional optimization approach: color jittering, distortions, Gaussian blurring, and spatial distortion push the model toward invariant representations.

- Depth Anything delivers strong results when fine-tuned and benchmarked on datasets such as NYUv2 and KITTI, which showcases its reliability in diverse scenarios.

- Depth Anything is convenient to use through a pipeline API that abstracts away complexity.

- The model has practical applications in fields such as self-driving cars, augmented reality, medical imaging, surveillance and security, robotics, and photography/image editing.

Frequently Asked Questions

A. Depth estimation is the process of determining the distance of objects in a scene from a camera. It is crucial in computer vision because it provides valuable 3D information that enables applications such as object recognition, scene understanding, and spatial mapping.

A. The transformers library provides pre-trained models for natural language processing and computer vision tasks, including depth estimation. Using these models simplifies the implementation of sophisticated depth estimation algorithms and delivers high-quality results.

A. Yes, depth estimation has diverse real-world applications. It is used in fields such as autonomous vehicles, medical imaging, augmented reality, surveillance, robotics, and photography. The ability to perceive depth improves the performance of systems in these domains.

A. A Flask web server to handle requests, a user interface for interacting with the application, image processing logic for depth estimation, and styling elements for an appealing visual presentation.

A. Users can select their preferred input type in the web application. They can either enter an image URL or upload an image file, and the application adjusts its behavior based on the selected input type.

References

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.