On July 29th, 2024, Meta AI released Segment Anything 2 (SAM 2), a state-of-the-art image and video segmentation model.

With SAM 2, you can specify points on an image and generate segmentation masks for those points. You can also generate segmentation masks for all objects in an image.

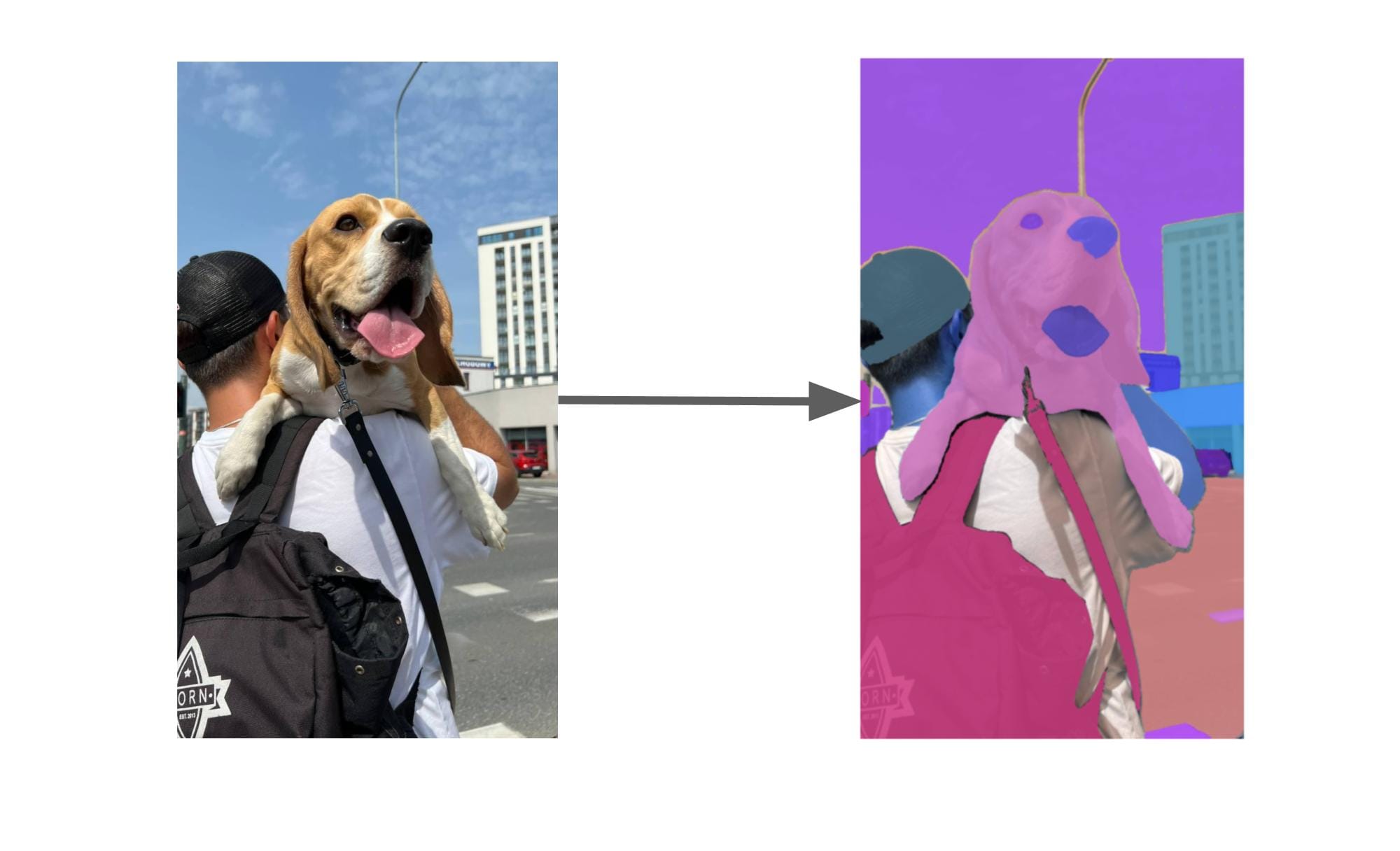

Here is an example of segmentation masks generated by SAM 2:

While SAM 2 has no understanding of what the objects are, you can combine the model with Florence-2, a multimodal model, to generate segmentation masks for regions in an image with text prompts.

For example, you could have a dataset of screws and provide the label “screw”. Florence-2 can identify all the regions that contain screws, then SAM 2 can generate segmentation masks for each individual screw.

In this guide, we are going to walk through how to label computer vision data using Grounded SAM 2, a combination of SAM 2 and Florence-2. This model uses the Autodistill framework, which lets you use large foundation models to auto-label data for use in training smaller, fine-tuned models (e.g. YOLOv8, YOLOv10).

Without further ado, let’s get started!

Step #1: Prepare a dataset

To get started, you will need a dataset of images. For this guide, we are going to label a dataset of shipping containers. You can download the dataset using the following command:

wget https://media.roboflow.com/containers.zip
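Once the archive is downloaded, it can help to unpack it and confirm that it actually contains images before moving on. The snippet below is a minimal sketch using only the Python standard library. The helper name, the output folder, and the list of image extensions are assumptions made for illustration, since this guide does not describe the layout of the archive.

```python
import zipfile
from pathlib import Path

# Image extensions to look for; an assumption, adjust to match your data.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def extract_and_list_images(zip_path, output_dir):
    """Unpack zip_path into output_dir and return the image files found, sorted."""
    output_dir = Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)

    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(output_dir)

    # Search recursively because the archive may nest images in a subfolder.
    return sorted(
        path
        for path in output_dir.rglob("*")
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )
```

For example, `extract_and_list_images("containers.zip", "containers")` would unpack the download into a `containers` folder and return the image paths it finds there.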

Our dataset contains pictures of a yard in which there are several shipping containers. Here is an example of an image:

We are going to use Grounded SAM 2, a combination of Florence-2 (the grounding model) and SAM 2 (a segmentation model), to generate segmentation masks for each shipping container in our dataset.

Step #2: Install Autodistill Grounded SAM 2

With a dataset ready, we can install Grounded SAM 2. This is a model available in the Autodistill ecosystem. Autodistill allows you to use large foundation models to auto-label data for use in training smaller, fine-tuned models.

Run the following command to install Autodistill Grounded SAM 2, the foundation model we will use in this guide:

pip install autodistill-grounded-sam-2

Once you have installed the model, you are ready to start testing which prompts perform best on your dataset.

Step #3: Test prompts

Using Grounded SAM 2, we can provide a text prompt and generate segmentation masks for all objects that match the prompt. Before we auto-label a full dataset, however, it is important to test prompts to ensure that we find one that accurately identifies objects of interest.

Let’s start by testing the prompt “shipping container”.

Create a new Python file and add the following code:

from autodistill_grounded_sam_2 import GroundedSAM2
from autodistill.detection import CaptionOntology
from autodistill.utils import plot
import cv2
import supervision as sv

# Map the text prompt (key) to the class name saved in the dataset (value).
base_model = GroundedSAM2(
    ontology=CaptionOntology(
        {
            "shipping container": "container"
        }
    )
)

# Run the model on a single image.
results = base_model.predict("image.png")

# Draw the returned segmentation masks on a copy of the image.
image = cv2.imread("image.png")
mask_annotator = sv.MaskAnnotator()
annotated_image = mask_annotator.annotate(
    image.copy(), detections=results
)

sv.plot_image(image=annotated_image, size=(8, 8))

Above, we import the required dependencies, then initialize an instance of the GroundedSAM2 model. We set an ontology where “shipping container” is our prompt and we save the results from the model with the class name “container”. To customize your prompt, update the “shipping container” text. To customize the label that will be saved to your final dataset, update “container”.

We then run inference on an image called “image.png”. We use the supervision Python package to show the segmentation masks returned by our model.

Let’s run the script on the following image:

Our script returns the following visualization:

The purple color shows where containers were identified. Our system has successfully identified both containers!

If your prompt doesn’t work for your data, update the “shipping container” prompt above and explore different prompts. It may take a few tries to find a prompt that works for your data.
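One way to compare prompts is to run several candidates on the same image and count how many masks each one returns. The sketch below assumes the `GroundedSAM2` and `CaptionOntology` classes used earlier in this guide; the helper names are hypothetical, and the count of detections is only a rough signal, so you should still inspect the masks visually. The model is reloaded for every prompt to keep the example simple, which is slow.

```python
def build_candidate_ontologies(prompts, label):
    """Return one {prompt: label} mapping per candidate prompt."""
    return [{prompt: label} for prompt in prompts]


def count_detections_per_prompt(image_path, prompts, label):
    """Run each candidate prompt on one image and count the detections returned.

    Requires autodistill-grounded-sam-2. The imports are done lazily so that
    build_candidate_ontologies can be used without loading the model.
    """
    from autodistill.detection import CaptionOntology
    from autodistill_grounded_sam_2 import GroundedSAM2

    counts = {}
    for ontology in build_candidate_ontologies(prompts, label):
        # A fresh model per prompt: simple, but slow for long prompt lists.
        model = GroundedSAM2(ontology=CaptionOntology(ontology))
        detections = model.predict(image_path)
        prompt = next(iter(ontology))
        counts[prompt] = len(detections)
    return counts
```

For instance, `count_detections_per_prompt("image.png", ["shipping container", "cargo container"], "container")` would return a dictionary mapping each prompt to its detection count. The second prompt here is only an illustrative alternative.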

Note that some objects will not be identifiable with Grounded SAM 2. Grounded SAM 2 works best on generic objects. For example, the model can identify containers, but will likely struggle to find the location of container IDs.

Step #4: Auto-label data

With a prompt that successfully identifies our objects, we can now label our full dataset. Create a new folder and add all the images you want to label to the folder.

We can do this using the following code:

base_model.label("containers", extension="jpg")

Above, replace “containers” with the name of the folder that holds the images you want to label. Replace “jpg” with the extension of the images in the folder.

When you are ready, run the code.

Your images will be labeled using Grounded SAM 2 and saved into a new folder. When the labeling process is done, you will have a dataset that you can import into Roboflow.
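Before uploading, a quick sanity check on the generated labels can catch images where the prompt found nothing. The sketch below assumes the labels are stored as YOLO-style `.txt` files (one object per line, with the class index as the first token). That format and the helper name are assumptions, because this guide does not describe the output folder's layout.

```python
from collections import Counter
from pathlib import Path


def summarize_yolo_labels(labels_dir):
    """Count annotations per class index and list label files with no annotations."""
    per_class = Counter()
    empty_files = []

    for label_file in sorted(Path(labels_dir).rglob("*.txt")):
        lines = [line for line in label_file.read_text().splitlines() if line.strip()]
        if not lines:
            # No objects were labeled in the matching image.
            empty_files.append(label_file.name)
            continue
        for line in lines:
            first_token = line.split()[0]
            # Skip lines that do not start with an integer class index.
            if not first_token.isdigit():
                continue
            per_class[int(first_token)] += 1

    return per_class, empty_files
```

Images that appear in the list of empty files are good candidates for manual review in the next step.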

Step #5: Train a model

With a labeled dataset ready, the next step is to inspect the quality of the labels and train your model. Roboflow has utilities for both of these steps. With Roboflow, you can review and amend annotations, then use your dataset to train a model.

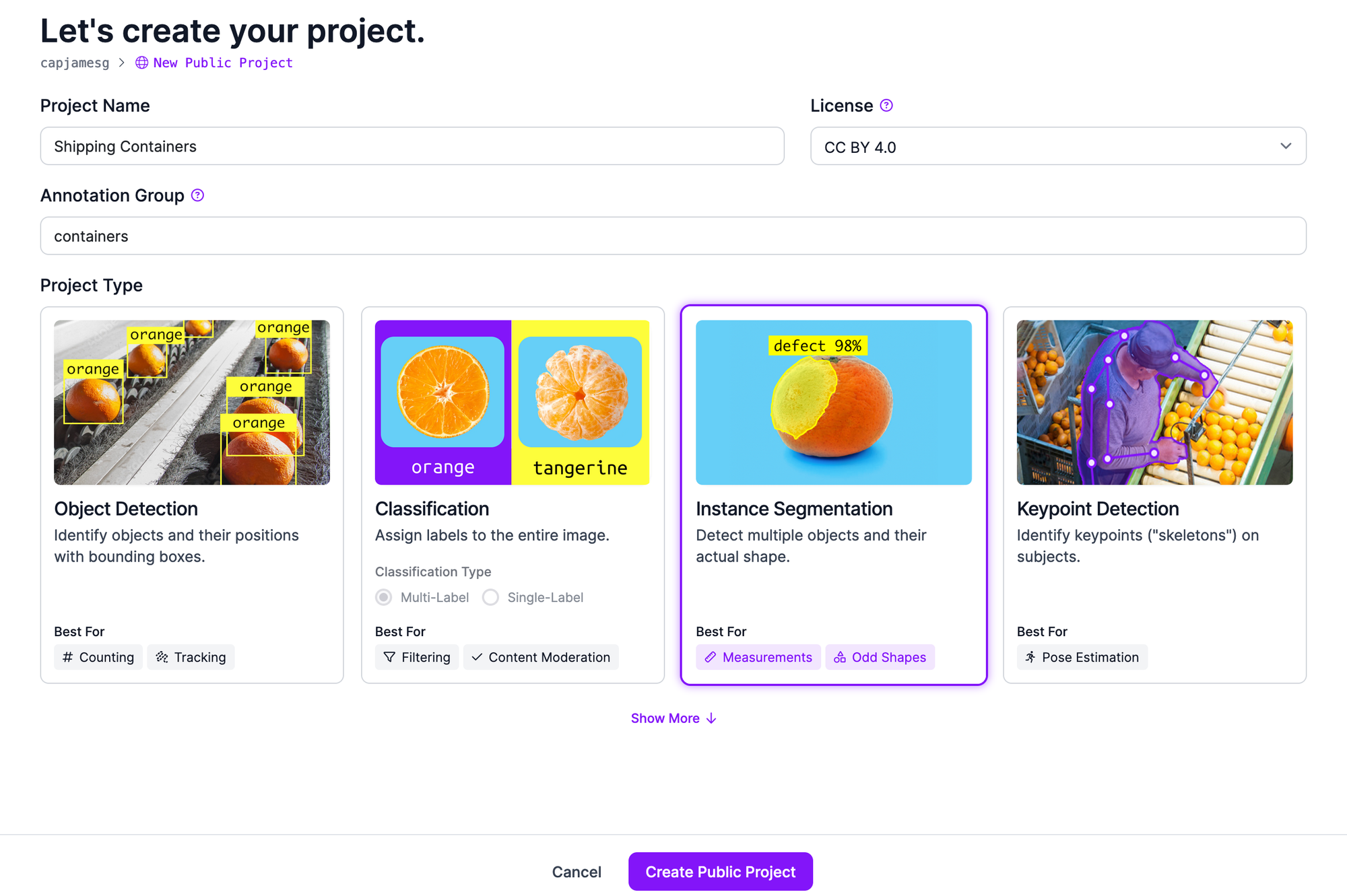

To inspect your dataset and train a model, first create a free Roboflow account. Then, click “Create Project” on your Roboflow dashboard. Set a name for your project, then select “Instance Segmentation” as your project type.

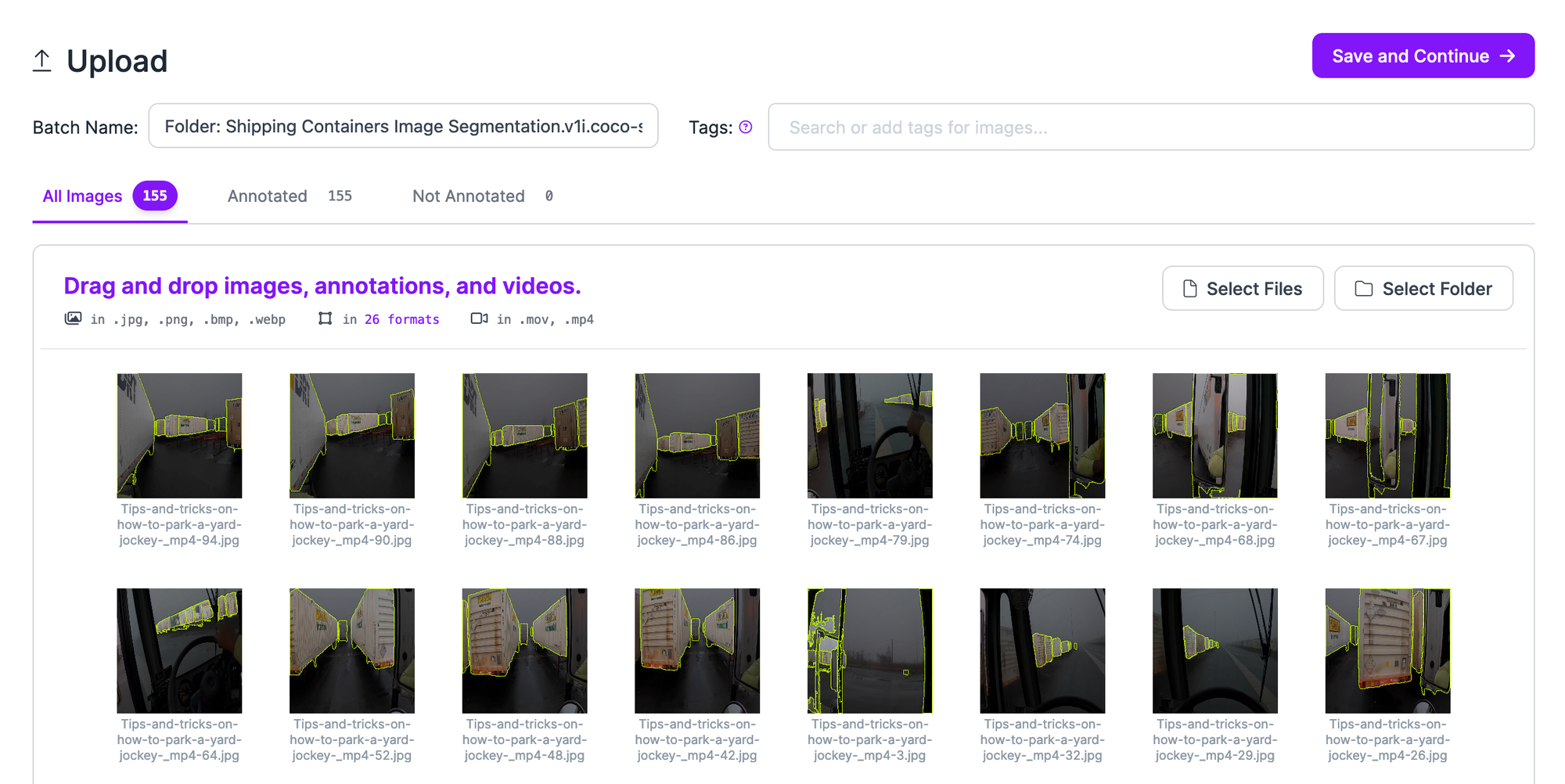

You will be taken to a page where you can upload your images for review. Drag and drop your folder of labeled images generated by Grounded SAM 2 into the page. Your images will be processed in the browser. When the images are ready for upload, click “Save and Continue”.

You can now review your images and make changes to your annotations. Click on the dataset batch that you uploaded, then select an image to inspect and modify the annotations.

You can add, adjust, and remove polygons. To learn more about annotating with Roboflow and the features available, refer to the Roboflow Annotate documentation.

A quick way to add a new polygon is to use the Segment Anything-powered labeling tool. This tool allows you to click on a region of an image and assign a polygon using Segment Anything.

To enable this feature, click on the magic wand feature in the right task bar, then click “Enhanced Smart Polygon”. This will enable the Segment Anything-powered Smart Polygon annotation tool. With this tool, you can hover over part of an image to see what mask will be created, then click to create a mask.

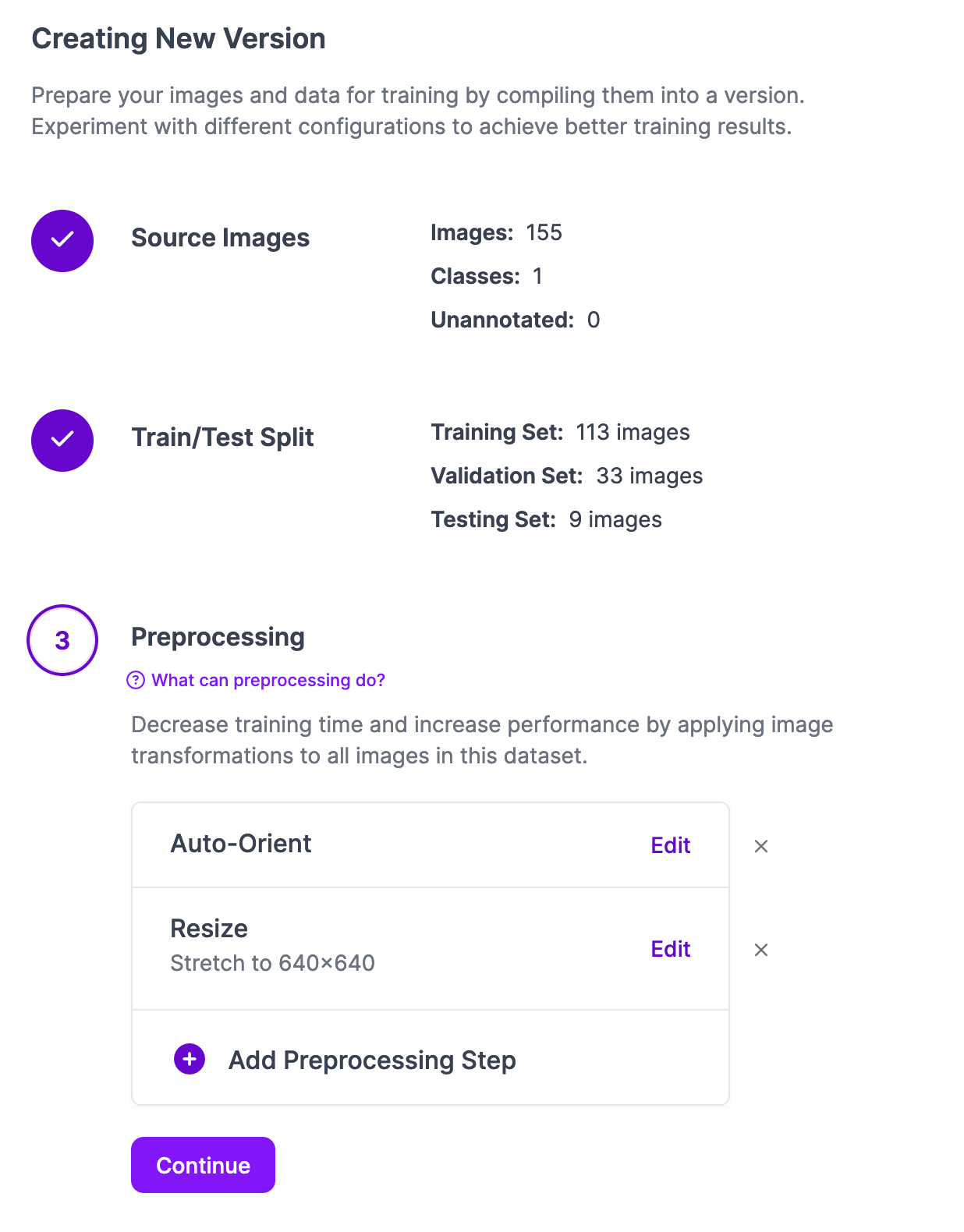

Once you have labeled your images, you can generate a dataset version from the “Generate” tab in Roboflow. On this tab, you can apply augmentations and preprocessing steps to your dataset. Read our guide to preprocessing and augmentation to learn how these steps can help improve model performance.
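To make the idea of an augmentation concrete for segmentation data: when an image is transformed, its polygon labels have to be transformed in the same way. Roboflow handles this for you. The snippet below is only an illustrative sketch of a horizontal flip applied to a polygon, assuming points are stored as (x, y) pairs normalized to the range 0 to 1. It does not describe how Roboflow implements augmentations.

```python
def flip_polygon_horizontally(polygon):
    """Mirror a polygon left-to-right.

    polygon is a list of (x, y) pairs normalized to [0, 1], so a horizontal
    flip maps x to 1 - x and leaves y unchanged.
    """
    return [(1.0 - x, y) for x, y in polygon]
```

Flipping the same polygon twice returns the original points, which is a handy check that labels and images stay aligned.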

💡 Note: If your dataset has zero images in the Testing Set, click on the Train/Test Split and rebalance your dataset to ensure there are images in the test set. You will need images in the test set to train your model.
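Roboflow rebalances the split for you in the interface. To illustrate what a rebalanced split looks like, here is a minimal standard-library sketch that shuffles a list of filenames and divides it into train, valid, and test sets. The 70/20/10 ratios, the fixed seed, and the function name are assumptions chosen for the example.

```python
import random


def split_dataset(filenames, train=0.7, valid=0.2, seed=0):
    """Shuffle filenames and split them into train/valid/test lists.

    Whatever is left after the train and valid portions goes to test.
    """
    if not 0 < train + valid < 1:
        raise ValueError("train + valid must leave room for a test split")

    shuffled = sorted(filenames)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)

    n_train = int(len(shuffled) * train)
    n_valid = int(len(shuffled) * valid)
    return {
        "train": shuffled[:n_train],
        "valid": shuffled[n_train:n_train + n_valid],
        "test": shuffled[n_train + n_valid:],
    }
```

With very small datasets the train and valid counts round down, so the test set receives the remainder and will not be empty.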

When you are ready, click “Create” on the dataset generation page. This will create a version of your dataset with any selected preprocessing and augmentation steps. You will be taken to your dataset page where you can train a model.

Click the “Train with Roboflow” button to train your model. You will be able to customize the training job. For your first training job, choose all the “Recommended” options: fast training, and train from the COCO checkpoint.

When training starts, you will be able to monitor training progress in the Roboflow dashboard.

Next Steps

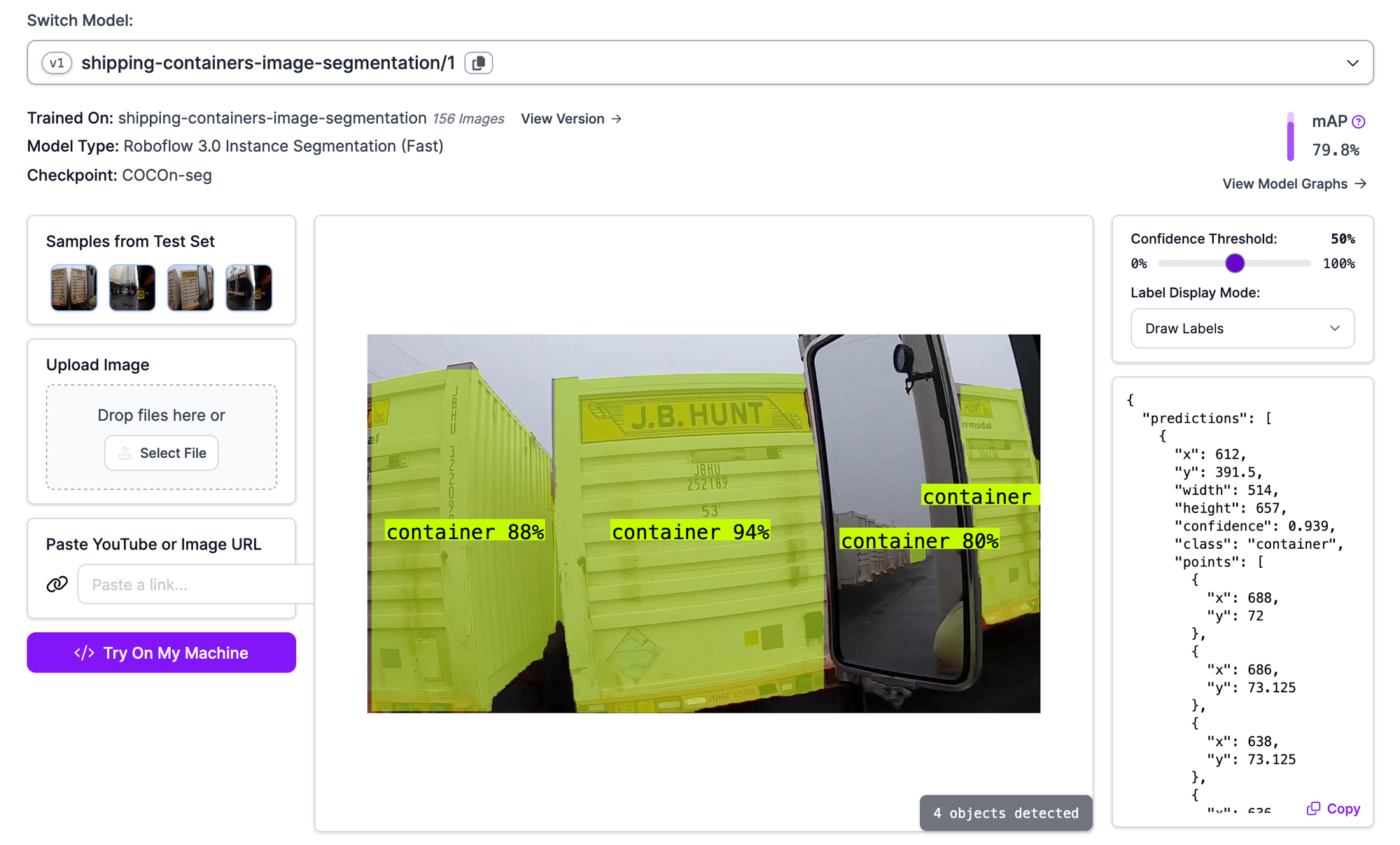

Once you have a trained model, you can preview it from the Visualize tab in the Roboflow dashboard. Here is an example of our container detection model running:

Our model successfully identifies the location of containers in our dataset.

With a trained model, the next step is to deploy the model. You can deploy the model to the cloud using the Roboflow API, or on your own hardware. If you choose to deploy the model on your own hardware, you can run your deployment with Roboflow Inference. Inference is our high-performance, self-hosted computer vision inference server.

You can deploy Inference on NVIDIA Jetson devices, Raspberry Pis, your own cloud GPU server, an AI PC, and more.

To learn more about deploying segmentation models with Inference, refer to the Inference documentation.
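As a rough sketch of what running a trained model with Inference can look like in Python, the snippet below loads a model by ID, runs it on one image, and draws the returned masks with supervision. The project ID, version number, and helper names are hypothetical placeholders, and you should check the Inference documentation for the options that apply to your deployment.

```python
def build_model_id(project_id, version):
    """Combine a Roboflow project ID and version number into a model ID."""
    return f"{project_id}/{version}"


def run_local_inference(image_path, project_id, version, api_key):
    """Run a trained Roboflow model on one image and annotate its masks.

    Requires the inference, supervision, and opencv-python packages, which
    are imported lazily so build_model_id works without them installed.
    """
    import cv2
    import supervision as sv
    from inference import get_model

    model = get_model(model_id=build_model_id(project_id, version), api_key=api_key)

    image = cv2.imread(image_path)
    # infer returns a list of results; take the result for our single image.
    result = model.infer(image)[0]
    detections = sv.Detections.from_inference(result)

    annotated = sv.MaskAnnotator().annotate(scene=image.copy(), detections=detections)
    return detections, annotated
```

A call such as `run_local_inference("image.png", "containers", 1, api_key="YOUR_API_KEY")` would return the detections and an annotated copy of the image. Both the project ID and the key shown here are placeholders.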