Introduction to YOLOv11

Launched on September 27, 2024, YOLOv11 (referred to by the model author Ultralytics as YOLO11) is a computer vision model that you can use for a wide variety of tasks, from object detection to segmentation to classification.

According to Ultralytics, “YOLO11m achieves a higher mean Average Precision (mAP) score on the COCO dataset while using 22% fewer parameters than YOLOv8m.” With fewer parameters, the model can run faster, which makes it more attractive for use in real-time computer vision applications.

In this guide, we are going to walk through how to train a YOLOv11 object detection model with a custom dataset. We will:

- Create a custom dataset with labeled images

- Export the dataset for use in model training

- Train the model using a Colab training notebook

- Run inference with the model

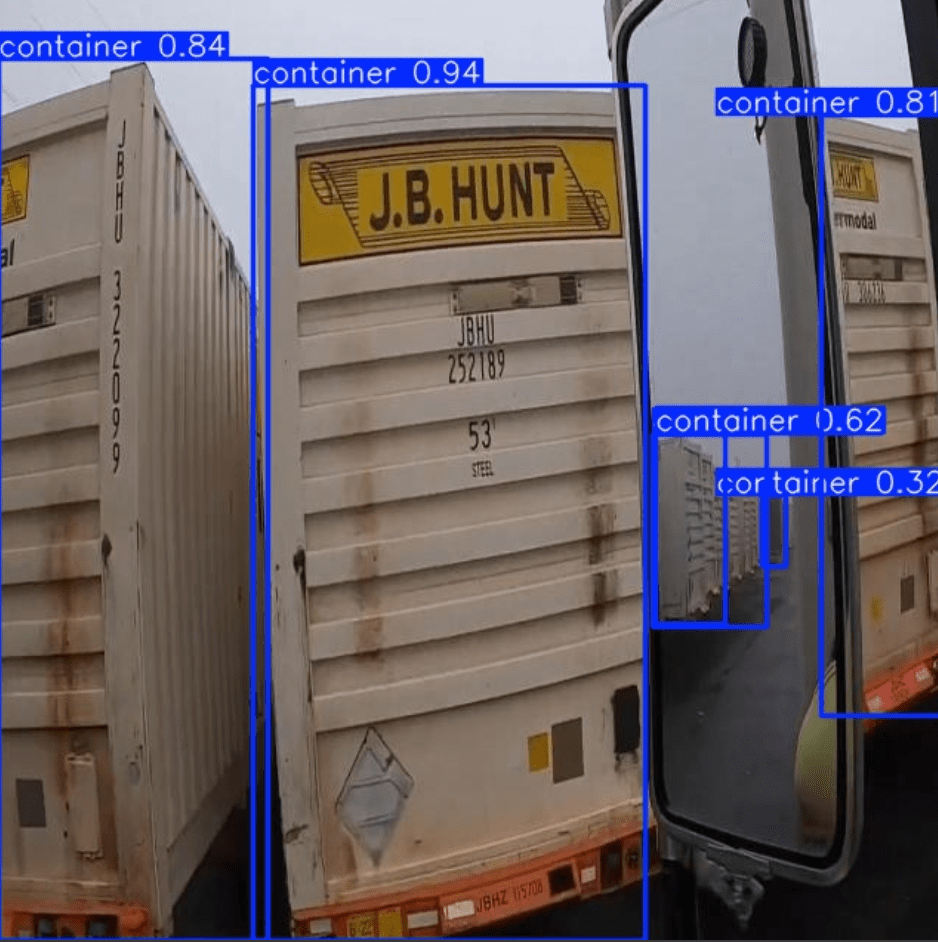

Here is an example of predictions from a model trained to identify shipping containers:

We have a YOLOv11 Colab notebook for you to use as you follow this tutorial.

Without further ado, let’s get started!

Step #1: Create a Roboflow Project

To get started, we need to prepare a labeled dataset in the format required by YOLOv11. We can do so with Roboflow.

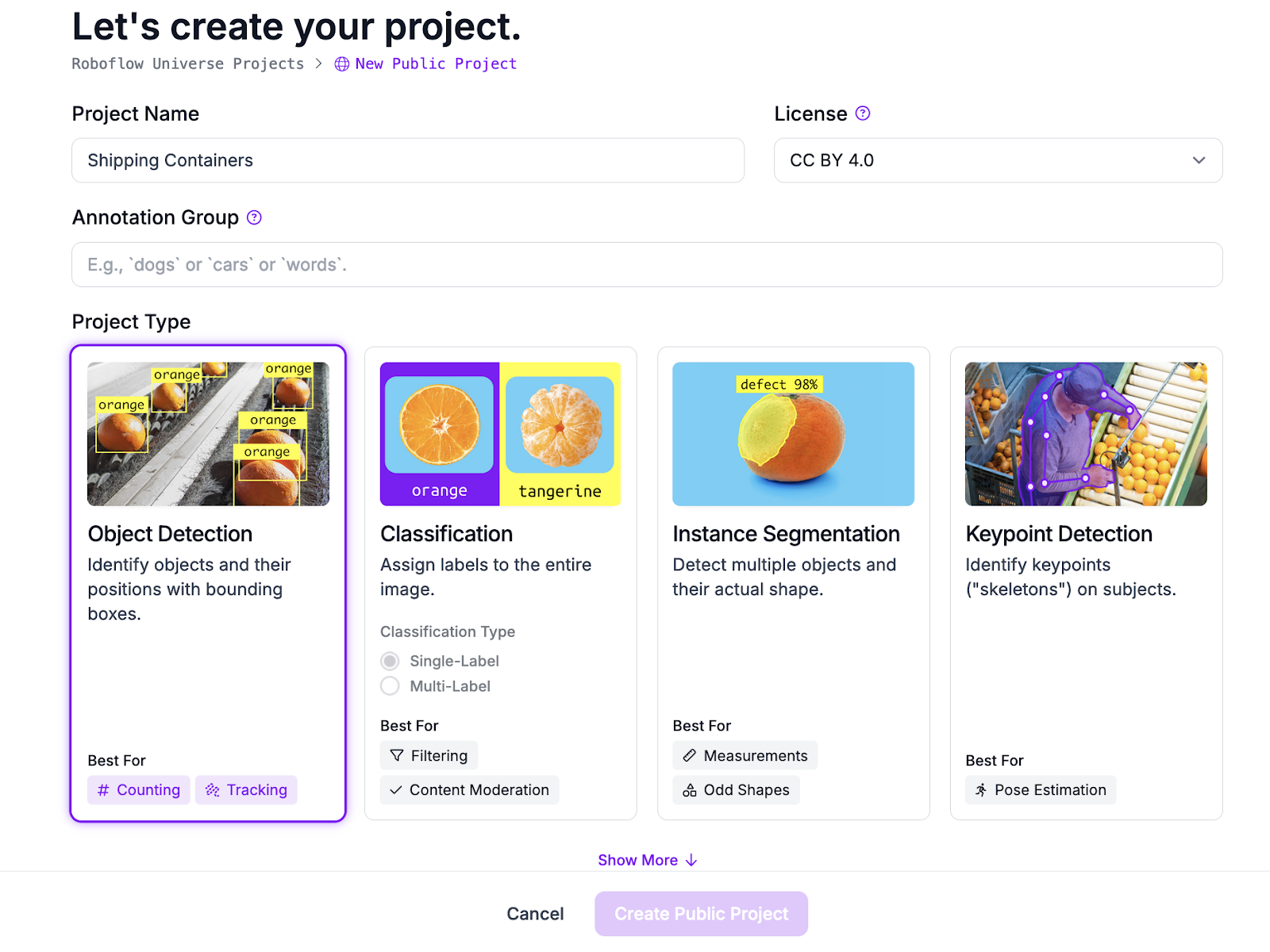

Create a free Roboflow account. Once you have created an account, click “Create New Project” on the Roboflow dashboard. You will be taken to a page where you can configure your project.

Set a name for your project. Choose the “Object Detection” dataset type:

Then, click “Create Project” to create your project.

Step #2: Upload and Annotate Images

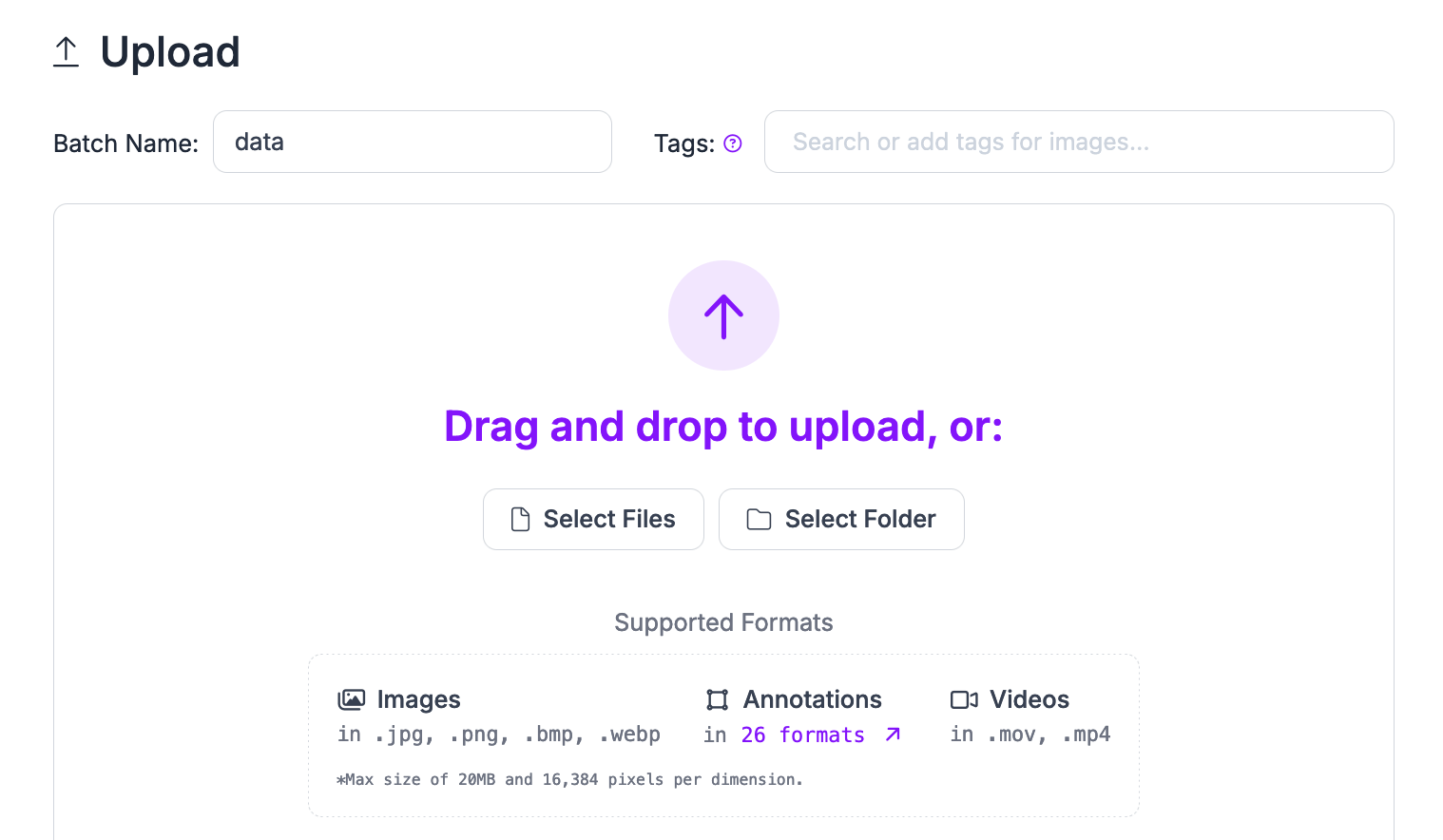

Next, you need to add data for use in your project. You can add labeled data to review or convert to the YOLO PyTorch TXT format, and/or raw images to annotate in your project.
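For readers who have not seen the YOLO TXT label format before, here is a minimal sketch of how one label line can be read. It assumes the common layout of one object per line, written as `class x_center y_center width height` with coordinates normalized to the 0-1 range. The `PixelBox` type and `parse_yolo_label_line` function are hypothetical names used for illustration and are not part of Roboflow or Ultralytics.

```python
from typing import NamedTuple


class PixelBox(NamedTuple):
    """A bounding box in pixel coordinates (hypothetical helper type)."""
    class_id: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_yolo_label_line(line: str, image_width: int, image_height: int) -> PixelBox:
    """Convert one 'class x_center y_center width height' line to pixel corners."""
    class_id, x_center, y_center, width, height = line.split()

    # The four box values are normalized, so scale them by the image size.
    box_width = float(width) * image_width
    box_height = float(height) * image_height
    center_x = float(x_center) * image_width
    center_y = float(y_center) * image_height

    return PixelBox(
        class_id=int(class_id),
        x_min=center_x - box_width / 2,
        y_min=center_y - box_height / 2,
        x_max=center_x + box_width / 2,
        y_max=center_y + box_height / 2,
    )


# Example: a box centered in a 640x480 image.
box = parse_yolo_label_line("0 0.5 0.5 0.25 0.5", image_width=640, image_height=480)
print(box)
```

Because the values are normalized, the same label file stays valid if the image is resized without changing its aspect ratio.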

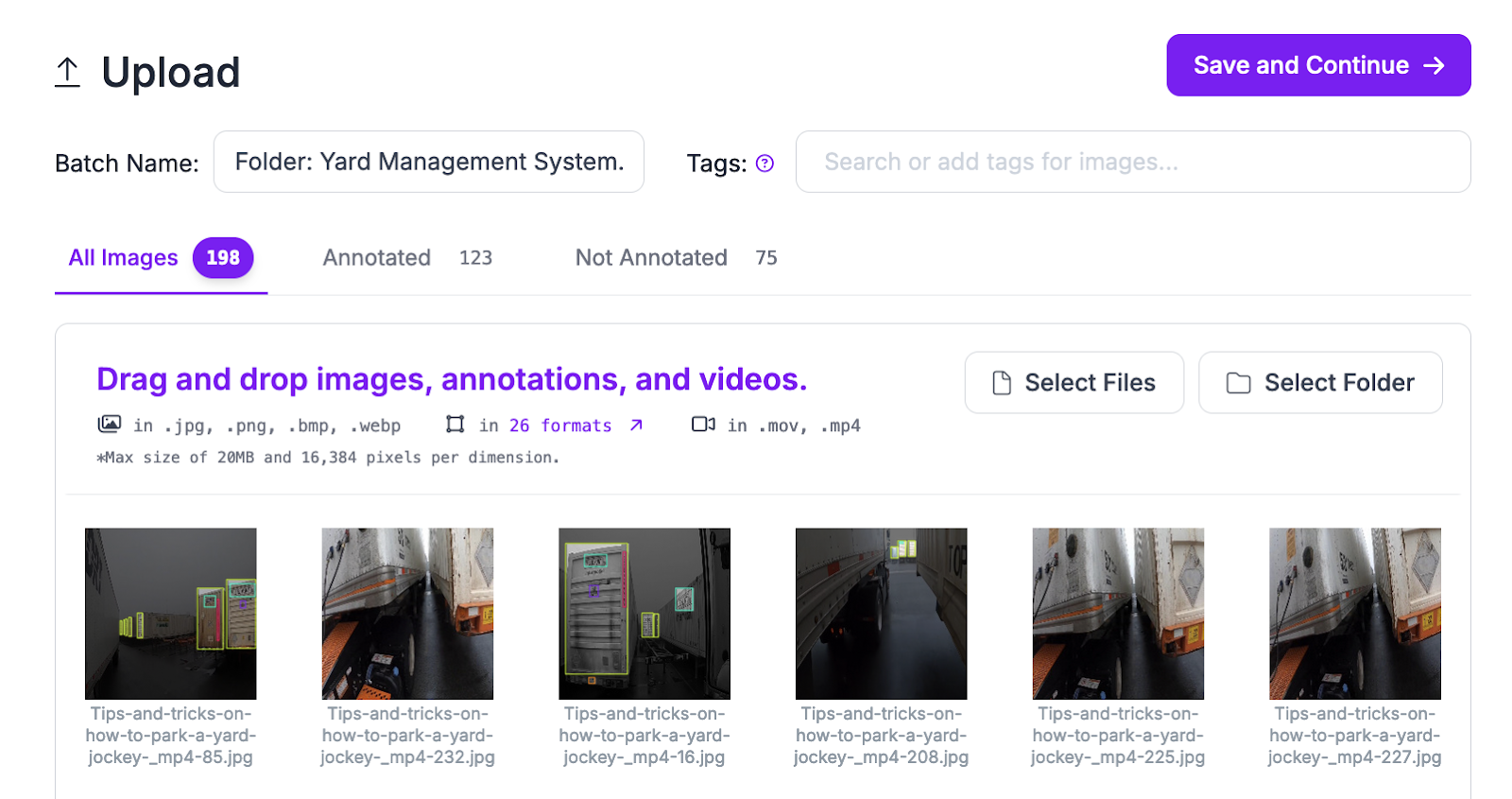

In this guide, we will train a model that detects shipping containers. We have an open shipping container dataset on Roboflow Universe that you can use. Or, you can use your own data.

Drag and drop your raw or annotated images into the Upload box:

When you drag in data, it will be processed in your browser. Click “Save and Continue” to upload your data to the Roboflow platform.

With your data in Roboflow, you can annotate images.

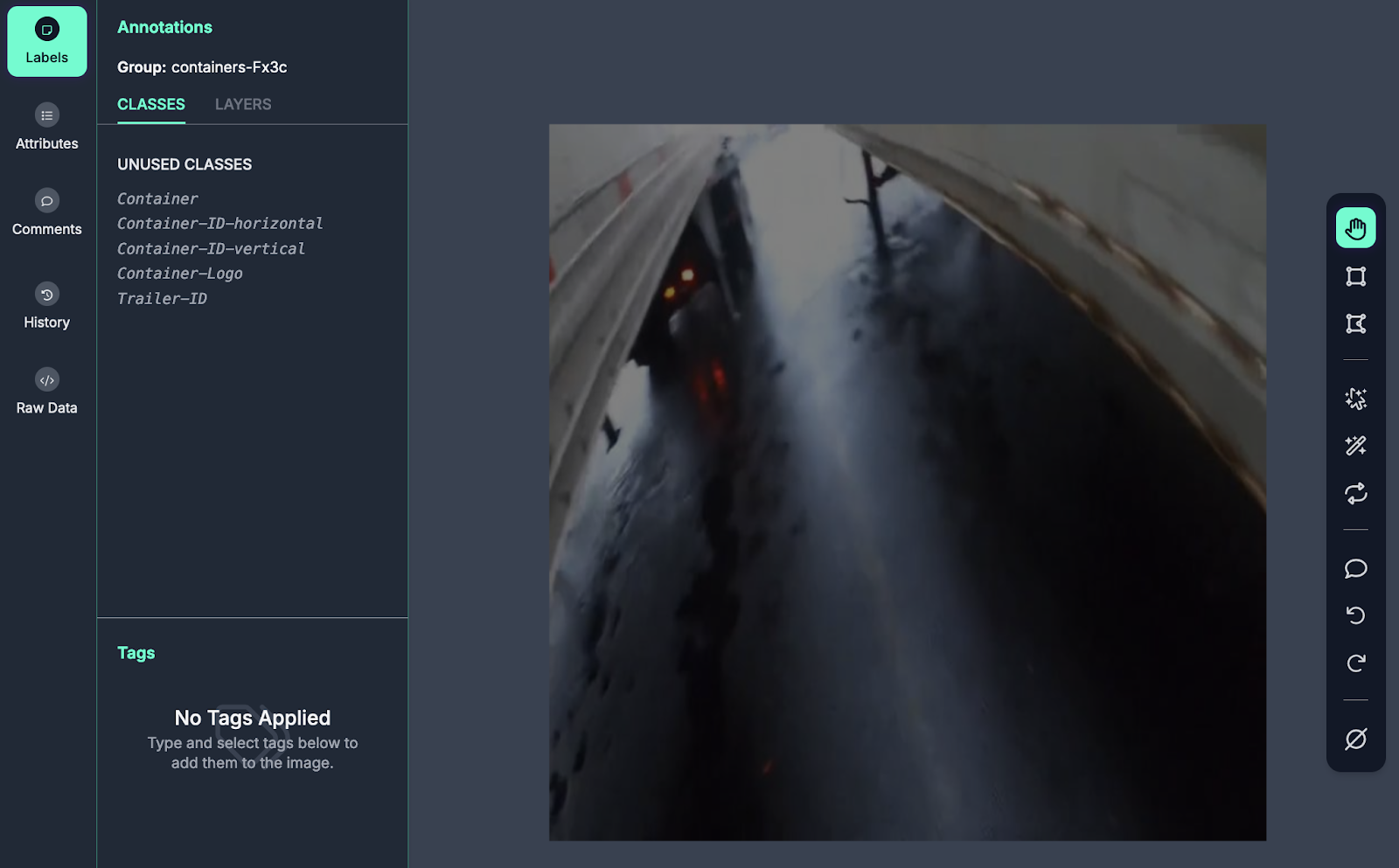

To annotate an image, click “Annotate” in the left sidebar. Click on an image to start annotating. You will be taken to the Roboflow Annotate interface, in which you can label your data:

To draw bounding boxes, choose the bounding box labeling tool in the right sidebar.

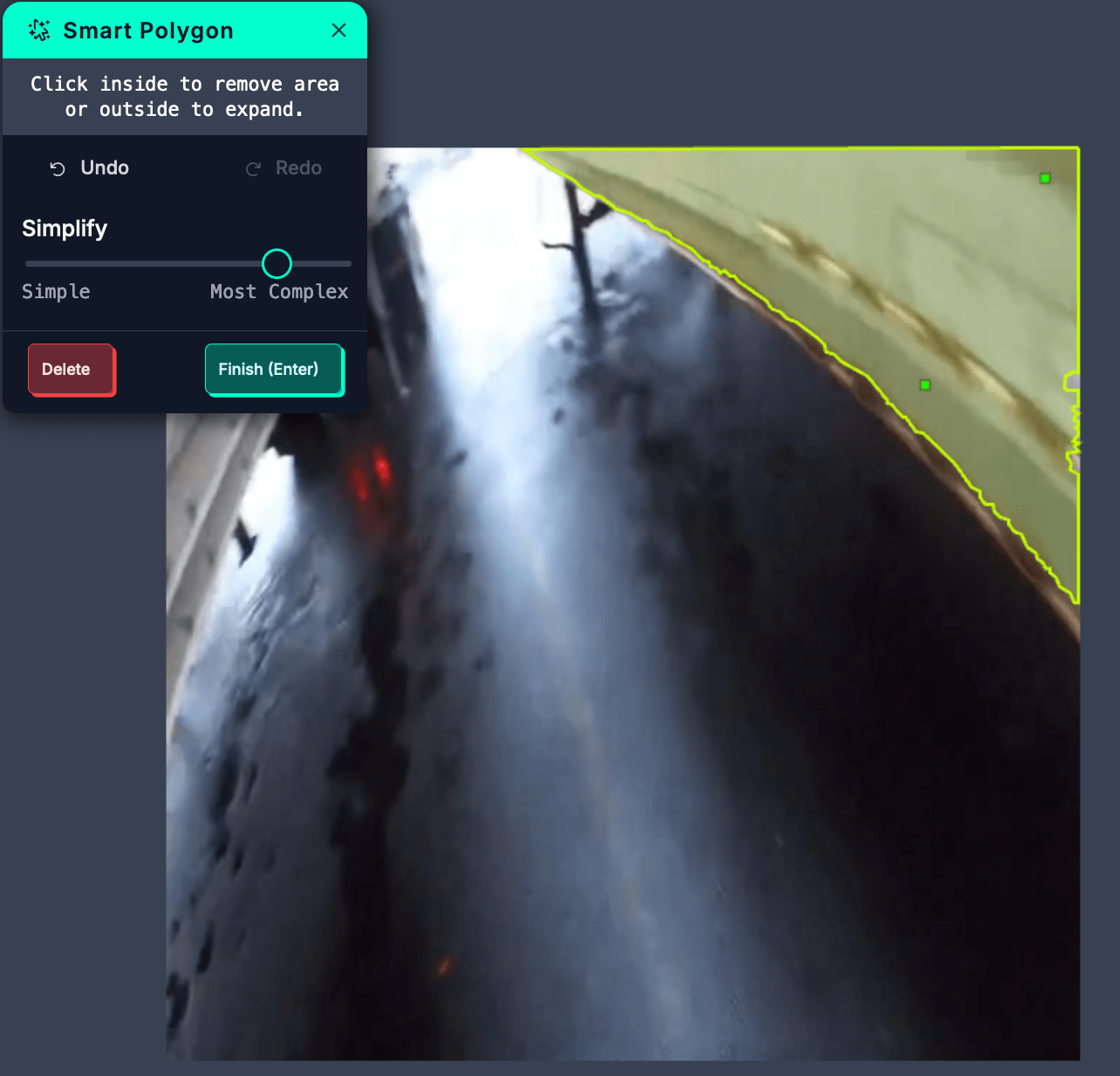

With that said, we recommend using the Roboflow Smart Polygon tool to speed up the labeling process. Smart Polygon lets you use the SAM-2 foundation model to label objects with a single click. Smart Polygons are automatically converted to an object detection format when your data is exported.
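To make the polygon-to-box idea concrete, here is a minimal sketch of one way a polygon can be reduced to a bounding box: take the smallest axis-aligned rectangle that encloses all polygon points, then normalize it by the image size. This is an illustration under that assumption, not Roboflow's actual export code, and `polygon_to_yolo_box` is a hypothetical helper name.

```python
from typing import List, Tuple


def polygon_to_yolo_box(
    points: List[Tuple[float, float]],
    image_width: int,
    image_height: int,
) -> Tuple[float, float, float, float]:
    """Return (x_center, y_center, width, height), normalized to 0-1.

    The box is the smallest axis-aligned rectangle enclosing the polygon.
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)

    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return x_center, y_center, width, height


# Example: a four-point polygon (pixel coordinates) in a 400x500 image.
polygon = [(100, 50), (300, 80), (250, 250), (120, 200)]
print(polygon_to_yolo_box(polygon, image_width=400, image_height=500))
```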

To enable Label Assist, click on the magic wand icon in the right sidebar in the annotation interface. Choose the Enhanced labeling option.

You can then point and click on any region that you want to include in an annotation:

To expand a label, click outside the region that the tool has selected. To refine a polygon, click inside the annotated region.

When you have labeled an object, click “Finish” to save the annotation. You will then be asked to choose a class for the annotation, after which you can label the next object in the image.

Step #3: Generate a Dataset Version

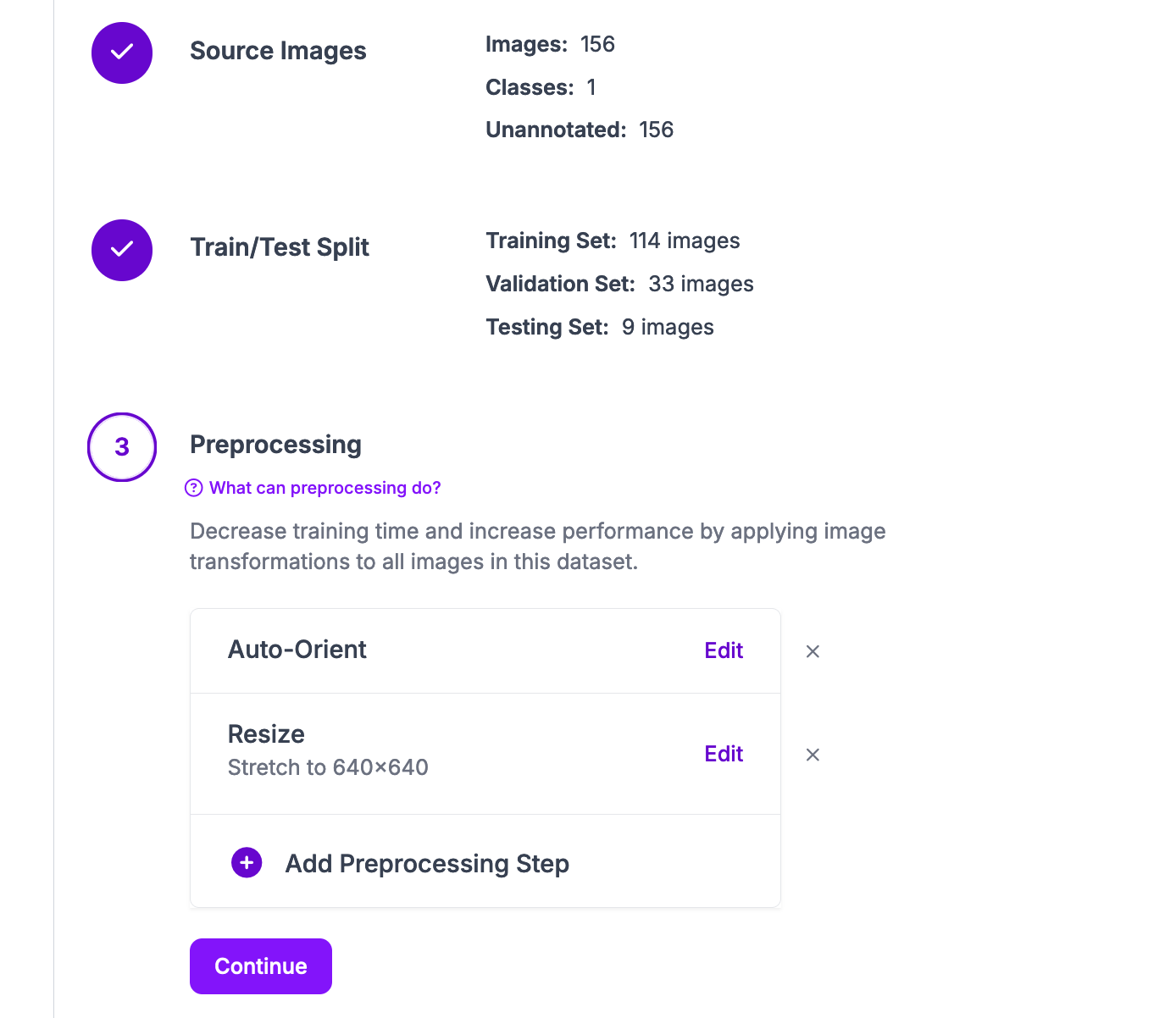

Once you have labeled your dataset, you are ready to generate a dataset version. A dataset version is a snapshot of a dataset, frozen in time. You can apply preprocessing and augmentation steps to dataset versions, allowing you to experiment with preprocessing and augmentation without changing the underlying dataset.

To generate a dataset version, click “Generate” in the left sidebar.

Then, you can select the preprocessing and augmentation steps that you want to apply.

For the first version of your project, we recommend leaving the preprocessing steps at their defaults and applying no augmentations. This will allow you to create a baseline against which you can compare model versions.

Once you have configured your dataset version, click “Generate” at the bottom of the page. Your dataset version will be generated.

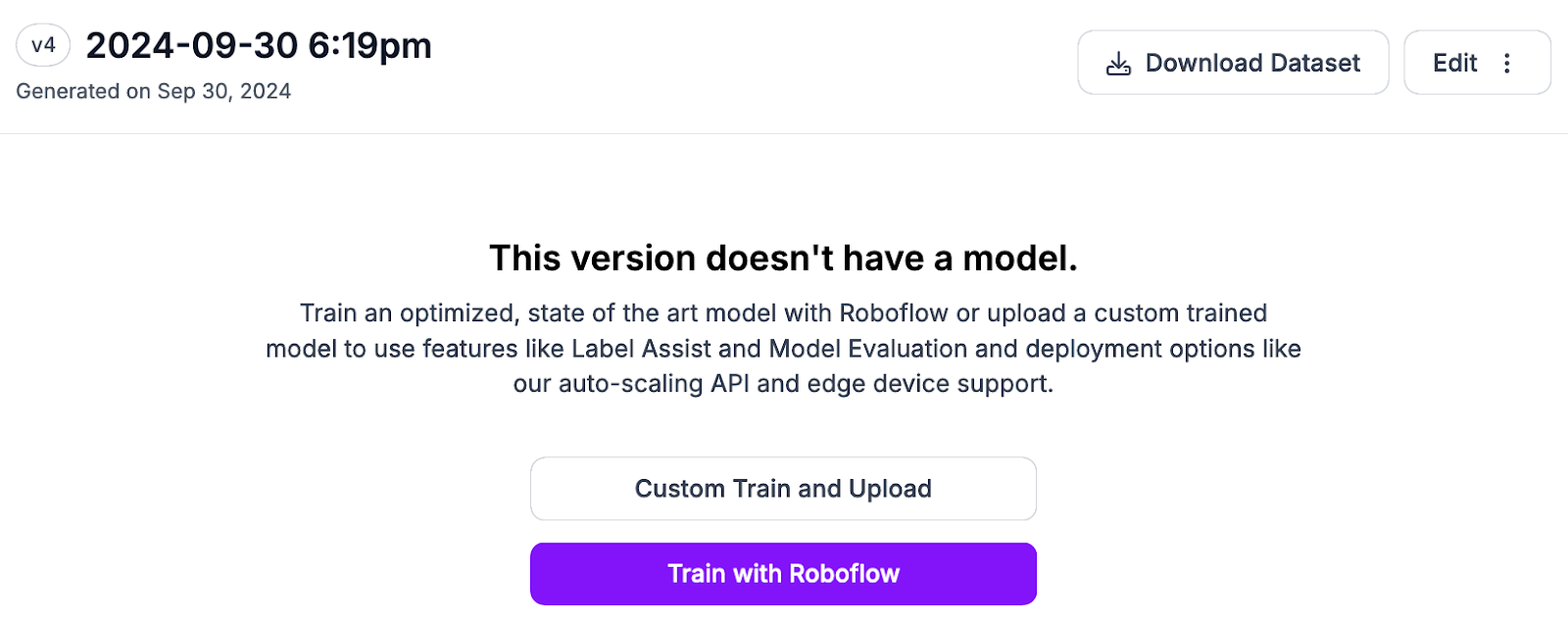

Step #4: Export Data

With a dataset version ready, you can export it for use in training a YOLO11 model.

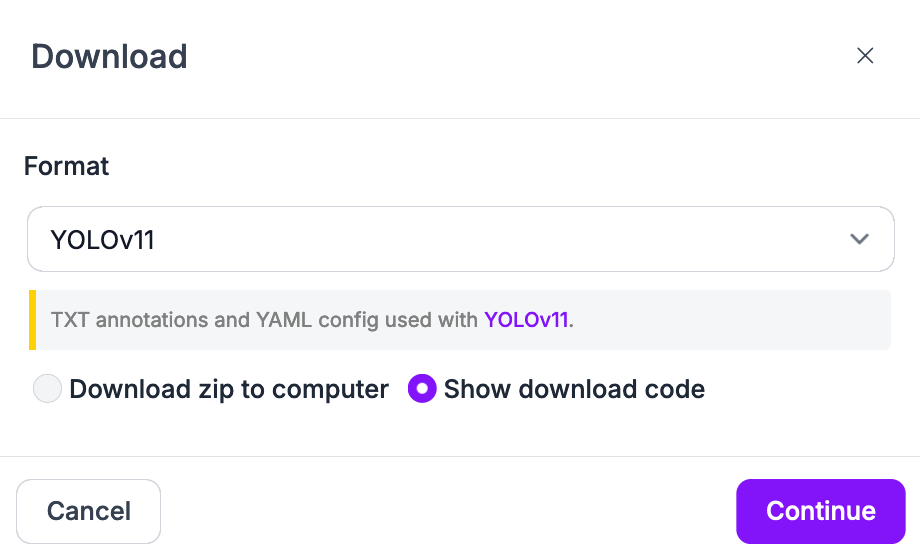

Click “Download Dataset”:

Then choose the YOLOv11 PyTorch TXT format:

Select the “Show download code” option.

A code snippet will appear that you can use to export your dataset.

Step #5: Train a YOLOv11 Model

With the export code snippet ready, you can start training a YOLOv11 model. We have prepared a notebook that you can use to train a model.

To get started, open the Roboflow YOLOv11 model training notebook in Google Colab. Below, we will walk through the main steps.

First, we need to install a few dependencies:

- Ultralytics, which we will use for training and inference;

- supervision, which we will use to post-process model predictions, and;

- Roboflow, which we will use to download our dataset.

%pip install ultralytics supervision roboflow

import ultralytics

ultralytics.checks()

Then, update the dataset download section to use the dataset export code snippet:

import os

HOME = os.getcwd()  # Assumption: HOME is the notebook's working directory

!mkdir {HOME}/datasets

%cd {HOME}/datasets

from google.colab import userdata

from roboflow import Roboflow

ROBOFLOW_API_KEY = userdata.get('ROBOFLOW_API_KEY')

rf = Roboflow(api_key=ROBOFLOW_API_KEY)

workspace = rf.workspace("your-workspace")

project = workspace.project("your-dataset")

version = project.version(VERSION)  # Replace VERSION with your dataset version number

dataset = version.download("yolov8")

Next, we need to make a few changes to the dataset files to work with the YOLOv11 training routine:

!sed -i '$d' {dataset.location}/data.yaml # Delete the last line

!sed -i '$d' {dataset.location}/data.yaml # Delete the second-to-last line

!sed -i '$d' {dataset.location}/data.yaml # Delete the third-to-last line

!echo 'test: ../test/images' >> {dataset.location}/data.yaml

!echo 'train: ../train/images' >> {dataset.location}/data.yaml

!echo 'val: ../valid/images' >> {dataset.location}/data.yaml

We can then train our model using the following command:

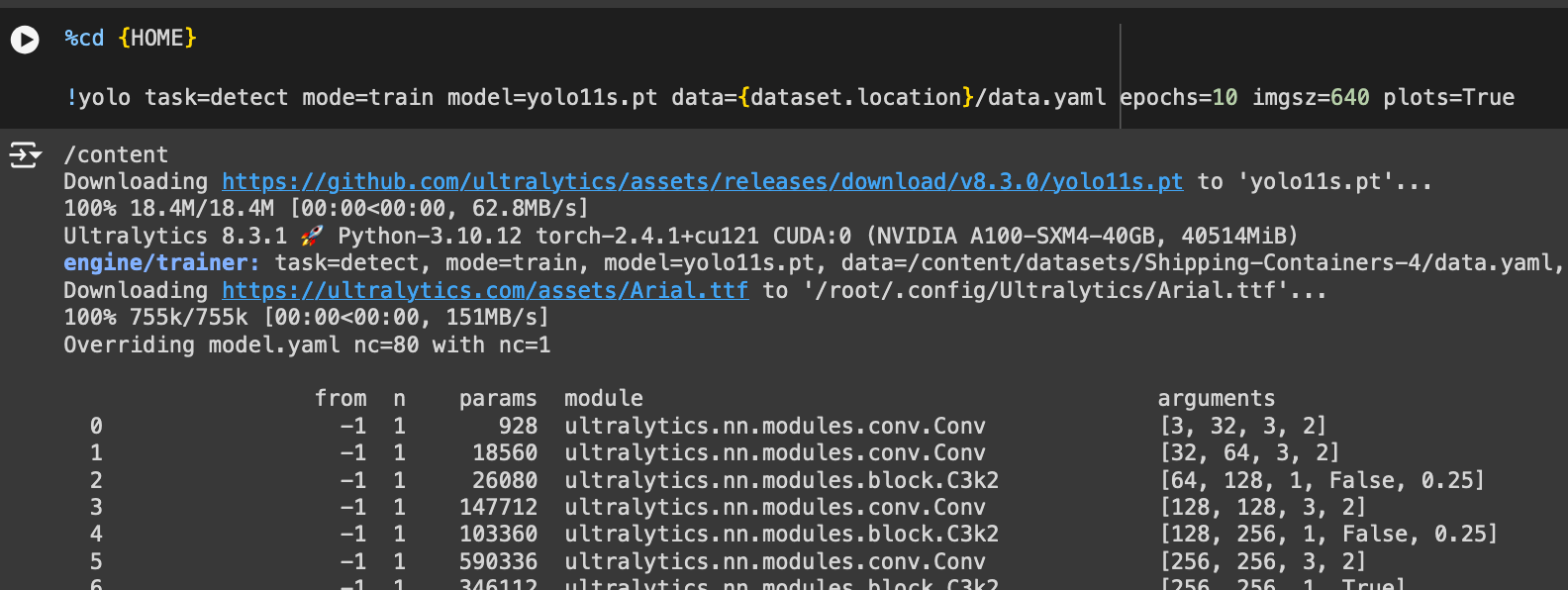

%cd {HOME}

!yolo task=detect mode=train model=yolo11s.pt data={dataset.location}/data.yaml epochs=10 imgsz=640 plots=True

Here, we train a YOLO11s model. If you want to train a model of a different size, replace yolo11s with the ID of the base model weights to use.

We will train for 10 epochs to test the training. For a model you want to use in production, you may want to train for 50-100 epochs.
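If you plan to try several model sizes or epoch counts, it can help to build the training command programmatically. The sketch below assumes the YOLO11 detection weights follow the `yolo11n`, `yolo11s`, `yolo11m`, `yolo11l`, `yolo11x` naming. `build_train_command` is a hypothetical helper for illustration: it only builds the command string and does not run training.

```python
# Size suffixes assumed for YOLO11 detection weights: nano, small, medium, large, extra large.
VALID_SIZES = ("n", "s", "m", "l", "x")


def build_train_command(data_yaml: str, size: str = "s", epochs: int = 10, imgsz: int = 640) -> str:
    """Build the `yolo` training command for a given model size (hypothetical helper)."""
    if size not in VALID_SIZES:
        raise ValueError(f"size must be one of {VALID_SIZES}, got {size!r}")
    return (
        f"yolo task=detect mode=train model=yolo11{size}.pt "
        f"data={data_yaml} epochs={epochs} imgsz={imgsz} plots=True"
    )


# A quick 10-epoch test run with the small model, then a longer run with the medium model.
print(build_train_command("data.yaml"))
print(build_train_command("data.yaml", size="m", epochs=100))
```

In a notebook, you could paste the printed command after a `!` to run it.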

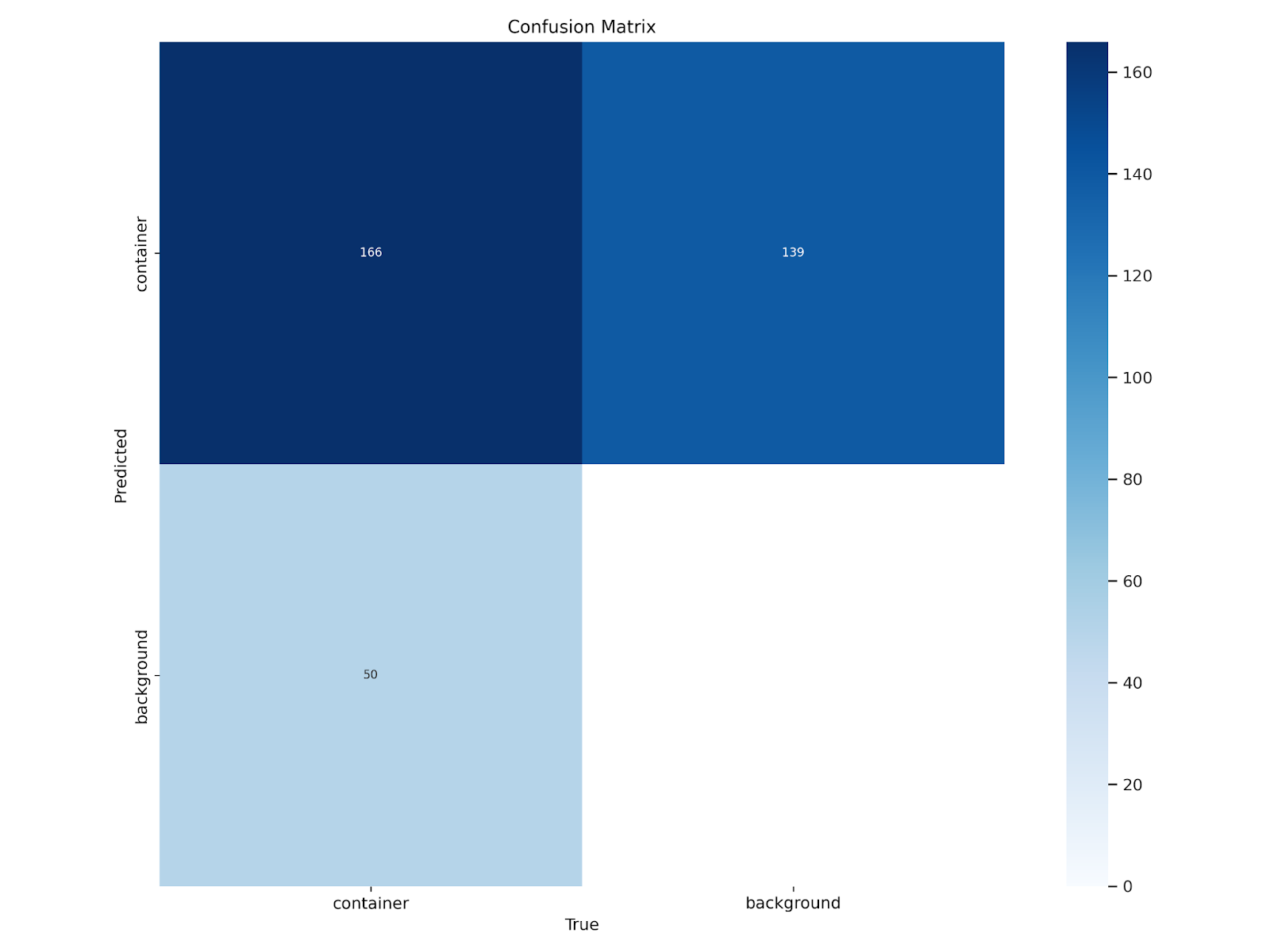

You can view the confusion matrix for your model using the following code:

from IPython.display import Image as IPyImage

IPyImage(filename=f'{HOME}/runs/detect/train/confusion_matrix.png', width=600)

Here is the confusion matrix for our model:

You can then run inference with the Ultralytics yolo command line interface:

!yolo task=detect mode=predict model={HOME}/runs/detect/train/weights/best.pt conf=0.25 source={dataset.location}/test/images save=True

This will run inference on the images in the test/images folder of our dataset. This command will return all predictions with a confidence greater than 0.25 (25%).
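To illustrate what the confidence threshold does, here is a minimal sketch that filters a list of predictions by confidence. The dictionary layout is a made-up example for illustration and is not the structure Ultralytics returns. `filter_by_confidence` is a hypothetical helper.

```python
from typing import Dict, List


def filter_by_confidence(predictions: List[Dict], threshold: float = 0.25) -> List[Dict]:
    """Keep only predictions whose confidence is greater than the threshold."""
    return [p for p in predictions if p["confidence"] > threshold]


# Hypothetical predictions for one image.
predictions = [
    {"class": "container", "confidence": 0.91},
    {"class": "container", "confidence": 0.40},
    {"class": "container", "confidence": 0.12},
]

kept = filter_by_confidence(predictions, threshold=0.25)
print(len(kept))  # 2 of the 3 predictions pass the threshold
```

A higher threshold returns fewer, more confident predictions. A lower threshold returns more predictions, at the cost of more false positives.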

We can then visualize the model results using the following code:

import glob

import os

from IPython.display import Image as IPyImage, display

latest_folder = max(glob.glob('/content/runs/detect/predict*/'), key=os.path.getmtime)

for img in glob.glob(f'{latest_folder}/*.jpg')[:3]:
    display(IPyImage(filename=img, width=600))
    print("\n")

Here is an example of a result from our model:

We have successfully trained a model using our shipping container dataset.

Conclusion

YOLOv11 is a computer vision model developed by Ultralytics, the creators of YOLOv5 and YOLOv8. You can train models for object detection, segmentation, classification, and other tasks with YOLOv11.

In this guide, we walked through how to train a YOLOv11 model. We prepared a dataset in Roboflow, then exported the dataset in the YOLOv11 PyTorch TXT format for use in training a model. We trained our model in a Google Colab environment using the YOLO11s weights, then ran it on images from our test set.

To explore more training notebooks from Roboflow, check out Roboflow Notebooks, our open source repository of computer vision notebooks.