YOLO (You Only Look Once) is a state-of-the-art (SOTA) object-detection algorithm introduced in a research paper by J. Redmon, et al. (2015). In the field of real-time object detection, the YOLO architecture was an advancement over two-stage detectors such as the Region-based Convolutional Neural Network (R-CNN).

Taking an entire image as input, this single-pass approach uses a single neural network to predict bounding boxes and class probabilities. In this article, we will elaborate on YOLOv11, the latest version developed by Ultralytics.
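To make the single-pass idea concrete, here is a minimal sketch of the output layout described in the original 2015 YOLO paper. That model divides the image into an S × S grid, and each cell predicts B boxes (x, y, w, h, confidence) plus C class probabilities.

This illustrates YOLOv1 only, not the YOLOv11 detection head. The helper names are hypothetical. The decoder also assumes w and h are already fractions of the image size, whereas YOLOv1 actually regresses their square roots.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Box:
    """A box in pixel coordinates, described by its center and size."""
    x_center: float
    y_center: float
    width: float
    height: float


def yolov1_output_shape(S: int, B: int, C: int) -> tuple[int, int, int]:
    """Each of the S x S cells predicts B boxes of 5 values each, plus C class scores."""
    return (S, S, B * 5 + C)


def decode_cell_box(row: int, col: int, x: float, y: float, w: float, h: float,
                    S: int, img_w: int, img_h: int) -> Box:
    """Convert one cell's box prediction to pixel coordinates (hypothetical helper).

    x, y: box center as an offset inside the grid cell, in [0, 1].
    w, h: box size as a fraction of the whole image, in [0, 1]
          (assumed already converted back from YOLOv1's square-root form).
    """
    cell_w = img_w / S
    cell_h = img_h / S
    return Box(
        x_center=(col + x) * cell_w,
        y_center=(row + y) * cell_h,
        width=w * img_w,
        height=h * img_h,
    )


if __name__ == "__main__":
    print(yolov1_output_shape(S=7, B=2, C=20))  # (7, 7, 30)
    print(decode_cell_box(3, 3, 0.5, 0.5, 0.2, 0.4, S=7, img_w=448, img_h=448))
```

With the paper's PASCAL VOC settings (S = 7, B = 2, C = 20), the network's output is a 7 × 7 × 30 tensor.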

About us: Viso Suite is an End-to-End Computer Vision Infrastructure that provides all the tools required to train, build, deploy, and manage computer vision applications at scale. By combining accuracy, reliability, and lower total cost of ownership, Viso Suite lends itself well to multi-use-case, multi-location deployments. To get started with enterprise-grade computer vision infrastructure, book a demo of Viso Suite with our team of experts.

What’s YOLOv11?

YOLOv11 is the latest version of YOLO, an advanced real-time object detection model. The YOLO family enters a new chapter with YOLOv11, a more capable and adaptable model that pushes the boundaries of computer vision.

The model supports computer vision tasks such as pose estimation and instance segmentation. Members of the CV community who used earlier YOLO versions will appreciate YOLOv11 for its better efficiency and optimized architecture.

Ultralytics CEO and founder Glenn Jocher said: "With YOLOv11, we set out to develop a model that offers both power and practicality for real-world applications. Thanks to its increased accuracy and efficiency, it's a versatile tool that's tailored to the real problems that different industries encounter."

Supported Tasks

Thanks to its innovative architecture, Ultralytics YOLOv11 is a versatile tool for developers and researchers alike. The CV community can use YOLOv11 to develop creative solutions and advanced models. It enables a variety of computer vision tasks, including:

- Object Detection

- Instance Segmentation

- Pose Estimation

- Oriented Detection

- Classification

Some of the main improvements include better feature extraction, more accurate detail capture, higher accuracy with fewer parameters, and faster processing that greatly improves real-time performance.

An Overview of YOLO Models

Here is an overview of the YOLO family of models up to YOLOv11.

| Model | Release | Authors | Tasks | Paper |
|---|---|---|---|---|
| YOLO | 2015 | Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi | Object Detection, Basic Classification | You Only Look Once: Unified, Real-Time Object Detection |
| YOLOv2 | 2016 | Joseph Redmon, Ali Farhadi | Object Detection, Improved Classification | YOLO9000: Better, Faster, Stronger |
| YOLOv3 | 2018 | Joseph Redmon, Ali Farhadi | Object Detection, Multi-scale Detection | YOLOv3: An Incremental Improvement |
| YOLOv4 | 2020 | Alexey Bochkovskiy, Chien-Yao Wang, Hong-Yuan Mark Liao | Object Detection, Basic Object Tracking | YOLOv4: Optimal Speed and Accuracy of Object Detection |
| YOLOv5 | 2020 | Ultralytics | Object Detection, Basic Instance Segmentation (via custom modifications) | None |
| YOLOv6 | 2022 | Chuyi Li, et al. | Object Detection, Instance Segmentation | YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications |
| YOLOv7 | 2022 | Chien-Yao Wang, Alexey Bochkovskiy, Hong-Yuan Mark Liao | Object Detection, Object Tracking, Instance Segmentation | YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors |
| YOLOv8 | 2023 | Ultralytics | Object Detection, Instance Segmentation, Keypoint (Pose) Estimation, Classification | None |
| YOLOv9 | 2024 | Chien-Yao Wang, I-Hau Yeh, Hong-Yuan Mark Liao | Object Detection, Instance Segmentation | YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information |
| YOLOv10 | 2024 | Ao Wang, Hui Chen, Lihao Liu, Kai Chen, Zijia Lin, Jungong Han, Guiguang Ding | Object Detection | YOLOv10: Real-Time End-to-End Object Detection |

Key Benefits of YOLOv11

YOLOv11 improves on YOLOv9 and YOLOv10, which were released earlier this year (2024). It has better architectural designs, more effective feature extraction, and better training methods. Its combination of speed, precision, and efficiency sets it apart, making it one of the most powerful models from Ultralytics to date.

YOLOv11's improved design enables more precise detection of fine details, even in difficult conditions. It also has better feature extraction, i.e., it can capture more patterns and details from images.

Compared with its predecessors, Ultralytics YOLOv11 offers several noteworthy improvements. Key developments include:

- Greater accuracy with fewer parameters: YOLOv11m is more computationally efficient without sacrificing accuracy. It achieves a higher mean Average Precision (mAP) on the COCO dataset with 22% fewer parameters than YOLOv8m.

- Wide range of supported tasks: YOLOv11 can perform a variety of CV tasks, including pose estimation, object detection, image classification, instance segmentation, and oriented object detection (OBB).

- Improved speed and efficiency: Faster processing comes from improved architectural designs and training pipelines that strike a balance between accuracy and performance.

- Fewer parameters: fewer parameters make the models faster without significantly affecting v11's accuracy.

- Improved feature extraction: YOLOv11 has an improved backbone and neck architecture that strengthens feature extraction, which results in more accurate object detection.

- Adaptability across contexts: YOLOv11 can be deployed in a wide range of environments, such as cloud platforms, edge devices, and systems with NVIDIA GPUs.
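The mAP figures above are measured on COCO. A predicted box counts as a true positive only if its Intersection over Union (IoU) with a ground-truth box reaches a threshold. The headline COCO metric averages AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05.

The sketch below covers only the IoU computation and those thresholds. A full mAP evaluation also needs one-to-one matching, precision-recall curves, and per-class averaging, all of which are omitted here. The helper names are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over Union for two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# COCO-style IoU thresholds: 0.50, 0.55, ..., 0.95
COCO_IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def true_positive_at(pred_box, gt_box):
    """For a single prediction/ground-truth pair, report whether it would match at each threshold."""
    overlap = iou(pred_box, gt_box)
    return {t: overlap >= t for t in COCO_IOU_THRESHOLDS}


if __name__ == "__main__":
    pred, gt = (0, 0, 10, 10), (2, 0, 12, 10)
    print(round(iou(pred, gt), 3))  # 0.667
    print(true_positive_at(pred, gt))
```

Because the strictest thresholds demand near-perfect overlap, gains in mAP@0.50:0.95 largely reflect tighter box localization, not just more objects found.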

YOLOv11: How to Use It

As of October 10, 2024, Ultralytics has not published a YOLOv11 paper or an architecture diagram. However, enough documentation has been released on GitHub. The model is less resource-intensive while still capable of handling complex tasks. It is also an excellent choice for demanding AI projects because it improves large-scale model performance.

The training process includes improvements to the augmentation pipeline, which make it easier for YOLOv11 to adapt to various tasks, from small projects to large-scale applications. Install the latest version of the Ultralytics package to start using YOLOv11:

```bash
# Quote the requirement so the shell does not treat ">" as output redirection
pip install "ultralytics>=8.3.0"
```

You can use YOLOv11 for real-time object detection and other computer vision applications with just a few lines of code. Use this code to load a pretrained YOLOv11 model and run inference on an image:

```python
from ultralytics import YOLO

# Load a pretrained YOLO11 nano detection model
model = YOLO("yolo11n.pt")

# Run inference on an image
results = model("path/to/image.jpg")

# Display the annotated result for the first (and only) image
results[0].show()
```
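The call above returns detections that have already been post-processed. For YOLOv8-style detectors such as YOLO11, that step typically includes non-maximum suppression (NMS), which drops lower-scoring boxes that overlap a higher-scoring one. YOLOv10, by contrast, was designed to be NMS-free.

The plain-Python sketch below is a greedy, class-agnostic version for illustration only. It is not Ultralytics' implementation, and the 0.5 IoU threshold is an arbitrary example value.

```python
def iou(a, b):
    """Intersection over Union for boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy, class-agnostic NMS. Returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining candidates that overlap the kept box too much
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))  # [0, 2]
```

Production detectors usually run NMS per class (or offset boxes by class) so that overlapping objects of different classes are not suppressed against each other.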

Components of YOLOv11

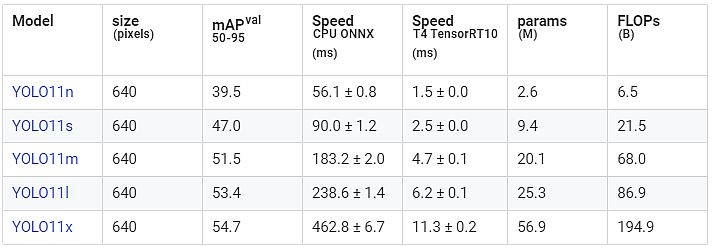

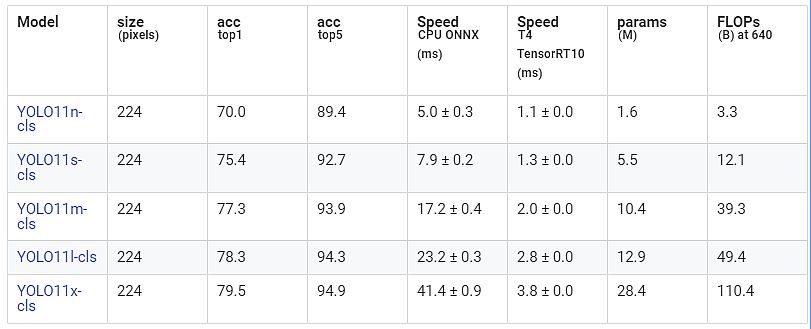

YOLOv11 includes the following model types: oriented bounding box (-obb), pose estimation (-pose), instance segmentation (-seg), bounding box detection (no suffix), and classification (-cls).

Each model type is available in the following sizes: nano (n), small (s), medium (m), large (l), and extra-large (x). Engineers can use models from the Ultralytics library to:

- Track objects and trace them along their paths.

- Export models: the library makes it easy to export to a variety of formats for different uses.

- Run various scenarios: they can train their models on a range of objects and image types.
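The task suffixes and size letters listed above combine into checkpoint file names such as yolo11n.pt, the name passed to YOLO() in the inference example earlier. The helper below is a hypothetical convenience for building those names. The task keys (detect, segment, pose, obb, classify) are an assumption chosen to mirror Ultralytics' task names.

```python
SIZES = {"n": "nano", "s": "small", "m": "medium", "l": "large", "x": "extra-large"}

# Suffix appended after the size letter; plain detection models have none
TASK_SUFFIXES = {
    "detect": "",
    "segment": "-seg",
    "pose": "-pose",
    "obb": "-obb",
    "classify": "-cls",
}


def checkpoint_name(size: str, task: str = "detect") -> str:
    """Build a YOLO11 checkpoint file name such as 'yolo11m-seg.pt' (hypothetical helper)."""
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {list(SIZES)}")
    if task not in TASK_SUFFIXES:
        raise ValueError(f"unknown task {task!r}; expected one of {list(TASK_SUFFIXES)}")
    return f"yolo11{size}{TASK_SUFFIXES[task]}.pt"


if __name__ == "__main__":
    print(checkpoint_name("n"))  # yolo11n.pt
    print(checkpoint_name("x", "obb"))  # yolo11x-obb.pt
```

The resulting string can be passed to YOLO(...) from the ultralytics package, exactly as in the inference snippet.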

Additionally, Ultralytics has announced the YOLOv11 Enterprise Models, which will be available on October 31st. Although they will be trained on larger proprietary custom datasets, teams can use them in the same way as the open-source YOLOv11 models.

YOLOv11 offers a high degree of flexibility for a wide range of applications, since it can be integrated into many workflows. In addition, teams can optimize it for deployment across multiple settings, including edge devices and cloud platforms.

With the Ultralytics Python package and Ultralytics HUB, engineers can already start using YOLOv11, bringing advanced CV capabilities to a broad range of AI projects.

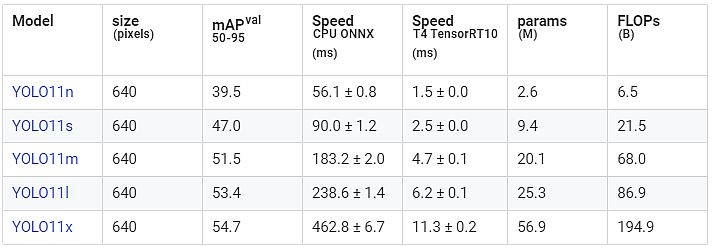

Performance Metrics and Supported Tasks

With its strong processing performance, efficiency, and compatibility with cloud and edge deployment, YOLOv11 offers flexibility in a variety of settings. Moreover, YOLO11 isn't just an upgrade; it's a more precise, effective, and adaptable model that can handle a range of CV tasks.

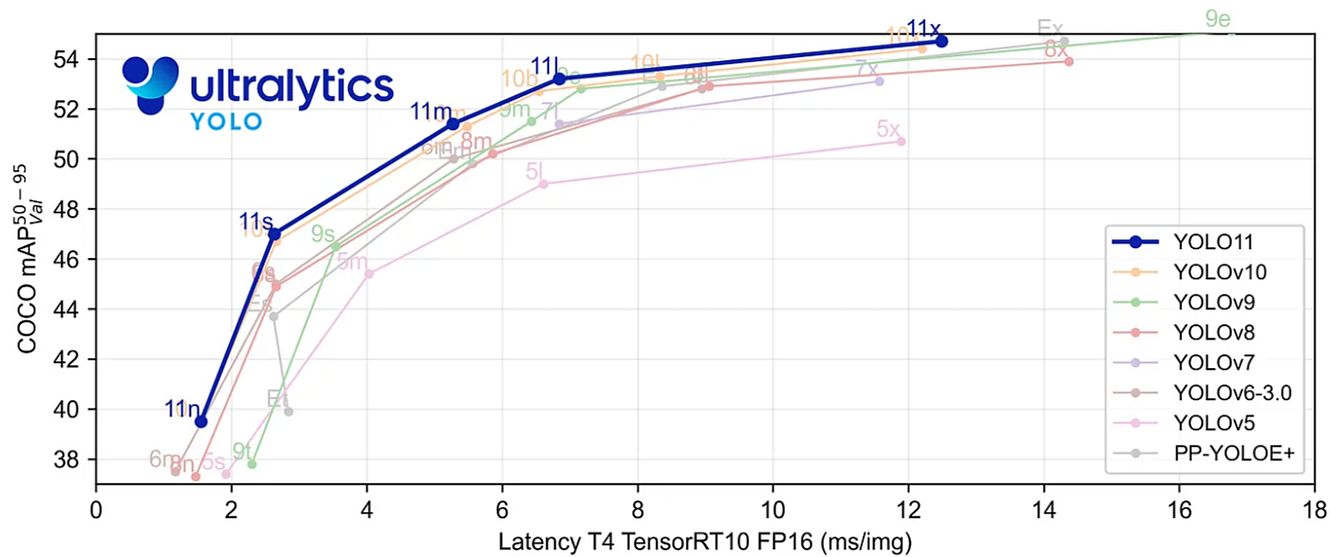

It offers better feature extraction with more accurate detail capture, higher accuracy with fewer parameters, and faster processing (better real-time performance). In both accuracy and speed, YOLO11 improves on its predecessors:

- Efficiency and speed: With 22% fewer parameters in YOLO11m than in YOLOv8m, it is well suited to edge applications and resource-constrained contexts. It also runs real-time object detection up to 2% faster.

- Accuracy improvement: in object detection on COCO, YOLO11 outperforms YOLOv8 by up to 2% in mAP (mean Average Precision).

- Notably, YOLO11m uses 22% fewer parameters than YOLOv8m while achieving a higher mean Average Precision (mAP) on the COCO dataset. It is therefore computationally lighter without compromising performance.

This means it runs more efficiently and produces more accurate results. Furthermore, YOLOv11 offers faster processing than YOLOv10, with inference times about 2% shorter, which makes it well suited for real-time applications.
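Relative speed claims such as "about 2% faster" depend heavily on hardware, image size, batch size, and export format, so it is worth measuring on your own target device. Below is a minimal timing sketch. It assumes the function being timed is a stand-in for an inference call such as `lambda: model("path/to/image.jpg")`. The warm-up and run counts are arbitrary example values.

```python
import statistics
import time


def median_latency_ms(fn, warmup=3, runs=20):
    """Call fn() repeatedly and return the median wall-clock latency in milliseconds."""
    for _ in range(warmup):  # warm-up calls (caches, lazy initialization) are not timed
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def percent_faster(baseline_ms, candidate_ms):
    """Positive when the candidate's latency is lower than the baseline's."""
    return (baseline_ms - candidate_ms) / baseline_ms * 100.0


if __name__ == "__main__":
    # Stand-in workloads; replace with real inference calls for the two models being compared
    baseline = median_latency_ms(lambda: sum(i * i for i in range(200_000)))
    candidate = median_latency_ms(lambda: sum(i * i for i in range(190_000)))
    print(f"{percent_faster(baseline, candidate):.1f}% faster")
```

The median is used rather than the mean so that occasional slow outliers (for example, from background processes) do not dominate a difference as small as a few percent.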

YOLOv11 Applications

Teams can use versatile YOLO11 models in a variety of computer vision applications, such as:

- Object tracking: This capability, which is crucial for many real-time applications, follows and monitors the movement of objects over a series of video frames.

- Object detection: Used in surveillance, autonomous driving, and retail analytics, this technique locates and identifies objects within images or video frames and draws bounding boxes around them.

- Image classification: This technique assigns images to predefined categories, making it suitable for uses like e-commerce product classification or wildlife observation.

- Instance segmentation: This process identifies and separates individual objects within an image pixel by pixel. Applications such as medical imaging and manufacturing defect detection can benefit from it.

- Pose estimation: Pose estimation identifies key points within an image or video frame to track movements or poses. It is used in a wide range of medical applications, sports analytics, and fitness tracking.

- Oriented object detection (OBB): This technique locates objects together with their orientation angle, making it possible to localize rotated objects more precisely. It is particularly useful for tasks involving robotics, warehouse automation, and aerial imagery.

Therefore, YOLO11 is adaptable enough to be used in many CV applications: autonomous driving, surveillance, healthcare imaging, smart retail, and industrial use cases.
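Oriented boxes, as used in the oriented object detection task above, are often parameterized by a center, a width and height, and a rotation angle. The sketch below converts that form into four corner points by rotating the axis-aligned corners about the center. The parameter order and the use of radians are assumptions for illustration, not a specific library's convention.

```python
import math


def obb_corners(cx, cy, w, h, angle_rad):
    """Return the 4 corners of a w x h box centered at (cx, cy), rotated by angle_rad.

    Corners are ordered starting from the (-w/2, -h/2) corner before rotation.
    """
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    half_w, half_h = w / 2, h / 2
    corners = []
    for dx, dy in ((-half_w, -half_h), (half_w, -half_h), (half_w, half_h), (-half_w, half_h)):
        # Standard 2D rotation of the offset (dx, dy), then translate back to the center
        corners.append((cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a))
    return corners


if __name__ == "__main__":
    print(obb_corners(10, 10, 4, 2, 0.0))  # [(8.0, 9.0), (12.0, 9.0), (12.0, 11.0), (8.0, 11.0)]
    print(obb_corners(0, 0, 4, 2, math.pi / 2))  # same box turned 90 degrees
```

At angle 0 the result is an ordinary axis-aligned box. Any non-zero angle produces a tighter fit around rotated objects, such as vehicles seen from above, than an axis-aligned box could.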

Implementing YOLOv11

Thanks to community contributions and broad applicability, YOLO models are the industry standard in object detection. With this release, YOLOv11 delivers efficient processing and is well suited for deployment on edge devices and in the cloud. It offers flexibility in a variety of settings and a more precise, effective, and adaptable approach to computer vision tasks. We're excited to see further developments in open-source computer vision and the YOLO series!

To get started with YOLOv11 for open-source, research, and student projects, we suggest checking out the Ultralytics GitHub repository. To learn more about the legal aspects of implementing computer vision in business applications, check out our guide to model licensing.

Get Started With Enterprise Computer Vision

Viso Suite is an End-to-End Computer Vision Infrastructure that provides all the tools required to train, build, deploy, and manage computer vision applications at scale. Our infrastructure is designed to shorten the time it takes to deploy real-world applications, leveraging existing camera investments and running at the edge. It combines accuracy, reliability, and lower total cost of ownership, lending itself well to multi-use-case, multi-location deployments.

Viso Suite is fully compatible with all popular machine learning and computer vision models.

We work with large companies worldwide to develop and run their AI applications. To start implementing state-of-the-art computer vision, get in touch with our team of experts for a personalized demo of Viso Suite.

Frequently Asked Questions

Q1: What are the main advantages of YOLOv11?

Answer: The main advantages of YOLO11 are better accuracy, faster speed, fewer parameters, improved feature extraction, adaptability across different contexts, and support for various tasks.

Q2: Which tasks can YOLOv11 perform?

Answer: With YOLO11 you can classify images, detect objects, segment object instances, estimate poses, and detect oriented objects.

Q3: How do you train the YOLOv11 model for object detection?

Answer: Engineers can train the YOLO11 model for object detection using either Python or CLI commands. In Python, they import YOLO from the ultralytics package, load a model, and then call the model.train() method.
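Training on custom data requires labels in the Ultralytics detection format. Each image has one text file, with one row per object: the class index followed by box center x, center y, width, and height, each normalized to the 0-1 range by the image size. Below is a minimal sketch that converts a pixel-space (x1, y1, x2, y2) box into such a row. The function name and six-decimal formatting are illustrative choices.

```python
def to_yolo_label(class_id, x1, y1, x2, y2, img_w, img_h):
    """Convert a pixel box (x1, y1, x2, y2) to a normalized 'class xc yc w h' label row."""
    if not (0 <= x1 < x2 <= img_w and 0 <= y1 < y2 <= img_h):
        raise ValueError("box must lie inside the image with x1 < x2 and y1 < y2")
    x_center = (x1 + x2) / 2 / img_w
    y_center = (y1 + y2) / 2 / img_h
    width = (x2 - x1) / img_w
    height = (y2 - y1) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


if __name__ == "__main__":
    print(to_yolo_label(0, 100, 50, 300, 250, img_w=640, img_h=480))
    # 0 0.312500 0.312500 0.312500 0.416667
```

Normalizing by image size keeps the labels valid when images are resized during training, which is why the format stores fractions rather than pixels.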

Q4: Can YOLOv11 be used on edge devices?

Answer: Yes. Thanks to its lightweight architecture and efficient processing, YOLOv11 can be deployed on many platforms, including edge devices.