Deep learning (DL) revolutionised computer vision (CV) and artificial intelligence in general. It was a huge breakthrough (circa 2012) that allowed AI to blast into the headlines and into our lives like never before. ChatGPT, DALL-E 2, autonomous cars, etc.: deep learning is the engine driving these stories. DL is so good that it has reached a point where almost every problem involving AI is now most likely being solved using it. Just take a look at any academic conference/workshop and scan through the presented publications. Nearly all of them, no matter who, what, where or when, present their solutions with DL.

The problems that DL is solving are complex. Hence, necessarily, DL is a complex topic. It’s not easy to come to grips with what is happening under the hood of these applications. Trust me, there’s heavy statistics and mathematics being utilised that we take for granted.

In this post I thought I’d try to explain how DL works. I want this to be a “Deep Learning for Dummies” kind of article. I’m going to assume that you have a high school background in mathematics and nothing more. (So, if you’re a seasoned computer scientist, this post isn’t for you. Next time!)

Let’s start with a simple equation:

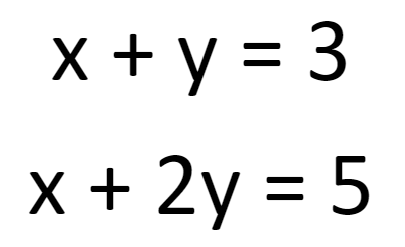

What are the values of x and y? Well, going back to high school mathematics, you’ll know that x and y can take an infinite number of values. To get one specific solution for x and y together we need more information. So, let’s add some more information to our first equation by providing another one:

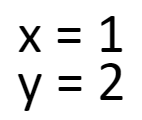

Ah! Now we’re talking. A quick subtraction here, a little substitution there, and we’ll get the following solution:

Solved!

More information (more data) gives us more understanding.
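To make that concrete, here is a minimal sketch in Python. The two equations in it (x + y = 10 and x - y = 2) are made up for illustration and are not necessarily the ones shown in the images; the function name `solve_two_equations` is also just a placeholder of mine.

```python
def solve_two_equations(a1, b1, c1, a2, b2, c2):
    """Solve a1*x + b1*y = c1 and a2*x + b2*y = c2 using Cramer's rule."""
    det = a1 * b2 - a2 * b1
    if det == 0:
        # The second equation adds no new information (or contradicts the first).
        raise ValueError("no unique solution for x and y")
    x = (c1 * b2 - c2 * b1) / det
    y = (a1 * c2 - a2 * c1) / det
    return x, y


# One equation alone (hypothetical: x + y = 10) has endless solutions.
# Here are just a few of them.
some_solutions = [(x, 10 - x) for x in range(-2, 3)]

# Adding a second hypothetical equation (x - y = 2) leaves exactly one.
x, y = solve_two_equations(1, 1, 10, 1, -1, 2)

print(some_solutions)
print(x, y)  # 6.0 4.0
```

With only the first equation, every pair in `some_solutions` is equally valid. The second equation is the extra information that narrows things down to a single answer.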

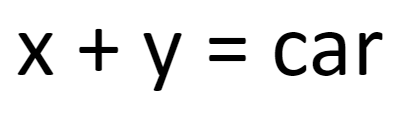

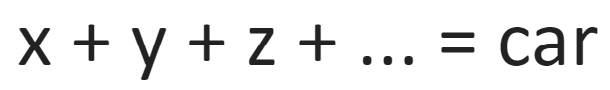

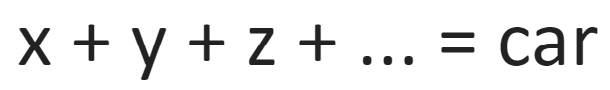

Now, let’s rewrite the first equation a little to provide an oversimplified definition of a car. We can think of it as a definition we can use to look for cars in images:

We’re stuck with the same dilemma, aren’t we? One possible solution is this:

But there are many, many others.

In fairness, however, that equation is far too simple for reality. Cars are complicated objects. How many variables should a definition have to visually describe a car, then? One would need to take colour, shape, orientation of the car, makes, brands, etc. into consideration. On top of that we have different weather scenarios to keep in mind (e.g. a car will look different in an image when it’s raining compared to when it’s sunny; everything looks different in inclement weather!). And then there are also lighting conditions to consider. Cars look different at night than in the daytime.

We’re talking about millions and millions of variables! That is what is needed to accurately define a car for a machine to use. So, we would need something like this, where the number of variables would go on and on and on, ad nauseam:

This is what a neural network sets up: equations exactly like this, with millions and millions and sometimes billions or trillions of variables. Here’s a picture of a small neural network (incidentally, these networks are called neural networks because they’re inspired by how neurons are interconnected in our brains):

Each of the circles in the image is a neuron that can be thought of as a single variable, except that in technical terms these variables are called “parameters”, which is what I’m going to call them from now on in this post. These neurons are interconnected and arranged in layers, as can be seen above.

The network above has only 39 parameters. To use our example of the car from earlier, that’s not going to be enough for us to adequately define a car. We need more parameters. Reality is far too complex for us to handle with just a handful of unknowns. That is why some of the latest image recognition DL networks have parameter counts in the billions. That means layers, and layers, and layers of neurons.
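If you are curious how quickly the unknowns pile up, here is a rough sketch. It assumes a fully connected network and uses the common convention that the parameters are one weight per connection between neighbouring layers plus one bias per neuron (a slightly more detailed picture than “one variable per circle”). The layer sizes are invented for illustration and are not those of the network pictured above; `count_parameters` is a hypothetical helper.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of neurons in each layer,
    starting with the input layer.
    """
    # One weight for every connection between neighbouring layers.
    weights = sum(n_in * n_out
                  for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
    # One bias for every neuron after the input layer.
    biases = sum(layer_sizes[1:])
    return weights + biases


small = count_parameters([3, 4, 2])
larger = count_parameters([1000, 1000, 1000, 10])

print(small)   # 26
print(larger)  # 2012010
```

Going from a handful of neurons per layer to a thousand already takes the count from a couple of dozen to around two million, which gives a feel for how real networks reach the billions.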

Now, initially when a neural network is set up with all these parameters, these parameters (variables) are “empty”, i.e. they haven’t been initialised to anything meaningful. The neural network is unusable: it is “blank”.

In other words, with our equation from earlier, we have to work out what each x, y, z, … is in the definitions we wish to solve for.

To do this, we need more information, don’t we? Just like in the very first example of this post. We don’t know what x, y, and z (and so on) are unless we get more data.

This is where the idea of “training a neural network” or “training a model” comes in. We throw images of cars at the neural network and get it to work out for itself what all the unknowns are in the equations we have set up. Because there are so many parameters, we need lots and lots and lots of information/data (cf. big data).
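Here is a toy version of that idea, as a sketch only. Instead of millions of parameters and car images, it has two parameters (`w` and `b`) and five made-up data points that secretly follow y = 2x + 1. The parameters start out as meaningless random numbers, and a simple technique called gradient descent nudges them, step by step, towards values that fit the data. The learning rate, step count and data are all assumptions chosen for illustration; real networks are trained on the same principle but at a vastly larger scale.

```python
import random


def train(xs, ys, learning_rate=0.05, steps=2000, seed=0):
    """Fit y = w*x + b to the data with plain gradient descent."""
    rng = random.Random(seed)
    # "Blank" parameters: random values that mean nothing yet.
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    n = len(xs)
    for _ in range(steps):
        # How wrong is the current guess on each data point?
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Nudge each parameter in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Made-up training data that follows y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [2 * x + 1 for x in xs]

w, b = train(xs, ys)
print(round(w, 3), round(b, 3))  # should land very close to 2 and 1
```

Nobody told the program that the answer was 2 and 1. It worked the unknowns out from the data, which is the essence of what training does.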

And so we get the whole notion of why data is worth so much nowadays. DL has given us the ability to process large amounts of data (with tonnes of parameters), to make sense of it, to make predictions from it, to gain new insight from it, to make informed decisions from it. Prior to the big data revolution, nobody collected so much data because we didn’t know what to do with it. Now we do.

One more thing to add to all this: the more parameters in a neural network, the more complex the equations/tasks it can solve. It makes sense, doesn’t it? This is why AI is getting better and better. People are building larger and larger networks (GPT-4 is reported to have parameters in the trillions, GPT-3 has 175 billion, GPT-2 has 1.5 billion) and training them on swathes of data. The problem is that there’s a limit to just how big we can go (as I discuss in this post and then this one), but this is a discussion for another time.

To conclude, these, ladies and gentlemen, are the very basics of deep learning and why it has been such a disruptive technology. We are able to set up these equations with millions/billions/trillions of parameters and get machines to work out what each of these parameters should be set to. We define what we wish to solve for (e.g. cars in images) and the machine works the rest out for us as long as we provide it with enough data. And so AI is able to solve more and more complex problems in our world and do mind-blowing things.

(Note: If this post is found on a site other than zbigatron.com, a bot has stolen it; it’s been happening a lot lately.)
