The following article was contributed by Timothy Malche, an assistant professor in the Department of Computer Applications at Manipal University Jaipur.

In this blog post, we will learn how to build a computer vision project to identify, classify, and measure the size of fish in an image.

Accurately measuring fish size helps in estimating the population and health of fish stocks, which supports sustainable fisheries management. It can also help ensure compliance with legal size limits, helping to regulate catch sizes and prevent overfishing.

In aquaculture, automated size measurement also allows continuous monitoring of fish growth, which helps optimize feeding schedules and improve overall farm productivity.

Let's get started building our application.

Identifying fish species and measuring fish sizes

To identify fish species, we are going to train a computer vision model that can find the location of fish in an image. This is done by collecting images of fish, then labeling each fish with a bounding box or polygon tool that encloses it. The species is assigned as the class label for that bounding box or polygon. The following is an example of using a bounding box to label fish species from the Roboflow Fish Dataset.
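To make the idea of "a box plus a class label" concrete, here is a minimal sketch of how one bounding box label could be written to disk if the dataset were exported in YOLO TXT format (one of several export formats Roboflow offers). The function name `to_yolo_line` and the numeric class ID are hypothetical and used only for illustration.

```python
def to_yolo_line(class_id: int, left: float, top: float, right: float,
                 bottom: float, img_w: int, img_h: int) -> str:
    """Convert a pixel-space box into one YOLO TXT annotation line.

    YOLO stores the box center and size normalized to the image dimensions,
    preceded by an integer class ID (hypothetical mapping, e.g. 0 = one species).
    """
    cx = (left + right) / 2 / img_w
    cy = (top + bottom) / 2 / img_h
    w = (right - left) / img_w
    h = (bottom - top) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# A 200 x 100 px fish box in a 640 x 480 image.
print(to_yolo_line(0, 100, 50, 300, 150, 640, 480))
# prints "0 0.312500 0.208333 0.312500 0.208333"
```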

There are several methods for determining fish size, such as keypoint detection, stereo vision, and segmentation-based methods.

In this project, we will use the object detection approach, which can run in near real time. Rather than measuring length in physical units, the model assigns each fish a size class (for example, small or big) based on its visual appearance.

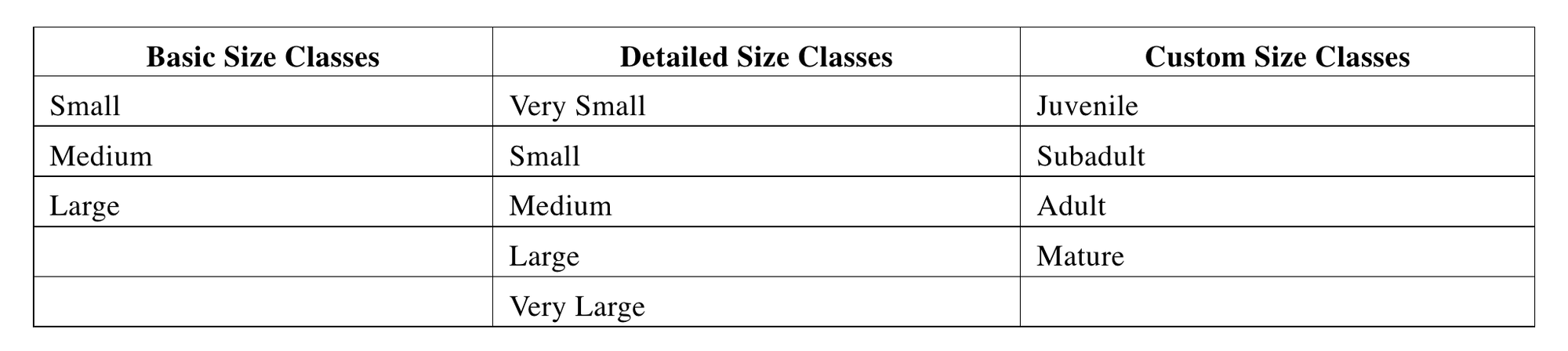

The process begins with identifying the fish species, followed by measuring its size. Multiple size classes can be defined for each species. Here are some common examples and considerations for size classification:

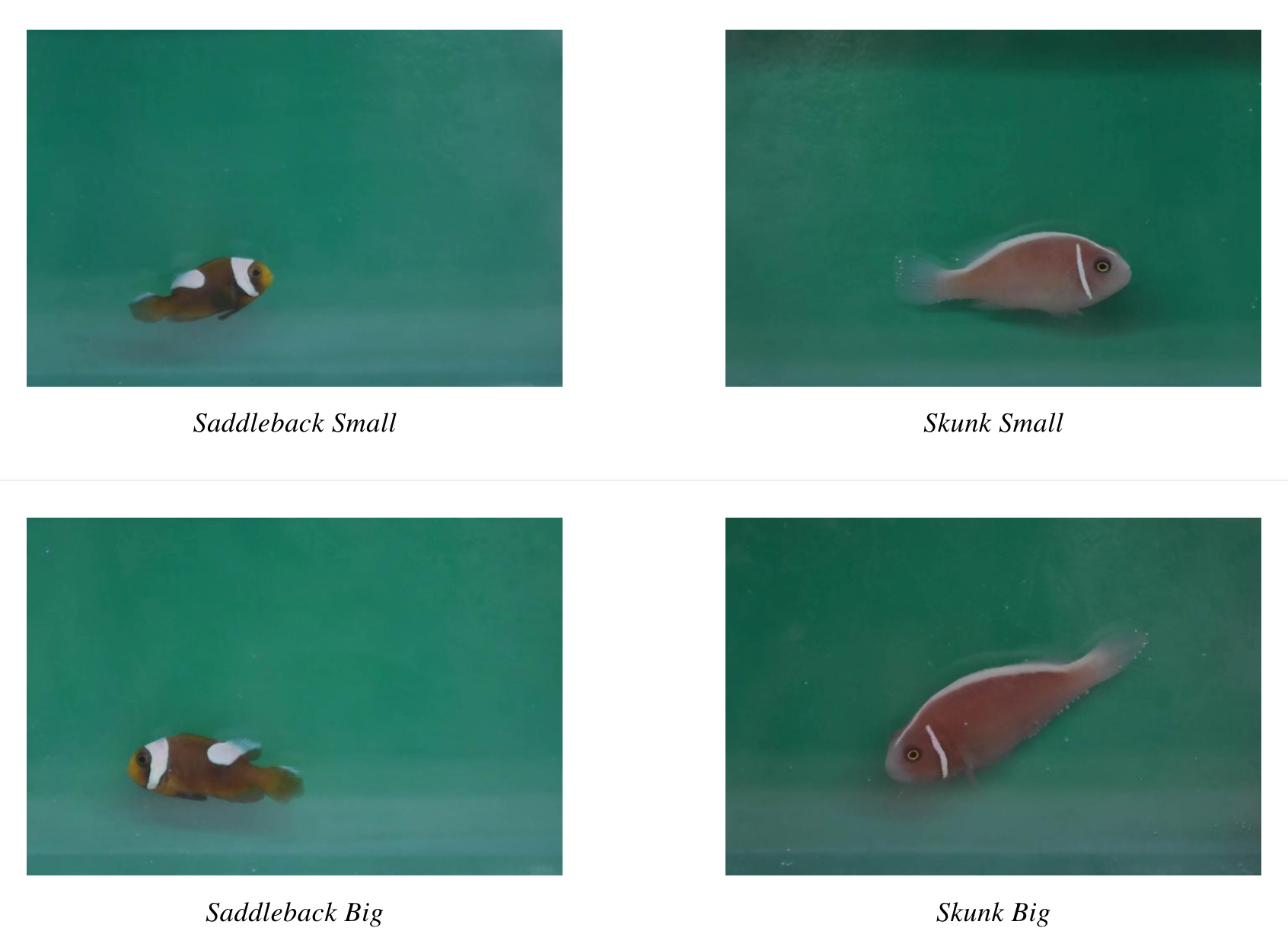

These size classes can be correlated with actual physical measurements. In our example, we categorize two fish species, Saddleback and Pink Skunk clownfish, into two main size classes: small and big (as shown in the following images).

The images above demonstrate how fish species and sizes can be identified visually: small and big Saddleback clownfish can be distinguished by their physical appearance, and the same is true for small and big Skunk clownfish. In the same way, a dataset of other fish species in various sizes (e.g., small, medium, and large) can be collected, labeled, and used to train a computer vision model.
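If you want to tie size classes to physical measurements, one possible approach is to calibrate the camera with a reference object of known size and convert box dimensions from pixels to centimetres. The sketch below assumes a fixed, hypothetical `PIXELS_PER_CM` calibration and hypothetical per-species thresholds; neither is part of the original project. It also assumes the fish is roughly side-on and aligned with the image axes, so the longer side of its bounding box approximates body length.

```python
# Hypothetical calibration: pixels per centimetre, measured from a reference
# object of known size photographed with the same camera setup.
PIXELS_PER_CM = 12.5

# Hypothetical per-species length thresholds (cm) separating "small" from "big".
SIZE_THRESHOLDS_CM = {
    "Saddleback clownfish": 8.0,
    "Skunk clownfish": 7.0,
}


def estimate_length_cm(box_width_px: float, box_height_px: float,
                       pixels_per_cm: float = PIXELS_PER_CM) -> float:
    """Approximate fish length as the longer side of its bounding box."""
    return max(box_width_px, box_height_px) / pixels_per_cm


def size_class(species: str, length_cm: float) -> str:
    """Map an estimated length to a coarse size class for the given species."""
    threshold = SIZE_THRESHOLDS_CM[species]
    return "big" if length_cm >= threshold else "small"


length = estimate_length_cm(125.0, 60.0)
print(length, size_class("Saddleback clownfish", length))
# prints "10.0 big"
```

A fixed pixels-per-cm value only holds when the distance between camera and fish stays constant; stereo vision or keypoint-based methods mentioned earlier relax that assumption.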

In our project, we will use the Roboflow polygon tool to annotate the fish in each image (see figure below) for training object detection models.

We will label each fish with a class that combines its species and size (for example, "Saddleback clownfish_small") so that the object detection model performs species classification and size categorization in a single step. This has valuable applications in areas such as fisheries management and industrial sorting.
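Because each class name packs both species and size into one string, downstream code often needs to split them apart. A minimal sketch, assuming class names follow the "species_size" pattern used in the application code later in this post (e.g. "Skunk clownfish_big"); `parse_label` and `FishLabel` are hypothetical helpers, not part of any Roboflow library.

```python
from collections import namedtuple

FishLabel = namedtuple("FishLabel", ["species", "size"])


def parse_label(class_name: str) -> FishLabel:
    """Split a combined class name such as 'Saddleback clownfish_small'."""
    # rpartition splits on the LAST underscore, so species names that
    # contain spaces (or earlier underscores) are kept intact.
    species, sep, size = class_name.rpartition("_")
    if not sep:
        raise ValueError(f"Expected '<species>_<size>', got {class_name!r}")
    return FishLabel(species=species, size=size)


print(parse_label("Saddleback clownfish_small"))
# prints "FishLabel(species='Saddleback clownfish', size='small')"
```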

Steps for building the fish size measurement system

We will follow the three simple steps outlined below to quickly build this project.

- Prepare the dataset

- Train an object detection model

- Build an application that uses our model and returns the size of fish

Step #1: Prepare the dataset

Preparing a dataset is an essential step in building any computer vision model. For this project, I used the "Measure the size of the fish" computer vision project dataset from Roboflow Universe. I downloaded the dataset images, created my own object detection project (named fish-size-detection), and uploaded all the images to this project workspace.

Note: This dataset is labeled to identify specific species of fish; it cannot be used to identify arbitrary fish. To identify the fish you care about, we recommend collecting your own data and then following this guide to train your own computer vision model with that data.

You can use the Roboflow dataset health check option to assess and improve the quality of your dataset. It provides a range of statistics about the dataset associated with a project.
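One statistic worth checking is class balance: a size class with very few examples is usually learned poorly. The sketch below is not how Roboflow computes its health check; it simply counts annotations per class, assuming the dataset has been exported as COCO JSON (a format with `categories` and `annotations` lists). The tiny `example` dictionary is made-up data for illustration.

```python
from collections import Counter


def class_counts(coco: dict) -> Counter:
    """Count annotations per class name in a COCO-style annotation dict."""
    id_to_name = {cat["id"]: cat["name"] for cat in coco["categories"]}
    return Counter(id_to_name[ann["category_id"]] for ann in coco["annotations"])


# Illustrative data only, not taken from the real dataset.
example = {
    "categories": [
        {"id": 0, "name": "Saddleback clownfish_small"},
        {"id": 1, "name": "Saddleback clownfish_big"},
    ],
    "annotations": [
        {"id": 1, "image_id": 1, "category_id": 0},
        {"id": 2, "image_id": 1, "category_id": 1},
        {"id": 3, "image_id": 2, "category_id": 0},
    ],
}
print(class_counts(example).most_common())
# prints "[('Saddleback clownfish_small', 2), ('Saddleback clownfish_big', 1)]"
```

With a real export you would load the dictionary with `json.load` from the annotation file instead of writing it by hand.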

All the images in the dataset are labeled for object detection using the polygon tool, as shown in the image below. Follow the Roboflow polygon annotation guide to learn more about polygon annotation.
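An object detection model predicts axis-aligned boxes, so when polygon labels are used to train one, each polygon effectively reduces to the smallest box that encloses it. The sketch below illustrates that reduction and, as an optional extra size cue, computes the polygon's area with the shoelace formula. The outline coordinates are made up, and the helper names are hypothetical.

```python
def polygon_to_bbox(points):
    """Return the axis-aligned box (left, top, right, bottom) enclosing a polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def polygon_area(points):
    """Polygon area in square pixels via the shoelace formula."""
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2


# Illustrative fish-like outline in pixel coordinates.
outline = [(10, 40), (60, 20), (120, 35), (60, 60)]
print(polygon_to_bbox(outline), polygon_area(outline))
# prints "(10, 20, 120, 60) 2200.0"
```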

I then generated a dataset version. A dataset version must be generated before a model can be trained on it. You can generate a dataset version using the "Generate" tab and the "Create New Version" option in the Roboflow interface.

Step #2: Train an object detection model

Once the dataset version is generated, you can use the Roboflow auto-training option to train a computer vision model. To start, click the "Train with Roboflow" button.

Then choose Roboflow 3.0:

Choose Fast -> Train from Public Checkpoint and click "Start Training". Roboflow will then train the model.

When model training is complete, you will see the model metrics, and the model will be available for inference on the Roboflow platform as well as through the Roboflow inference API.
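Detection metrics such as precision and recall depend on deciding when a predicted box "matches" a labeled one. The following is a simplified sketch, not Roboflow's evaluation code: it greedily matches predictions (highest confidence first) to same-class ground-truth boxes at IoU of at least 0.5, then computes precision and recall. mAP, which averages precision across recall levels and classes, is omitted for brevity. The sample boxes are made up.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (left, top, right, bottom)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, truths, iou_thr=0.5):
    """Greedy one-to-one matching of predicted boxes to ground-truth boxes.

    preds:  list of (box, class_name, confidence)
    truths: list of (box, class_name)
    """
    matched = set()
    tp = 0
    for box, cls, _ in sorted(preds, key=lambda p: p[2], reverse=True):
        best_j, best_iou = None, iou_thr
        for j, (t_box, t_cls) in enumerate(truths):
            if j in matched or t_cls != cls:
                continue
            score = iou(box, t_box)
            if score >= best_iou:
                best_j, best_iou = j, score
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp   # predictions with no matching label
    fn = len(truths) - tp  # labels the model missed
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if truths else 0.0
    return precision, recall


truths = [((0, 0, 10, 10), "Skunk clownfish_big"),
          ((20, 20, 30, 30), "Skunk clownfish_small")]
preds = [((1, 1, 10, 10), "Skunk clownfish_big", 0.9),
         ((50, 50, 60, 60), "Skunk clownfish_small", 0.6)]
print(precision_recall(preds, truths))
# prints "(0.5, 0.5)"
```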

You can test the model from the "Visualize" tab.

Model Testing

The trained model is available here. You can use the model yourself with the example below, or train your own model.

Step #3: Build the inference application

This is the final step. Here we will build a web application using Gradio. The application lets us either upload an image or capture one with a camera. It then sends the image to our computer vision model (trained with Roboflow in the previous step) to run prediction, and displays the resulting annotated image.

Here is the code for our application:

```python
# import the required libraries
from inference_sdk import InferenceHTTPClient, InferenceConfiguration
import gradio as gr
from PIL import Image, ImageDraw, ImageFont
import os

# initialize the client (replace the placeholder with your Roboflow API key)
CLIENT = InferenceHTTPClient(
    api_url="https://detect.roboflow.com",
    api_key="ROBOFLOW_API_KEY"
)

# define a prediction function to run inference on an image
def infer_image(image, confidence=0.5, iou_threshold=0.5):
    # save the uploaded or captured image to a file
    image_path = "uploaded_image.jpg"
    image.save(image_path)

    # set a custom configuration from the slider values
    custom_configuration = InferenceConfiguration(
        confidence_threshold=confidence, iou_threshold=iou_threshold
    )

    # run inference on the image using the client
    with CLIENT.use_configuration(custom_configuration):
        result = CLIENT.infer(image_path, model_id="fish-size-detection/2")

    # extract predictions
    predictions = result.get('predictions', [])

    # define a color map for the different classes of the model
    class_colors = {
        "Skunk clownfish_small": "red",
        "Saddleback clownfish_big": "blue",
        "Saddleback clownfish_small": "yellow",
        "Skunk clownfish_big": "green"
    }

    # draw bounding boxes on the image
    draw = ImageDraw.Draw(image)
    try:
        font = ImageFont.truetype("arial.ttf", 20)
    except IOError:
        font = ImageFont.load_default()

    for pred in predictions:
        # predictions give the box center (x, y) plus width and height;
        # convert them to corner coordinates for drawing
        x = pred['x']
        y = pred['y']
        width = pred['width']
        height = pred['height']
        left = x - width / 2
        top = y - height / 2
        right = x + width / 2
        bottom = y + height / 2

        # get the color for the class
        color = class_colors.get(pred['class'], "green")  # default to green if the class is not in the color map
        draw.rectangle([left, top, right, bottom], outline=color, width=3)

        # draw the label on a filled background just above the box
        label = f"{pred['class']} ({pred['confidence']:.2f})"
        text_size = draw.textbbox((0, 0), label, font=font)
        text_width = text_size[2] - text_size[0]
        text_height = text_size[3] - text_size[1]
        text_background = [(left, top - text_height - 4), (left + text_width + 4, top)]
        draw.rectangle(text_background, fill=color)
        draw.text((left + 2, top - text_height - 2), label, fill="white", font=font)

    return image

# create the Gradio interface
iface = gr.Interface(
    fn=infer_image,
    inputs=[
        gr.Image(type="pil", label="Upload or Capture Image"),
        gr.Slider(0.0, 1.0, value=0.5, step=0.1, label="Confidence Threshold"),
        gr.Slider(0.0, 1.0, value=0.5, step=0.1, label="IoU Threshold")
    ],
    outputs=gr.Image(type="pil", label="Image with Bounding Boxes"),
    title="Fish Size Detection",
    description="Upload or capture an image to detect fish size and class using a Roboflow model. Adjust the confidence and IoU thresholds using the sliders."
)

# launch the app
iface.launch()
```

This Python script creates a Gradio app that lets users upload or capture an image and detect fish sizes using the model trained on Roboflow.
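Beyond drawing boxes, the same `predictions` list can be summarized, for example to count how many fish of each species and size class appear in an image. A minimal sketch that relies only on the `class` and `confidence` fields the app already reads; `summarize` is a hypothetical helper and `sample_result` is a hand-written stand-in for a response from `CLIENT.infer(...)`, with made-up values.

```python
from collections import Counter


def summarize(result: dict, min_confidence: float = 0.5) -> Counter:
    """Count detected fish per class, keeping predictions at or above a confidence cutoff."""
    return Counter(
        pred["class"]
        for pred in result.get("predictions", [])
        if pred["confidence"] >= min_confidence
    )


# Illustrative response shape; values are invented for this example.
sample_result = {
    "predictions": [
        {"x": 120, "y": 80, "width": 90, "height": 40,
         "class": "Skunk clownfish_big", "confidence": 0.91},
        {"x": 300, "y": 150, "width": 50, "height": 25,
         "class": "Skunk clownfish_small", "confidence": 0.74},
        {"x": 310, "y": 40, "width": 48, "height": 22,
         "class": "Skunk clownfish_small", "confidence": 0.32},
    ]
}
print(summarize(sample_result))
# prints "Counter({'Skunk clownfish_big': 1, 'Skunk clownfish_small': 1})"
```

Since the server already filters by the configured confidence threshold, the local cutoff here is only useful for applying a stricter threshold after the fact.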

The app uses the InferenceHTTPClient from the inference-sdk package to perform inference, then draws bounding boxes around the detected fish and labels them with their classes and confidence scores.
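The IoU threshold passed through InferenceConfiguration controls non-maximum suppression (NMS): when two boxes of the same class overlap by more than the threshold, only the higher-confidence one is kept. The sketch below illustrates greedy per-class NMS on predictions in the same center-based format the app reads; it is an illustration of the technique, and the hosted model's exact implementation may differ. The sample predictions are made up.

```python
def center_to_corners(pred):
    """Convert center-based x, y, width, height into (left, top, right, bottom)."""
    half_w, half_h = pred["width"] / 2, pred["height"] / 2
    return (pred["x"] - half_w, pred["y"] - half_h,
            pred["x"] + half_w, pred["y"] + half_h)


def iou(a, b):
    """Intersection over Union of two (left, top, right, bottom) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(preds, iou_threshold=0.5):
    """Greedy per-class NMS: visit boxes by descending confidence and drop any
    box that overlaps an already-kept box of the same class by more than the threshold."""
    kept = []
    for pred in sorted(preds, key=lambda p: p["confidence"], reverse=True):
        box = center_to_corners(pred)
        overlaps = any(
            k["class"] == pred["class"] and iou(box, center_to_corners(k)) > iou_threshold
            for k in kept
        )
        if not overlaps:
            kept.append(pred)
    return kept


raw = [
    {"x": 50, "y": 50, "width": 40, "height": 40, "class": "Skunk clownfish_big", "confidence": 0.9},
    {"x": 52, "y": 50, "width": 40, "height": 40, "class": "Skunk clownfish_big", "confidence": 0.8},
    {"x": 150, "y": 60, "width": 30, "height": 20, "class": "Skunk clownfish_small", "confidence": 0.7},
]
print([p["confidence"] for p in nms(raw, iou_threshold=0.5)])
# prints "[0.9, 0.7]"
```

Raising the IoU threshold toward 1.0 keeps more overlapping boxes (here, `iou_threshold=0.95` keeps all three), while lowering it suppresses duplicates more aggressively.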

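Users can adjust the confidence threshold and the Intersection over Union (IoU) threshold with the sliders to control detection sensitivity: the confidence threshold discards predictions scored below it, while the IoU threshold sets how much two boxes may overlap before the lower-scoring one is suppressed as a duplicate. The output is the original image overlaid with bounding boxes and labels, displayed directly in the Gradio interface. Running the app will show the following output.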

Conclusion

Fish size detection using computer vision improves the efficiency of data collection in fisheries, aquaculture, and marine research, enabling real-time monitoring and analysis. It supports sustainable fishing practices by helping to enforce size limits and monitor fish populations, contributing to better resource management.

Here are a few other blog posts on fish detection and computer vision that you may enjoy reading: