In this guide, you will learn to use computer vision to identify only the moving objects in a video. Here’s a hosted demo to try out.

Object detection models can be used on a live video feed, pre-recorded video, YouTube video, webcam, and so on. Let’s say you don’t want to detect all objects in a video; you just want to detect moving objects.

Ignore all non-moving objects, such as artwork

Use Cases for Motion Detection

You might want a security camera that does not pick up objects in the background, or you might want to set up alerts with a pet camera that notifies you when your pet might be getting into trouble (or doing something cute).

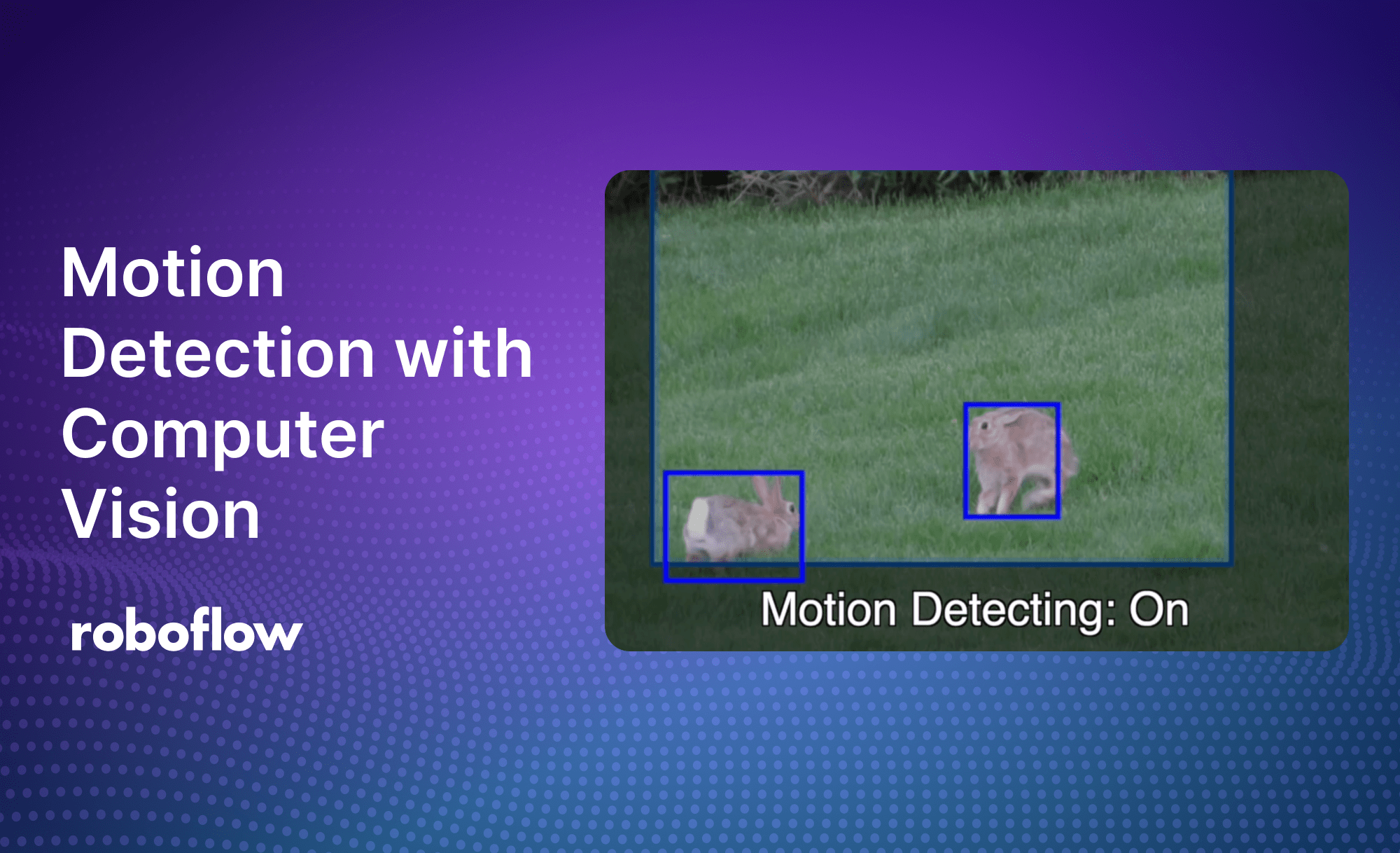

In the above example, I’m running an object detection model from Roboflow Universe and only want to run it on moving objects.

How it works

At a high level, here’s how it’s done:

- You start the inferenceJS worker just like normal, but separately run a new function, captureMotion.

- captureMotion compares the previous frame with the current frame and creates a box that contains all the differences detected over a certain threshold.

- The object detection results are then filtered down to only what’s inside that box.

- This repeats every 500 milliseconds.

You start this by adding a setInterval call before the worker starts.

    video.onplay = function() {
        // Check for motion every 500 milliseconds
        setInterval(captureMotion, 500);

        inferEngine.startWorker(model_name, model_version, publishable_key, [{ scoreThreshold: confidence_threshold }])
            .then((id) => {
                // Start inference
                detectFrame();
            });
    }

Global Variables

We’ll add a bunch of variables that can be tweaked. Generally, you’ll only want to adjust pixelDiffThreshold and scoreThreshold.

pixelDiffThreshold can be thought of as how much a pixel must differ between frames before you consider that a change has occurred at all.

scoreThreshold can be thought of as the number of changed pixels required before motion is reported.
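To make the relationship between the two thresholds concrete, here is a minimal sketch. The function name isMotion and its input format (one difference value per pixel) are assumptions made for illustration; they are not part of the demo code.

```javascript
// Hypothetical helper (not from the demo): decides whether a frame counts as motion.
// pixelDiffs holds one difference value (0-255) per pixel of the downscaled frame.
function isMotion(pixelDiffs, pixelDiffThreshold, scoreThreshold) {
    var score = 0;
    for (var i = 0; i < pixelDiffs.length; i++) {
        // A pixel only counts as changed once it differs by at least pixelDiffThreshold
        if (pixelDiffs[i] >= pixelDiffThreshold) {
            score++;
        }
    }
    // Motion is reported only when more than scoreThreshold pixels have changed
    return score > scoreThreshold;
}
```

With the default values used in this post (pixelDiffThreshold of 32 and scoreThreshold of 16), at least 17 pixels of the 64 by 48 difference image must each differ by 32 or more before motion is reported.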

    // The canvases are made and prepped for differencing
    var isReadyToDiff = false;

    // The pixel area of change
    var diffWidth = 64;
    var diffHeight = 48;

    // Change these based on your preference of motion
    var pixelDiffThreshold = 32;
    var scoreThreshold = 16;

    // The dict containing the box dimensions, width, and height to draw
    var currentMotionBox;

    // A canvas for just the difference calculation
    var diffCanvas = document.createElement('canvas');
    diffCanvas.width = diffWidth;
    diffCanvas.height = diffHeight;
    var diffContext = diffCanvas.getContext('2d', { willReadFrequently: true });

Let’s take a quick break and look at a cat video example before continuing.

Motion detection for a pet camera

Motion Detection Logic

This is captureMotion without any of the extra box-drawing code. We get an image representing the difference between frames, shrink the image down into a canvas of size diffWidth by diffHeight, and finally put that into an array for comparison.
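The canvas 'difference' composite operation can be thought of as a per-channel absolute difference between the pixels already on the canvas and the pixels being drawn over them. As a rough illustration only, the sketch below does the same thing on plain RGBA arrays. The name frameDifference is hypothetical, and the sketch assumes both frames are fully opaque and the same size.

```javascript
// Hypothetical illustration of what a 'difference' blend produces for opaque frames.
// previous and current are flat RGBA arrays (4 values per pixel, each 0-255).
function frameDifference(previous, current) {
    var out = new Uint8ClampedArray(current.length);
    for (var i = 0; i < current.length; i += 4) {
        out[i] = Math.abs(current[i] - previous[i]);             // red
        out[i + 1] = Math.abs(current[i + 1] - previous[i + 1]); // green
        out[i + 2] = Math.abs(current[i + 2] - previous[i + 2]); // blue
        out[i + 3] = 255;                                        // alpha stays opaque
    }
    return out;
}
```

Pixels that did not change between frames come out black, so any bright pixel in the result marks a place where something moved.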

    function captureMotion() {
        // Draw the current frame over the previous one in 'difference' mode
        diffContext.globalCompositeOperation = 'difference';
        diffContext.drawImage(video, 0, 0, diffWidth, diffHeight);
        var diffImageData = diffContext.getImageData(0, 0, diffWidth, diffHeight);

        if (isReadyToDiff) {
            var diff = processDiff(diffImageData);
            if (diff.motionBox) {
                // Draw rectangle
            }
            currentMotionBox = diff.motionBox;
        }
    }

Then we process the difference with processDiff to determine whether motion has occurred, and set currentMotionBox if it returns a box.

If the score rises above your scoreThreshold, motion is detected and the motion box is returned. The rest of the code just works out the box dimensions and returns them.
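The box-dimension helpers that processDiff calls, calculateCoordinates and calculateMotionBox, are not listed in this post. Here is a minimal sketch of what they might look like. The box shape used here (min and max values for x and y) is an assumption for illustration, and the real helpers in the repository may differ.

```javascript
// Assumed to match the diffWidth global used for the difference canvas
var diffWidth = 64;

// Sketch: convert a pixel index in the flattened image into x/y coordinates
function calculateCoordinates(pixelIndex) {
    return {
        x: pixelIndex % diffWidth,
        y: Math.floor(pixelIndex / diffWidth)
    };
}

// Sketch: grow the box so that it also contains the point (x, y).
// The { x: { min, max }, y: { min, max } } shape is hypothetical.
function calculateMotionBox(motionBox, x, y) {
    var box = motionBox || { x: { min: x, max: x }, y: { min: y, max: y } };
    box.x.min = Math.min(box.x.min, x);
    box.x.max = Math.max(box.x.max, x);
    box.y.min = Math.min(box.y.min, y);
    box.y.max = Math.max(box.y.max, y);
    return box;
}
```

Starting from an undefined box and feeding in every changed pixel leaves you with the smallest rectangle that contains all of the detected motion.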

    function processDiff(diffImageData) {
        var rgba = diffImageData.data;

        // Pixel adjustments are done by reference directly on diffImageData
        var score = 0;
        var motionPixels = [];
        var motionBox = undefined;

        for (var i = 0; i < rgba.length; i += 4) {
            // Weighted brightness of the difference at this pixel
            var pixelDiff = rgba[i] * 0.3 + rgba[i + 1] * 0.6 + rgba[i + 2] * 0.1;
            var normalized = Math.min(255, pixelDiff * (255 / pixelDiffThreshold));
            rgba[i] = 0;
            rgba[i + 1] = normalized;
            rgba[i + 2] = 0;

            if (pixelDiff >= pixelDiffThreshold) {
                score++;
                var coords = calculateCoordinates(i / 4);
                motionBox = calculateMotionBox(motionBox, coords.x, coords.y);
                motionPixels = calculateMotionPixels(motionPixels, coords.x, coords.y, pixelDiff);
            }
        }

        return {
            score: score,
            motionBox: score > scoreThreshold ? motionBox : undefined,
            motionPixels: motionPixels
        };
    }

Draw the Motion Detection Container

All the code is there, but we still have to do the actual filtering. That’s done at the drawing stage.

In short, if motion is detected and the prediction is inside the motion box, show that prediction.
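The drawing code relies on a helper called isCornerInside, which is not listed in this post. The sketch below is one plausible version, not the repository's implementation. It assumes both boxes are described by their top-left corner plus a width and height, and it treats a prediction as inside when at least one of its corners falls within the motion box.

```javascript
// Hypothetical sketch of isCornerInside; the parameter order follows the call in this post.
// Assumes (x, y) is the top-left corner of each box.
function isCornerInside(motionX, motionY, motionWidth, motionHeight, x, y, width, height) {
    var corners = [
        [x, y],                  // top-left
        [x + width, y],          // top-right
        [x, y + height],         // bottom-left
        [x + width, y + height]  // bottom-right
    ];
    // True when any corner of the prediction lies within the motion box
    return corners.some(function (corner) {
        return corner[0] >= motionX && corner[0] <= motionX + motionWidth &&
               corner[1] >= motionY && corner[1] <= motionY + motionHeight;
    });
}
```

One limitation of a corner test like this: a prediction that completely surrounds the motion box has no corner inside it and would be filtered out, so a full rectangle-overlap check may suit some models better.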

    function drawBoundingBoxes(predictions, ctx) {
        if (currentMotionBox != undefined) {
            // Draw motionBox

            for (var i = 0; i < predictions.length; i++) {
                // Skip low-confidence predictions and predictions outside the motion box
                if (confidence < user_confidence || !isCornerInside(motion_x, motion_y, motion_width, motion_height, x, y, width, height)) {
                    continue;
                }
                // (rest of the drawing code omitted in this excerpt)
            }
        }
    }

Here is a final example video with bunnies. They tend to freeze and then suddenly move, which shows off the motion detection well.

Conclusion

This is a bit complicated, but it is much easier to understand if you play with the code and change things to see what they do.

I encourage you to try out different models and adjust the sliders for “Pixel Difference Threshold” and “# of Changed Pixels Threshold” to see how they change the motion detection.

The GitHub repository includes instructions for how to run it on your own server with Vercel, Heroku, and Replit.