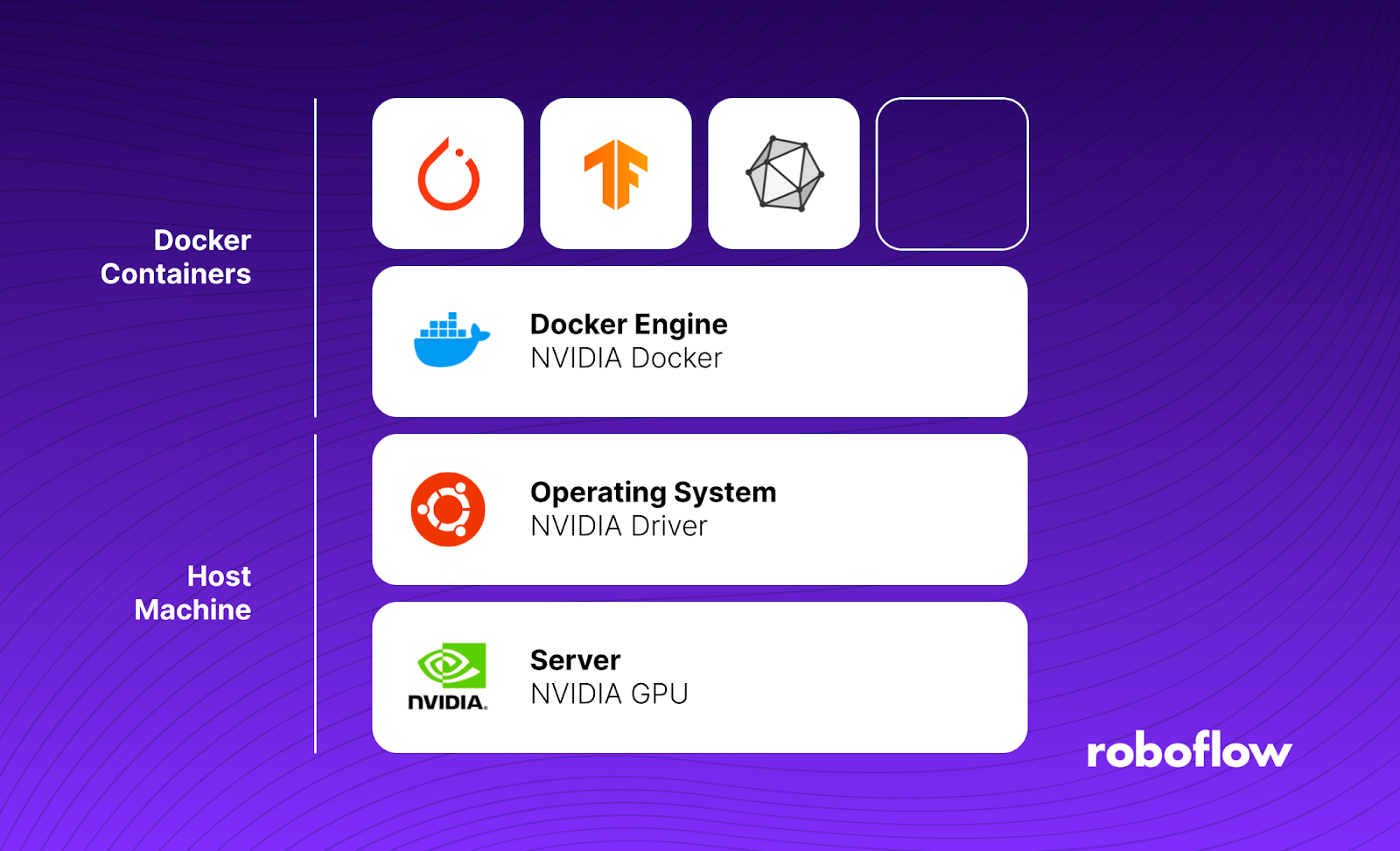

The Roboflow Inference server is a drop-in replacement for the Hosted Inference API that can be deployed on your own hardware. We have optimized the Inference Server to get maximum performance from the NVIDIA Jetson line of edge-AI devices. We have done this by tailoring the drivers, libraries, and binaries specifically to its CPU and GPU architectures.

This blog post provides a comprehensive guide to deploying OpenAI's CLIP model on the edge using a Jetson Orin powered by Jetpack 5.1.1. The process uses Roboflow's open source inference server, using this GitHub repository for seamless implementation.

What is CLIP? What Can I Do with CLIP?

CLIP is an open source vision model developed by OpenAI. CLIP allows you to generate text and image embeddings. These embeddings encode semantic information about text and images, which you can use for a wide variety of computer vision tasks.

Here are a few tasks you can complete with the CLIP model:

- Identify the similarity between two images or frames in a video;

- Identify the similarity between a text prompt and an image (i.e. identify if "train track" is representative of the contents of an image);

- Auto-label images for an image classification model;

- Build a semantic image search engine;

- Collect images that are similar to a specified text prompt for use in training a model;

- And more!

By deploying CLIP on a Jetson, you can use CLIP on the edge for your application needs.
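To make the text-to-image comparison in the list above concrete, here is a minimal sketch of auto-labeling by nearest text embedding. The three-dimensional vectors are made-up stand-ins for real CLIP embeddings (which are much longer), and the helper names `cosine_similarity` and `best_label` are illustrative, not part of Roboflow Inference.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_label(image_embedding, label_embeddings):
    """Return the label whose text embedding is most similar to the image embedding."""
    return max(
        label_embeddings,
        key=lambda label: cosine_similarity(image_embedding, label_embeddings[label]),
    )


# Toy vectors standing in for real CLIP embeddings (assumption for illustration)
image_embedding = [0.9, 0.1, 0.2]
label_embeddings = {
    "train track": [1.0, 0.0, 0.1],
    "soccer field": [0.0, 1.0, 0.3],
}

print(best_label(image_embedding, label_embeddings))  # prints "train track"
```

With real embeddings the same comparison applies: embed each candidate prompt once, embed the image, and keep the prompt with the highest similarity.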

Flash Jetson Device

Ensure your Jetson is flashed with Jetpack 5.1.1. To do so, first download the Jetson Orin Nano Developer Kit SD Card image from the JetPack SDK Page, and then write the image to your microSD card following the NVIDIA instructions.

Once you power on and boot the Jetson, you can check that Jetpack 5.1.1 is installed using this repository:

    git clone https://github.com/jetsonhacks/jetsonUtilities.git
    cd jetsonUtilities
    python jetsonInfo.py

Run the Roboflow Inference Docker Container

Roboflow Inference comes with Docker configurations for a range of devices and environments. There is a Docker container specifically for use with NVIDIA Jetsons. You can learn more about how to build, pull, and run the Roboflow Inference Docker image in our documentation.

Follow the steps below to run the Jetson Orin Docker container with Jetpack 5.1.1.

    git clone https://github.com/roboflow/inference
    cd inference
    docker build -f dockerfiles/Dockerfile.onnx.jetson.5.1.1 -t roboflow/roboflow-inference-server-trt-jetson-5.1.1 .
    sudo docker run --privileged --net=host --runtime=nvidia --mount source=roboflow,target=/tmp/cache -e NUM_WORKERS=1 roboflow/roboflow-inference-server-trt-jetson-5.1.1:latest
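Before sending requests, it can help to confirm that something is listening on the server port. The sketch below is one way to do that, assuming the container exposes the default port 9001 on localhost as described in this post. `wait_for_port` is a hypothetical helper name; it only checks that a TCP connection succeeds, not that the model has finished loading.

```python
import socket
import time


def wait_for_port(host="localhost", port=9001, timeout=30.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means some process is accepting connections
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline passes
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Calling `wait_for_port()` with no arguments polls `localhost:9001` for up to 30 seconds and returns `True` as soon as the port accepts a connection.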

How to Use CLIP on the Roboflow Inference Server

This code makes a POST request to OpenAI's CLIP model on the edge and returns the image's embedding vectors. Pass in the image URL, your Roboflow API key, and the base URL that connects to the Docker container running on Jetpack 5.1.1 (by default http://localhost:9001):

    import requests

    # Project and version identifiers from the example project
    # (not used by the CLIP request below)
    dataset_id = "soccer-players-5fuqs"
    version_id = "1"
    image_url = "https://source.roboflow.com/pwYAXv9BTpqLyFfgQoPZ/u48G0UpWfk8giSw7wrU8/original.jpg"

    # Replace ROBOFLOW_API_KEY with your Roboflow API key
    api_key = "ROBOFLOW_API_KEY"

    # Define the request payload
    infer_clip_payload = {
        # Images can be provided as URLs or as base64 encoded strings
        "image": {
            "type": "url",
            "value": image_url,
        },
    }

    # Define the inference server URL (localhost:9001, infer.roboflow.com, etc.)
    base_url = "http://localhost:9001"

    res = requests.post(
        f"{base_url}/clip/embed_image?api_key={api_key}",
        json=infer_clip_payload,
    )

    embeddings = res.json()["embeddings"]
    print(embeddings)

Compute Image Similarity with CLIP Embeddings

After creating the embedding vectors for multiple images, you can calculate the cosine similarity of the embeddings and return a similarity score:

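    import numpy as np

    # Function to calculate similarity between embeddings
    def calculate_similarity(embedding1, embedding2):
        # Define your similarity metric here
        # You can use cosine similarity, Euclidean distance, etc.
        # Flatten first so nested lists (e.g. [[...]]) are treated as plain vectors
        embedding1 = np.asarray(embedding1).ravel()
        embedding2 = np.asarray(embedding2).ravel()
        cosine_similarity = np.dot(embedding1, embedding2) / (np.linalg.norm(embedding1) * np.linalg.norm(embedding2))
        # Map cosine similarity from [-1, 1] to a 0-100 percentage
        similarity_percentage = (cosine_similarity + 1) / 2 * 100
        return similarity_percentage

    # embedding1 and embedding2 are the embeddings of two images, obtained as shown earlier
    embedding_similarity = calculate_similarity(embedding1, embedding2)

With CLIP on the edge, you can embed images and text and compute similarity scores!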
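The payload comment in the request code notes that images can also be sent as base64 encoded strings, which is useful when frames live on the Jetson rather than at a URL. The following is a minimal sketch of building such a payload. It assumes the server accepts `"type": "base64"` in the same `image` field used for URLs; `build_base64_payload` is a hypothetical helper, and the bytes below are a placeholder rather than a real image.

```python
import base64


def build_base64_payload(image_bytes):
    """Wrap raw image bytes in the same payload shape used for URL images."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    # Assumption: the server accepts "base64" as the image type
    return {"image": {"type": "base64", "value": encoded}}


# Placeholder bytes; in practice, read them from a file on disk,
# e.g. open("frame.jpg", "rb").read() (hypothetical file name)
payload = build_base64_payload(b"\xff\xd8\xff\xe0 fake jpeg bytes")

print(payload["image"]["type"])  # prints "base64"
```

The resulting dictionary can be passed as the `json` argument of the same `requests.post` call used for the URL-based request.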