When you find yourself operating inference on a imaginative and prescient mannequin in actual time, decreasing inference latency is important. For actual time use circumstances, Roboflow recommends UDP inference as a substitute of HTTP. UDP inference ensures that dropped or sluggish packets don’t block inference from persevering with, so your mannequin will run easily in actual time.

Roboflow Inference, an open supply resolution for operating inference on imaginative and prescient fashions, helps UDP inference out of the field. Utilizing Roboflow Inference with UDP provides you all the advantages of Roboflow Inference, together with:

- A regular API by means of which to run imaginative and prescient inference;

- Modular implementations of frequent architectures, so that you don’t should implement tensor parsing logic;

- A mannequin registry so you’ll be able to simply change between fashions with completely different architectures, and extra.

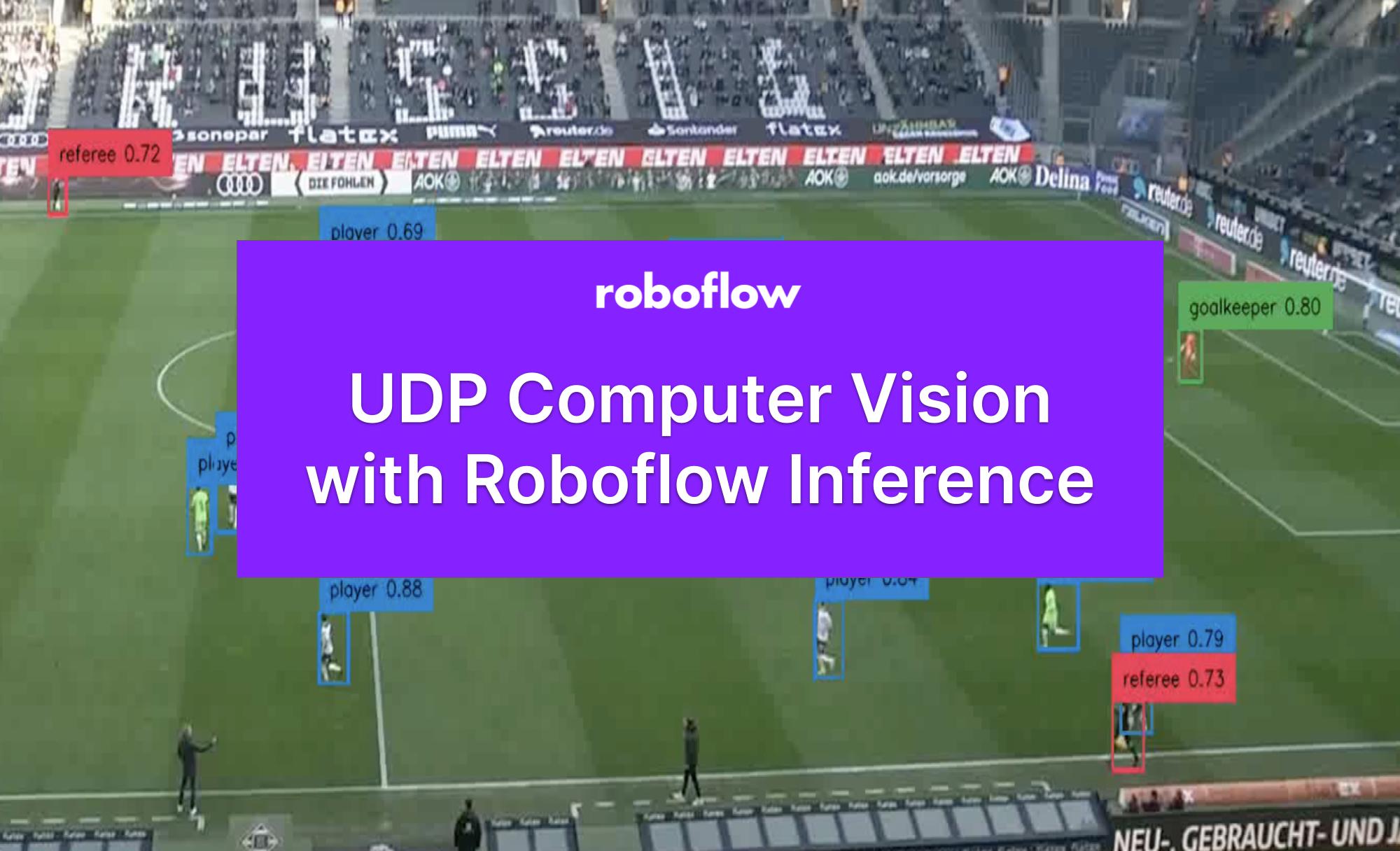

On this information, we’re going to present learn how to use the UDP Roboflow Inference container to be used in operating inference on an actual time reside stream. This container has been used to energy real-time use circumstances for well-known sports activities broadcasts world wide.

We’ll run inference on a video clip of a soccer recreation:

With out additional ado, let’s get began!

💡

You’ll need a tool with an NVIDIA CUDA-enabled GPU to run inference over UDP with Roboflow Inference.

Easy methods to Use Inference with UDP

UDP with Roboflow Inference is supported utilizing the Inference UDP Docker container. This container units up a server that accepts a digicam stream and sends predictions to a shopper.

To make use of Inference with UDP, that you must arrange two tasks:

- A Docker container operating Roboflow Inference’s UDP server, and;

- A shopper that may obtain predictions and deal with post-processing logic (i.e. save predictions, set off an occasion).

Under, we are going to arrange Inference to do real-time inference with a webcam. For this instance, we’ll use a soccer participant detection mannequin hosted on Roboflow. You need to use any mannequin you need.

To get arrange, you have to:

- A tool with entry to a CUDA-enabled GPU for this challenge, and;

- A steady web connection.

An web connection isn’t required after you arrange the server.

Step #1: Pull the Docker Picture

First, we have to pull the Roboflow Inference UDP Docker picture. If you happen to don’t have already got Docker put in on the machine on which you need to run inference, comply with the official Docker set up directions to get arrange. As soon as Docker is put in in your machine, run the next command to obtain the Inference UDP container:

docker pull roboflow/roboflow-inference-server-udp-gpuThis command will take a couple of minutes to run relying on the energy of your web connection.

Step #2: Configure a Receiving Server

The Inference server will run inference on a webcam stream and return predictions to a receiving server. Earlier than we will arrange our Inference server, we’d like a receiving server able to deal with requests.

We now have written an instance receiving server to be used with UDP streams. To retrieve the server code, run the next command:

git clone https://github.com/roboflow/inferenceThe UDP server is within the examples/inference-client/udp.py file.

To begin the receiving server, run the next command:

python3 udp.py --port=5000Once you begin the receiving server, it is best to see a message like this:

UDP server up and listening on http://localhost:12345You’ll be able to replace the udp.py script as obligatory together with your prediction post-processing logic. For instance, you’ll be able to log predictions to a file, set off an occasion (i.e. a webhook) when a prediction is discovered, rely predictions in a area, and extra.

Step #3: Configure and Run the Inference Server

Subsequent, we have to configure the UDP server to make use of a mannequin. For this information, we are going to use a rock paper scissors mannequin hosted on Roboflow. You need to use any mannequin supported by Roboflow Inference over UDP. To study extra about supported fashions, check with the Inference README.

We will write a Docker command to run Inference:

# use a stream

docker run --gpus=all --net=host -e STREAM_ID=0 -e MODEL_ID=MODEL_ID -e API_KEY=API_KEY roboflow/roboflow-inference-server-udp-gpu:newest # run inference on a video

docker run --gpus=all --net=host -e STREAM_ID=video.mov -e MODEL_ID=MODEL_ID -e API_KEY=API_KEY roboflow/roboflow-inference-server-udp-gpu:newestWe’ll want the next items of knowledge:

- A

STREAM_ID, which is the ID for the digicam you’re utilizing. This can be Zero in case your machine solely has one digicam arrange. In case your machine has a couple of digicam arrange and also you don’t need to use the default digicam (0), it’s possible you’ll want to extend this quantity till you discover the proper digicam. You can even present a video file. For this instance, we’ll use a video. - Your Roboflow API key. Discover ways to retrieve your Roboflow API key.

- Your mannequin ID and model quantity. Discover ways to retrieve your mannequin ID and model quantity. The ultimate worth ought to appear to be

<model_id>/<model>. - A

SERVER_ID, which is the deal with of the server that can obtain inference knowledge. In case you are utilizing the server we arrange within the final step, the server can behttp://localhost:5000.

Substitute out your STEAM_ID, MODEL_ID, SERVER_ID, and API_KEY as related within the Docker command above.

Be certain your UDP receiving server from Step #2 is about up. When you find yourself prepared, run the Docker command you’ve written to begin the server. It’s best to see a message like seem within the console.

In case you are utilizing a mannequin hosted on Roboflow, it might take just a few moments for the server to begin for the primary time. It’s because the mannequin weights must be downloaded to be used in your machine.

Right here is an instance of the output from the inference server (left) and the shopper (proper:

With this output, you are able to do no matter you need. In real-time situations, you possibly can use this output to set off occasions. You can additionally post-process the video for later use, akin to in highlighting gamers when exhibiting an motion second in a sports activities broadcast. For the instance above, we wrote a customized script to parse these predictions right into a video. The outcomes are under:

You will discover the script that plots predictions from the server within the challenge GitHub repository.

We don’t suggest plotting predictions coming over UDP in actual time until latency is important, as there may be plenty of work wanted to synchronize video frames so predictions are displayed on the proper body. Therefore, our visible instance above is post-processing a video.

Our UDP inference system is working efficiently!

Conclusion

Sending inference requests to a server over UDP lets you run inference and retrieve outcomes way more effectively than over HTTP. On this information, we demonstrated learn how to arrange Roboflow Inference to run inference on fashions over UDP, and learn how to obtain predictions from the UDP server to be used in software logic.

Now you’ve all the sources that you must run inference with UDP and Inference!