Controlling computers by looking in different directions is one of many technologies being developed to provide alternative ways of interacting with a computer. The relevant field of research is gaze detection, which aims to estimate the point on the screen at which someone is looking, or the direction in which someone is looking.

Gaze detection has many applications. For example, you can use gaze detection to provide a way for people to use a computer without a keyboard or mouse. With help from other vision technologies, you could verify the integrity of an exam proctored online by checking for signs that someone may be referring to external material. You could build immersive training experiences for operating machinery. These are three of many possibilities.

In this guide, we are going to show how to use Roboflow Inference to detect gazes. By the end of this guide, we will be able to retrieve the direction in which someone is looking. We will plot the results on an image, with an arrow indicating the direction of a gaze and a dot marking the point at which someone is looking.

To build our system, we will:

- Install Roboflow Inference, through which we will query a gaze detection model. All inference happens on-device.

- Load dependencies and set up our project.

- Use Inference to detect the direction in which a person is looking and the point at which they are looking.

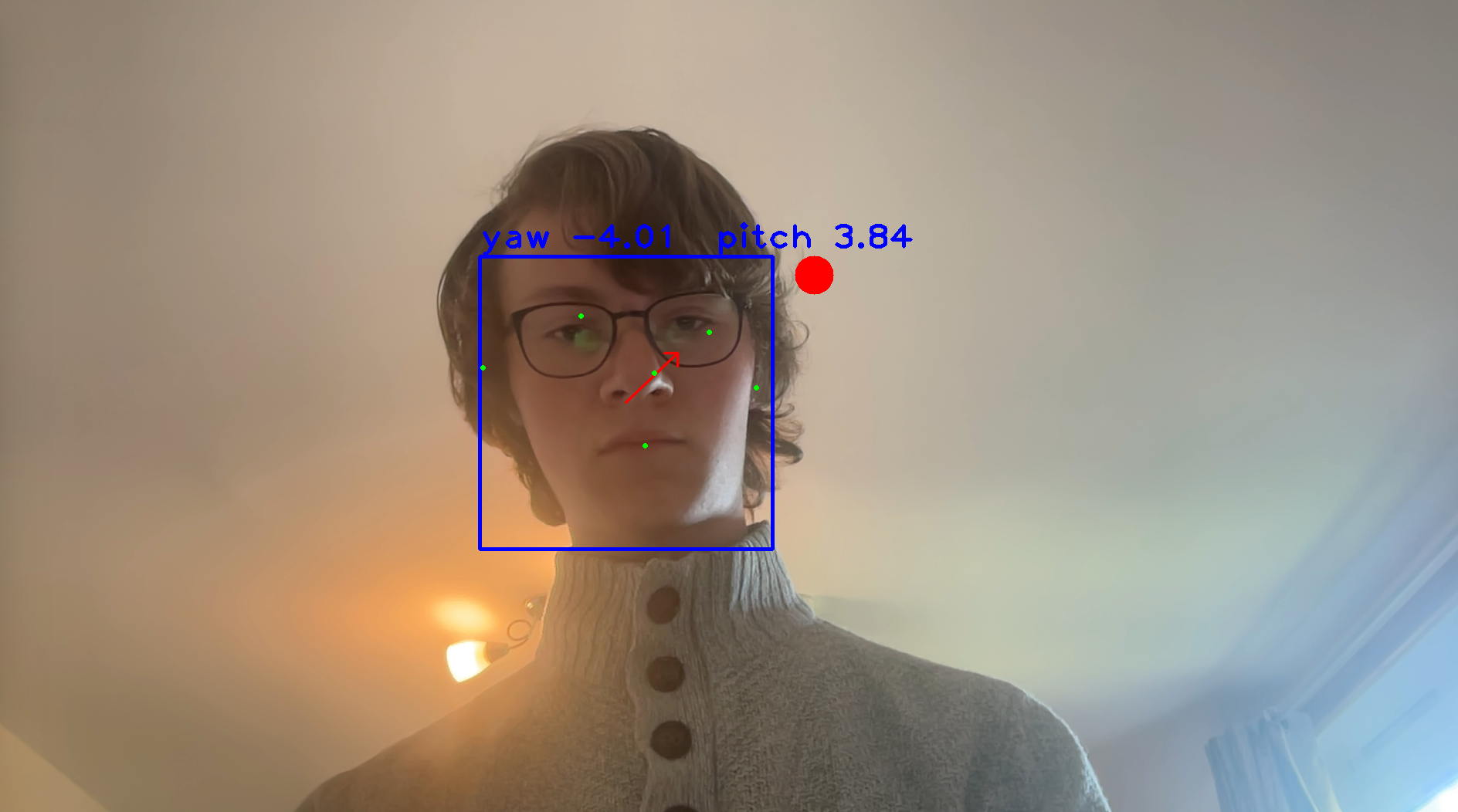

Here are the final results:

Eye Tracking and Gaze Detection Use Cases

Eye tracking and gaze detection have a wide range of use cases. A popular one, as mentioned above, is assistive technology: eye tracking enables someone to interact with a computer without a keyboard or mouse.

Eye tracking and gaze detection can also be used to monitor for hazards in heavy goods vehicle operation. For example, gaze detection could be used as part of a system that checks whether truck or train drivers are using mobile phones while operating a vehicle.

Vision-based eye tracking lowers the cost of running an eye tracking system compared to purpose-built glasses, where the cost of acquiring equipment may be significant given the specialist nature of the devices.

There are further applications in research and medicine, such as monitoring reactions to stimuli.

Step #1: Install Roboflow Inference and Dependencies

Roboflow Inference is an open source tool you can use to deploy computer vision models. With Inference, you can retrieve predictions from vision models using a web request, without having to write custom code to load a model, run inference, and retrieve predictions. Inference includes a gaze detection model, L2CS-Net, out of the box.

You can use Inference without a network connection once you have set up your server and downloaded the weights for the model you are using. All inference runs on-device.

To use Inference, you will need a free Roboflow account.

For this guide, we will use the Docker version of Inference. This lets us run a server that we can query to retrieve predictions. If you do not have Docker installed on your system, follow the official Docker installation instructions.

The Inference installation process varies slightly depending on the device you are running on, because there are several versions of Inference, each optimized for a different device type. Go to the Roboflow Inference installation instructions and look for the "Pull" command for your system.

For this guide, we will use the GPU version of Inference:

```bash
docker pull roboflow/roboflow-inference-server-gpu
```

When you run this command, Docker downloads the Inference server image to your machine. This may take a few minutes depending on the speed of your network connection. Once the download has finished, start a container from the image with port 9001 published (for example, `docker run -p 9001:9001 --gpus all roboflow/roboflow-inference-server-gpu`; the `--gpus all` flag requires the NVIDIA Container Toolkit). A server will then be available at http://localhost:9001, through which you can route inference requests.

You will also need to install OpenCV Python, which we will use to read frames from a webcam, and `requests`, which we will use to call the Inference server. You can install both using the following command:

```bash
pip install opencv-python requests
```

Step #2: Load Dependencies and Configure Project

Now that we have Inference installed, we can start writing a script that retrieves video and calculates the gaze of the person featured in it.

Create a new Python file and paste in the following code:

```python
import base64
import os

import cv2
import numpy as np
import requests

IMG_PATH = "image.jpg"
API_KEY = os.environ["API_KEY"]
DISTANCE_TO_OBJECT = 1000  # mm
HEIGHT_OF_HUMAN_FACE = 250  # mm
GAZE_DETECTION_URL = (
    "http://127.0.0.1:9001/gaze/gaze_detection?api_key=" + API_KEY
)
```

In this code, we import all of the dependencies we will be using. Then, we set a few global variables that we will use throughout the project.

First, we read our Roboflow API key from the `API_KEY` environment variable; we will use it to authenticate with our Inference server. Learn how to retrieve your Roboflow API key. Next, we estimate the distance between the person in the frame and the camera, measured in millimeters, as well as the average height of a human face. We need these values to convert the gaze angles returned by the model into a point on the screen.
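To see how these two constants are used, here is a minimal sketch of the scale conversion. It assumes every face is roughly `HEIGHT_OF_HUMAN_FACE` millimeters tall, so dividing that by the face height in pixels gives an approximate number of millimeters per pixel. The helper names `mm_per_pixel` and `mm_to_pixels` are hypothetical and exist only for illustration.

```python
def mm_per_pixel(face_height_px: float, face_height_mm: float = 250.0) -> float:
    """Approximate real-world millimeters represented by one pixel.

    Assumes every face is roughly face_height_mm tall, so the ratio of that
    height to the detected face height in pixels gives a scale factor.
    """
    if face_height_px <= 0:
        raise ValueError("face height in pixels must be positive")
    return face_height_mm / face_height_px


def mm_to_pixels(length_mm: float, face_height_px: float,
                 face_height_mm: float = 250.0) -> float:
    """Convert a length in millimeters to pixels using the face-based scale."""
    return length_mm / mm_per_pixel(face_height_px, face_height_mm)


# A face detected 200 px tall gives 250 / 200 = 1.25 mm per pixel,
# so a 100 mm offset corresponds to 80 pixels.
print(mm_per_pixel(200))       # 1.25
print(mm_to_pixels(100, 200))  # 80.0
```

Because the face height is an average, the scale is only a rough estimate; a closer face appears taller in pixels and therefore gets a smaller millimeters-per-pixel value.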

Finally, we set the URL of the Inference server endpoint to which we will send requests.

Step #3: Detect Gaze with Roboflow Inference

With all of the configuration done, we can write the logic to detect gazes.

Add the following code to the Python file you are working in:

```python
def detect_gazes(frame: np.ndarray):
    # Encode the frame as JPEG, then base64, so it can be sent as JSON
    img_encode = cv2.imencode(".jpg", frame)[1]
    img_base64 = base64.b64encode(img_encode)
    resp = requests.post(
        GAZE_DETECTION_URL,
        json={
            "api_key": API_KEY,
            "image": {"type": "base64", "value": img_base64.decode("utf-8")},
        },
    )
    resp.raise_for_status()
    # One result is returned per image; we sent a single image
    gazes = resp.json()[0]["predictions"]
    return gazes


def draw_gaze(img: np.ndarray, gaze: dict):
    # draw face bounding box
    face = gaze["face"]
    x_min = int(face["x"] - face["width"] / 2)
    x_max = int(face["x"] + face["width"] / 2)
    y_min = int(face["y"] - face["height"] / 2)
    y_max = int(face["y"] + face["height"] / 2)
    cv2.rectangle(img, (x_min, y_min), (x_max, y_max), (255, 0, 0), 3)

    # draw gaze arrow
    _, imgW = img.shape[:2]
    arrow_length = imgW / 2
    dx = -arrow_length * np.sin(gaze["yaw"]) * np.cos(gaze["pitch"])
    dy = -arrow_length * np.sin(gaze["pitch"])
    cv2.arrowedLine(
        img,
        (int(face["x"]), int(face["y"])),
        (int(face["x"] + dx), int(face["y"] + dy)),
        (0, 0, 255),
        2,
        cv2.LINE_AA,
        tipLength=0.18,
    )

    # draw keypoints (cv2.circle takes center, radius, color, thickness)
    for keypoint in face["landmarks"]:
        color, thickness, radius = (0, 255, 0), 2, 2
        x, y = int(keypoint["x"]), int(keypoint["y"])
        cv2.circle(img, (x, y), radius, color, thickness)

    # draw label with yaw and pitch converted from radians to degrees
    label = "yaw {:.2f} pitch {:.2f}".format(
        gaze["yaw"] / np.pi * 180, gaze["pitch"] / np.pi * 180
    )
    cv2.putText(
        img, label, (x_min, y_min - 10), cv2.FONT_HERSHEY_PLAIN, 3, (255, 0, 0), 3
    )

    return img


if __name__ == "__main__":
    cap = cv2.VideoCapture(0)

    while True:
        ret, frame = cap.read()
        if not ret:
            break  # no frame available from the webcam

        gazes = detect_gazes(frame)

        if len(gazes) == 0:
            continue

        # draw face & gaze for the first detected person
        gaze = gazes[0]
        draw_gaze(frame, gaze)

        image_height, image_width = frame.shape[:2]

        # millimeters represented by one pixel, based on the assumed face height
        length_per_pixel = HEIGHT_OF_HUMAN_FACE / gaze["face"]["height"]

        # project the gaze onto a plane DISTANCE_TO_OBJECT mm away, in pixels
        dx = -DISTANCE_TO_OBJECT * np.tan(gaze["yaw"]) / length_per_pixel
        # 100000000 is used to denote out of bounds
        dx = dx if not np.isnan(dx) else 100000000
        dy = (
            -DISTANCE_TO_OBJECT
            * np.tan(gaze["pitch"])
            / np.cos(gaze["yaw"])
            / length_per_pixel
        )
        dy = dy if not np.isnan(dy) else 100000000

        gaze_point = int(image_width / 2 + dx), int(image_height / 2 + dy)
        cv2.circle(frame, gaze_point, 25, (0, 0, 255), -1)

        cv2.imshow("gaze", frame)

        if cv2.waitKey(1) & 0xFF == ord("q"):
            break

    cap.release()
    cv2.destroyAllWindows()
```

In this code, we first define `detect_gazes()`, which encodes a frame as a base64 JPEG and sends it to the gaze detection endpoint on our Inference server, and `draw_gaze()`, which draws the face box, gaze arrow, landmarks, and angle label onto an image. This lets us visualize the direction in which our program estimates someone is looking. We then create a loop that runs until we press `q` on the keyboard while viewing our video window.

This loop opens a video window showing our webcam feed. For each frame, we call the gaze detection model on our Roboflow Inference server. We take the gaze of the first person found, then draw a box around their face and an arrow showing the direction in which they are looking. The code assumes there is one person in the frame.
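The loop simply takes `gazes[0]`. If more than one person may appear in the frame, one option is to follow whoever is closest to the camera, approximated here by the largest face box. This is a sketch that assumes each prediction carries the `face` dictionary with `width` and `height` keys used in `draw_gaze`; `pick_primary_gaze` is a hypothetical helper, not part of Inference.

```python
from typing import Optional


def pick_primary_gaze(gazes: list) -> Optional[dict]:
    """Return the prediction with the largest face box, or None if empty.

    A larger box usually means the face is closer to the camera, which is a
    reasonable heuristic for "the person using the screen".
    """
    if not gazes:
        return None
    return max(gazes, key=lambda g: g["face"]["width"] * g["face"]["height"])


# Two hypothetical predictions with only the fields this helper reads.
predictions = [
    {"face": {"width": 80, "height": 90}, "yaw": 0.1, "pitch": 0.0},
    {"face": {"width": 150, "height": 170}, "yaw": -0.2, "pitch": 0.05},
]
print(pick_primary_gaze(predictions)["yaw"])  # -0.2
```

In the loop, `gaze = pick_primary_gaze(gazes)` could replace `gaze = gazes[0]`, keeping the existing check for an empty list.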

The model we are using describes a gaze in terms of "pitch" (the vertical angle) and "yaw" (the horizontal angle), both in radians; the label converts them to degrees. Our code converts these angles into a direction that we can draw as an arrow.
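The arrow in `draw_gaze` comes from treating yaw and pitch as spherical angles and projecting the resulting direction onto the image plane. The sketch below isolates that conversion as a pure function so it can be checked without a camera. `gaze_direction_2d` is a hypothetical name; the formulas mirror those used for `dx` and `dy` in `draw_gaze` (angles in radians, image y axis pointing down).

```python
import math


def gaze_direction_2d(yaw: float, pitch: float, length: float = 1.0):
    """Project a gaze (yaw, pitch in radians) onto the image plane.

    Returns (dx, dy) in pixels for an arrow of the given length, using the
    same convention as draw_gaze:
        dx = -length * sin(yaw) * cos(pitch)
        dy = -length * sin(pitch)
    """
    dx = -length * math.sin(yaw) * math.cos(pitch)
    dy = -length * math.sin(pitch)
    return dx, dy


# Looking straight at the camera gives a zero-length arrow;
# a 90-degree yaw gives a full-length horizontal arrow.
print(gaze_direction_2d(0.0, 0.0))
print(gaze_direction_2d(math.pi / 2, 0.0, length=100))
```

Because the arrow is the 2D shadow of a 3D direction, it shortens as the person looks closer to straight at the camera.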

Finally, we calculate the point on the screen at which the person in view is looking, and draw it as a red dot. We display the results in the video window.
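The gaze point can be found by intersecting the gaze ray with a plane `DISTANCE_TO_OBJECT` millimeters away and converting the offset to pixels with the face-based scale. For a ray whose direction is proportional to (−sin(yaw)·cos(pitch), −sin(pitch), cos(yaw)·cos(pitch)), the intersection lies −D·tan(yaw) to the side and −D·tan(pitch)/cos(yaw) above or below the center. The function below is a minimal sketch of that geometry under the same assumptions as the script (camera at the center of the image, fixed distance, fixed face height); `estimate_gaze_point` is a hypothetical helper.

```python
import math


def estimate_gaze_point(yaw, pitch, face_height_px, image_width, image_height,
                        distance_mm=1000.0, face_height_mm=250.0):
    """Estimate the pixel a person is looking at (yaw/pitch in radians).

    Assumes the camera sits at the center of the image plane, the person is
    distance_mm away, and their face is face_height_mm tall.
    """
    mm_per_pixel = face_height_mm / face_height_px
    # Horizontal and vertical offsets on the plane, in millimeters.
    dx_mm = -distance_mm * math.tan(yaw)
    dy_mm = -distance_mm * math.tan(pitch) / math.cos(yaw)
    # Convert to pixels and offset from the image center.
    x = image_width / 2 + dx_mm / mm_per_pixel
    y = image_height / 2 + dy_mm / mm_per_pixel
    return int(x), int(y)


print(estimate_gaze_point(0.0, 0.0, face_height_px=200,
                          image_width=640, image_height=480))  # (320, 240)
```

Large angles can push the point well outside the frame, so a caller may want to clip the result or treat it as "off screen".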

Here is an example of the gaze detection model in action:

Here, we can see:

- The pitch and yaw associated with our gaze.

- An arrow showing our gaze direction.

- The point at which the person is looking, denoted by a red dot.

The green dots are facial keypoints returned by the gaze detection model. They are not needed for this project, but they are available if you need the landmarks for additional calculations.
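If you do want the landmarks, each entry in `face["landmarks"]` has `x` and `y` pixel coordinates, as used in `draw_gaze`. A minimal sketch that collects them and computes their centroid (for example, as an alternative anchor point for the arrow) might look like this. The helper names are hypothetical, and this guide does not say which landmark corresponds to which facial feature, so the sketch treats them as an unordered set of points.

```python
def landmark_points(gaze: dict) -> list:
    """Return the face landmarks as a list of (x, y) float tuples."""
    return [(float(kp["x"]), float(kp["y"])) for kp in gaze["face"]["landmarks"]]


def landmark_centroid(gaze: dict):
    """Average position of all landmarks, or None if there are none."""
    points = landmark_points(gaze)
    if not points:
        return None
    xs, ys = zip(*points)
    return sum(xs) / len(xs), sum(ys) / len(ys)


# A hypothetical prediction containing only the fields read above.
example = {"face": {"landmarks": [{"x": 10, "y": 20}, {"x": 30, "y": 40}]}}
print(landmark_centroid(example))  # (20.0, 30.0)
```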

Bonus: Using Gaze Points

The gaze point is the estimated point at which someone is looking. It is the red dot that moves as we look around in the previous demo.

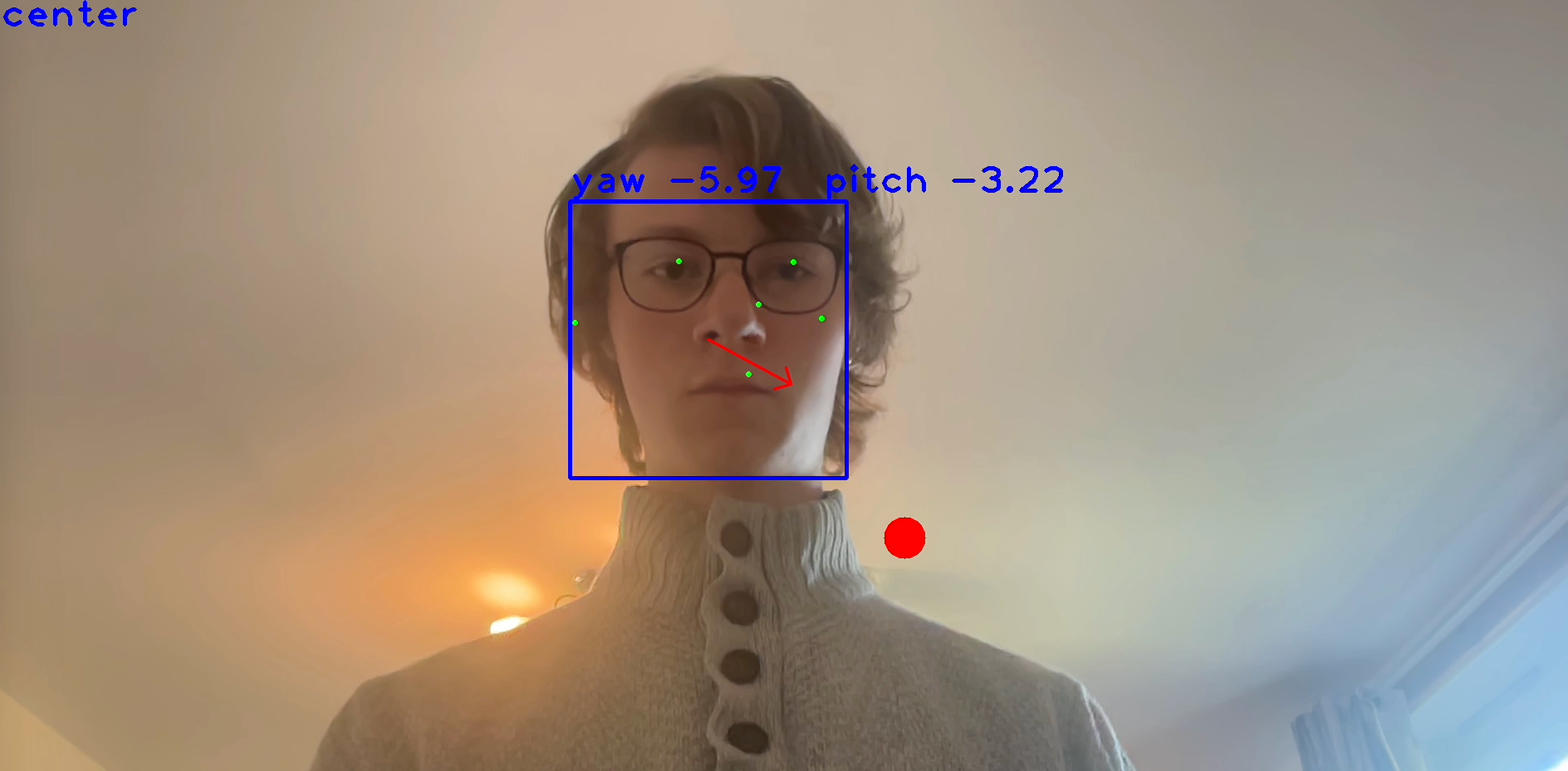

Now that we can detect the point at which someone is looking, we can write logic that does something when we look at a particular section of the screen. For example, the following code works out which region of the frame we are looking at (center, top left, top right, bottom left, or bottom right) and writes the name of that region in the video frame. Add the following code inside the loop we defined earlier, above the `cv2.imshow()` call:

```python
quadrants = [
    ("center", (int(image_width / 4), int(image_height / 4), int(image_width / 4 * 3), int(image_height / 4 * 3))),
    ("top_left", (0, 0, int(image_width / 2), int(image_height / 2))),
    ("top_right", (int(image_width / 2), 0, image_width, int(image_height / 2))),
    ("bottom_left", (0, int(image_height / 2), int(image_width / 2), image_height)),
    ("bottom_right", (int(image_width / 2), int(image_height / 2), image_width, image_height)),
]

for quadrant, (x_min, y_min, x_max, y_max) in quadrants:
    if x_min <= gaze_point[0] <= x_max and y_min <= gaze_point[1] <= y_max:
        # the gaze point is in this region; write its name in the top-left corner
        cv2.putText(frame, quadrant, (10, 50), cv2.FONT_HERSHEY_PLAIN, 3, (255, 0, 0), 3)
        break
```

Let's run our code:

Our code successfully calculates the general direction in which we are looking and displays it in the top-left corner of the frame.
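The region check can also be pulled out into a pure function, which makes it easy to test without a webcam. This sketch uses the same five regions, checks the center first so it takes priority over the overlapping quadrants, and returns `None` for points outside the frame (such as the out-of-bounds value `100000000`). `classify_gaze_region` is a hypothetical name.

```python
from typing import Optional, Tuple


def classify_gaze_region(point: Tuple[int, int], image_width: int,
                         image_height: int) -> Optional[str]:
    """Return the name of the screen region containing point, if any."""
    w, h = image_width, image_height
    regions = [
        # The center is listed first so it wins over the overlapping quadrants.
        ("center", (w // 4, h // 4, w * 3 // 4, h * 3 // 4)),
        ("top_left", (0, 0, w // 2, h // 2)),
        ("top_right", (w // 2, 0, w, h // 2)),
        ("bottom_left", (0, h // 2, w // 2, h)),
        ("bottom_right", (w // 2, h // 2, w, h)),
    ]
    x, y = point
    for name, (x_min, y_min, x_max, y_max) in regions:
        if x_min <= x <= x_max and y_min <= y <= y_max:
            return name
    return None


print(classify_gaze_region((320, 240), 640, 480))  # center
print(classify_gaze_region((10, 10), 640, 480))    # top_left
```

Inside the loop, the returned name (when not `None`) could be passed to `cv2.putText` exactly as in the loop-based version.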

We can connect the point or region at which someone is looking to application logic. For example, looking left could open a menu in an interactive application, and looking at a certain point on the screen could select a button.
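One common way to turn a gaze point into a button press is dwell-time selection: the action fires only after the gaze has stayed inside a target for several consecutive frames, so a passing glance does not count. The class below is a minimal sketch of that idea; `DwellButton`, its frame-count threshold, and the rectangle format are assumptions for illustration, not part of Roboflow Inference.

```python
from dataclasses import dataclass, field
from typing import Tuple


@dataclass
class DwellButton:
    """A rectangular target that fires after the gaze dwells on it."""
    name: str
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
    frames_required: int = 15       # consecutive frames needed to trigger
    _count: int = field(default=0, init=False, repr=False)

    def contains(self, point: Tuple[int, int]) -> bool:
        x_min, y_min, x_max, y_max = self.box
        return x_min <= point[0] <= x_max and y_min <= point[1] <= y_max

    def update(self, point: Tuple[int, int]) -> bool:
        """Feed one gaze point per frame; return True on the frame it fires."""
        if self.contains(point):
            self._count += 1
            # Fire exactly once per dwell, when the threshold is reached.
            return self._count == self.frames_required
        # Looking away resets the dwell counter.
        self._count = 0
        return False


button = DwellButton("menu", (0, 0, 100, 100), frames_required=3)
fired = [button.update(p) for p in [(50, 50), (50, 50), (50, 50), (50, 50)]]
print(fired)  # [False, False, True, False]
```

In the main loop, you would call `update(gaze_point)` once per frame and run the action when it returns `True`. Because the threshold is counted in frames, the right value depends on how quickly your loop runs.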

Over the years, gaze detection technology has been used to enable eye-controlled keyboards and user interfaces. This is useful both for increasing access to technology and for augmented reality use cases in which the point you are looking at serves as an input to an application.

Conclusion

Gaze detection is a field of vision research that aims to estimate the direction in which someone is looking and the point on a screen at which they are looking. A primary use case for this technology is accessibility: with a gaze detection model, you can allow someone to control a screen without using a keyboard or mouse.

In this guide, we showed how to use Roboflow Inference to run a gaze detection model on your computer. We showed how to install Inference, load the dependencies required for our project, and use the model with a video stream. Now you have all the resources you need to start calculating gazes.