Introduction

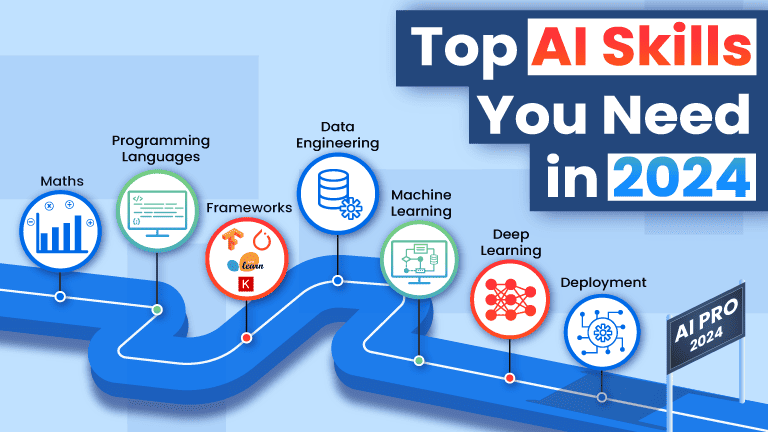

Artificial Intelligence is undoubtedly one of the most prominent recent developments in the technological world. With its growth and applications across a wide range of industries, from healthcare to virtual reality in gaming, it has also brought a huge surge in demand for AI professionals. But the field of Artificial Intelligence isn’t a walk in the park. Don’t worry, though.

This read covers the top 11 AI skills needed for a successful career in Artificial Intelligence in 2024. So let us get to it!

AI Skills Needed for a Successful Career in Artificial Intelligence

The global Artificial Intelligence market was valued at $6.3 billion back in 2014. Fast forward a decade, and it is expected to hit a staggering $305.9 billion in 2024. This can be attributed to many factors, such as breakthroughs in deep learning and algorithms; combined with massive computing power, resources, and data storage, AI isn’t stopping! With over 80% of businesses, from SMEs to MNCs, adopting Artificial Intelligence into their systems, it is crucial for anyone seeking to get into the field to know the essential artificial intelligence skills. Let us kick things off with hard skills!

Hard Skills

Mastering any field requires a set of hard and soft skills, regardless of the specialization. The field of AI is no different. This section covers the hard skills needed for AI mastery, so let’s get to it without wasting any more time!

Mathematics

One of the first hard skills one needs to master is mathematics. Why is mathematics an AI skill one has to master? What does math have to do with artificial intelligence?

AI systems are primarily built to automate processes and to better understand and assist humans. AI systems comprise models, tools, frameworks, and logic, all of which rest on mathematical topics. Concepts like linear algebra, statistics, and differential calculus are the main topics to kickstart one’s AI career. Let us explore them one by one.

Linear Algebra

Linear algebra is used to solve data problems and perform computations in machine learning models. It is one of the most important math concepts one needs to master. Most models and datasets are represented as matrices. Linear algebra is used for data preprocessing, transformation, and evaluation. Let us look at some of the main areas of use.

Graphical representation of linear algebra

Data Representation

Data forms a crucial first step in training models. But before that, the data needs to be converted into arrays so it can be fed into the models. Computations are performed on these arrays, which return outputs as tensors or matrices. Likewise, operations such as scaling, rotation, or projection can be expressed as matrices.

Matrix for certain regions of a greyscale image

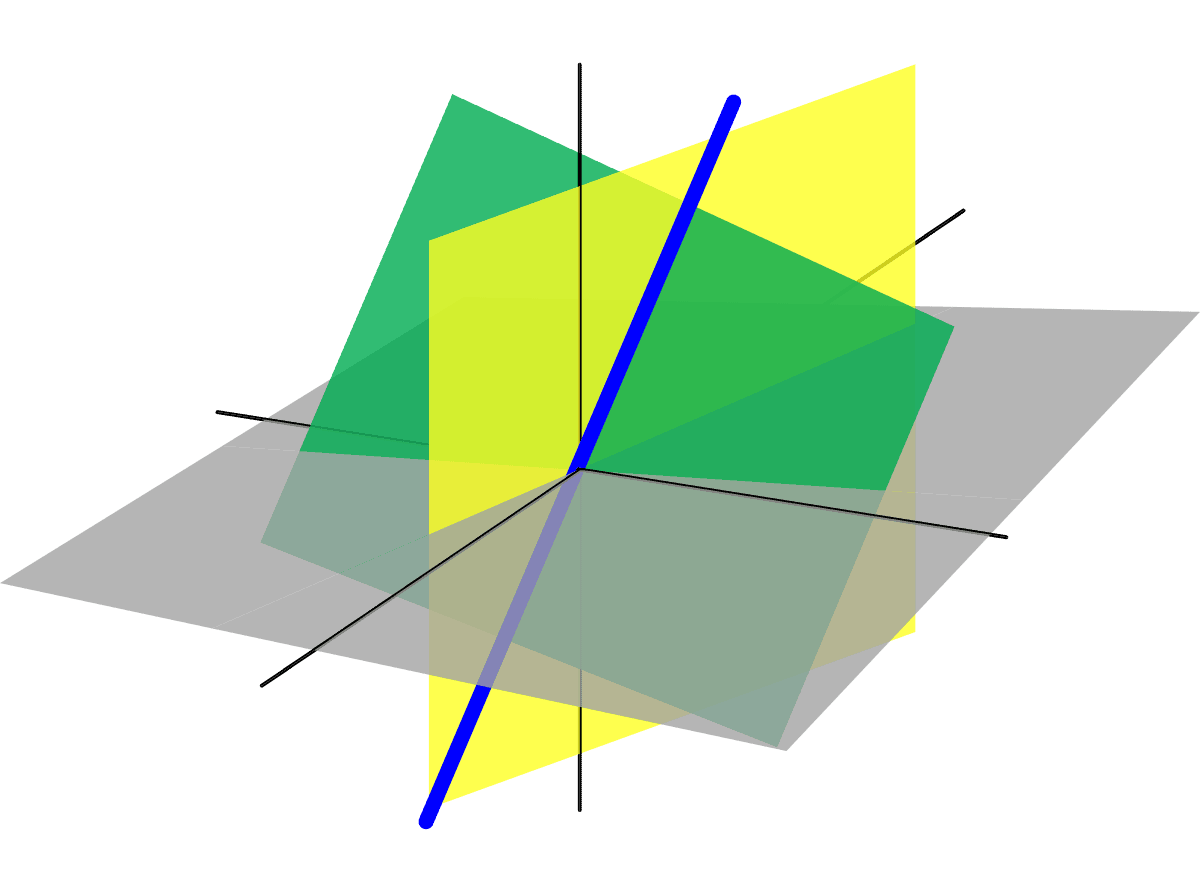

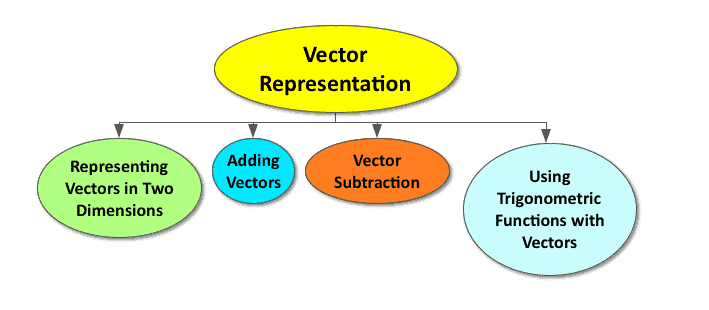
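As a minimal sketch of the idea above, the snippet below stores a tiny greyscale image as a matrix and expresses a rotation as a matrix too. The pixel values, the `matvec` helper, and the 90-degree angle are all illustrative assumptions, and plain Python lists stand in for the array types a real library would provide.

```python
import math

# A hypothetical 3x3 greyscale image: each entry is a pixel intensity (0-255).
image = [
    [0, 128, 255],
    [64, 192, 32],
    [255, 0, 128],
]

# Scale every pixel into the range [0, 1] before feeding it to a model.
normalized = [[pixel / 255 for pixel in row] for row in image]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


# A 2D rotation by 90 degrees, written as a matrix.
theta = math.pi / 2
rotation = [
    [math.cos(theta), -math.sin(theta)],
    [math.sin(theta), math.cos(theta)],
]

# Rotating the unit vector along x gives (approximately) the unit vector along y.
rotated = matvec(rotation, [1.0, 0.0])
```

In practice a library such as NumPy would replace the hand-written helper, but the underlying representation is the same: data and transformations are both matrices.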

Vector Embedding

A vector is used to organize data and has both magnitude and direction. Vector embedding relies on machine learning: a specifically engineered model is trained to convert different types of images or text into numerical representations as vectors or matrices. Using vector embeddings can greatly improve data analysis and help gain insights.

Vector Representation

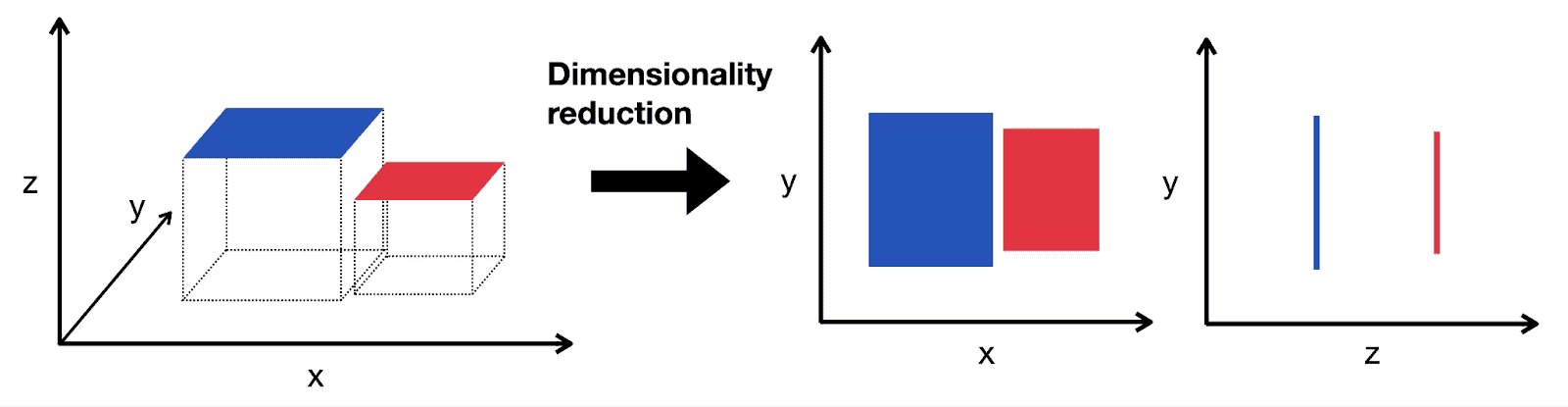
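To make the notion of comparing embeddings concrete, here is a small sketch that measures how close two vectors are using cosine similarity. The three-dimensional vectors below are made-up values for illustration only; real embeddings are produced by a trained model and usually have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, hand-written for this example.
embeddings = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "car": [0.0, 0.1, 0.9],
}

sim_cat_kitten = cosine_similarity(embeddings["cat"], embeddings["kitten"])
sim_cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])

# Related items point in similar directions, so their similarity is higher.
print(sim_cat_kitten > sim_cat_car)
```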

Dimensionality Reduction

This technique is used when we want to reduce the number of features in a dataset while retaining as much information as possible. With dimensionality reduction, high-dimensional data is transformed into a lower-dimensional space. It reduces model complexity and can improve generalization performance.

Dimensionality Reduction

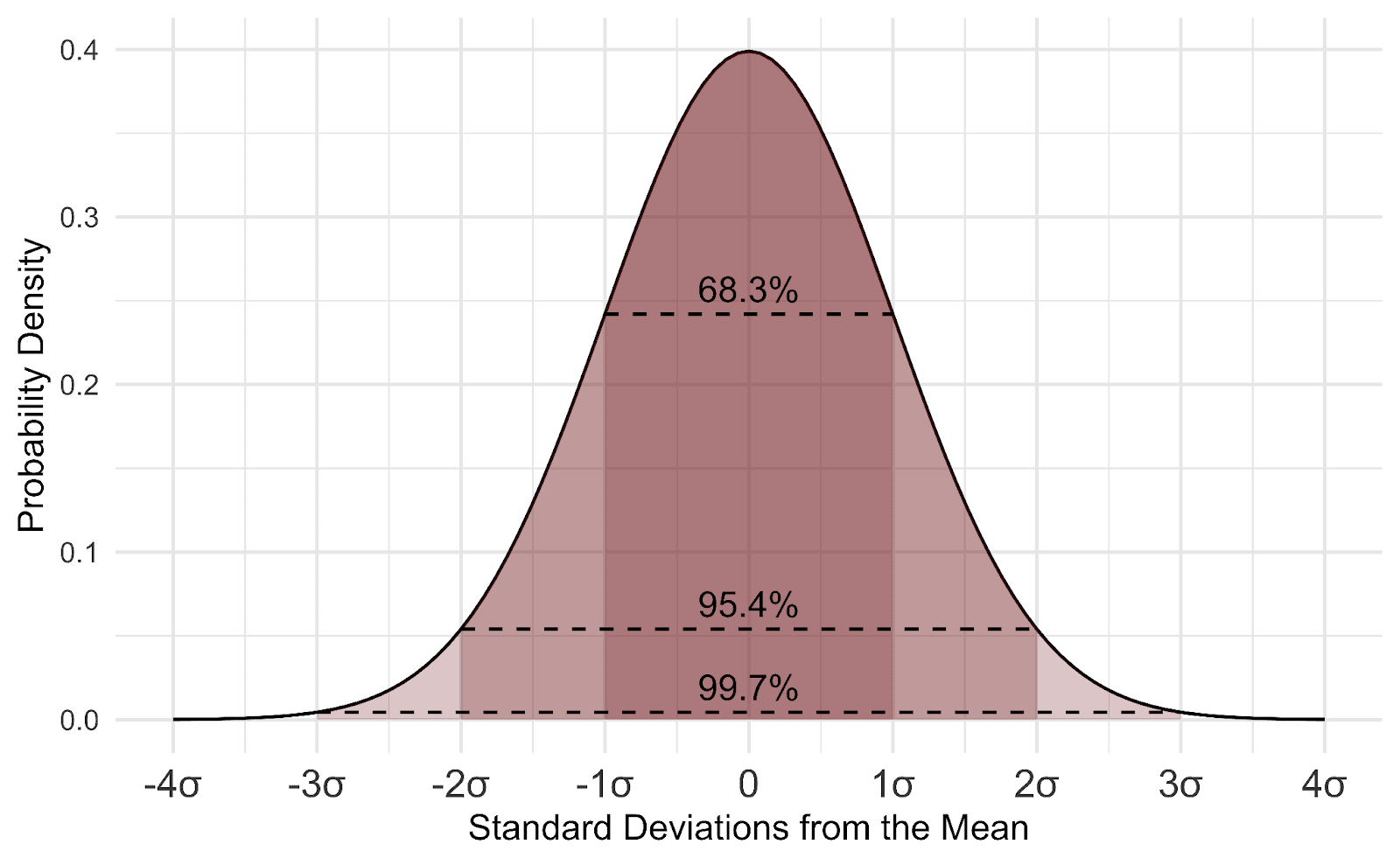
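One common way to do this is principal component analysis (PCA); the article does not name a specific method, so PCA is an assumption here. The sketch below reduces a made-up 2D dataset to 1D by projecting it onto the direction of greatest variance, using the closed-form angle of the principal axis of a 2x2 covariance matrix.

```python
import math

# Hypothetical 2D data where the two features are strongly correlated.
points = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.8), (5.0, 5.0)]
n = len(points)

# Center the data so each feature has zero mean.
mean_x = sum(x for x, _ in points) / n
mean_y = sum(y for _, y in points) / n
centered = [(x - mean_x, y - mean_y) for x, y in points]

# Entries of the 2x2 sample covariance matrix.
var_x = sum(x * x for x, _ in centered) / (n - 1)
var_y = sum(y * y for _, y in centered) / (n - 1)
cov_xy = sum(x * y for x, y in centered) / (n - 1)

# Angle of the first principal axis of a 2x2 symmetric matrix.
theta = 0.5 * math.atan2(2 * cov_xy, var_x - var_y)
direction = (math.cos(theta), math.sin(theta))

# Project each 2D point onto that axis: two features become one.
projected = [x * direction[0] + y * direction[1] for x, y in centered]

# Fraction of the total variance kept by the single remaining feature.
projected_var = sum(p * p for p in projected) / (n - 1)
retained = projected_var / (var_x + var_y)
```

For this dataset nearly all of the variance survives the projection, which is what "retaining as much information as possible" means in practice.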

Statistics

Statistics is another mathematical concept needed to find unseen patterns by analyzing and presenting raw data. Two common statistical topics one must master are as follows.

Standard Normal Distribution

Inferential Statistics

Inferential statistics uses samples to make generalizations about larger populations. We can make estimates and predict future outcomes. By leveraging sample data, inferential statistics draws conclusions that support predictions.

Descriptive Statistics

In descriptive statistics, the features of the data are described and the information presented is purely factual. Known data is summarized using metrics like distribution, variance, or central tendency.

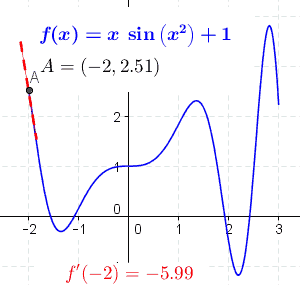
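The descriptive metrics mentioned above can be computed with Python’s standard `statistics` module. The `scores` list is invented sample data, and the population versions of variance and standard deviation are used here as one reasonable choice.

```python
import statistics

# Hypothetical sample of eight observations.
scores = [2, 4, 4, 4, 5, 5, 7, 9]

summary = {
    "mean": statistics.mean(scores),           # central tendency
    "median": statistics.median(scores),       # central tendency
    "mode": statistics.mode(scores),           # most frequent value
    "variance": statistics.pvariance(scores),  # spread (population variance)
    "std_dev": statistics.pstdev(scores),      # spread (population std. dev.)
}

for name, value in summary.items():
    print(f"{name}: {value}")
```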

Differential Calculus

Differential calculus is the process of finding the derivative of a function. This derivative measures the rate of change of the function. Calculus plays a vital role when working with deep learning or machine learning algorithms and models, helping algorithms gain insights from data. Simply put, it deals with the rates at which quantities change.

Differential calculus is also used for algorithm optimization and model functions. Derivatives measure how a function changes when its input variables change. When applied, they improve algorithms that learn from data.

So what is the role of differential calculus in AI?

Well, in AI, we mostly deal with cost functions and loss functions. To optimize these functions, we need to find their maxima or minima. Doing this by trying changes to all the parameters is a hassle: it is time-consuming and expensive. This is where techniques like gradient descent come into the picture. They use derivatives to analyze how the output changes when the input is changed.
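A minimal gradient descent sketch makes this concrete. The loss function, starting point, learning rate, and step count below are all assumptions chosen for illustration; the loss is a simple parabola whose minimum is known to be at `w = 3`.

```python
def loss(w):
    """A toy loss function with its minimum at w = 3."""
    return (w - 3.0) ** 2


def gradient(w):
    """Derivative of the loss with respect to w."""
    return 2.0 * (w - 3.0)


w = 0.0               # initial guess for the parameter
learning_rate = 0.1   # step size (a hypothetical choice)

for step in range(100):
    # Move against the gradient: downhill on the loss surface.
    w -= learning_rate * gradient(w)

print(round(w, 6), loss(w))
```

Instead of trying every possible parameter value, the derivative tells the algorithm which direction reduces the loss, so each step moves `w` closer to the minimum.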

Tangent Function

Mathematics proves to be a foundational step in your AI skills list, aiding in processing data, learning patterns, and gaining insights.

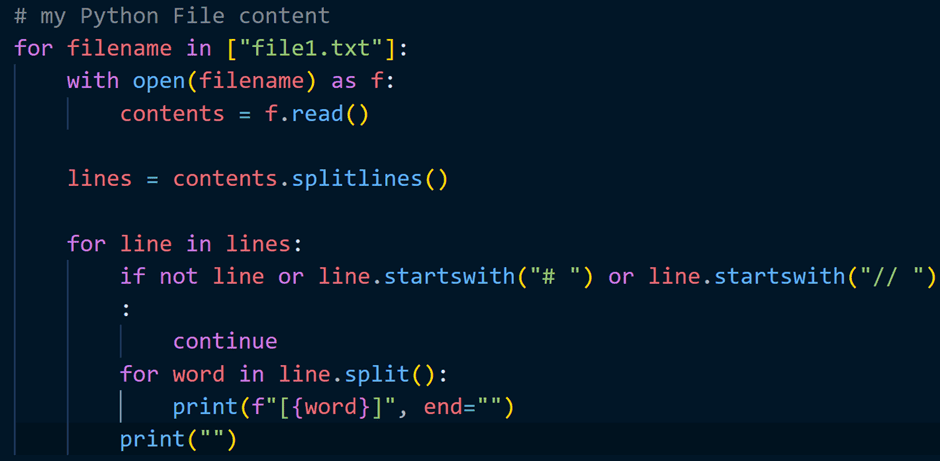

Programming

One of the first AI skills needed for a successful career in the field is programming. It is through programming that one can apply AI theories and concepts in applications. For instance, it serves as a building block to build deep learning and machine learning models and train them. Another instance is its help in cleaning, analyzing, and manipulating data.

Some may argue that the increased sophistication of AI will make programming skills less relevant. But these systems and algorithms have their limitations, and a programmer can greatly improve their efficiency. The demand for proficient coders is high, with most industries incorporating AI into their operations. Programming skills will also keep one relevant in this competitive job market.

There are a ton of coding languages in use, the most common ones being C, C++, Java, and Python. Let us take a closer look at two of them.

Python

Python is one of the most popular programming languages used by developers. It is an interpreted language, meaning programs need not be compiled into machine language instructions ahead of time in order to run. Python is considered a general-purpose language that can be used across various fields and industries.

Why has Python gained so much popularity?

- It is compatible with many operating systems, giving it very high flexibility; one needn’t write elaborate code.

- Python greatly reduces the lines of code needed, reducing the time it takes to write a program.

- It offers a ton of pre-built libraries like NumPy for scientific computations and SciPy for advanced computations.

C++

C++ is a versatile and powerful programming language that can be used to build high-performance AI systems. It is the second most popular choice among programmers, especially in areas where scalability and performance are critical.

C++ programs run models much faster than interpreted languages like Python. Another plus of using C++ is that it can interface with other languages and libraries.

- Being a compiled language, C++ offers high performance, suitable for building systems requiring high computational power.

- C++ makes it easier to optimize performance and memory usage.

- Another great aspect is that C++ can run on different platforms, making it easy to deploy applications in different environments.

With a wide range of libraries and frameworks, C++ is a powerful and flexible language apt for developing deep learning and machine learning systems for production.

As seen above, programming languages are one of the first foundational steps to a successful career in Artificial Intelligence. Now let us move on to the next AI skill: frameworks and libraries.

Frameworks and Libraries

Frameworks and libraries in AI refer to pre-built packages offering all the essential components to build and run models. They usually include algorithms, data processing tools, and pre-trained models. These frameworks serve as a foundation for implementing machine learning and deep learning algorithms. Frameworks eliminate the need for manual coding or coding from scratch, proving very cost-effective for businesses building AI applications. So why use an AI framework?

- Frameworks come equipped with pre-implemented algorithms, optimization techniques, and utilities for data handling, aiding developers in solving specific problems. This facilitates the app development flow.

- As discussed earlier, frameworks are very cost-effective. Development costs are greatly curbed thanks to the availability of pre-built components. Companies are able to create applications more efficiently and in a much shorter span compared to traditional methods.

Frameworks can be broadly classified into

- Open Source Frameworks

- Commercial Frameworks

Let us delve a bit into them.

Open Source Frameworks

Frameworks that are released under an open-source license are open-source frameworks. Users can use them for any purpose. They are free to use, usually include the source code, and permit derived works. Backed by an active community, they come with a ton of resources for troubleshooting and learning.

Commercial Frameworks

Unlike open-source frameworks, commercial frameworks are developed and licensed by specific brands. Users are restricted in what they can do with the software and may be charged additional fees. Commercial frameworks usually have dedicated support in case one runs into any issues. Since these frameworks are owned by a specific company, one can find advanced features and optimizations that are usually user-focused.

That’s enough about the types of frameworks. Let us explore the essential frameworks and libraries you can add to your AI skills list.

PyTorch

PyTorch is an open-source library developed by Meta in 2016. It is mainly used in deep learning, computer vision, and natural language processing. It is easy to learn thanks to the efforts made by the developers to improve its structure, making it very similar to traditional programming. Since most tasks in PyTorch can be automated, productivity can be improved greatly. With a huge community, PyTorch offers plenty of support from developers and researchers alike. GPyTorch, AllenNLP, and BoTorch are a few popular libraries built on it.

TensorFlow

TensorFlow is an open-source framework developed by Google in 2015. It supports many classification and regression algorithms and is used for high-performance numerical computations for machine learning and deep learning. TensorFlow is used by giants like Airbnb, eBay, and Coca-Cola. It offers simplifications and abstractions, keeping the code small and more efficient. TensorFlow is widely used for image recognition. There is also TensorFlow Lite, with which one can deploy models on mobile and edge devices.

MLX

Much like the previous frameworks we discussed, MLX is also an open-source framework, developed by Apple to run machine learning models on Apple devices. Unlike other frameworks like PyTorch and TensorFlow, MLX offers unique features. MLX is specifically built for Apple’s M1, M2, and M3 series chips. It leverages the Neural Engine and SIMD instructions, significantly increasing training and inference speed compared to other frameworks that run on Apple hardware. The result: a smoother and more responsive experience on iPhones, iPads, and Macs. MLX is a powerful package for developers, with strong performance and flexibility. One drawback is that, being a fairly new framework, it may not offer all the features of its seasoned counterparts like TensorFlow and PyTorch.

SciKit-learn

SciKit-learn is a free, open-source Python library for machine learning built on NumPy, SciPy, and Matplotlib. It offers a clean, uniform, and streamlined API accompanied by comprehensive documentation and tutorials, along with data mining and machine learning capabilities. Switching to another model or algorithm is easy once a developer understands the basic syntax for one model type. SciKit-learn offers an extensive user guide to quickly access resources ranging from multilabel algorithms to covariance estimation. It is versatile and used for anything from small prototypes to more complex machine learning tasks.

Keras

Keras is an open-source, high-level neural networks API that runs on top of other frameworks. It is part of the TensorFlow library, where we can define and train neural network models in just a few lines of code. Keras offers simple and consistent APIs, reducing the time needed to write common code. It also takes less prototyping time, meaning models can be deployed in a shorter span of time. Giants like Uber, Yelp, and Netflix use Keras.

Data Engineering

The 21st century is the era of Big Data. Data is a crucial aspect that fuels the innovation behind Artificial Intelligence. It gives businesses the information to streamline their processes and make informed decisions aligned with their business goals. With the explosion of IoT (Internet of Things), social media, and digitization, the volume of data has grown drastically. But with this huge volume of data, collecting, analyzing, and storing it is quite challenging. This is where data engineering comes into the picture. It is mainly used to construct, install, and maintain systems and pipelines, enabling organizations to collect, clean, and process data efficiently.

Although we covered statistics in one of the earlier sections, it also plays an important role in data engineering. The basics help data engineers understand the project requirements better. Statistics helps in drawing inferences from data. Data engineers can leverage statistical metrics to measure the use of data in a database. It is good to have a basic understanding of descriptive statistics, like calculating percentiles from collected data.

Now that we’ve understood what data engineering is, we’ll go a little deeper into the role of data engineering in Artificial Intelligence.

Data Collection

As the name suggests, data collection is gathering data from various sources to extract insightful information. Where can we find data? Data can be collected from various sources like online tracking, surveys, feedback, and social media. Businesses leverage data collection to optimize work quality, make market forecasts, find new customers, and make profitable decisions. There are three ways of collecting data.

First-Party Data Collection

In this form of data collection, the data is obtained directly from the customer. This could be by way of websites, social media platforms, or apps. First-party data is accurate and highly reliable, with no one involved in the middle. This form of data collection covers customer relationship management data, subscriptions, social media data, or customer feedback.

Second-Party Data Collection

Second-party data collection is where data is collected from trusted partners. This could be a business outside of the brand collecting the data. It is quite similar to first-party data since the data is acquired through reliable sources. Brands leverage second-party data to get better insights and scale up their business.

Third-Party Data Collection

Here, the data is collected from an outside source unrelated to the business or the customer. This form of data is collected from various sources and then sold to various brands for marketing and sales purposes. Third-party data collection offers a much wider audience range than the previous two. But this comes at a cost: the data may not be reliable and may not have been collected in adherence to privacy laws.

Data Integration

Data integration dates back to the 80s. The main intent was to smooth over the differences between relational databases using business rules. In those days, data integration depended more on physical infrastructure and tangible repositories, unlike today’s cloud technology. Data integration involves combining various data types from different sources into one dataset. This can be used to run applications and aid business analytics. Businesses can leverage this dataset to make better decisions, drive sales, and provide a better customer experience.

Data integration is found in almost every sector, from finance to logistics. Let us explore some of the different types of data integration methods.

Manual Data Integration

This is the most basic way of integrating data. With manual data integration, we have full control over the integration and management. A data engineer can conduct data cleansing and re-organization, and manually move the data to the desired destination.

Uniform Data Access Integration

In this form of integration, data is displayed consistently for ease of use while keeping the data source at its original location. It is simple, provides a unified view of data, lets multiple systems or apps connect to one source, and doesn’t require much storage.

Application-Based Data Integration

Here, software is leveraged to locate, fetch, and format data, which is then integrated into the desired destination. This includes pre-built connections to a variety of data sources and the ability to connect to additional data sources if necessary. With application-based data integration, the data transfer happens seamlessly and uses fewer resources, thanks to automation. It is also simple to use and doesn’t always require technical expertise.

Common Storage Data Integration

With more voluminous data, companies are resorting to common storage options. Much like uniform access integration, the information undergoes data transformation before it is copied to a data warehouse. With the data in one location accessible at any time, we can run business analytics tools when needed. This form of data integration offers higher data integrity and is less strenuous on data host systems.

Middleware Data Integration

Here, the integration happens between the application layer and the hardware infrastructure. A middleware data integration solution transfers data from various applications to databases. With middleware, networked systems communicate better and can transfer business data in a consistent manner.

Machine Learning Approaches and Algorithms

Computer programs that can adapt and evolve based on the data they process are machine learning algorithms. They are essentially mathematical models that learn from the data fed to them, which is referred to as training data. Machine learning algorithms are among the most widely used algorithms today. They are integrated into almost every form of hardware, from smartphones to sensors.

Machine learning algorithms can be categorized in various ways depending on their purpose. We will delve into each of them.

Supervised Learning

In supervised learning, machines learn by example. They draw inferences from previously learned labeled data in order to handle new data. The algorithm identifies patterns in the data and makes predictions, and it is corrected by the developer until it attains high accuracy. Supervised learning includes

- Classification – Here, the algorithm draws inferences from observed values and determines which category the new observation belongs to.

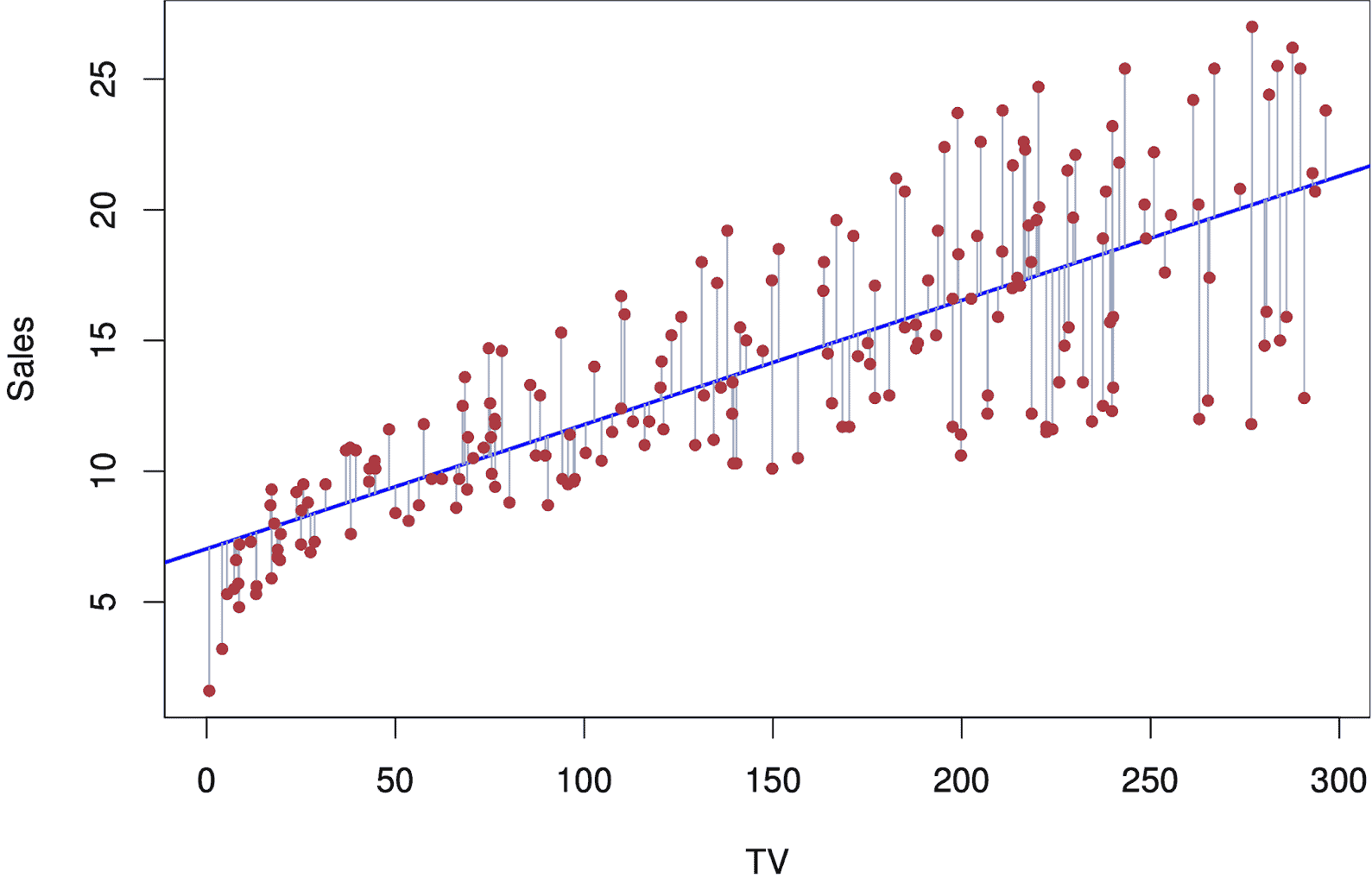

- Regression – In regression, the connection between the assorted variables is known the place the emphasis is positioned on one dependent variable and a collection of different altering variables, making it helpful for predictions and forecasting.

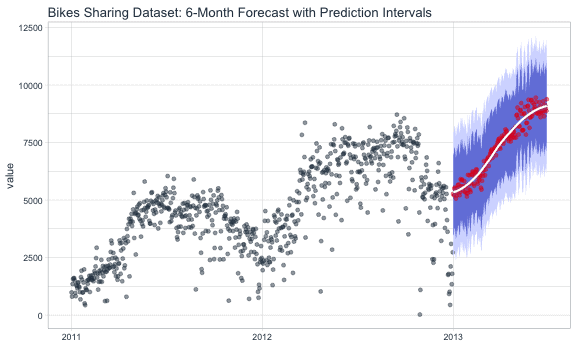

- Forecasting – It’s the course of of creating future predictions primarily based on previous and current information.

Forecasting

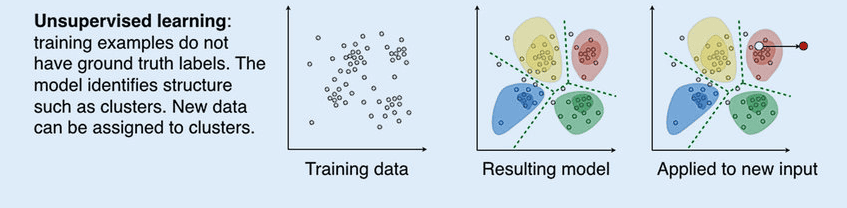

Unsupervised Learning

In unsupervised learning, the algorithms analyze data to find patterns. The machine studies the available data and infers the correlations. The algorithm interprets large datasets and tries to organize them in a structured manner. Unsupervised learning includes

- Dimension reduction – This form of unsupervised learning reduces the number of variables considered to find the information required.

- Clustering – This involves grouping similar data points based on some defined criteria.

Unsupervised Learning

Semi-supervised Learning

Semi-supervised learning, or SSL, is an ML technique that leverages a small portion of labeled data and lots of unlabeled data to train a predictive model. This kind of learning reduces the expense of manual annotation and curbs data preparation time. Semi-supervised learning serves as a bridge between supervised and unsupervised learning and addresses some of their problems. SSL can work for a wide range of problems, from classification and regression to association and clustering. Since unlabeled data is abundant and relatively cheap, SSL can be used for a ton of applications without compromising on accuracy.

Semi-supervised Learning

Let us explore some of the common machine learning algorithms.

Logistic Regression

Logistic regression is a form of supervised learning used to predict the probability of a yes-or-no outcome based on prior observations. These predictions are based on the relationship between the outcome and one or a few existing independent variables. Logistic regression also proves valuable in data preparation activities by putting data sets into predefined buckets during extraction, transformation, and load (ETL) processes to stage the information.

Logistic Regression

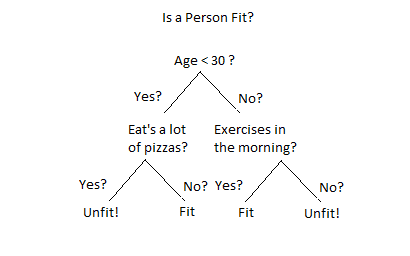
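The core of logistic regression is the sigmoid function, which turns a weighted sum of the inputs into a probability between 0 and 1. The sketch below uses a single feature and hand-picked values for `weight` and `bias`; these are hypothetical stand-ins for parameters that would normally be learned from labeled data.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical parameters, as if already learned from training data.
# With these values the decision boundary sits at x = 3.5.
weight = 1.5
bias = -5.25


def predict_probability(x):
    """Probability of the 'yes' class for a single feature value x."""
    return sigmoid(weight * x + bias)


def predict(x, threshold=0.5):
    """Turn the probability into a yes/no decision."""
    return predict_probability(x) >= threshold


print(predict_probability(2.0), predict(2.0))
print(predict_probability(5.0), predict(5.0))
```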

Decision Tree

A decision tree is a supervised learning algorithm that creates a flow diagram to make decisions based on numeric predictions. Unlike many other supervised learning algorithms, it can solve both regression and classification problems. By learning simple decision rules, the class or value of the target variable can be predicted. Decision trees are flexible and come in various forms for business decision-making applications. They use data that doesn’t need much cleansing or standardization and don’t take much time to train on new data.

Decision Tree

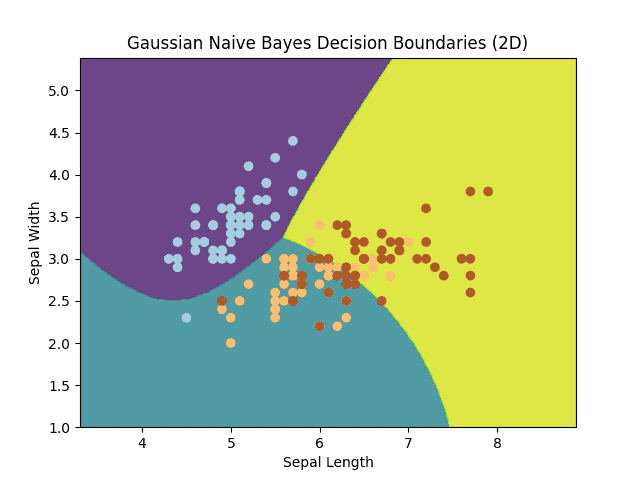

Naive Bayes

Naive Bayes is a probabilistic ML algorithm used for various classification problems like text classification, where we train on high-dimensional datasets. It is a powerful predictive modeling algorithm based on Bayes’ theorem. Building models and making predictions are much faster with this algorithm, but it requires high expertise to develop them.

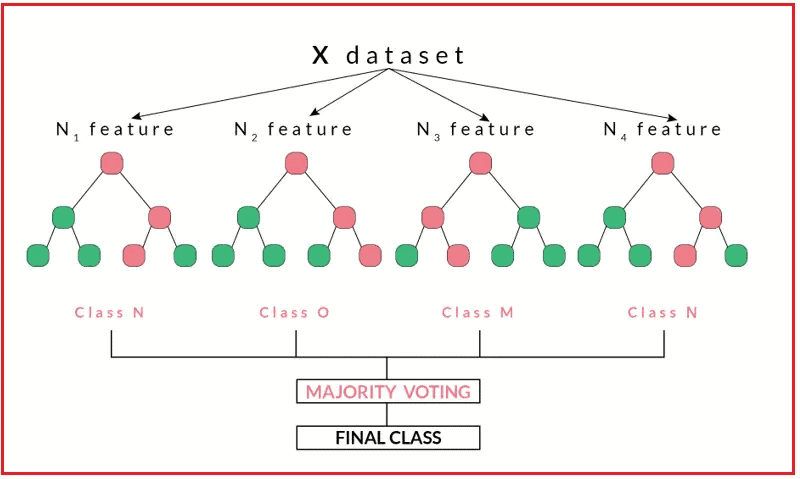

Random Forest

Random forest is a well-known ML algorithm used for classification and regression tasks that also uses supervised learning methods. It produces good results even without hyperparameter tuning. It is a go-to algorithm among machine learning practitioners due to its simplicity and versatility. A random forest contains a number of decision trees trained on different subsets of a given dataset and averages their outputs to improve predictive accuracy.

Random Forest

K Nearest Neighbour (KNN)

KNN is a simple algorithm that stores all the available cases and classifies new data based on them. It is a supervised learning classifier that makes predictions by leveraging proximity. Although it finds use in both classification and regression tasks, it is typically used as a classification algorithm. It can handle both categorical and numerical data, making it versatile for different types of datasets. Due to its simplicity and ease of implementation, it is a common go-to for developers.

Machine learning algorithms are important in honing one’s AI skills and growing towards a successful career in artificial intelligence. In this section, we covered the different types of ML algorithms and some common techniques. Let us head over to the next AI skill: deep learning.
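Because KNN only stores examples and compares distances, a small version fits in a few lines. This is a sketch under stated assumptions: the training points, the labels "A" and "B", Euclidean distance, and `k=3` are all illustrative choices, and `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Pair each stored example with its Euclidean distance to the query.
    distances = sorted(
        (math.dist(features, query), label) for features, label in train
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical labeled examples: two well-separated groups of 2D points.
train = [
    ((1, 1), "A"), ((1, 2), "A"), ((2, 1), "A"),
    ((6, 6), "B"), ((7, 6), "B"), ((6, 7), "B"),
]

print(knn_predict(train, (2, 2)))
print(knn_predict(train, (6, 5)))
```

There is no training step here: the "model" is simply the stored data, which is why KNN is easy to implement but can become slow on large datasets.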

KNN

Deep Learning

The recent advances in Artificial Intelligence can be attributed to deep learning, ranging from large language models like ChatGPT to self-driving cars like Tesla’s.

So what exactly is deep learning?

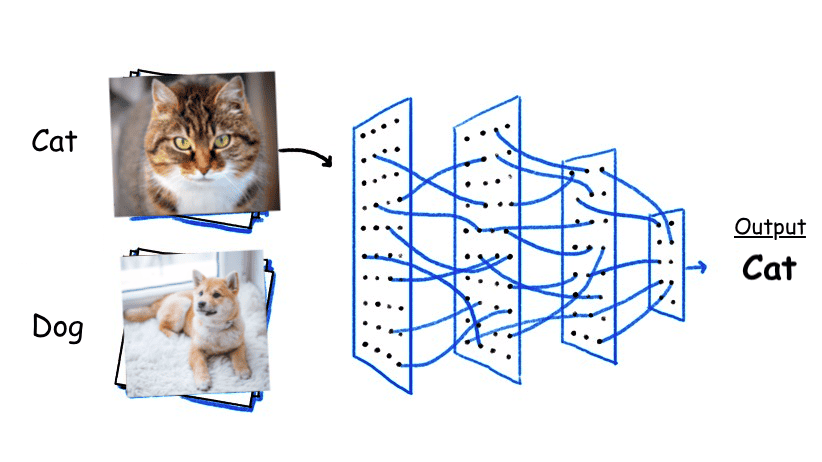

Deep Studying is a subfield of Synthetic Intelligence that tries to duplicate the workings of the human mind in machines by processing information. Deep studying fashions analyze advanced patterns in texts, photos, and different types of information producing correct insights and predictions. Deep studying algorithms want information to unravel issues; in a manner, it’s a subfield of Machine Studying. However not like machine studying, deep studying constitutes a multi-layered construction of algorithms referred to as Neural Networks.

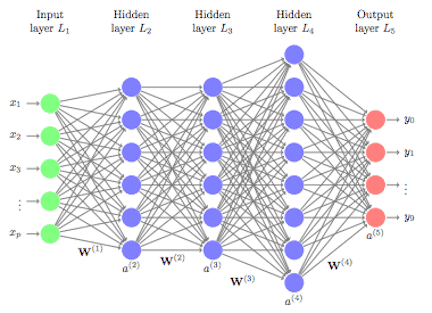

Neural networks are computational fashions that attempt to replicate the advanced features of the human mind. Neural networks have a number of layers of interconnected nodes that course of and be taught from information. By analyzing the hierarchical patterns and options within the information, neural networks can be taught advanced representations of the info.

Forms of Neural Networks

This part will focus on the generally used architectures in deep studying.

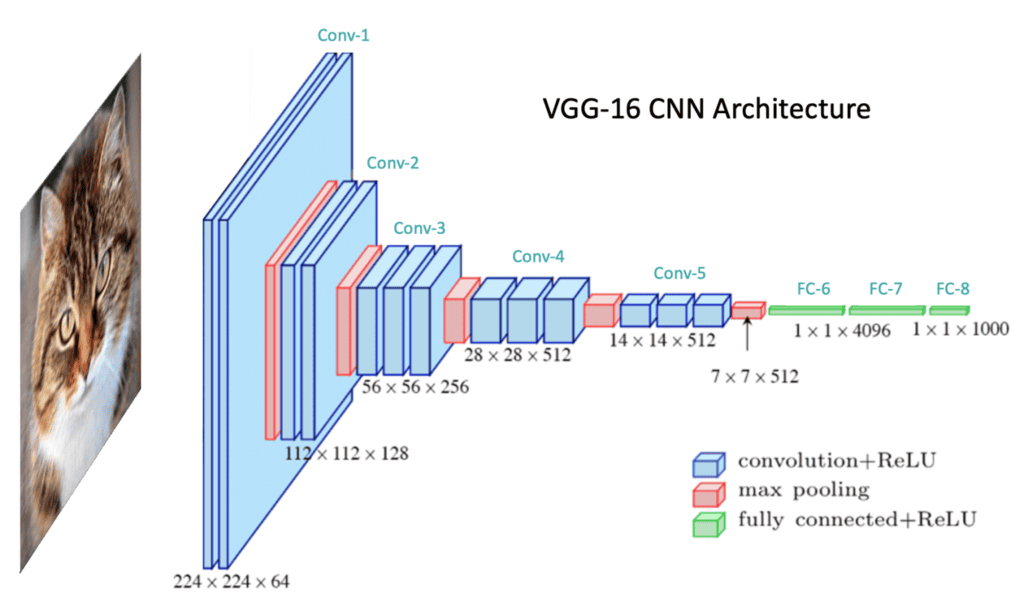

Convolutional Neural Networks

Convolutional Neural Networks, or CNNs, are deep learning algorithms designed for tasks like object detection, image segmentation, and object recognition. They can autonomously extract features at a large scale, removing the need for manual feature engineering and improving efficiency. CNNs are versatile and can be applied to domains like computer vision and NLP. Pre-trained CNN models like ResNet50 and VGG-16 can adapt to new tasks with little data.

CNN

Feedforward Neural Networks

An FNN, also called a deep network or multi-layer perceptron (MLP), is a basic neural network where the input is processed in one direction. FNNs were among the first and most successful learning algorithms to be implemented. An FNN comprises an input layer, an output layer, one or more hidden layers, and neuron weights. The input neurons receive data, which travels through the hidden layers and leaves through the output neurons.

FNN

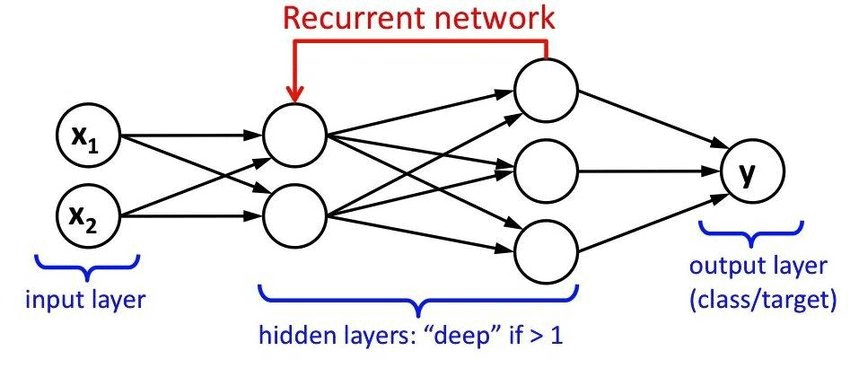

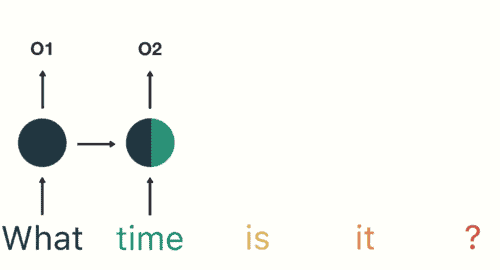
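The one-directional flow of a feedforward network can be sketched as a single forward pass. The layer sizes, weights, biases, and the ReLU activation below are hypothetical values picked so the arithmetic is easy to follow; a real network would learn its weights during training.

```python
def relu(values):
    """Activation function: keep positive values, zero out negatives."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum plus bias for each neuron."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output.
hidden_weights = [[1.0, -1.0], [0.5, 0.5]]
hidden_biases = [0.0, -0.5]
output_weights = [[1.0, 2.0]]
output_biases = [0.1]


def forward(inputs):
    """Data flows input -> hidden -> output, never backwards."""
    hidden = relu(dense(inputs, hidden_weights, hidden_biases))
    return dense(hidden, output_weights, output_biases)


output = forward([2.0, 1.0])
print(output)
```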

Recurrent Neural Networks

RNNs are state-of-the-art algorithms that process sequential data like time series and natural language. They maintain an internal state that captures information about previous inputs, making them apt for speech recognition and language translation in assistants like Siri or Alexa. RNNs are a preferred choice for sequential data like speech, text, audio, video, and more.

RNN
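The internal state that an RNN carries between inputs can be illustrated with a single scalar recurrence. The weights `w_x`, `w_h`, and `b` are made-up constants, and the `tanh` activation is one common choice; this is a toy sketch of the recurrence, not a trainable model.

```python
import math

# Hypothetical fixed parameters for a one-unit recurrent cell.
w_x = 0.5   # weight on the current input
w_h = 0.8   # weight on the previous hidden state
b = 0.0     # bias


def run_rnn(sequence):
    """Return the hidden state after each element of the sequence."""
    h = 0.0
    states = []
    for x in sequence:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Same values, different order: the final state differs because
# the cell remembers what it saw earlier.
states_a = run_rnn([1.0, 0.0])
states_b = run_rnn([0.0, 1.0])
print(states_a[-1], states_b[-1])
```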

Deep learning has many subfields, a few of which we will explore.

Computer Vision

Computer Vision, or CV, is another field in AI that has seen a boom in recent years. We can owe this to the vast availability of data generated today (roughly three billion images being shared daily). Computer vision itself dates back to the 50s.

What is Computer Vision?

Computer vision is a subfield of AI that trains machines and computers to interpret their surroundings as we do. In simple terms, it gives the power of sight to machines. In the real world, this could take the form of face unlock on our mobile phones or filters on Instagram.


Natural Language Processing (NLP)

Another subfield that accelerates deep learning, natural language processing, or NLP, deals with giving machines the ability to process and understand human language. We have all used NLP tech in some form or another, for instance, virtual assistants like Amazon’s Alexa or Samsung’s Bixby. This technology is typically based on machine learning algorithms that analyze examples and make inferences based on statistics, meaning the more data the machine receives, the more accurate the result will be.

How does NLP benefit a business?

NLP systems can analyze and process large volumes of data from different sources, from news reports to social media, and provide valuable insights to assess the brand’s performance. By streamlining processes, this tech can make data analysis more efficient and effective.

NLP tech comes in different shapes and sizes in the form of chatbots, autocomplete tools, language translation, and many more. Some of the key aspects to learn to master NLP include

- Data Cleaning

- Tokenization

- Word Embedding

- Model Development
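The first few steps in the list above can be sketched with the standard library alone. The two-sentence `corpus` is invented, and a bag-of-words count vector is used here as a deliberately simple stand-in for the learned word embeddings a real NLP model would use.

```python
import re
from collections import Counter


def clean(text):
    """Data cleaning: lowercase and replace punctuation with spaces."""
    return re.sub(r"[^a-z0-9\s]", " ", text.lower())


def tokenize(text):
    """Tokenization: split cleaned text into word tokens."""
    return clean(text).split()


# Hypothetical two-document corpus.
corpus = ["AI is fun!", "Is AI hard? AI is learnable."]

# Build a sorted vocabulary from every token seen in the corpus.
vocabulary = sorted({token for doc in corpus for token in tokenize(doc)})


def bag_of_words(text):
    """Numeric representation: count of each vocabulary word in the text."""
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocabulary]


print(vocabulary)
print(bag_of_words(corpus[1]))
```

Vectors like these are what a model in the final step, model development, would actually consume.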

Possessing strong fundamentals in NLP and computer vision can open doors to high-paying roles like Computer Vision Engineer, NLP Engineer, NLP Analyst, and many more.

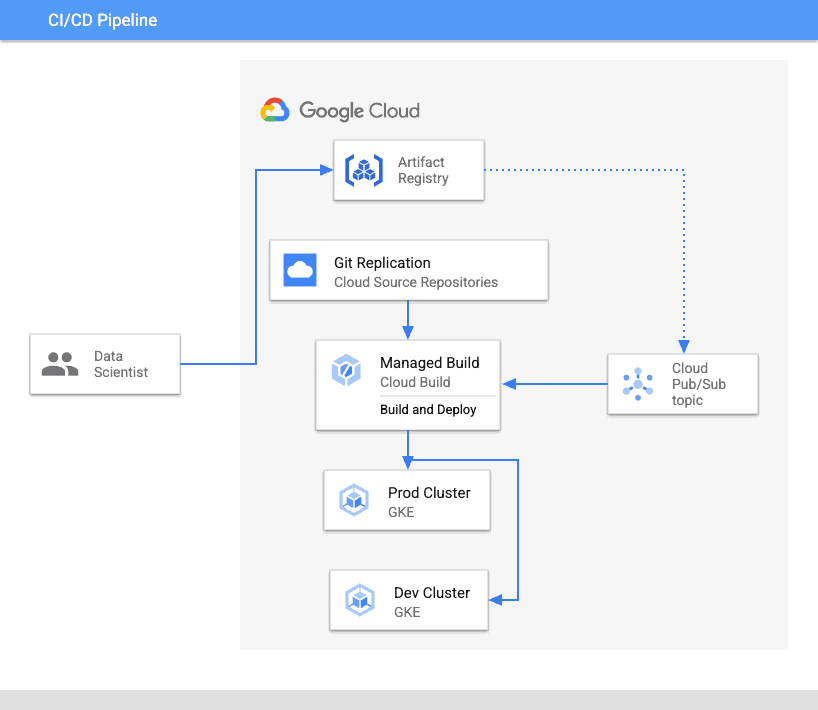

Deployment

Mannequin deployment is the ultimate step that ties the entire above collectively. It’s the strategy of facilitating accessibility and its operations inside a confined setting the place they’ll make predictions and achieve insights. Right here, the fashions are built-in into bigger methods. These predictions are made obtainable to the general public for his or her use. This might pose a problem for various causes like testing and scaling or variations between mannequin growth and coaching. However with the appropriate mannequin frameworks, instruments, and processes, they are often overcome.

Traditionally, models were deployed on local servers or machines, which limited their accessibility and scalability. Fast forward to today, with cloud computing platforms like Amazon Web Services and Azure, deployment has become much more seamless. These platforms have improved how models are deployed, how resources are managed, and how scaling and maintenance complexities are handled.

Let us look at some of the core features of model deployment.

Scalability

Model scalability refers to the ability of a model to handle huge volumes of data without compromising performance or accuracy. It involves:

- Scaling up or down on cloud platforms based on demand

- Ensuring optimal performance while keeping costs down

- Providing load balancing and auto-scaling, which are crucial for handling varying workloads and ensuring high availability

- Gauging whether the system is able to handle growing workloads and how adaptable it can be
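
To make auto-scaling and load balancing a little more concrete, here is a minimal sketch. It assumes a made-up rule (one replica per fixed number of requests per second, clamped between a minimum and a maximum) and a plain round-robin balancer; the names `desired_replicas` and `round_robin` are hypothetical, and real cloud platforms apply their own, more elaborate policies.

```python
import math
from itertools import cycle
from typing import Iterator, List


def desired_replicas(
    requests_per_second: float,
    capacity_per_replica: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Return how many model replicas are needed for the current load.

    Assumed rule: enough replicas to cover the load, clamped to
    the [min_replicas, max_replicas] range.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    needed = math.ceil(requests_per_second / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


def round_robin(replicas: List[str]) -> Iterator[str]:
    """Yield replica names in turn so requests are spread evenly."""
    return cycle(replicas)


replica_count = desired_replicas(requests_per_second=450, capacity_per_replica=100)
balancer = round_robin(["replica-a", "replica-b"])
first_three_targets = [next(balancer) for _ in range(3)]
```

The clamp is the important design choice here: the lower bound keeps the service available when traffic is quiet, and the upper bound caps the cost when traffic spikes.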

Reliability

This refers to how well the model performs what it was intended to do with minimal errors. Reliability depends on a few factors.

- Redundancy is having backups for critical resources in case of failures or unavailability.

- Monitoring is done to assess the system during deployment and resolve any issues that pop up.

- Testing validates the correctness of the system before and after its deployment.

- Error Handling is how the system recovers from failures without compromising functionality and quality.
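
Redundancy and error handling can be illustrated together with a small sketch: retry the primary model a few times, then fall back to a backup. The function name `predict_with_fallback` and its parameters are assumptions made for this example, and `primary` and `backup` stand for any callables that return a prediction.

```python
import time
from typing import Any, Callable


def predict_with_fallback(
    features: Any,
    primary: Callable[[Any], Any],
    backup: Callable[[Any], Any],
    retries: int = 2,
    delay_seconds: float = 0.0,
) -> Any:
    """Try the primary model up to retries + 1 times, then use the backup."""
    for attempt in range(retries + 1):
        try:
            return primary(features)
        except Exception:
            # Error handling: wait briefly before the next attempt, if any
            if attempt < retries:
                time.sleep(delay_seconds)
    try:
        # Redundancy: a second model serves the request when the first cannot
        return backup(features)
    except Exception as error:
        raise RuntimeError("primary and backup models both failed") from error
```

A caller would pass its own model functions, for example `predict_with_fallback(row, main_model.predict, small_model.predict)`, where both model objects are placeholders.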

Cloud Deployment

The next step is to select the deployment environment specific to our requirements, like costs, security, and integration capabilities. Cloud computing has come a long way over the past decade. Cloud model deployment options were very limited during its initial years.

What is cloud deployment?

It is the arrangement of distinct variables, like ownership and accessibility, of the distributed framework. It serves as a virtual computing environment where we can choose a deployment model based on how much data we want to store and who controls the infrastructure.

Private Cloud

This is where the company builds, operates, and owns its data centers. MNCs and large brands often adopt a private cloud for better customization and compliance, although it may need investment in software and staffing. Private clouds best fit companies looking to have good control over data and resources and also curb costs. It is ideal for storing confidential data that is only accessible by authorized personnel.

Public Cloud

Public cloud deployment involves third-party providers who host the infrastructure and software in shared data centers. Unlike the private cloud, one can save on infrastructure and staffing costs. Public clouds are easy to use and are more scalable.

Hybrid Cloud

It is a cloud type that combines a private cloud with a public cloud. It facilitates the movement of data and applications between the two environments. Hybrid platforms offer more:

- Flexibility

- Security

- Deployment options

- Compliance

Choosing the right public cloud provider among the hundreds available can be a daunting task. So let us make it easier for you, as we have picked out the top players dominating the market.

Amazon Web Services

Developed by Amazon, AWS was launched in 2006 and was one of the pioneers in the cloud industry. With over 200 cloud services across 245 countries and territories, AWS stands at the top of the leaderboard with 32% of the market share. It is used by giants like Coca-Cola, Adobe, and Netflix.

Google Cloud Platform

Launched in 2008, it started out as “App Engine” and became Google Cloud Platform in 2012. Today it boasts 120 cloud services, making it a good choice for developers. Compute Engine, one of its best features, supports any operating system and offers custom and predefined machine types.

Microsoft Azure

Azure was launched in 2010, offering traditional cloud services across 46 regions and taking the second-highest share in the cloud market. One can quickly deploy and manage models, and share them for cross-workspace collaboration.

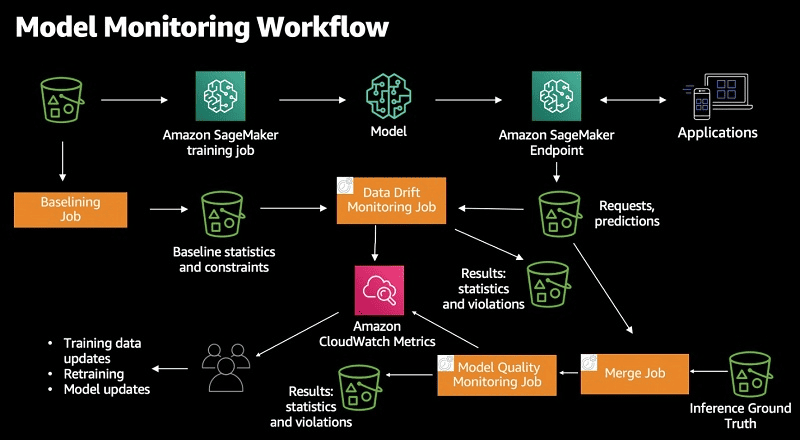

Monitoring Model Performance

Once the models are deployed, the next step is to monitor them.

Why monitor the model’s performance?

Models usually degrade over time. From the time of deployment, a model slowly begins to lose its performance. Monitoring is done to ensure that models perform as expected and consistently. Here we track the behaviour of the deployed model, analyze it, and draw inferences from it. Next, if the model requires any updates in production, we need a real-time view to make evaluations. This can be made possible through validation results.

Monitoring can be categorized into:

- Operational level monitoring is where one needs to ensure that the resources used for the system are healthy, and act on them if they are not.

- Functional level monitoring is where we monitor the input layer, the model, and the output predictions.
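
As an illustration of functional level monitoring on the input layer, the sketch below compares live values of one input feature against the values seen during training. It uses a deliberately simple check (how far the live mean has moved, measured in training standard deviations) rather than a formal statistical test, and the names `mean_shift` and `has_drifted` and the threshold of 2.0 are assumptions for this example.

```python
from statistics import mean, stdev
from typing import Sequence


def mean_shift(baseline: Sequence[float], live: Sequence[float]) -> float:
    """Distance between the live mean and the baseline mean,
    expressed in baseline standard deviations."""
    spread = stdev(baseline)
    if spread == 0:
        raise ValueError("baseline has no variation to compare against")
    return abs(mean(live) - mean(baseline)) / spread


def has_drifted(
    baseline: Sequence[float], live: Sequence[float], threshold: float = 2.0
) -> bool:
    """Flag the feature when its live mean moves past the assumed threshold."""
    return mean_shift(baseline, live) > threshold


training_values = [1.0, 2.0, 3.0, 4.0, 5.0]
stable = has_drifted(training_values, [3.0, 3.0, 3.0])
shifted = has_drifted(training_values, [10.0, 11.0, 12.0])
```

The same pattern can be applied to the output predictions, while operational level monitoring would track things like latency, memory, and error rates instead.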

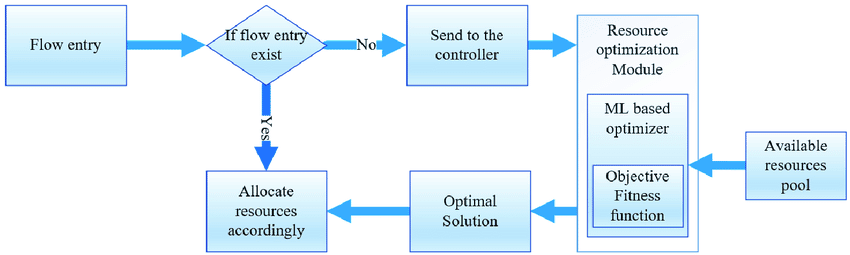

Resource Optimization

Resource optimization forms a crucial aspect of model deployment. This is especially useful when resources are limited.

One way of optimizing resources is by making adjustments to the model itself. Let us explore a few methods.

Simplification

One way to optimize a model could be to adopt one with simpler and fewer components or operations. How can we do this? By using the options mentioned below:

- Models with smaller architectures

- Models that have fewer layers

- Models that have faster activation functions

Pruning

Pruning is the process of removing unwanted parts of a model that do not contribute much to the output. It involves reducing the number of layers or connections in the model, making it smaller and faster. Some common pruning techniques are:

- Weight pruning

- Neuron pruning
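
Weight pruning is commonly done by magnitude: the weights closest to zero are assumed to matter least and are set to zero. The sketch below shows that idea on a small weight matrix stored as nested lists. The function name `prune_by_magnitude` and the `sparsity` parameter are made up for this example; deep learning frameworks ship their own pruning utilities.

```python
from typing import List


def prune_by_magnitude(weights: List[List[float]], sparsity: float) -> List[List[float]]:
    """Zero out roughly the given fraction of weights with the smallest magnitude.

    Weights tied with the cut-off value are pruned too, so slightly
    more than the requested fraction may be removed.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    magnitudes = sorted(abs(w) for row in weights for w in row)
    to_prune = int(len(magnitudes) * sparsity)
    if to_prune == 0:
        return [row[:] for row in weights]  # nothing to prune, return a copy
    threshold = magnitudes[to_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


layer = [[0.1, -0.8], [0.05, 0.6]]
pruned = prune_by_magnitude(layer, sparsity=0.5)
```

Neuron pruning follows the same logic one level up: instead of individual weights, whole rows or columns with little overall contribution are removed.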

Quantization

Model quantization is another method to make a model more efficient. This involves reducing the bit-width of the numerical values used in the model. Much like the previous model optimization methods, quantization can lower a model’s memory and storage needs and bump up the inference speed too.
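
To show what reducing the bit-width means, here is a minimal sketch of one common scheme, symmetric 8-bit quantization: floating-point values are mapped to integers between -127 and 127 using a single scale factor. The function names `quantize_int8` and `dequantize` are assumptions for this example, and production toolkits use more refined variants.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


weights = [-2.54, 0.0, 1.0, 2.54]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
```

Each value now fits in one byte instead of four or eight, at the cost of a small rounding error of at most half the scale factor per value.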

This concludes the technical AI skills needed to tread the Artificial Intelligence path. But wait, there’s more; I’m talking about soft skills. What exactly are soft skills, and why do they matter? The next section discusses that in detail.

Soft Skills

Soft skills are the “non-essentials” one needs to possess besides their field expertise. Soft skills are something within us all; they are not something we learn through books or coursework. Soft skills are the bridge between your technical prowess and your employer or peers, i.e., how effectively you can communicate and collaborate. According to Deloitte Insights, 92% of brands say soft skills have comparable weightage to hard skills. They demonstrate a person’s ability to communicate within a company, lead teams, or make decisions to improve the business’s performance.

Let us explore some of the crucial soft skills one must possess to have an edge over others.

Problem-Solving

Why are you hired for a job role? To use your expertise in your field to solve problems. This is another crucial soft skill that requires one to identify the problem, analyze it, and implement solutions. It is one of the most sought-after skills, with 86% of employers looking for resumes possessing this skill. At the end of the day, companies are always on the lookout for talent that can solve their problems. Anyone who is a good problem solver will always be of value in the job market.

Critical Thinking

With many more automation processes in place, it becomes paramount for leaders and experts to interpret and contextualize results and make decisions. Critical thinking helps in evaluating these results and offering a factual response. Logical reasoning helps one identify any discrepancies in the system. This involves a combination of rational thinking, which separates the relevant from the irrelevant, and reflective thinking, where one considers the context of the information received and its implications. Put simply, it involves solving complex problems by analyzing the pros and cons of various solutions using logic and reasoning rather than gut instinct.

Intellectual Curiosity

Probing forms a key aspect of one’s career arsenal. It is the eagerness to probe into things, ask questions, and delve deeper. Curiosity prompts one to venture out of one’s comfort zone and explore uncharted territory in their specialized field. Although AI systems can analyze and make inferences from vast amounts of data, they lack the understanding or ability to question. The more one probes, the more one can bring innovation to the table.

Ethical Decision Making

With the vast data available today, AI systems operate on large datasets and make inferences from patterns drawn from this data. However, we cannot rely on these systems to make right or fair decisions, since they can reflect societal biases. If left unattended, these biases can perpetuate inequities and lead to discrimination within organizations.

This is where ethical decision-making comes into play. It refers to one’s ability to ensure that the outcome safeguards the freedom of an individual or individuals and aligns with societal norms. This ensures that the deployed system is not used in an invasive or harmful way.

Conclusion

We have finally come to the end of this super comprehensive read. We have covered all the essential skills, from hard skills like programming and deep learning to soft skills like critical thinking and problem solving. I hope this read has given you insights and the right mindset to kickstart your journey in harnessing your AI skills. Keep your eyes peeled, more fun reads are coming your way. See you guys in the next one!
