YOLOv11 (also called YOLO11) is a family of models for object detection, classification, instance segmentation, keypoint detection, and oriented bounding box detection (OBB). For each task, the model comes in five variants of increasing size and accuracy: N, S, M, L, and X. You can find a comparison in the Model Leaderboard.

You can fine-tune YOLOv11 to compute segmentation masks that cover the exact region in which an object appears in an image.
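A segmentation mask labels every pixel that belongs to an object, whereas a bounding box only gives the enclosing rectangle. The minimal sketch below uses a plain list of lists as a stand-in for a binary mask (an assumption for illustration; the model itself returns array-based masks) and shows how an object's area and tight bounding box can be derived from its mask. The helper names are hypothetical.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]  # hypothetical representation: 1 = object pixel, 0 = background


def mask_area(mask: Mask) -> int:
    """Count the pixels that belong to the object."""
    return sum(sum(row) for row in mask)


def mask_to_box(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return the tight (x_min, y_min, x_max, y_max) box around the mask, or None if empty."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, value in enumerate(row) if value]
    if not ys:
        return None
    return min(xs), min(ys), max(xs), max(ys)


mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(mask_area(mask))    # 5
print(mask_to_box(mask))  # (1, 1, 3, 2)
```

The box is fully determined by the mask, but not the other way around, which is why segmentation carries more information than detection.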

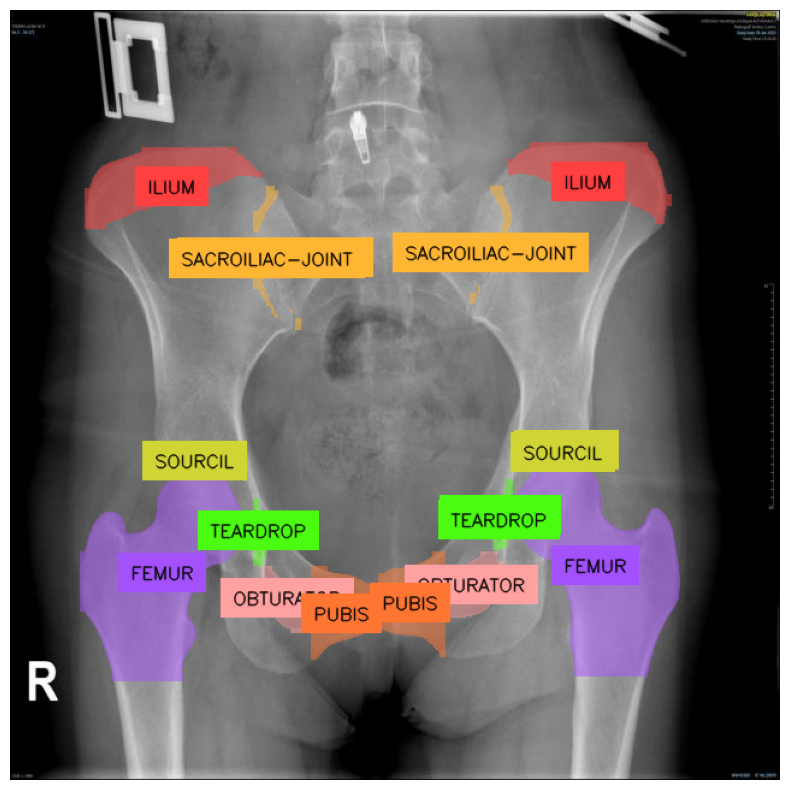

In this article, we'll explore a medical use case by retraining YOLO11 on the Pelvis X-ray dataset from Roboflow Universe. You can access the full code in this Notebook. The same steps work with any dataset you want.

This fine-tuned YOLO11 model can be applied across several areas:

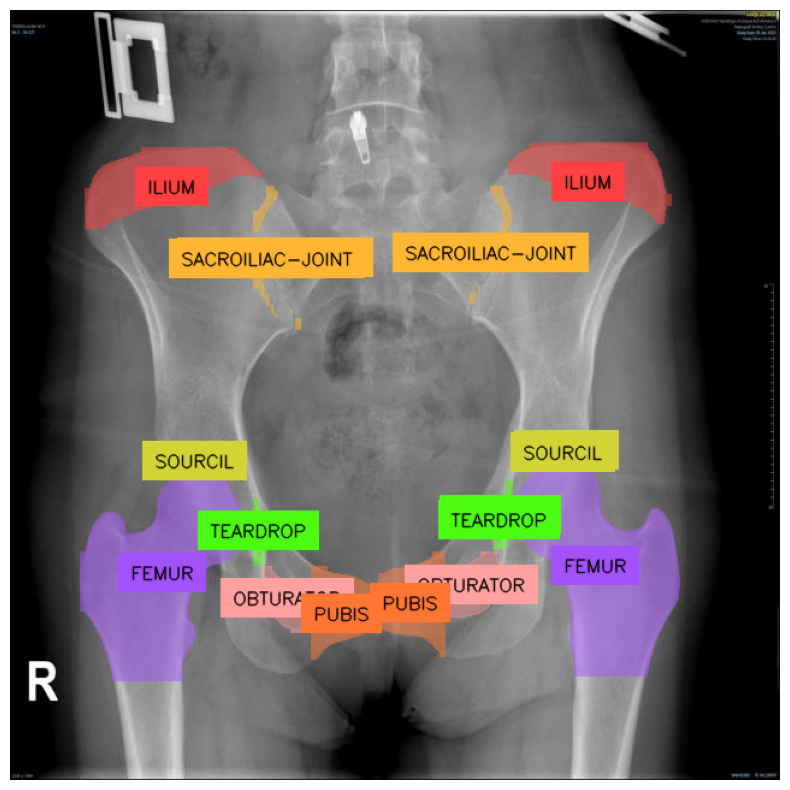

- Medical Diagnosis: The model can assist physicians in diagnosing pelvis-related conditions, such as fractures or degenerative diseases, in regions like the Iliac, Obturator, Femur, Teardrop, Pubis, and Supracetabular areas, helping to expedite treatment.

- Medical Training: It can be used as an educational tool for medical students and radiologists, helping them identify and differentiate femur classes in pelvis AP X-rays.

- Injury Assessment: In sports medicine and physiotherapy, the model can track and analyze recovery from hip injuries, enabling more effective rehabilitation plans.

- Forensic Anthropology: Forensic experts can use the model to identify key pelvic features that are essential for determining sex, age, and other identifiers in unidentified remains.

- AI-Driven Prosthetics: The model can support advanced prosthetic development by providing precise data for hip joint alignment.

Let's train our model.

Prerequisites

To get started, you need to install the ultralytics package. Additionally, we will use roboflow to retrieve the dataset and supervision to visualize the final results.

Run the following commands in a Colab or Jupyter Notebook:

```
%pip install ultralytics supervision roboflow
```

```python
import os

HOME = os.getcwd()
```

YOLO CLI

The ultralytics package includes a command-line interface (CLI) for YOLO that simplifies training, validation, and inference without writing any code. Commands follow the pattern yolo TASK MODE ARGS, and the CLI supports several tasks, including detection, classification, pose estimation, and segmentation, which makes it an ideal starting point.

For example, you can run the model on a single image as follows:

```
!yolo segment predict model=yolo11n-seg.pt source='https://media.roboflow.com/notebooks/examples/dog.jpeg' save=True
```

The results will be saved to ./runs/<task>/predict/<image_name>. Subsequent prediction runs will create numbered folders such as ./runs/<task>/predict2/.
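The folder numbering follows a simple pattern: if a run folder already exists, a number is appended, starting at 2. The helper below is a hypothetical re-implementation for illustration only (Ultralytics uses its own internal logic), assuming folder names of the form predict, predict2, predict3, and so on.

```python
import tempfile
from pathlib import Path


def next_run_dir(task_dir: Path, name: str = "predict") -> Path:
    """Return the first unused run folder: predict, predict2, predict3, ...

    Hypothetical helper that mimics the naming scheme described above.
    """
    candidate = task_dir / name
    n = 2
    while candidate.exists():
        candidate = task_dir / f"{name}{n}"
        n += 1
    return candidate


with tempfile.TemporaryDirectory() as tmp:
    task_dir = Path(tmp) / "runs" / "segment"
    first = next_run_dir(task_dir)
    first.mkdir(parents=True)        # simulate the first prediction run
    second = next_run_dir(task_dir)  # the next run gets a numbered folder
    print(first.name, second.name)   # predict predict2
```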

Train YOLO11 on a Custom Dataset

We will use the labeled Pelvis X-ray dataset from Roboflow Universe. Alternatively:

- If you have a set of unlabeled images, see How to Label Data for YOLO11. It is also worth looking at Labeling Data with Grounded SAM 2, as it can speed up the process significantly.

- If you have a labeled dataset in the correct format, you can use it directly.

- If you upload images to the dashboard, you can label them, create a dataset, apply preprocessing and augmentation, and then download the dataset in the required format.
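For segmentation, the "correct format" is the YOLO segmentation format: each image has a .txt label file with one object per line, consisting of a class index followed by the polygon's x y pairs, normalized by the image width and height. A minimal parsing sketch (the function name and return shape are our own, not part of any library):

```python
from typing import List, Tuple


def parse_seg_label(line: str, img_w: int, img_h: int) -> Tuple[int, List[Tuple[float, float]]]:
    """Parse one YOLO segmentation label line into (class_id, polygon in pixels).

    Hypothetical helper: assumes the line is "<class> x1 y1 x2 y2 ..." with
    coordinates normalized to the [0, 1] range.
    """
    parts = line.split()
    class_id = int(parts[0])
    coords = [float(v) for v in parts[1:]]
    if len(coords) < 6 or len(coords) % 2:
        raise ValueError("expected at least three (x, y) pairs")
    # Scale normalized coordinates back to pixel units.
    polygon = [(coords[i] * img_w, coords[i + 1] * img_h) for i in range(0, len(coords), 2)]
    return class_id, polygon


class_id, polygon = parse_seg_label("2 0.25 0.5 0.75 0.5 0.5 1.0", img_w=640, img_h=480)
print(class_id, polygon)  # 2 [(160.0, 240.0), (480.0, 240.0), (320.0, 480.0)]
```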

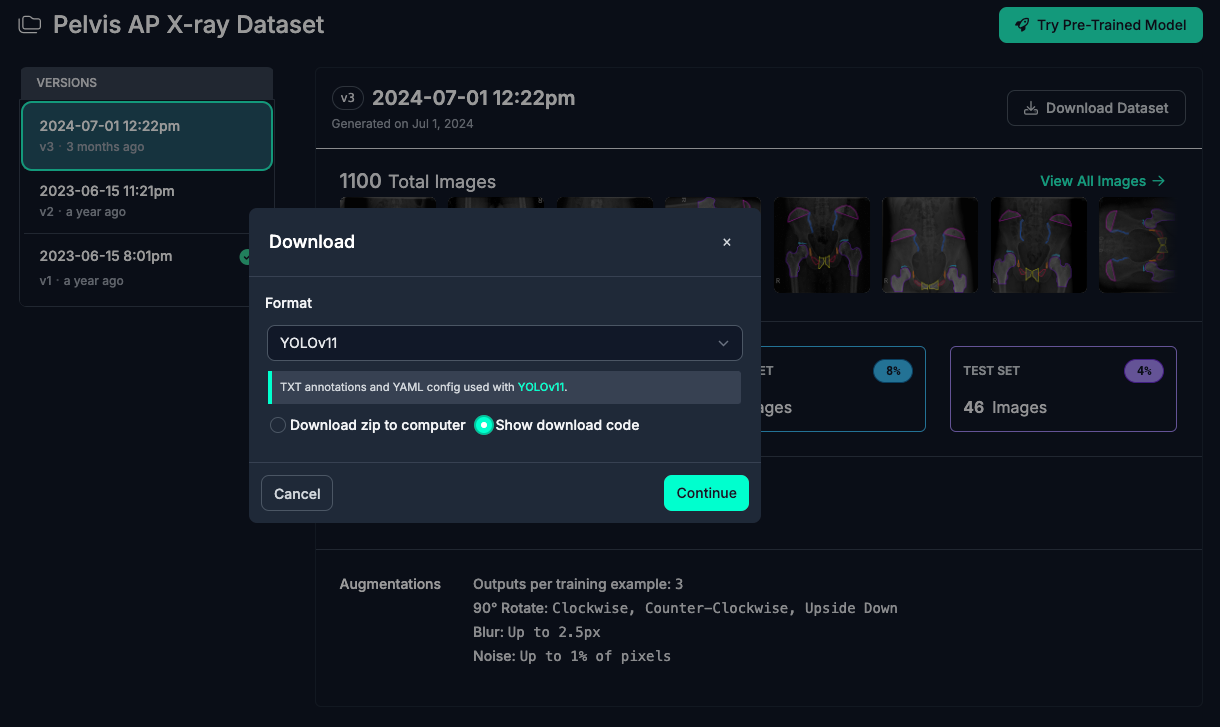

Step #1: Retrieve the Dataset Download Code

Let's retrieve the dataset from Universe.

- Navigate to the dataset page.

- Click on Dataset on the left.

- On the right, click Download Dataset.

- Select YOLOv11, check Show download code, and click Continue.

Step #2: Download the Dataset

Make sure you are working in the datasets folder. Then, run the dataset download code.

```python
!mkdir -p {HOME}/datasets
%cd {HOME}/datasets

from roboflow import Roboflow

rf = Roboflow(api_key="<YOUR_API_KEY>")
project = rf.workspace("ks-fsm9o").project("pelvis-ap-x-ray")
version = project.version(3)
dataset = version.download("yolov11")
```
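A YOLO-format export is typically organized as train/, valid/, and test/ folders, each with images/ and labels/ subfolders, plus a data.yaml file. Before training, it can be worth checking that every image has a matching label file. The sketch below assumes that layout; the helper name and the list of image extensions are our own choices.

```python
import tempfile
from pathlib import Path
from typing import Dict, List

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}  # assumption: common image extensions


def images_without_labels(dataset_dir: Path, splits=("train", "valid", "test")) -> Dict[str, List[str]]:
    """Map each split to the image file names that have no matching .txt label file."""
    missing: Dict[str, List[str]] = {}
    for split in splits:
        image_dir = dataset_dir / split / "images"
        label_dir = dataset_dir / split / "labels"
        if not image_dir.is_dir():
            continue  # e.g. an export without a test split
        missing[split] = sorted(
            p.name
            for p in image_dir.iterdir()
            if p.suffix.lower() in IMAGE_SUFFIXES and not (label_dir / f"{p.stem}.txt").exists()
        )
    return missing


# Small self-contained demo on a temporary folder tree.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for sub in ("train/images", "train/labels"):
        (root / sub).mkdir(parents=True)
    (root / "train/images/a.jpg").touch()
    (root / "train/images/b.jpg").touch()
    (root / "train/labels/a.txt").touch()
    result = images_without_labels(root)
    print(result)  # {'train': ['b.jpg']}
```

In the notebook, you would call it as images_without_labels(Path(dataset.location)).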

Step #3: Train a Custom YOLO11 Instance Segmentation Model

Now that you have your custom dataset, you can start training your YOLO11 instance segmentation model. Let's return to the home folder and start training.

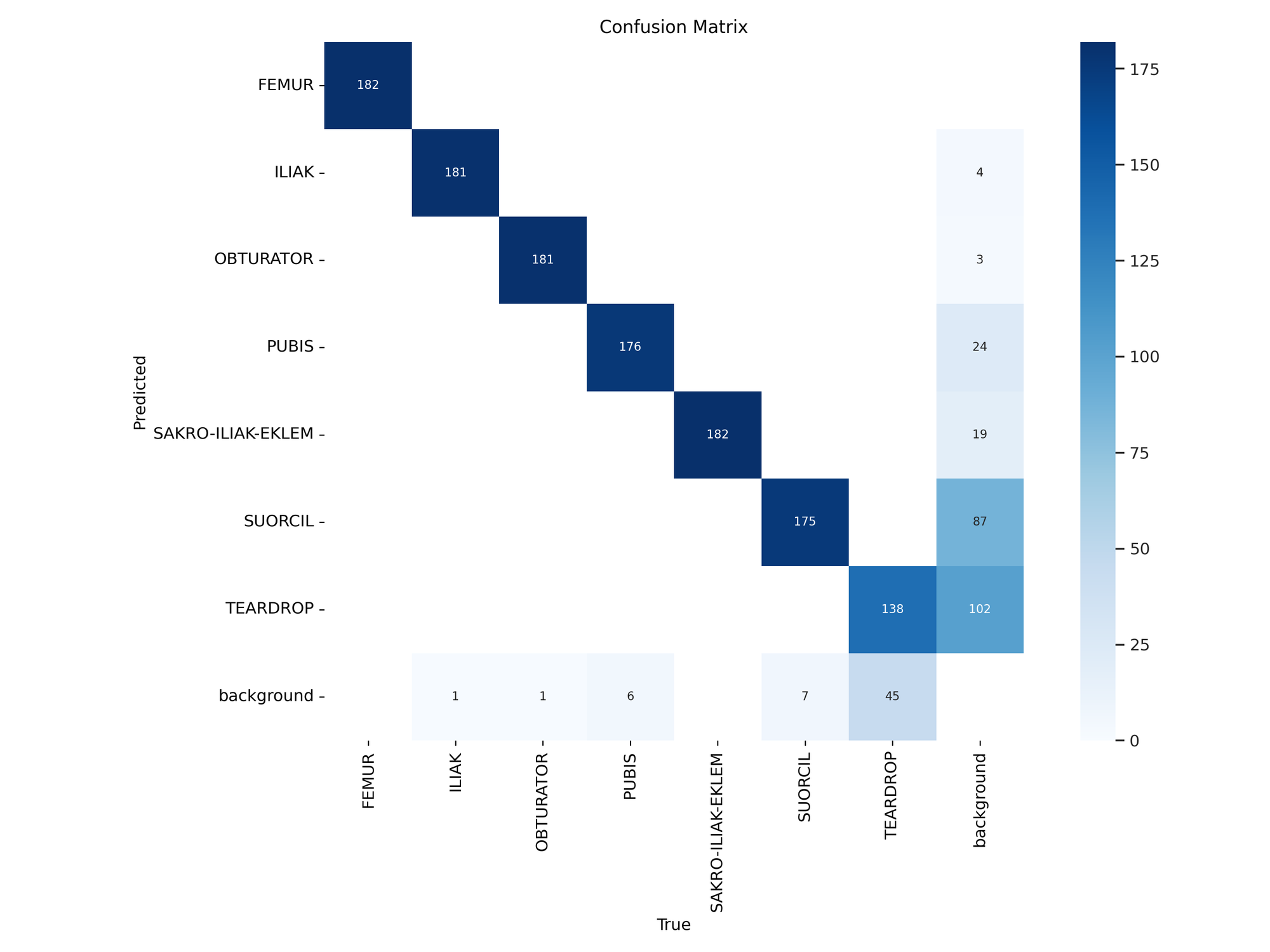

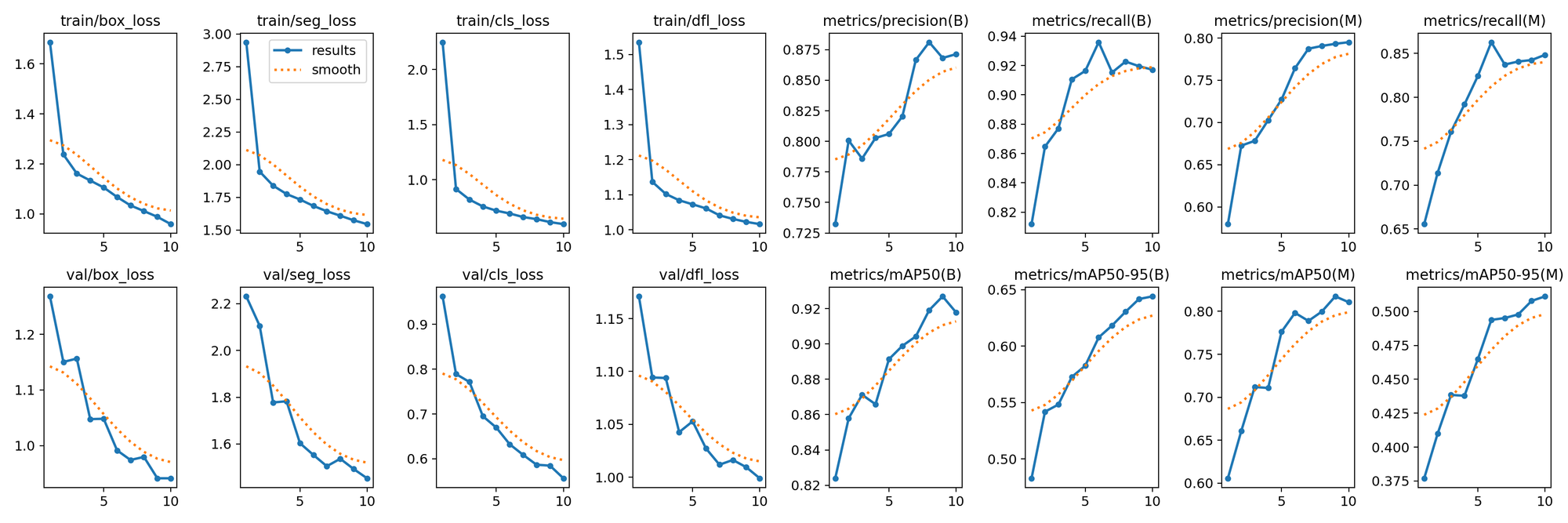

```
%cd {HOME}
!yolo task=segment mode=train model=yolo11s-seg.pt data={dataset.location}/data.yaml epochs=10 imgsz=640 plots=True
```

After training, you can examine the results, including the confusion matrix, training curves, and predictions on a validation batch, by running the following code:

```python
from IPython.display import Image as IPyImage

IPyImage(filename=f'{HOME}/runs/segment/train/confusion_matrix.png', width=600)
```

```python
IPyImage(filename=f'{HOME}/runs/segment/train/results.png', width=600)
```

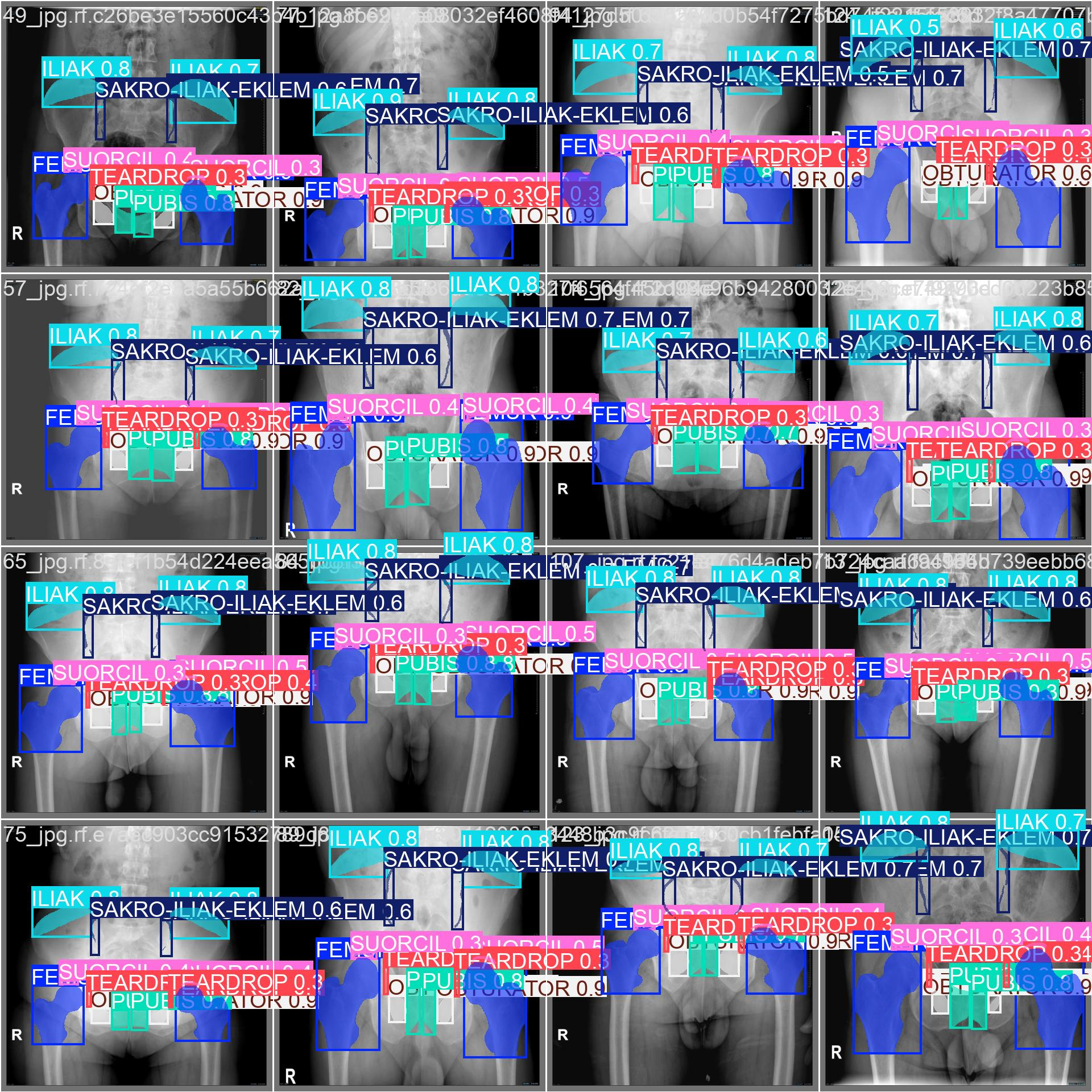

```python
IPyImage(filename=f'{HOME}/runs/segment/train/val_batch0_pred.jpg', width=600)
```
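The plots summarize per-epoch metrics that Ultralytics also writes to a results.csv file in the same run folder. The sketch below picks the epoch with the highest value in a chosen column. The column name used in the demo, metrics/mAP50-95(M) (mask mAP), is an assumption about the CSV header, so check it against your own file; header and cell whitespace are stripped as a precaution. The demo values are made up.

```python
import csv
import io
from typing import Tuple


def best_epoch(csv_text: str, column: str) -> Tuple[int, float]:
    """Return (epoch, value) for the row with the highest value in `column`."""
    reader = csv.reader(io.StringIO(csv_text))
    header = [h.strip() for h in next(reader)]  # strip padding around header names
    epoch_idx, metric_idx = header.index("epoch"), header.index(column)
    best = None
    for row in reader:
        if not row:
            continue  # skip blank lines
        epoch, value = int(float(row[epoch_idx])), float(row[metric_idx])
        if best is None or value > best[1]:
            best = (epoch, value)
    if best is None:
        raise ValueError("no data rows found")
    return best


# Made-up demo values, not real training results.
demo_csv = """epoch, metrics/mAP50-95(M)
1, 0.21
2, 0.35
3, 0.31
"""
print(best_epoch(demo_csv, "metrics/mAP50-95(M)"))  # (2, 0.35)
```

On a real run, you could pass Path(f"{HOME}/runs/segment/train/results.csv").read_text() as csv_text.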

Step #4: Inference with a Custom YOLO11 Model

Once you have finished training your YOLOv11 model, you'll have a set of trained weights ready for use. The best weights are saved to runs/segment/train/weights/best.pt in your project folder.
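If you train more than once, the runs are numbered (train, train2, ...), so it can help to locate the newest best.pt programmatically. A minimal sketch, assuming a runs/<task>/train*/weights/best.pt layout (with <task> being segment for a segmentation model); choosing the newest file by modification time is our own choice, and the helper name is hypothetical.

```python
import os
import tempfile
from pathlib import Path
from typing import Optional


def latest_best_weights(task_dir: Path) -> Optional[Path]:
    """Return the most recently modified train*/weights/best.pt under task_dir, if any."""
    candidates = list(task_dir.glob("train*/weights/best.pt"))
    return max(candidates, key=lambda p: p.stat().st_mtime, default=None)


with tempfile.TemporaryDirectory() as tmp:
    task_dir = Path(tmp) / "runs" / "segment"
    for i, run in enumerate(("train", "train2")):
        weights = task_dir / run / "weights" / "best.pt"
        weights.parent.mkdir(parents=True)
        weights.touch()
        os.utime(weights, (1_000_000 + i, 1_000_000 + i))  # make train2 the newest
    newest = latest_best_weights(task_dir)
    print(newest.relative_to(task_dir))  # train2/weights/best.pt (POSIX path separators)
```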

You can upload your model weights to Roboflow Deploy to run them on auto-scaling cloud infrastructure. You can also run the model on your own hardware with Roboflow Inference.

To upload model weights, use the following code:

```python
project.version(dataset.version).deploy(model_type="yolov11", model_path=f"{HOME}/runs/segment/train/")
```

It may take a few minutes for your model weights to be processed. Then, an API will be made available that you can use to run your model.

We can then use the Roboflow Python package to query our model:

```python
# Run inference on your model via a persistent, auto-scaling cloud API
import os
import random

import cv2
import supervision as sv

# load model
model = project.version(dataset.version).model

# choose a random test set image
test_set_loc = dataset.location + "/test/images/"
random_test_image = random.choice(os.listdir(test_set_loc))
print("running inference on " + random_test_image)

# confidence and overlap thresholds are given as percentages
pred = model.predict(test_set_loc + random_test_image, confidence=40, overlap=30).json()

detections = sv.Detections.from_inference(pred)
image = cv2.imread(test_set_loc + random_test_image)
```

Notice that some class names are in Latin and some in Turkish. Let's change them to Latin and English.

```python
# Map Turkish (and misspelled) class names to Latin/English equivalents
name_dictionary = {
    "SUORCIL": "SOURCIL",
    "SAKRO-ILIAK-EKLEM": "SACROILIAC-JOINT",
    "ILIAK": "ILIUM",
}

# Keep any class name that is not in the dictionary unchanged
detections.data["class_name"] = [
    name_dictionary.get(class_name, class_name)
    for class_name in detections.data["class_name"]
]
```

Now, we can visualize our model predictions:

```python
mask_annotator = sv.MaskAnnotator()
label_annotator = sv.LabelAnnotator(text_color=sv.Color.BLACK, text_position=sv.Position.CENTER)

# Draw masks first, then labels on top, on a copy of the original image
annotated_image = image.copy()
annotated_image = mask_annotator.annotate(annotated_image, detections=detections)
annotated_image = label_annotator.annotate(annotated_image, detections=detections)

sv.plot_image(annotated_image, size=(10, 10))
```
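Conceptually, a mask overlay blends a class color into the pixels covered by the mask and leaves the rest of the image untouched. The sketch below shows that idea with plain integer pixels; the helper names and the 0.5 opacity default are assumptions for illustration, not a description of how sv.MaskAnnotator is implemented internally.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def blend(pixel: Pixel, color: Pixel, opacity: float = 0.5) -> Pixel:
    """Alpha-blend `color` over `pixel`: out = (1 - opacity) * pixel + opacity * color."""
    return tuple(round((1 - opacity) * p + opacity * c) for p, c in zip(pixel, color))


def overlay(image: List[List[Pixel]], mask: List[List[int]], color: Pixel, opacity: float = 0.5):
    """Return a new image with `color` blended in only where mask == 1."""
    return [
        [blend(px, color, opacity) if m else px for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


image = [[(100, 100, 100), (200, 200, 200)]]
mask = [[1, 0]]
print(overlay(image, mask, color=(0, 0, 255)))  # [[(50, 50, 178), (200, 200, 200)]]
```

Lower opacity values keep more of the underlying X-ray visible, which matters when the anatomy beneath the mask is what you want to inspect.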

Conclusion

In this article, we've shown how to train the YOLO11 model for instance segmentation. The model started off with knowledge of the 80 COCO classes, but with a dataset from Roboflow Universe we brought it into the field of medicine, teaching it to segment anatomical regions in pelvis X-ray images. The full code is available in our Notebook.

At the time of writing, YOLO11 is the latest model in the YOLO family, offering improved precision at lower memory and runtime costs. I hope you now understand how to adapt it to your instance segmentation needs. Best of luck!