On this information, we discover how we will fine-tune a completely open-source, small imaginative and prescient language mannequin, Moondream2, utilizing a pc imaginative and prescient dataset to depend gadgets, a process at which GPT-4V has been inconsistent, and do it in a means so we will depend on the output to be used in a manufacturing software.

Imaginative and prescient language fashions (VLMs), typically known as multimodal fashions, have grown in recognition. With the arrival of applied sciences like CLIP, GPT-Four with Imaginative and prescient, and different developments, the power to question questions from visible inputs has turn into extra accessible than ever earlier than.

VLMs are a brand new frontier in machine studying and their efficiency is regularly enhancing as new breakthroughs happen. As we’ve discovered with GPT-Four with Imaginative and prescient and extra just lately with GPT-4o, there are some duties, like counting, the place VLMs wrestle. Whereas comprehensible, since excelling at each single process is tough given the constraints of coaching prices and inference speeds, the dearth of expert-ability makes it tough to make use of and depend on VLMs for manufacturing use circumstances.

Whereas some multimodal fashions are higher than others, many expertise points with outputting a constant, parsable format. This creates a problem for incorporating VLMs into purposes and methods.

What’s Moondream2

Moondream2 is an open-source small imaginative and prescient language mannequin with supply code on GitHub, made by “vikhyatk”. Though not a state-of-the-art mannequin, its capacity to run regionally on-device with affordable pace and accuracy makes it a compelling choice as a VLM, and price experimenting with to fine-tune to see if it really works to your use case. For comparability with different VLMs, it scores comparatively excessive. It even beats the just lately launched GPT-4o on VQAv2, which is spectacular contemplating the native, open-source and far smaller measurement mannequin of Moondream2.

|

Benchmark |

Moondream2 (5/8/2024) |

GPT-4o |

Gemini 1.5 Professional |

PaliGemma |

|

VQAv2 |

79.0% |

77.2% |

73.2% |

85.6%* |

|

TextVQA |

53.1% |

78.0% |

73.5% |

73.15%* |

🚧

*: PaliGemma statistics are remoted “single-task” mannequin outcomes, that means they have been fine-tuned to that particular take a look at.

In comparison with one other just lately launched multimodal open VLM by Google known as PaliGemma, this mannequin is a a lot smaller 1.86 billion, in comparison with the Eight billion of PaliGemma. GPT-4o and Gemini 1.5 Professional are suspected to be considerably bigger than these two, however their precise sizes are unknown.

Moondream2 Open-Supply Licensing

In contrast to some “open” fashions, together with PaliGemma, which have been scrutinized for restrictive phrases, Moondream2 is open-source underneath the Apache 2.Zero license, permitting industrial use as properly.

How you can Superb-tune Moondream2

For this information, we’ll modify a model of the fine-tuning pocket book offered by the creator, and enhance Moondream2’s efficiency when used to depend several types of US forex.

To get began, set up packages that we are going to want all through the method.

!pip set up torch transformers timm einops datasets bitsandbytes speed up roboflow supervision -q

Acquire Information for Superb-tuning Moondream2

One of many challenges that exist with creating any kind of machine studying mannequin is getting high quality coaching information.

Since we wish to fine-tune Moondream2 to depend cash and payments, we’ll use this dataset from Roboflow Universe. It’s additionally doable to construct and use your personal Roboflow mission to do that.

Whereas that is an object detection dataset, we’ll present how it’s doable to make use of it to finetune a VLM.

First, obtain the dataset from Universe:

from roboflow import Roboflow

from google.colab import userdata rf = Roboflow(api_key=userdata.get('ROBOFLOW_API_KEY'))

mission = rf.workspace("alex-hyams-cosqx").mission("cash-counter")

model = mission.model(8)

dataset = model.obtain("coco")Then, we create a helper class to make use of throughout fine-tuning. We use Supervision to import the dataset from the COCO format we downloaded it in.

from torch.utils.information import Dataset

import json

from PIL import Picture

import supervision as sv class RoboflowDataset(Dataset): def __init__(self, dataset_path, break up='prepare'): self.break up = break up sv_dataset = sv.DetectionDataset.from_coco( f"{dataset_path}/{break up}/", f"{dataset_path}/{break up}/_annotations.coco.json" ) self.dataset = sv_dataset def __len__(self): return len(self.dataset) # ... different strategies listed under (full code in Colab pocket book)

Then, we get to the necessary step of defining our dataset. On this implementation of finetuning, the dataset is learn from an object, the place picture is the dataset picture, the array qa incorporates an object with a query and reply, which can outline the immediate/response pair that we want to fine-tune towards.

def __getitem__(self, idx): CLASSES = ["dime", "nickel", "penny", "quarter", "fifty", "five", "hundred", "one", "ten", "twenty"] # Retrieve the picture/annotation information from the Supervision DetectionDataset image_name, annotations = record(self.dataset.annotations.gadgets())[idx] picture = self.dataset.photographs[image_name] # Finds the quantity of every kind of forex there may be from the variety of annotations there are cash = {} for class_idx, money_type in enumerate(CLASSES): depend = len(annotations[annotations.class_id == (class_idx+1)]) # Counts the variety of annotations with that class if depend == 0: proceed; cash[money_type] = depend # Outline the immediate/reply immediate = f"What number of of every kind of the forex ({', '.be part of(CLASSES)}) are there? Reply in JSON format with the forex kind as the important thing and a integer depend as the worth." reply = json.dumps(cash, indent=2) # Codecs the JSON and makes it the reply # Return as the correct format return { "picture": Picture.fromarray(picture), "qa": [ { "question": prompt, "answer": answer, } ] }The next code retrieves the info and creates the dataset lessons for every break up of our information.

datasets = { "prepare": RoboflowDataset(dataset.location,"prepare"), "val": RoboflowDataset(dataset.location,"legitimate"), "take a look at": RoboflowDataset(dataset.location,"take a look at"),

}Preliminary Testing of Moondream2

Now that we’ve our dataset, we will start testing the way it performs with no fine-tuning. We are able to initialize Moondream2 by working the next:

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM DEVICE = "cuda"

FLASHATTENTION = "flash_attention_2" # "flash_attention_2" if A100, RTX 3090, RTX 4090, H100, None if CPU

DTYPE = torch.float32 if DEVICE == "cpu" else torch.float16 # CPU would not help float16

MD_REVISION = "2024-04-02" tokenizer = AutoTokenizer.from_pretrained("vikhyatk/moondream2", revision=MD_REVISION)

moondream = AutoModelForCausalLM.from_pretrained( "vikhyatk/moondream2", revision=MD_REVISION, trust_remote_code=True, attn_implementation=FLASHATTENTION, torch_dtype=DTYPE, device_map={"": DEVICE}

)

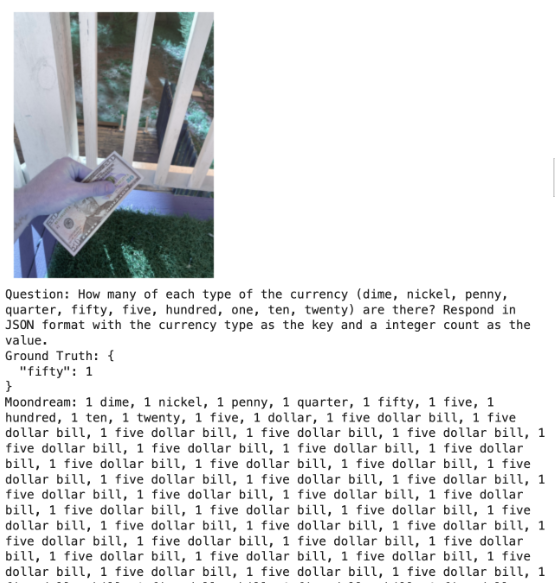

Then, we go in an image of a greenback invoice with the immediate What number of of every kind of forex (dime, nickel, penny, quarter, fifty, 5, hundred, one, ten, twenty) are there? Reply in JSON format with the forex kind as the important thing and an integer depend as the worth.:

pattern = datasets['test'][0] md_answer = moondream.answer_question( moondream.encode_image(pattern['image']), pattern['qa'][0]['question'], tokenizer=tokenizer,

) sv.plot_image(pattern['image'], (3,3))

print('Query:', pattern['qa'][0]['question'])

print('Floor Reality:', pattern['qa'][0]['answer'])

print('Moondream:', md_answer)It returned a really unhelpful, damaged, and incorrect reply:

Different instance responses from different photographs from the dataset have been additionally not significantly useful, appropriate, or constant:

[0.39, 0.28, 0.67, 0.52]There may be one silver coin within the picture, which is a silver greenback coin. The coin is silver in colour and includes a profile of a person on it. The coin is value one greenback.01 dime, 1 nickel, 1 penny, 1 quarter, 1 fifty, 1 5, 1 hundred, 1 ten, 1 twenty, 1 greenback invoice…

After evaluating the whole take a look at break up of the dataset, it achieved roughly 0%, with not one of the responses assembly the anticipated floor fact output.

Superb-Tuning Moondream2 for Counting Objects

Subsequent, we finetune Moondream2 by configuring hyperparameters. Right here, we set the variety of epochs to 2 since our personal testing corroborated that any much less/extra would result in underfitting/overfitting.

The batch measurement was modified to make the most of a extra highly effective GPU that occurred to be out there. For a T4 you would possibly use in Google Colab, we suggest 6.

The remainder of the parameters have been left default to the creator’s implementation.

# Variety of instances to repeat the coaching dataset. Rising this may occasionally trigger the mannequin to overfit or

# lose generalization as a consequence of catastrophic forgetting. Reducing it could trigger the mannequin to underfit.

EPOCHS = 1 # Variety of samples to course of in every batch. Set this to the very best worth that does not trigger an

# out-of-memory error. Lower it when you're working out of reminiscence. Batch measurement Eight at the moment makes use of round

# 15 GB of GPU reminiscence throughout fine-tuning.

BATCH_SIZE = 24 # Variety of batches to course of earlier than updating the mannequin. You should utilize this to simulate a better batch

# measurement than your GPU can deal with. Set this to 1 to disable gradient accumulation.

GRAD_ACCUM_STEPS = 1 # Studying price for the Adam optimizer. Must be tuned on a case-by-case foundation. As a normal rule

# of thumb, enhance it by 1.Four instances every time you double the efficient batch measurement.

#

# Supply: https://www.cs.princeton.edu/~smalladi/weblog/2024/01/22/SDEs-ScalingRules/

#

# Observe that we linearly heat the educational price up from 0.1 * LR to LR over the primary 10% of the

# coaching run, after which decay it again to 0.1 * LR over the past 90% of the coaching run utilizing a

# cosine schedule.

LR = 3e-5 # Whether or not to make use of Weights and Biases for logging coaching metrics.

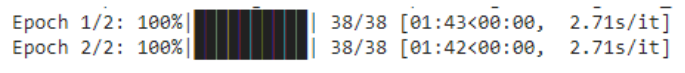

USE_WANDB = FalseAs soon as we kick off coaching, the coaching time will rely extremely on the system, primarily the GPU, that’s out there to you.

Evaluating Superb-tuned Moondream2 Outcomes

Now that we’ve accomplished the coaching course of, we will consider the efficiency of the fine-tuned mannequin utilizing the identical take a look at information which was not a part of the fine-tuned information.

moondream.eval() appropriate = 0

for i, pattern in enumerate(datasets['test']): md_answer = moondream.answer_question( moondream.encode_image(pattern['image']), pattern['qa'][0]['question'], tokenizer=tokenizer, ) if md_answer == pattern['qa'][0]['answer']: appropriate += 1 if i < 21: sv.plot_image(pattern['image'], (3,3)) print('Floor Reality:', pattern['qa'][0]['answer']) print('Moondream:', md_answer) print(f"nnAccuracy: {appropriate / len(datasets['test']) * 100:.2f}%")

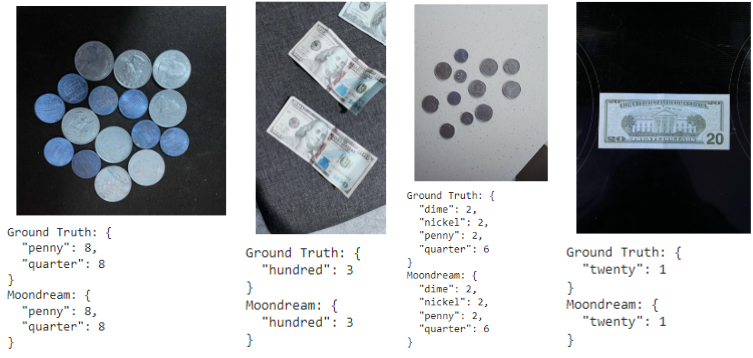

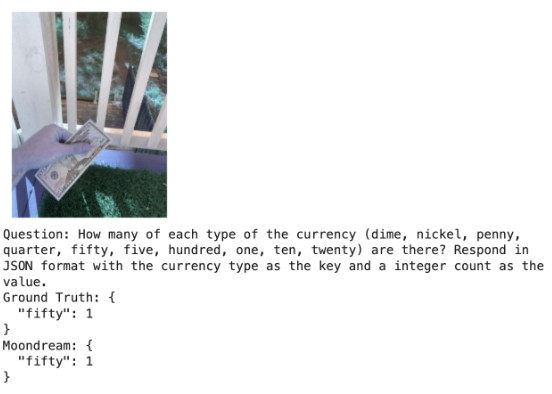

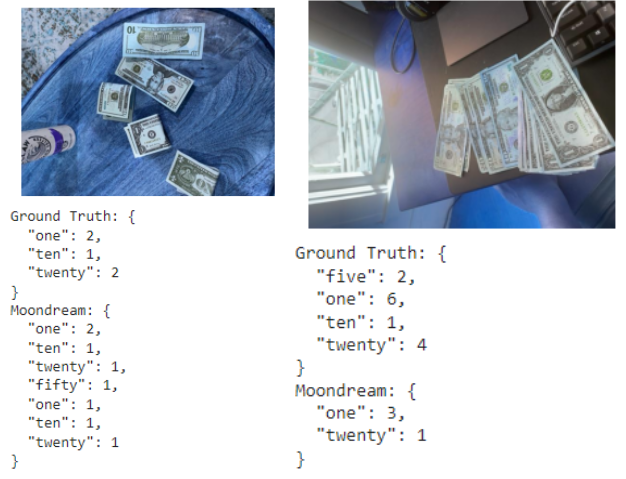

Wanting on the samples, we see a way more constant, predictable, and correct output reply and output format.

Superb-tuned Moondream2 answered our very first take a look at picture with a way more correct reply:

Nevertheless, there are circumstances by which the fine-tuned model of Moondream nonetheless will get counts incorrect.

Total, throughout the identical testing dataset break up, we obtained an accuracy of 85.50%.

Conclusion

By way of this information, we have been capable of make the most of a pc imaginative and prescient dataset so as to finetune a imaginative and prescient language mannequin to provide extra constant and correct ends in a format that makes it straightforward to parse to be used in manufacturing purposes. This brings VLMs from a degree of an fascinating experiment to a way more helpful element to a bigger pc imaginative and prescient system.