This article was contributed to the Roboflow blog by Mason, a high school student interested in the technologies that drive the modern world. He plans to pursue mathematics and computer science.

Object trackers can play an important role in sports game analysis, replays, and live breakdowns. However, these trackers aren't limited to traditional sports. They can also be useful for many other activities and games.

One niche activity that benefits from this technology is competitive robotics. These systems can greatly improve strategy and opponent analysis, giving teams an edge over others. In this article, we cover how object detection models, object segmentation, and object tracking can be applied to map out robot paths in the FIRST Robotics Competition (FRC).

Project Overview

Specifically, this project runs robot object detection on a video the user uploads. Once detection has finished running on the frames, each robot is mapped from the 3D field to a 2D top-down diagram of the field.

To calculate this, we'll use field segmentation and simple mathematics. Once finished, the positions are saved to a JSON file so they can be analyzed later.

For this project, I used Node.js with several packages, as well as a bit of Python to connect to my Roboflow project.

In this guide, we'll walk through the high-level steps of the project. Full code for the project is available on GitHub.

Step #1: Build robot detection model

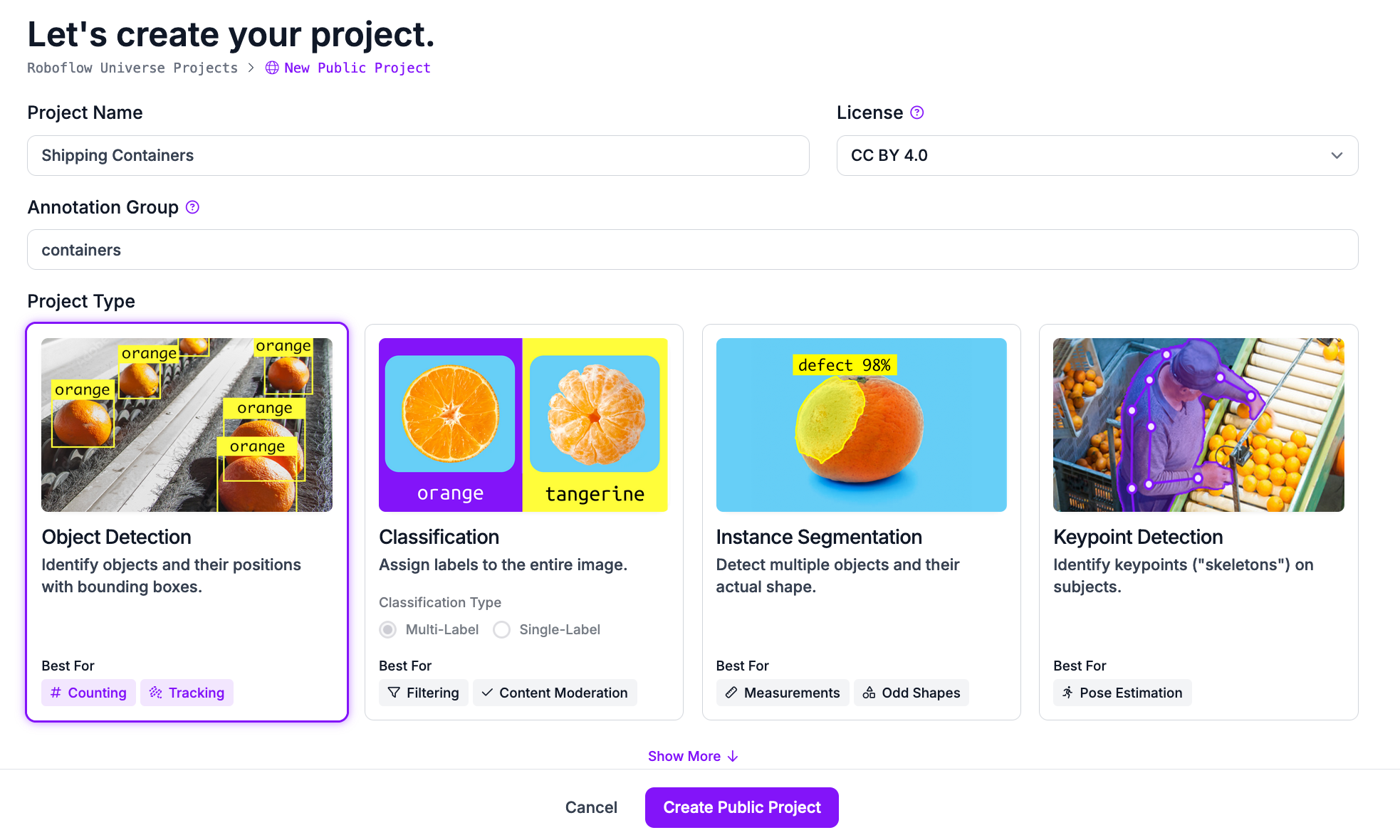

First, sign up for Roboflow and create an account.

Next, go to your workspace and create a new object detection project. This project will be used to detect robots in the video frames. Customize the project name and annotation group to your liking.

Next, upload images to annotate. For FRC and many other activities, videos of past competitions can be found on YouTube. Roboflow provides a YouTube video downloader, greatly reducing the time spent finding images. Make sure to upload footage from several different events and competitions so the model is more accurate across different environments. Then add the images to the dataset for annotation.

Next, add classes for the different types of objects you want the model to detect. For FRC, the class names "Red" and "Blue" work well for differentiating robots on the red alliance and the blue alliance.

Now annotation can begin. With large datasets, it may be helpful to assign annotation jobs to team members; Roboflow has this feature built in. However, you can also assign all images to yourself.

Using Roboflow's annotation tools, carefully label the objects and assign the appropriate class. For FRC, I found it best to label the robot bumpers instead of the entire robot. This should reduce overfitting, since robot shapes change every year. It also allows the model to be reused for other projects, such as automatic robot avoidance or autonomous defense.

Once we have our images and annotations, we can generate a dataset version of the labeled images. Each version is unique and is associated with a trained model, so you can try out different augmentation setups.

Step #2: Train robot detection model

Now we can train a model on the dataset. Roboflow offers several training options. You can train on Roboflow itself, which enables features such as compatibility with Roboflow's JavaScript API. However, this method requires training credits.

Alternatively, Roboflow provides Google Colab notebooks for training a wide variety of models. In this case, I used this Colab notebook. These notebooks provide clear step-by-step directions and explanations, and once training is complete, they make it easy to validate the model and upload it back to Roboflow.

Step #3: Build field segmentation model

Once the robot detection model is trained accurately, we can move on to the field segmentation model. This step lets us quickly convert between 3D world coordinates and 2D image coordinates later on.

Through testing, I found full-field segmentation to be difficult and unreliable. Instead, targeting the central taped region of the field proved much more reliable.

For segmentation, I found that fewer images are needed to get reliable results, since most fields look very similar. However, because FRC fields change every year, it is important to use images of the current field, or else the tape lines won't match up.

Step #4: Train field segmentation model

After annotating with Roboflow's segmentation tools, we train the model once again. The Google Colab notebook that worked well for me can be found here.


Step #5: Segment the field for coordinate mapping

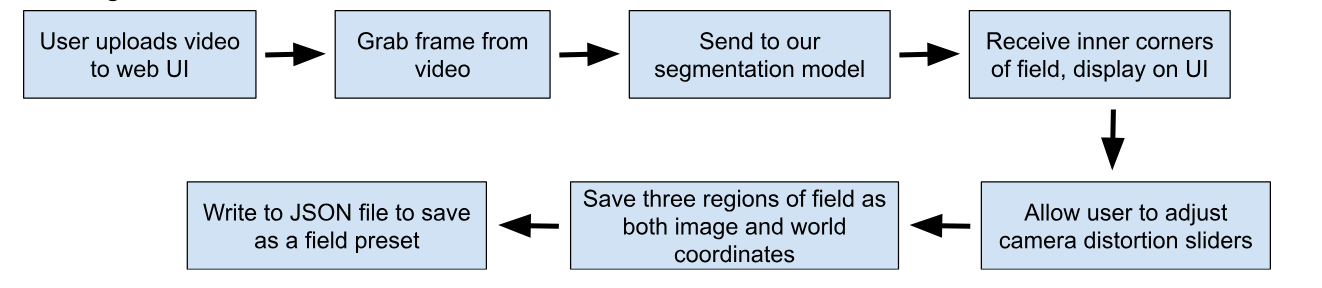

At this point, we can begin writing the project's logic. The first step is to segment the field, since this is what later lets us map the robot detection coordinates. For segmentation, the process I chose goes as follows:

The web UI adds complexity that isn't necessary for the project. However, I chose to add it to help inexperienced users understand how to use the project. Using Express and Socket.io, I was able to serve the user an HTML page and exchange data between the project's front end and back end.

For this article, we'll stick to the bare bones of how the system works. First, using the Node.js ffmpeg-extract-frames package, we can grab one frame from the video:

```javascript
import extractFrames from 'ffmpeg-extract-frames';

// Millisecond timestamp of the frame to grab
const ms = 0;

// extractFrames returns a promise; top-level await works in an ES module
await extractFrames({
  input: './path/to/video.mp4',
  output: './path/to/destination.jpg',
  offsets: [ms]
});
```

This saves the specified frame of the video to your destination. Now we can use the Axios package to send the image to the Roboflow model we trained. First, get your private Roboflow API key from the Settings panel -> API Keys page, and keep it private. The image variable should hold the video frame we just saved, which you can read with Node.js' filesystem API:

```javascript
import fs from 'fs';

// Read the saved frame as a base64 string for the Roboflow API
const IMAGE = fs.readFileSync('/path/to/fieldframe.jpg', {
  encoding: 'base64'
});
```

All that's left is to set the URL variable to the URL of the segmentation model you trained. For example, I used https://detect.roboflow.com/frc-field/1 as my model.

axios({ methodology: 'POST', url: URL, params: { api_key: API_KEY }, knowledge: IMAGE, headers: { 'Content material-Sort': 'software/x-www-form-urlencoded' }

}).then(perform (response) {

// Segmentation factors are acquired right here console.log(response.knowledge);

}).catch(perform (error) { // Within the case of an error: console.log(error.message);

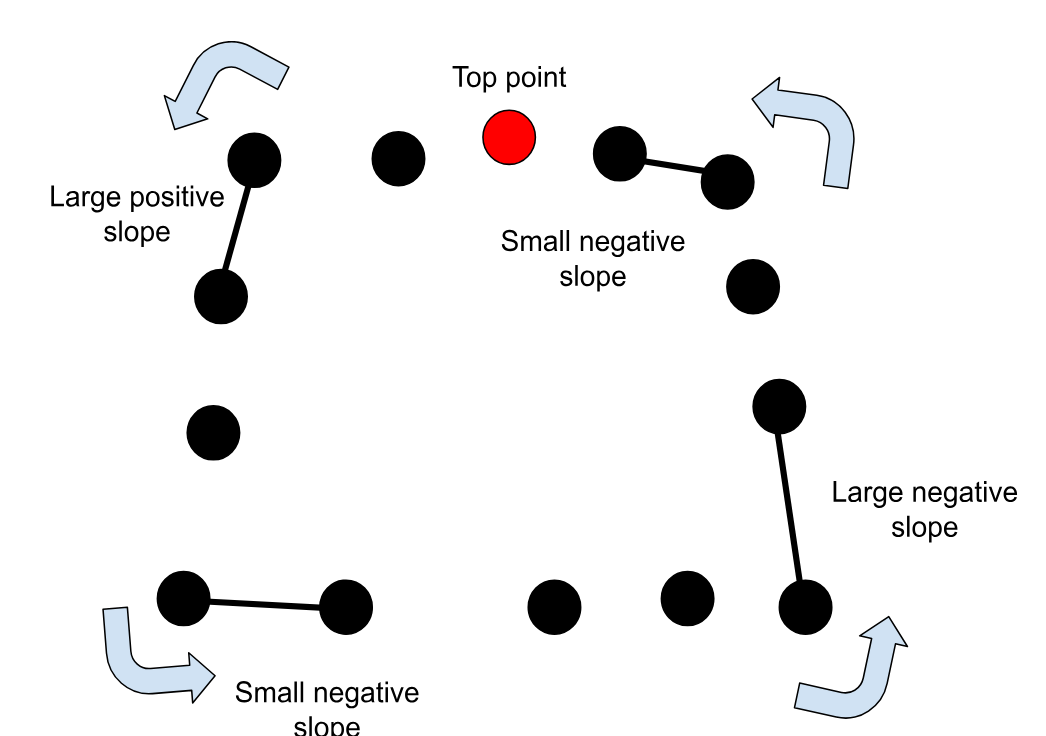

});Now, we obtain an array with factors alongside the sting of the sphere. Discovering which of the factors are the 4 corners is so simple as checking the slope to the subsequent level. I began by sorting the factors by top and beginning on the high level. Within the array of factors Roboflow returns, the factors are sorted alongside the outside of the segmented area.

As we move counterclockwise from the top point, the first time the slope from one point to the next exceeds a constant (for example, 2), we know that point is likely a corner. The same process can be repeated to find the other corners, working around the outline.
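A minimal sketch of this slope test, assuming the segmentation points arrive as an array of `{ x, y }` objects in image coordinates (y grows downward) ordered around the outline. The helper names and the default threshold of 2 are illustrative, not the project's code, and this version wraps indices with modulo arithmetic instead of duplicating the array.

```javascript
// Assumes `points` is the segmentation outline as { x, y } image
// coordinates (y grows downward), ordered around the perimeter.

// Index of the highest point on screen (smallest y)
function topPointIndex(points) {
  let top = 0;
  for (let i = 1; i < points.length; i++) {
    if (points[i].y < points[top].y) top = i;
  }
  return top;
}

// Absolute slope from a to b; vertical segments count as infinitely steep
function absSlope(a, b) {
  const dx = b.x - a.x;
  const dy = b.y - a.y;
  if (dx === 0) return dy === 0 ? 0 : Infinity;
  return Math.abs(dy / dx);
}

// Walk from `start` in direction `step` (+1 or -1), wrapping around the
// outline, and return the first index whose slope to the next point
// exceeds `threshold`. Returns -1 if no point qualifies.
function findCorner(points, start, step, threshold = 2) {
  const n = points.length;
  const wrap = i => ((i % n) + n) % n;
  for (let k = 0; k < n; k++) {
    const i = wrap(start + k * step);
    if (absSlope(points[i], points[wrap(i + step)]) > threshold) return i;
  }
  return -1;
}
```

Calling `findCorner(outline, topPointIndex(outline), 1)` and `findCorner(outline, topPointIndex(outline), -1)` gives the two top corners; real outlines are noisy, so the threshold may need tuning.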

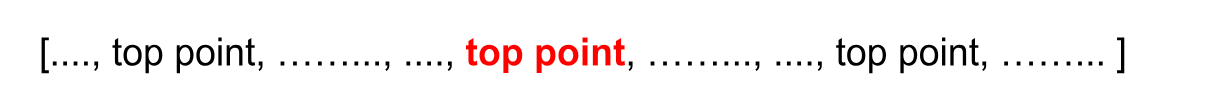

If the top point is not the first element in the array, a simple forward scan will miss some points. A quick solution is to create a new array that repeats the original array three times and start from the second instance of the top point.

Now we can safely move forward and backward through the larger array, knowing every point will be accounted for.
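A minimal sketch of the duplication trick, assuming the same `{ x, y }` outline format as above. The name `tripleFromTop` is hypothetical; it returns the tripled array together with the index of the top point in the middle copy.

```javascript
// Assumes `points` is the segmentation outline as { x, y } image coordinates.
// Repeats the outline three times and returns the index of the top point
// (smallest y) in the middle copy, so any walk of up to points.length steps
// forward or backward from `start` stays inside the array.
function tripleFromTop(points) {
  let top = 0;
  for (let i = 1; i < points.length; i++) {
    if (points[i].y < points[top].y) top = i;
  }
  const tripled = [...points, ...points, ...points];
  return { tripled, start: points.length + top };
}

// Example: look one step backward and forward from the top point, no bounds checks
const outline = [{ x: 0, y: 50 }, { x: 50, y: 0 }, { x: 100, y: 50 }, { x: 50, y: 100 }];
const { tripled, start } = tripleFromTop(outline);
const before = tripled[start - 1]; // { x: 0, y: 50 }
const after = tripled[start + 1];  // { x: 100, y: 50 }
```

The cost is three times the memory of the outline, which is negligible for a segmentation polygon.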

Once we find the corners of the inner region, we can predict where the real field corners are. By following the horizontal lines formed by the inner corners, we can find the outer corners on either side. Scaling along those lines by multiplication keeps each estimate on the same line, so the perspective stays intact.
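A minimal sketch of extending an inner edge outward by a multiplication factor, assuming corners are `{ x, y }` pixel coordinates. The function name, the example corners, and the factor of 2 are hypothetical; in practice the factor would come from the field's real dimensions or be tuned by hand.

```javascript
// Returns the point on the line through `from` and `to` whose distance from
// `from` is `factor` times the distance from `from` to `to`.
// factor > 1 extends the segment beyond `to`.
function extendAlongLine(from, to, factor) {
  return {
    x: from.x + (to.x - from.x) * factor,
    y: from.y + (to.y - from.y) * factor,
  };
}

// Hypothetical inner (taped-region) corners on the top edge, in pixels
const innerTopLeft = { x: 400, y: 200 };
const innerTopRight = { x: 800, y: 200 };

// Hypothetical factor of 2: each outer corner lies one inner-width beyond the inner corner
const outerTopLeft = extendAlongLine(innerTopRight, innerTopLeft, 2);  // { x: 0, y: 200 }
const outerTopRight = extendAlongLine(innerTopLeft, innerTopRight, 2); // { x: 1200, y: 200 }
```

Because the result is always a multiple of the edge direction, the new corner stays on the projected edge line; the same call works for the bottom edge.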

However, because different competitions use different cameras, the distortion of the field will vary. With fisheye cameras, you may need to tune the new estimated points (red) by sliding them up or down depending on the camera used:

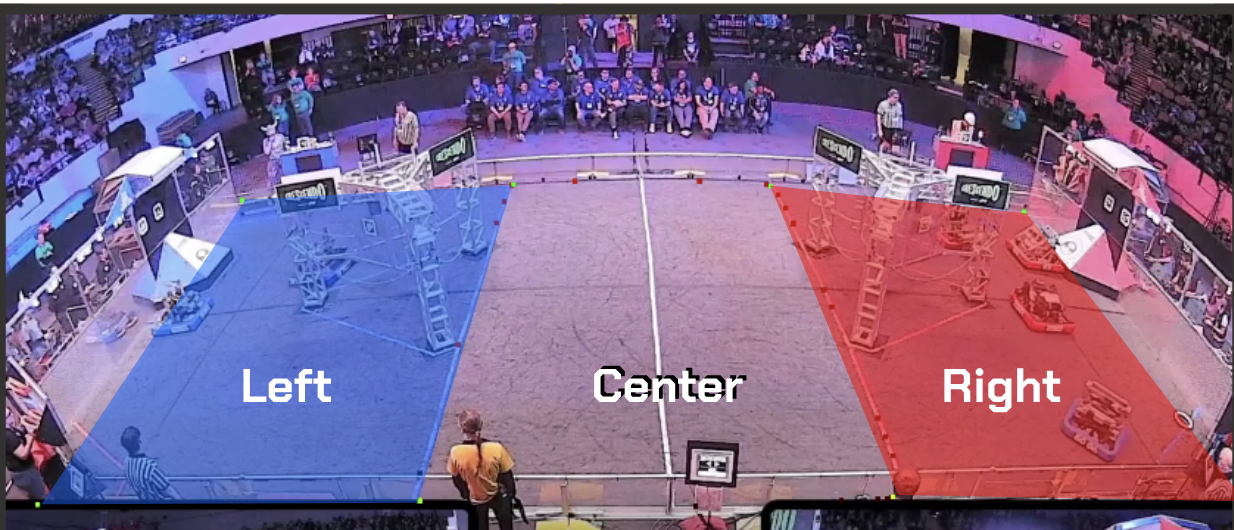

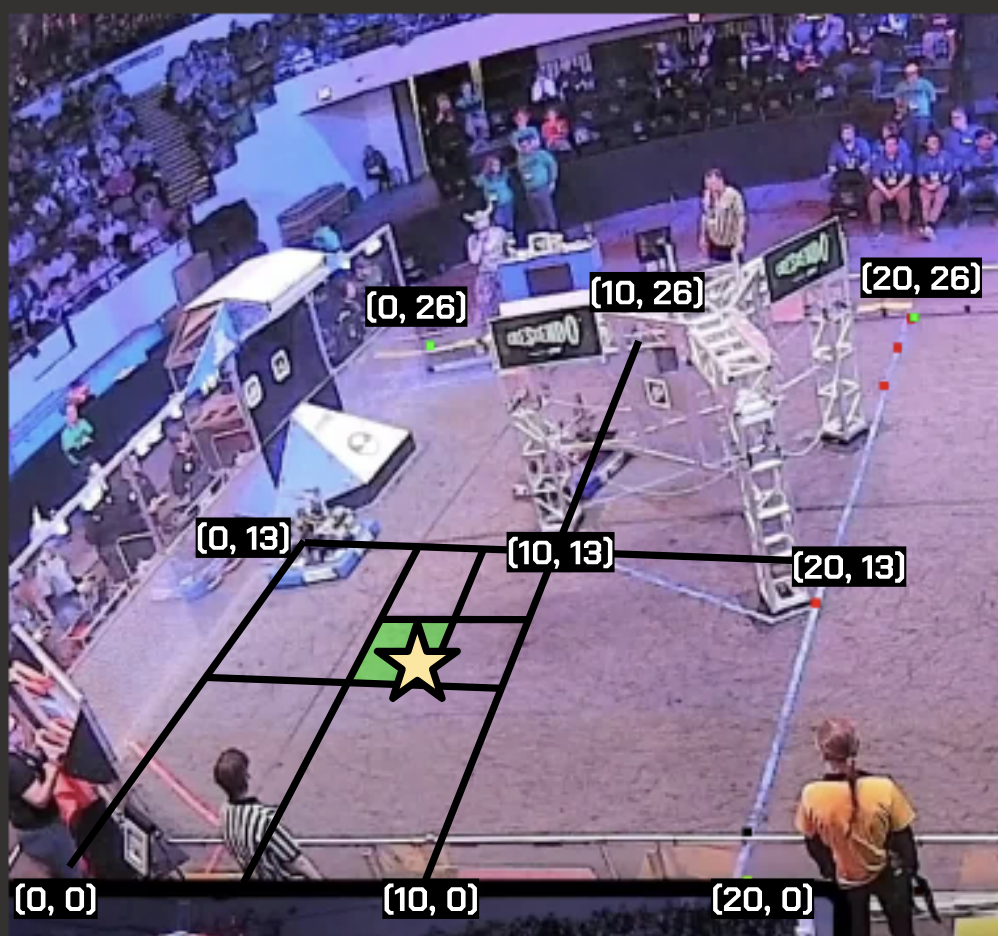

After this, the field is roughly divided into three sections: left, center, and right.

At this point, we know both the screen coordinates and the world coordinates of each section, because the tape lines that divide the sections are at known positions, roughly 19 feet from the edge of the field. So, if we can find the robots' positions on screen, we have enough information to approximate their world positions.

Step #6: Detect robots from video

Now we can send the full match videos for detection. For this step, I found it easiest to use Python with the Roboflow Python package. The following code sends an entire video for object detection.

Make sure to set the API key to the key obtained earlier, the project name to your object detection project, and the version to the model version you are using (the trained iteration).

You can also tune properties such as the confidence and overlap thresholds, set below to 50 and 25 respectively. Once the input video path and output JSON path are set, you can run this code to save the robot detections to a JSON file.

```python
import json

from roboflow import Roboflow

rf = Roboflow(api_key="API_KEY")
project = rf.workspace().project("PROJECT_NAME")
model = project.version("PROJECT_VERSION").model

# Confidence and overlap (IoU) thresholds
model.confidence = 50
model.iou_threshold = 25

# Submit the whole video for hosted inference, sampled at 15 frames per second
job_id, signed_url, expire_time = model.predict_video(
    "path/to/video.mp4",
    fps=15,
    prediction_type="batch-video",
)

# Block until the video job finishes, then save the results as JSON
results = model.poll_until_video_results(job_id)
parsed = json.dumps(results, indent=4, sort_keys=True)

write_path = "path/to/outputfile.json"
with open(write_path, "w") as f:
    f.write(parsed)
```

Step #7: Estimate detections in world coordinates

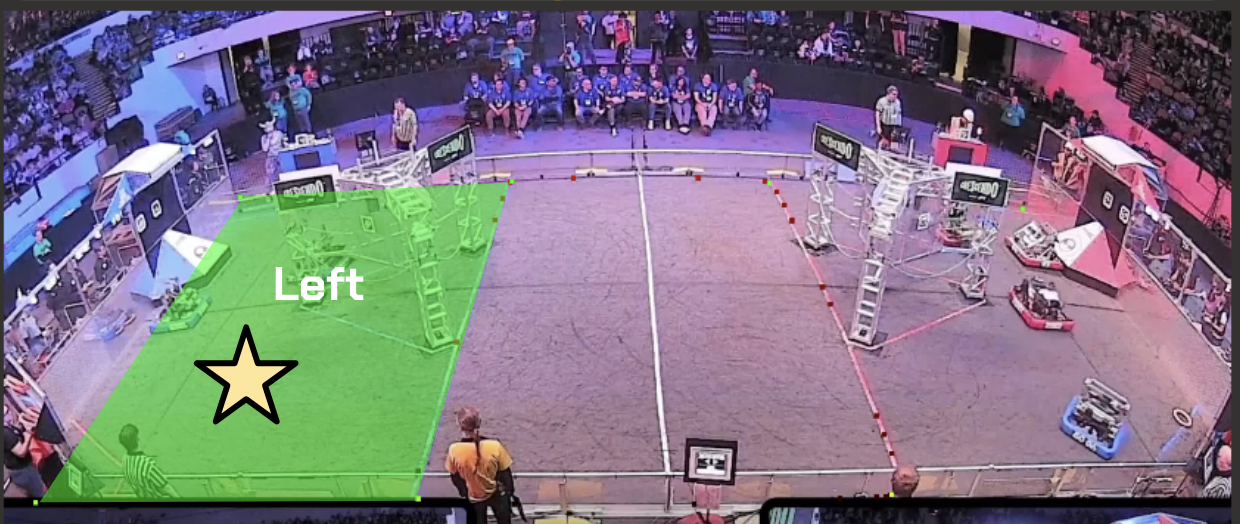

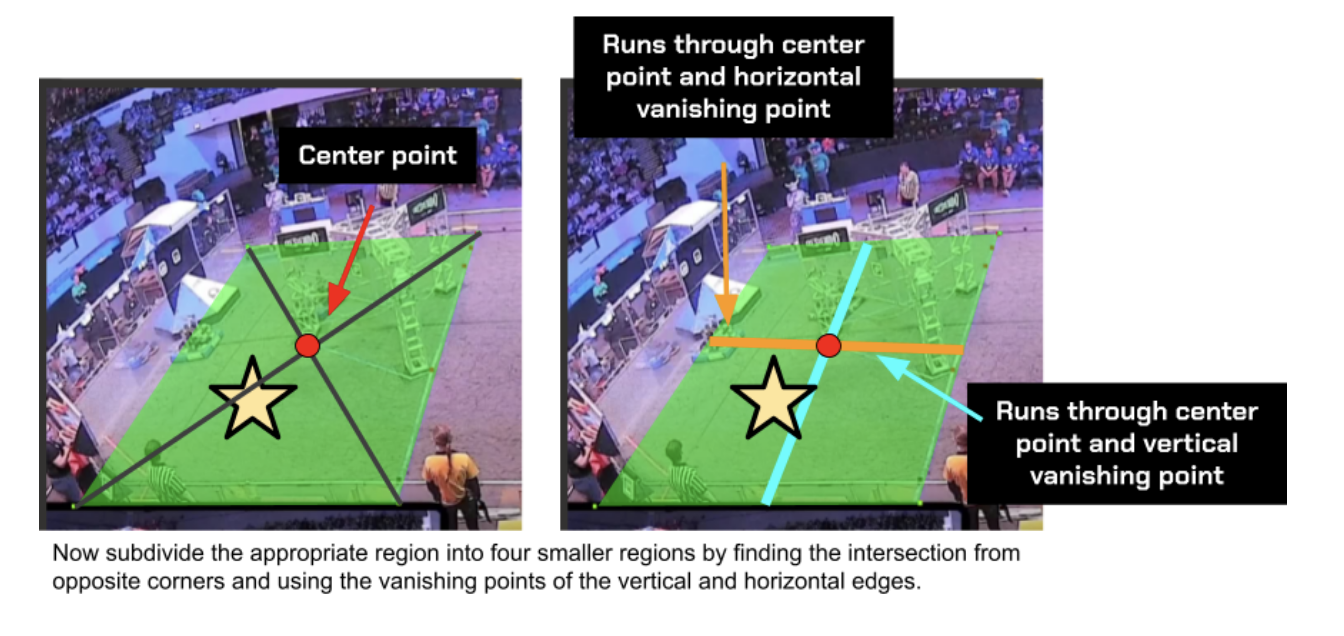

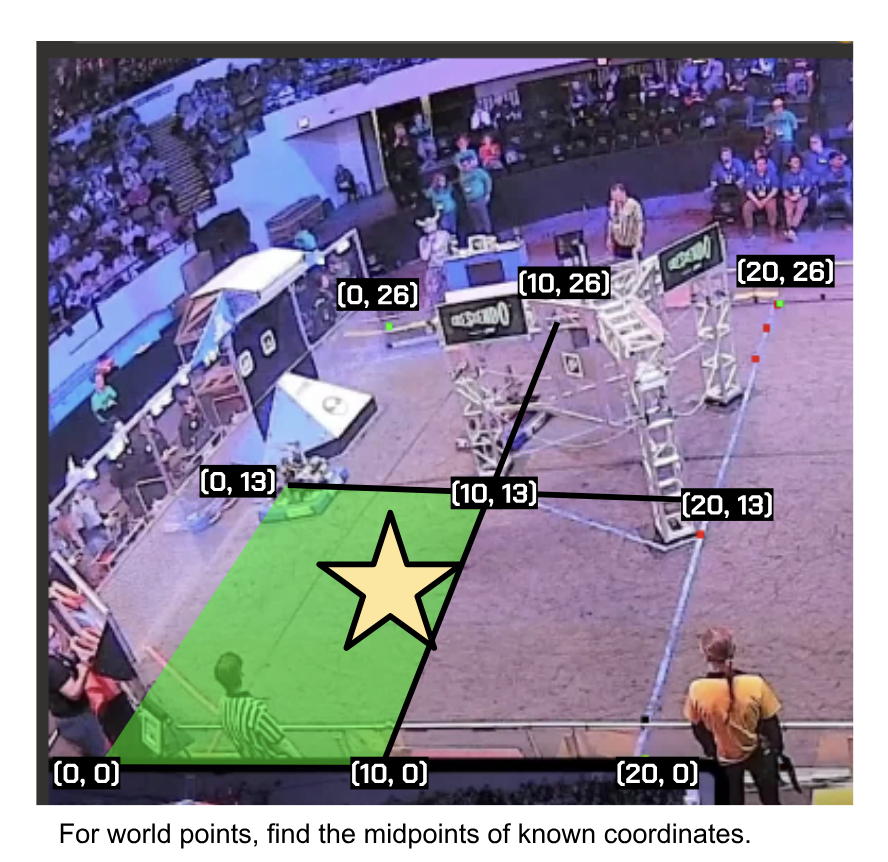

Now that we have the screen coordinates for each detection, we can run an algorithm that uses our three field regions to estimate world positions. While more sections or more complex equations might be more accurate, a simple approach that works very well here is to find which region the robot is in and repeatedly subdivide that region.

After several iterations, we have a good estimate of where the robot is in world coordinates. Take the example with the star:

Divide the region into four quadrants using the midpoints of its edges, then find which of the four quadrants the object is in. Here, the star is in the lower-left quadrant. Using this quadrant as the new region, repeat the process: subdivide it into four, find which one contains the center of the object, and compute the corresponding midpoints of the world coordinates.

After a few iterations (I used 7), accuracy barely changes. We can take the bottom point of the last quadrant the object is in, and we now have the object's world position. All that's left is to implement a tracking algorithm.
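A minimal sketch of this subdivision, assuming one region is described by its four screen corners and its four matching world corners, both as `{ x, y }` objects ordered `[topLeft, topRight, bottomRight, bottomLeft]`, and that the detection's screen point (for example, a bounding-box center) is already known. All names are hypothetical. Midpoints in screen space are not exact projections of world-space midpoints under perspective, so the result is an approximation; this sketch returns the center of the final world quadrant rather than its bottom point.

```javascript
// Assumes quads are [topLeft, topRight, bottomRight, bottomLeft] of { x, y }.

// Midpoint of two points
function mid(a, b) {
  return { x: (a.x + b.x) / 2, y: (a.y + b.y) / 2 };
}

// Average of a quad's four corners
function centerOf(q) {
  return mid(mid(q[0], q[1]), mid(q[2], q[3]));
}

// True if p is inside or on the boundary of convex quad q
function insideQuad(p, q) {
  let sign = 0;
  for (let i = 0; i < 4; i++) {
    const a = q[i];
    const b = q[(i + 1) % 4];
    const cross = (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
    if (cross === 0) continue;
    const s = Math.sign(cross);
    if (sign === 0) sign = s;
    else if (s !== sign) return false;
  }
  return true;
}

// Split a quad into four sub-quads ordered TL, TR, BR, BL,
// each keeping the same [topLeft, topRight, bottomRight, bottomLeft] order
function splitQuad([tl, tr, br, bl]) {
  const top = mid(tl, tr);
  const right = mid(tr, br);
  const bottom = mid(br, bl);
  const left = mid(bl, tl);
  const center = mid(top, bottom);
  return [
    [tl, top, center, left],
    [top, tr, right, center],
    [center, right, br, bottom],
    [left, center, bottom, bl],
  ];
}

// Index of the sub-quad containing p; falls back to the nearest sub-quad center
function pickQuadrant(p, quads) {
  const hit = quads.findIndex(q => insideQuad(p, q));
  if (hit !== -1) return hit;
  let best = 0;
  let bestDist = Infinity;
  quads.forEach((q, i) => {
    const c = centerOf(q);
    const d = Math.hypot(c.x - p.x, c.y - p.y);
    if (d < bestDist) {
      bestDist = d;
      best = i;
    }
  });
  return best;
}

// Approximate the world position of screen point p inside one field region
function screenToWorld(p, screenQuad, worldQuad, iterations = 7) {
  let s = screenQuad;
  let w = worldQuad;
  for (let n = 0; n < iterations; n++) {
    const screenSubs = splitQuad(s);
    const k = pickQuadrant(p, screenSubs);
    s = screenSubs[k];
    w = splitQuad(w)[k]; // same quadrant index in world space
  }
  return centerOf(w);
}
```

Each iteration halves the quadrant in both directions, so after 7 iterations the world cell is 1/128 of the region's width and height, which is why further iterations change little.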

Step #8: Implement an object tracker

There are many ways to implement an object tracker. They range from using distances from the previous detections to accounting for velocity and object size.

For this project, I found that a tracker using only distances worked extremely well. For improved accuracy, I tracked each alliance (red and blue) separately.

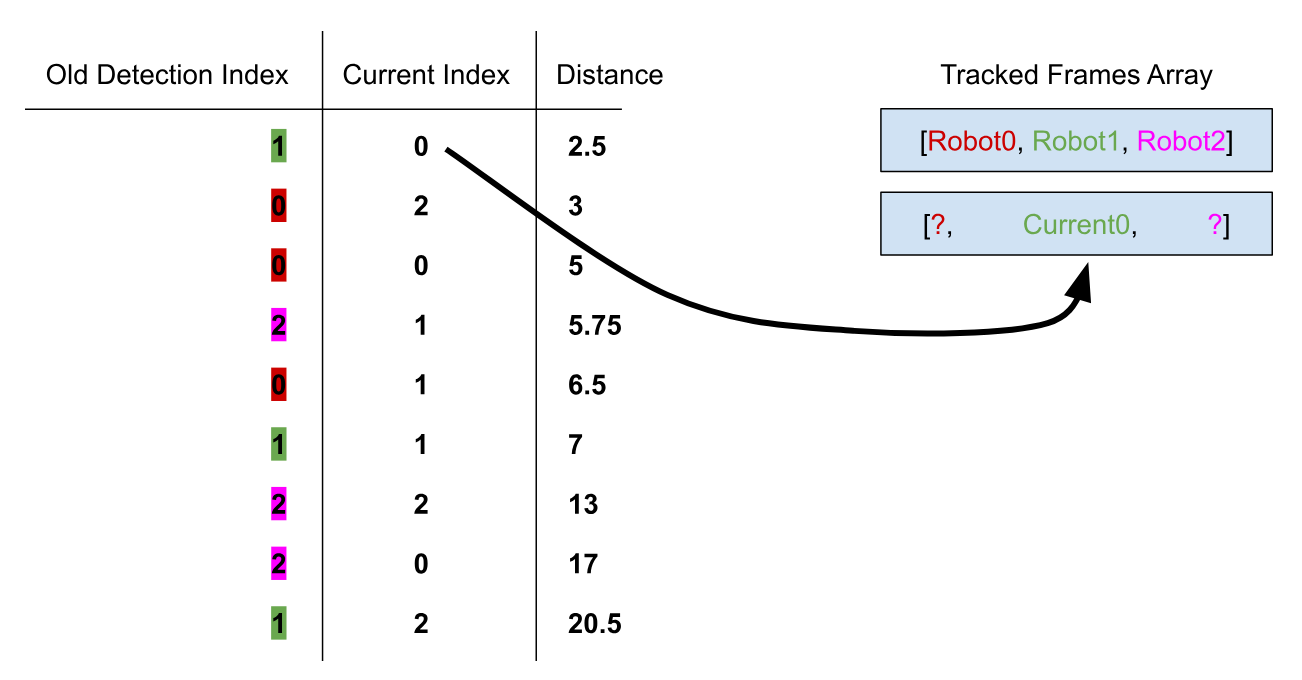

Iterating through all frames, I found the ones that contained predictions for exactly three robots of the desired alliance. This makes tracking much easier, since every remaining frame has all three robots for that alliance, eliminating the need to fill in gaps.
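A minimal sketch of that filtering step, assuming each frame's detections are an array of objects shaped like `{ class, x, y }` with `class` set to "Red" or "Blue" (the classes from Step #1). The actual shape of Roboflow's video output may differ, and `framesWithFullAlliance` is a hypothetical name. Keeping each frame's original index is useful later for smoothing gaps.

```javascript
// Assumes each frame is an array of predictions shaped like { class, x, y }.
// Returns only frames containing exactly `count` robots of `allianceClass`,
// along with the original frame index.
function framesWithFullAlliance(frames, allianceClass, count = 3) {
  const result = [];
  frames.forEach((predictions, frameIndex) => {
    const robots = predictions.filter(p => p.class === allianceClass);
    if (robots.length === count) {
      result.push({ frameIndex, robots });
    }
  });
  return result;
}
```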

I created a new three-element array for each frame, which gets added to the 2D array of tracked frames. Each robot gets its own index, which stays consistent across all frames, so in every frame the robot at index 1 is the same robot. I started by adding the first frame of detections to the array.

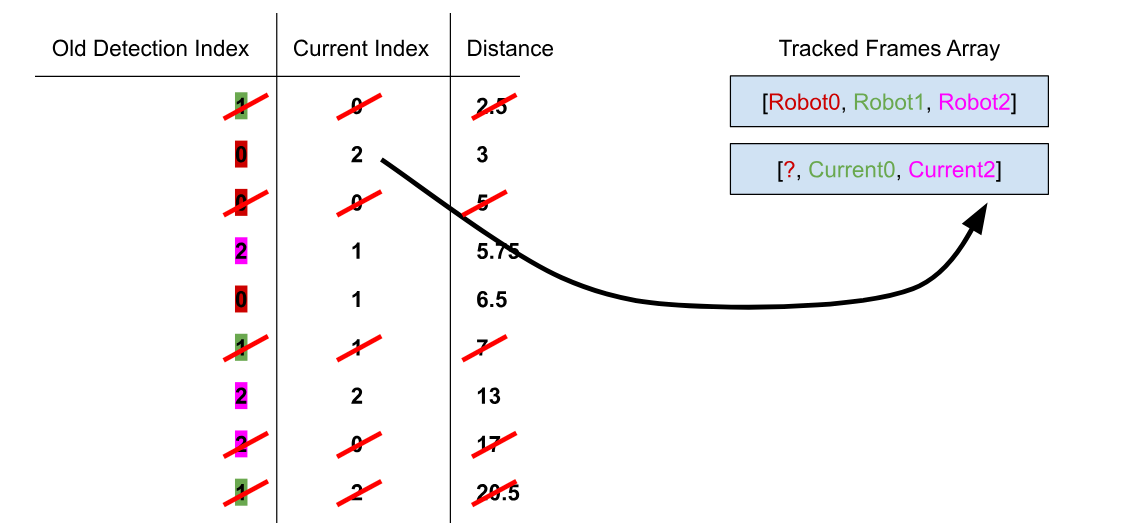

With the groundwork laid, for each frame, compute the distance from each robot in the current frame to each robot in the previous frame. I stored each pair as a JavaScript object so I could keep track of both the old and current robot's index in the detection array. Then sort the objects by distance:

```javascript
let sortedDistances = [];

// Pair every robot in the current frame with every robot in the previous (already ordered) frame
for (let current = 0; current < fullAlliancePredictions[i].length; current++) {
  for (let old = 0; old < sortedAllianceFrames[i - 1].length; old++) {
    let currentDistance = getDistance(fullAlliancePredictions[i][current], sortedAllianceFrames[i - 1][old]);
    sortedDistances.push({
      'current': current,
      'old': old,
      'distance': currentDistance
    });
  }
}

// Sort the array based on the object property distance
sortedDistances.sort((a, b) => a.distance - b.distance);
```

Note the old index and current index of the shortest distance: these belong to the same robot. So we can place the current robot object at the old index in the current frame's entry of the tracked frames list. The graphic below explains this process:

```javascript
// sortedAllianceFrames[i] must already be initialized (e.g. to an empty array)
sortedAllianceFrames[i][sortedDistances[0].old] = fullAlliancePredictions[i][sortedDistances[0].current];
```

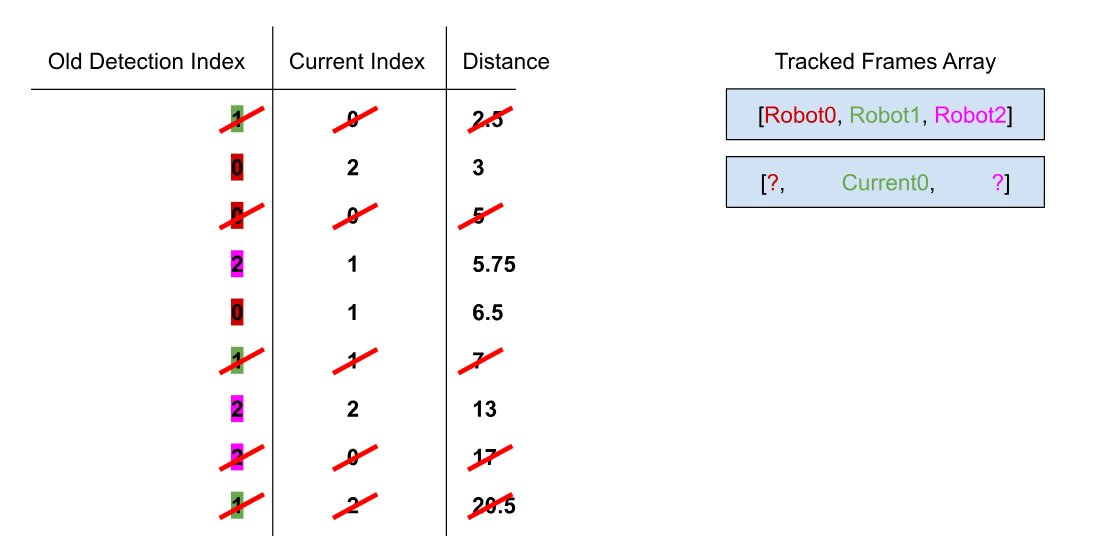

Now we can remove every pair that uses either of those two robots (in the example, old index 1 or current index 0), since they're taken:

```javascript
sortedDistances = sortedDistances.filter(element => element.current !== sortedDistances[0].current && element.old !== sortedDistances[0].old);
```

Then repeat the process with the next closest distance:

```javascript
while (sortedDistances.length > 0) {
  // Closest remaining pair: assign the current robot to the old robot's index
  const closest = sortedDistances[0];
  sortedAllianceFrames[i][closest.old] = fullAlliancePredictions[i][closest.current];
  // Drop every pair that reuses either robot
  sortedDistances = sortedDistances.filter(element => element.current !== closest.current && element.old !== closest.old);
}
```

Now the robot at index 0 in the current frame is the same robot as the one at index 0 in the previous frame, and likewise for the other indices. Repeat this process for every frame, using the current frame as the next "old" array. The result is a tracked array of every robot in every frame for the given alliance. I repeated this process for both alliances, tracking all robots across the frames.
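For reference, a self-contained sketch of the same greedy nearest-distance matching, assuming each detection is an object with `x` and `y` coordinates and every frame holds the same number of robots of one alliance. The project's own `getDistance` isn't shown, so Euclidean distance here is an assumption, and `matchToPrevious` and `trackFrames` are hypothetical names.

```javascript
// Assumed Euclidean distance between two { x, y } detections
function getDistance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y);
}

// Reorder `current` so that result[k] is the detection greedily matched to previous[k].
// Assumes both arrays have the same length.
function matchToPrevious(previous, current) {
  let pairs = [];
  for (let c = 0; c < current.length; c++) {
    for (let o = 0; o < previous.length; o++) {
      pairs.push({ current: c, old: o, distance: getDistance(current[c], previous[o]) });
    }
  }
  pairs.sort((a, b) => a.distance - b.distance);

  const ordered = new Array(previous.length);
  while (pairs.length > 0) {
    const closest = pairs[0];
    ordered[closest.old] = current[closest.current];
    pairs = pairs.filter(p => p.current !== closest.current && p.old !== closest.old);
  }
  return ordered;
}

// Frame 0 fixes each robot's index; every later frame is matched to the one before it
function trackFrames(frames) {
  if (frames.length === 0) return [];
  const tracked = [frames[0]];
  for (let i = 1; i < frames.length; i++) {
    tracked.push(matchToPrevious(tracked[i - 1], frames[i]));
  }
  return tracked;
}
```

Greedy matching is not guaranteed to find the globally optimal assignment (the Hungarian algorithm is the usual exact method), but with only three robots per alliance and small frame-to-frame motion, the closest pairs are usually the correct ones.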

This approach has some flaws. For example, some frames are likely missing because they contained only one or two detections. An algorithm could be implemented to smooth this out, since the Roboflow video detection output includes frame numbers. Also note that Roboflow meters the detection itself. You could instead run detection on your own hardware, such as an NVIDIA Jetson or even a device like an Orange Pi.
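One possible smoothing step, assuming each tracked entry records its frame number alongside the ordered robot positions: linearly interpolate positions for the frames that were skipped. The `{ frame, robots }` shape and the `fillGaps` name are hypothetical, and linear interpolation assumes robots move roughly in straight lines over short gaps.

```javascript
// Assumes `tracked` is sorted by frame number and each entry is
// { frame, robots }, where robots[k] is the { x, y } position of robot k
// (indices consistent across entries, as produced by the tracker).
function fillGaps(tracked) {
  const filled = [];
  for (let i = 0; i < tracked.length; i++) {
    const start = tracked[i];
    filled.push(start);
    const next = tracked[i + 1];
    if (!next) break;
    const gap = next.frame - start.frame;
    // Insert an interpolated entry for every missing frame between start and next
    for (let f = start.frame + 1; f < next.frame; f++) {
      const t = (f - start.frame) / gap;
      filled.push({
        frame: f,
        robots: start.robots.map((r, k) => ({
          x: r.x + (next.robots[k].x - r.x) * t,
          y: r.y + (next.robots[k].y - r.y) * t,
        })),
      });
    }
  }
  return filled;
}
```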

We've now laid out a blueprint for tracking robot positions across a video. This could serve many purposes, such as evaluating robots' autonomous routines or speeds. A similar setup can also be applied to many other activities and sports.

Full code for the project is available on GitHub.